Digital Foundry Tech Focus - Motion Blur: Is It Good For Gaming Graphics?

- Thread starter chandoog

Great video. Excessive shutter is always my issue with motion blur implementations. I liked the suggestion of a slider or amount toggle. It seems most implementations don't respect the 180-degree shutter rule, or can't anticipate the frame rate well enough, so they go with the lowest common denominator, which is 30fps. A 30fps 180-degree shutter is excessive when the game is running at 60fps.
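To put rough numbers on that, here is a quick sketch. It assumes the usual film convention that the exposure window is (shutter angle / 360) of the frame time; the function names are made up for illustration.

```python
def exposure_time(shutter_angle_deg: float, fps: float) -> float:
    """Exposure (blur) window in seconds for a shutter angle at a given frame rate."""
    return (shutter_angle_deg / 360.0) / fps


def effective_shutter_angle(exposure_s: float, fps: float) -> float:
    """Shutter angle that a fixed exposure window corresponds to at another frame rate."""
    return exposure_s * fps * 360.0


# Blur tuned for a 180-degree shutter at 30fps...
blur_window = exposure_time(180, 30)
# ...covers the entire frame time once the game actually runs at 60fps.
angle_at_60 = effective_shutter_angle(blur_window, 60)
print(f"{blur_window * 1000:.2f} ms window -> {angle_at_60:.0f} degrees at 60fps")
```

Under that assumption, a blur amount tuned for 30fps behaves like a fully open shutter at 60fps, which is why a slider (or scaling the window by the real frame time) would help.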

But my biggest pet peeve was only touched on lightly in the video. I think this is more criminal than excessive camera blur.

It immediately pops out to me and kills my immersion full stop. It looks so ugly.

The God of War and SFV examples above irk me. If only the Makoto 2D smear frames could actually be done in 3D in real time with polygonal graphics. Not counting what's done in Guilty Gear Xrd or DBZF, as those are manually made.

Alpha sorting and alpha separation have to happen. But it may not be possible with the way things are done, when the images are snapshots of time as explained in the video?

The way it's done cheaply and convincingly in TV/film, with similar-looking blur, is of course to separate the elements to be composited in post, with blur applied before combining, say, a character onto a background. I wonder if a similar technique could be done in-game.
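As a toy illustration of that film-style approach (blur each element on its own layer, then composite), here is a 1D sketch. It assumes premultiplied alpha and a plain box blur; all names and values are made up for illustration, and this is not how any particular engine does it.

```python
def box_blur(values, radius):
    """Average each sample with its neighbours within `radius` (outside the row counts as zero)."""
    n = len(values)
    width = 2 * radius + 1
    return [
        sum(values[j] for j in range(i - radius, i + radius + 1) if 0 <= j < n) / width
        for i in range(n)
    ]


def blur_layer_then_composite(fg_color, fg_alpha, bg_color, radius):
    """Blur the foreground layer by itself, then 'over'-composite it onto the background."""
    # Premultiply so colour and coverage are blurred consistently.
    premult = [c * a for c, a in zip(fg_color, fg_alpha)]
    blurred_premult = box_blur(premult, radius)
    blurred_alpha = box_blur(fg_alpha, radius)
    # Standard "over" operator for premultiplied colour.
    return [p + (1.0 - a) * b for p, a, b in zip(blurred_premult, blurred_alpha, bg_color)]


# A white, 2-pixel-wide "character" over a mid-grey background.
fg_color = [0, 0, 0, 1, 1, 0, 0, 0]
fg_alpha = [0, 0, 0, 1, 1, 0, 0, 0]
bg_color = [0.5] * 8
result = blur_layer_then_composite(fg_color, fg_alpha, bg_color, radius=1)
print([round(v, 3) for v in result])
```

Because only the foreground layer is blurred, the background stays sharp and shows through the soft edges of the character instead of being smeared along with it.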

The problem is that even with live-action movies, whose cameras capture DOF and motion blur as a natural consequence of the laws of physics, things really start to get problematic once that information is conveyed through a TV or monitor.

Watching the world through pixels is already several times removed from reality, and adding motion blur and similar optical effects into the mix only exacerbates that fact.

I mean, most CG movies use motion blur. There's clearly an aesthetic reason to do it. I think it's more appropriate for cinematic games. For immersive games like FPS it tends to get in the way.

I'm not arguing against its use so much as I'm explaining that it's never going to look natural as long as the totality of the image is represented as one plane, and that's true for CG, live action, video games, and everything in between.

This is impossible with images produced from a screen, with or without motion blur. Every part of an image is 'sharply in focus' at playable distances as far as our eyes are concerned.

Perhaps that was a poor choice of words in my post. I'm not talking about depth.

I'm talking about being able to track an object which is in motion so that I can clearly see what it is without blurring.

You cannot do that when the motion blur is rendered into the image.

At 24 FPS you get bad stroboscopic artifacts without motion blur; it's not done for "aesthetics".

That said, modern displays give the appearance of there being significantly more motion blur in film than there actually is, as a result of sample and hold driving.

That's why interpolating them to a higher framerate appears to reduce motion blur.

I hate both chromatic aberration and motion blur.

I turn them off every chance I get. I wish DF would do a similar video on chromatic aberration.

Exactly my opinion. It looks awful.

I disable it any chance I get.

Personally, I think motion blur only starts looking really good at like 100+ fps.

Before then it largely looks a lot like Vaseline being smeared on the screen. These days it's mostly a visual trick to make 30fps not look like sluggish vomit. It doesn't help all that much because it still /feels/ like sluggish vomit, though

Ah, yes, this is true. Motion blur certainly introduces visibility problems as your eyes can't selectively decide what should be in or out of focus as they'd be able to do in the real world.

I love motion blur. It's one of the most important post-processing effects. I think it gives games a strong CGI vibe when it is well implemented.

Just take a look at how Doom, SotC and R&C look. Also, I know it's not released yet but Spiderman appears to have some very solid motion blur.

I can't play 30fps games without motion blur, it looks so jittery without it. Makes me dizzy. As much as I wish games like UFC were 60fps and would benefit from it, the only reason they don't feel completely awful is because of their aggressive motion blur. Doom on Switch feels so much better to play with motion blur on. Nintendo as an example has always done a good job at making 30fps games like Sunshine and Wind Waker feel fluid and smooth.

The best example I've ever seen of motion blur was in PGR4 on the 360. I don't know how they did it, but I think because they blurred objects and the backgrounds, nothing ever looked out of place:

No idea how no other driving/racing game has been able to replicate it since. And I'd still rather take that than 60fps with less detail in an arcadey racing game.

Fantastic video, Alex! It would be really interesting to see Z-axis only screen blur as an option in games.

This is also your best narration yet, by the way. No distracting changes in tone coming from different cuts, it all sounded great, and there's a better sense of continuity to your video as a result.

I didn't need any convincing, as I really like the effect, but it was still very informative and I learned a lot from it.

I think it's the fakeyness of the gaming blur that messes with my brain. Natural movement blur in real life causes me no issues.

Amazing video, Alex! Your description regarding the snapshots/visual gaps is exactly what I came to realize after using a 480fps-capable display. Without MB, not even 480fps is enough to fill in those gaps in certain situations.

What I didn't see talked about much was the nature of the end user's display and its role in the MB chain. When using something like a high-Hz projector, CRT, or OLED, the display's response time mostly stays out of the way of motion blur featured in content. When the end of that chain instead involves a smearing, non-strobed/scanned TN or slow IPS/VA panel with anywhere from 4-10+ms of response time, it's a recipe for a mediocre visual experience no matter the type of blur implemented or the granularity of in-game control.

Nope, I'll take motion clarity any day over smudging up the screen. I know about the different methods of motion blur and still prefer being able to see. Instead of pushing toward more blur options, we should be pushing toward better implementations of monitor strobing like ULMB that will allow blur free experiences without affecting picture quality and colors etc.

I have been playing (and LOVING) God of War. I tend to favour 30fps mode on PS4 Pro, but when I switched to 60fps I felt that the motion blur seemed out of place. A higher frame rate would reduce motion blur if you were using a real camera, so it didn't feel right.

I love me some good object-based motion blur. While Doom and God of War have great motion blur, Uncharted 4 for example has a low-sample implementation that doesn't look great at all imo.

Yeah. This really turned me off from the game and is one thing that alienated me when everyone kept acting as if the graphics were so good it was unlike anything else. Even just its lighting has many moments where it just looks rather flat, while even in 2016 there were other PS4 games that were just as impressive in that regard, if not more.

In the absence of proper eye-tracking technology that allows us to somehow composite motion blur only on the parts the eyes aren't tracking, I'd still much rather have more motion blur than not. Indeed, Crysis ingrained in me a powerful appreciation for how much good motion blur can add to any game. Actions feel much more impactful when the full motion is conveyed instead of only discrete frames. It's especially gratifying to throw people around with such great motion blur.

I love it so much that it actually grates on me to read so many comments decrying its use. Not that I can't understand it - my values are simply different. There's a certain ineffable quality to games with great motion blur that games without it simply lack. I can scarcely live without it nowadays.

I remember playing Wolfenstein 3D on my mum's black and white 286 laptop. The LCD screen would leave a trail behind anything that moved, so the whole game had a smearing motion, especially when turning. Pretty interesting tbh.

LCD as a technology has a large inherent motion blur. Adding more seems redundant. It'd make more sense after we have a display technology that is already motion blur free.

Who is still playing on a display with motion blur? Are people using a cheap LCD from the 90s?

The first thing I disable when I start up a PC game for the first time is Motion Blur. I absolutely hate it from the bottom of my heart.

Nice video!

Though... I feel like there are some key concepts that aren't covered or explained in it which I'll go over briefly here: the effects of eye tracking as well as sample-and-hold displays.

https://www.testufo.com/blackframes

Viewed on an LCD screen, this UFO test shows a moving object with no motion blur effect applied, but when your eye tracks it across the screen it's obviously quite smeared. The reason is that while your eye follows a more or less continuous motion, the object itself sits still for the duration of each frame and then jumps, so it is effectively moving back and forth relative to the "ideal" motion. When a black frame is inserted the blur is reduced by half, and viewing it on a CRT (where each pixel only persists for as long as the electron beam swipes across it) at its refresh rate would reduce it to nothing: you'd only see the normal flickering of a CRT display.

This is the motion blur "inherent" to all LCD screens mentioned above.

(https://www.testufo.com/framerates-marquee#count=5&pps=960 - at 60 fps and above the frequency of this effect is high enough that it only reads as a blur, at 30 fps there's a visible pulsing and at 7.5 it's broken down completely since you can track the starts and the stops.)
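To put numbers on the tracking blur described above: a common rule of thumb (my assumption here, not something stated in the video) is that the smear seen by a tracking eye is roughly the object's speed multiplied by how long each frame stays lit. A small sketch using the 960 pixels/second from the marquee link; the function name is made up.

```python
def tracking_blur_px(speed_px_per_s: float, persistence_s: float) -> float:
    """Approximate smear width (pixels) seen by an eye tracking a moving object."""
    return speed_px_per_s * persistence_s


SPEED = 960.0  # pixels per second, as in the marquee test URL

full_hold_60hz = tracking_blur_px(SPEED, 1 / 60)      # frame lit for the whole 60Hz period
half_persistence = tracking_blur_px(SPEED, 1 / 120)   # frame lit for half of that period
full_hold_144hz = tracking_blur_px(SPEED, 1 / 144)    # sample-and-hold at 144Hz

for label, blur in [
    ("60Hz sample-and-hold", full_hold_60hz),
    ("60Hz, half persistence", half_persistence),
    ("144Hz sample-and-hold", full_hold_144hz),
]:
    print(f"{label}: ~{blur:.1f} px of smear")
```

Halving the time the frame is lit halves the smear, which matches the "reduced by half" observation for black frame insertion.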

The overall point here being that the ideal amount of post-process motion blur for an object moving slowly and consistently enough to be tracked is zero (hence the distaste for motion blur on predictable player-controlled camera movements). But a more general solution here is impossible without either a return to low-persistence display technology like CRT (pretty unlikely in the near future) or eye tracking and foveated rendering.

As it stands though, I generally agree that the benefits of motion blur (smoothing out everything that isn't being tracked and adding more coverage and fluidity to prevent objects from "breaking apart") currently outweigh its downsides - as long as developers are smart enough to implement a healthy deadzone where objects moving slowly enough don't get blurred at all.
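The deadzone idea could look something like the sketch below: blur length stays at zero below a speed threshold and ramps up from there. The threshold, the shutter fraction, and the clamp are all made-up tuning values, not taken from any real engine.

```python
def blur_length_px(
    speed_px_per_frame: float,
    deadzone_px: float = 2.0,      # hypothetical: slower motion gets no blur at all
    shutter_fraction: float = 0.5,  # hypothetical: 180-degree-style shutter
    max_blur_px: float = 32.0,      # hypothetical clamp to avoid extreme smearing
) -> float:
    """Blur length for an object (or camera) moving at the given screen-space speed."""
    if speed_px_per_frame <= deadzone_px:
        return 0.0
    # Subtract the deadzone so the blur ramps up from zero instead of popping in.
    blur = (speed_px_per_frame - deadzone_px) * shutter_fraction
    return min(blur, max_blur_px)


for speed in (1.0, 2.0, 10.0, 1000.0):
    print(f"{speed:7.1f} px/frame -> {blur_length_px(speed):5.1f} px of blur")
```

Slow, trackable motion stays perfectly sharp, while fast motion still gets the coverage that keeps objects from "breaking apart".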

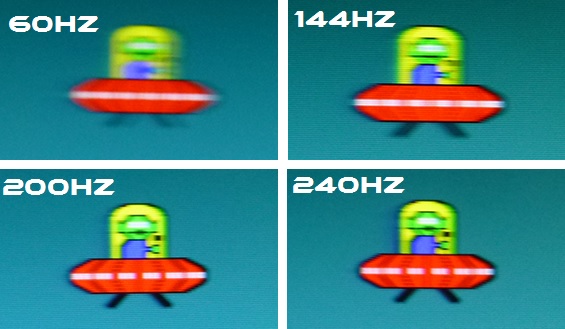

Motion blur is inherent in LCD technology due to its "sample and hold" foundation. This is one of the fastest LCD monitors on the market, the Asus PG258Q:

Even if you can maintain 144fps you still get a significant amount of motion blur. Once you start pushing 200fps the level of blur diminishes significantly.

You can test your current display here: https://www.testufo.com/

Playing through the Witcher 3 and finally started Blood and Wine on XBX. Who the hell set the camera motion blur to EXTREME?!? This is the first game where the motion is making me sick ever.

Oh yeah, tons of games on the Xbox One... Due to the weak GPU I assume? I didn't see so much implemented on the base PS4 as much as the Xbox One.

One of the reasons I love PC gaming is being able to remove the bloody chromatic filters and motion blur, and set shadows to medium or high.

And Ultrawide aspect ratio obviously... :)

Don't think it's the hardware, but the dev. Motion was fine for me on base and HoS, but when I went to Toussaint, something wasn't right. Not sure what the devs were thinking.

Majora's Mask used the accumulation technique in its dream sequences, among other portions of the game, leveraging its severe inaccuracies and shortcomings in creative ways. A few N64 games use this.

Then there's this, which isn't actually per-object motion blur (or any kind of blur at all), but it tries really hard to be by duplicating the model a few times (maybe using a lower-poly one), and I think it's kind of a cute effect for that era of games. lol

144hz with a touch of both camera and per-object motion blur feels smooth as fuck since it blends in with the screen's existing motion blur as well. Emphasis on "touch." Games that have no customizable amounts or options and have way too much just make the motion look terrible.

The main factor in motion blur on modern displays is image persistence, not response time. That is what I was trying to demonstrate in this post.

Image persistence is determined by how long the frame is held on-screen.

The majority of flat-panel displays are "sample and hold" type displays which have full persistence - they do not flicker at all, and hold the image on-screen for the entire duration of a frame.

So if the response time is <16.7ms, it is no longer the main factor in motion blur on a sample and hold display at 60Hz.

Here's tracking motion blur on an OLED TV with 0.3 ms response times:

And tracking motion blur on an LCD TV with 4.7ms response times:

There's some minor smearing on the trailing edge with the LCD as a result of the slower response time, but their performance is largely the same.

Meanwhile, here is that same LCD with backlight strobing (a better form of black frame insertion) that reduces the image persistence:

This reduces brightness and causes the panel to flicker, but significantly reduces motion blur.

You can also see that ghosting is more evident as a result of the slower LCD response time - there are multiple faint after-images on the trailing edge of motion - but there is significantly less motion blur than on the OLED TV.

I'm not sure that they measured it, but I believe Sony only reduce image persistence by 3/4 at 60Hz on their LCDs that have the option for it.

A CRT can have as little as 1/20th the image persistence of a 60Hz sample and hold display.

Newer OLED TVs are starting to add black frame insertion options, but since the panels are 120Hz native, that means they can only reduce persistence by 1/2.

LCD displays are actually better for this than OLEDs, as they can switch the backlight on/off instantly, and independently from the LCD panel itself.

An LCD with good strobing options - such as NVIDIA's ULMB - can reduce motion blur by a significant amount compared to anything else currently available. They're the closest thing to a CRT for motion clarity right now - though still quite far behind.

What we really need are OLED TVs with the option to be driven as an impulse-type display, rather than only adding BFI.

It is possible though - the OLED display that LG and Google collaborated on for VR looks amazing, with 1.65ms image persistence and extremely well-controlled response times. We need to somehow convince them that we also want this for TVs.
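The persistence figures in this post can be turned into milliseconds with simple arithmetic. A rough sketch, assuming persistence is just the fraction of a 60Hz frame time during which the image stays lit. The fractions come from the post above (including the unverified Sony figure); the helper name is made up, and comparing the VR panel against a 60Hz frame is purely for scale.

```python
FRAME_TIME_60HZ_MS = 1000.0 / 60.0  # about 16.7 ms


def persistence_ms(lit_fraction: float, frame_time_ms: float = FRAME_TIME_60HZ_MS) -> float:
    """Time the image stays lit per frame, given the lit fraction of the frame time."""
    return lit_fraction * frame_time_ms


sample_and_hold = persistence_ms(1.0)    # full persistence
oled_bfi_120hz = persistence_ms(1 / 2)   # 120Hz panel, every other refresh black
lcd_strobe_sony = persistence_ms(1 / 4)  # "reduced by 3/4", as believed in the post
crt_best_case = persistence_ms(1 / 20)   # "as little as 1/20th"

# The 1.65 ms VR OLED figure, expressed as a fraction of a 60Hz frame.
vr_oled_fraction = 1.65 / FRAME_TIME_60HZ_MS

print(f"sample and hold: {sample_and_hold:.2f} ms")
print(f"OLED with BFI:   {oled_bfi_120hz:.2f} ms")
print(f"LCD strobing:    {lcd_strobe_sony:.2f} ms")
print(f"CRT best case:   {crt_best_case:.2f} ms")
print(f"VR OLED is about {vr_oled_fraction:.0%} of a 60Hz frame")
```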

No problem with motion blur on important movements like high speed and some dashes, punches, kicks and others.

But stop with the camera rotation, it's ugly.

Yeah. If you rotate your head there is no motion blur, unless you are doing it real fast and you get dizzy. I don't understand how even a slowly rotating camera in some games has so much motion blur.

The big problem with strobing is the motion artifacts when the frame rate of the game drops below the refresh rate of the display. The ideal with current technology would be a variable refresh rate strobing display. For games where you can maintain the refresh rate, strobing is the way to go though.

The fastest screens still exhibit this. If you use the ULMB modes on select monitors with a high refresh rate you can counter it somewhat, but it's still not quite perfect.

If you play a side scrolling game on a CRT display then jump over to an LCD, the difference is night and day.

I hope The Last Of Us 2 will get some nice motion blur.

One of the few visual flaws in Naughty Dog games has always been their motion blur for me.

Yes, it's not ideal if you can't hold a solid 60 FPS (at 60Hz) or higher.

Combining VRR with strobing is possible, but problematic for a number of reasons that would require a huge post to go into detail.

The problem is that many people use VRR for sub-60 FPS gaming, while strobing becomes very obvious at lower framerates. NVIDIA have shown demos where they repeat frames below 1/2 the maximum refresh rate, but that means you will see double-images instead.

What probably needs to happen to actually solve that problem is to have a variable persistence display that has lower persistence the higher the framerate is, and transitions to being flicker-free at lower framerates.

That's a big technical challenge though, and far beyond what we have today.

I'd be happy if we can even get OLED TVs that can do low-persistence strobing (like that 1.65ms VR display) at any fixed refresh rate that it supports. That alone would be a significant improvement.

Combining it with VRR would be great though.

So long as the image is only strobed once per frame, I am not bothered by flicker in the slightest. I've done tests with CRTs running at an effective rate of only 24Hz for movie playback, and though it flickers severely, it looks like nothing you've ever seen before. Motion is so much clearer and smoother that you'd think it was being interpolated.

One of the big issues with ULMB is its 85Hz minimum refresh rate. Strobing needs to be in sync with the framerate to work correctly.

Here's an example of 24 FPS being displayed on a CRT at refresh rates of effectively 96Hz, 72Hz, 48Hz, and 24Hz (technically all are 96Hz with varying amounts of BFI).

When the refresh rate is double the framerate you start to get double-images, and it only gets worse from there.

There are apparently ways to trick monitors with ULMB support to refresh at 60Hz, and even enable it at the same time as G-Sync, but my understanding is that it's a somewhat unreliable hack that only works on certain displays.
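The double-image effect described above follows from how many times each frame gets flashed. A sketch, assuming each source frame is strobed refresh / fps times and that a tracking eye sees successive flashes of the same frame offset by speed / refresh. The 960 px/s speed is an arbitrary illustration value and the function names are made up.

```python
def flashes_per_frame(refresh_hz: int, fps: int) -> int:
    """How many times each source frame is flashed on screen."""
    if refresh_hz % fps != 0:
        raise ValueError("refresh rate must be a whole multiple of the frame rate")
    return refresh_hz // fps


def ghost_spacing_px(speed_px_per_s: float, refresh_hz: int) -> float:
    """Offset between repeated flashes of one frame, as seen by a tracking eye."""
    return speed_px_per_s / refresh_hz


FPS = 24
SPEED = 960.0  # pixels per second, arbitrary example speed

for refresh in (96, 72, 48, 24):
    copies = flashes_per_frame(refresh, FPS)
    spacing = ghost_spacing_px(SPEED, refresh) if copies > 1 else 0.0
    print(f"{refresh}Hz: {copies} image(s) per frame, {spacing:.1f} px apart")
```

Only the case where the strobe rate equals the frame rate gives a single clean image, which is why strobing needs to stay in sync with the framerate.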

OLEDs too.

Yeah and, even though plasmas use phosphors, they seem to decay at slightly different rates so you get slight blur in motion.

OLED has potential but companies insist on using sample and hold.

Yes, plasma TVs are somewhat complicated due to the PWM (or similar) panel driving techniques.

The end result is that they're technically an impulse-type display, but have moderate image persistence (rather than the very low persistence of a CRT). They also suffer from other undesirable motion artifacts, where the image can break up into separate colors in motion as a result of the difference in response time for the red/green/blue phosphors and the high-frequency panel driving methods used.

So better than a full-persistence sample and hold display as far as motion blur is concerned, but I personally found the PWM driving to be very problematic during motion and they gave me migraines after a very short time of watching them.

But that's not really true... The control of motion blur has artistic interest and conveys information on its own, both in still images and in motion pictures, about the direction and speed of movement.

Like Alex said, a photo with an infinitely short exposure time is a simple snapshot, but if you lengthen the exposure time you get information about the movement of some parts of the image with respect to others, and of those with respect to the camera. And it can be used for artistic effect, or to smooth the image. If anything, it's less removed from the real world than watching a series of snapshots with an infinitely short exposure time.

That is, if it is properly done. DOOM is the perfect example of good motion blur, imo.

I loved the video, but the absence of Tekken was weird because I remember the DF face off for Tekken Tag Tournament 2 had DF give a good analysis of its use of motion blur.

https://www.eurogamer.net/articles/digitalfoundry-tekken-tag-tournament-2-face-off
