
AI Neural Networks being used to generate HQ textures for older games (You can do it yourself!)

- Thread starter vestan


> There's information here on how to train models: https://github.com/xinntao/BasicSR
>
> I stopped looking into it once I realized how large the training sets need to be.

Are there any tools that make it easy to train your own neural network simply by providing sets of before/after images?

I can think of quite a few ways that I'd like to use it, and the volume of source material required seems like it probably would not be an issue, at least for some of them.

> Are these pictures supposed to be before and after? I don't see a difference.

Left-click on them.

Click the download icon in the upper right.

Right-click on them in the new browser window and save them locally.

Open them in your picture viewer (in its "always zoom / autofit / scale to screen" mode, whatever it is called).

Report back about seeing a difference.


One picture (the original) is a 320×144 px image.

The other (upscaled by AI) is a 1280×576 px image that still looks like artwork done by a human.
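The jump from 320×144 to 1280×576 is a 4x scale factor on each side (the same 4x used in the ESRGAN tests later in this thread), so the network has to fill in 16 times as many pixels as the original contains. A tiny sketch of that arithmetic; `upscaled_size` is just an illustrative helper:

```python
def upscaled_size(width, height, scale=4):
    """Return the output dimensions of an integer-factor upscale."""
    return width * scale, height * scale

original = (320, 144)
print(upscaled_size(*original))  # (1280, 576)

# Each side grows 4x, so the pixel count grows 4 * 4 = 16x.
print((1280 * 576) // (320 * 144))  # 16
```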

> There's information here on how to train models: https://github.com/xinntao/BasicSR
>
> I stopped looking into it once I realized how large the training sets need to be.

There ought to be some way to crowdsource model training.

The size of the dataset required would not be an issue for the sort of training I was thinking of doing. It was related to image compression for a specific type of image (rather than photographs), which makes it easy to generate a large dataset: you just need high-quality source images, and the creation of versions with varying degrees of compression can be automated.

After looking around for more information on this, I did find a neural network built for removing JPEG artifacts from photographs, which produces incredible results: https://i.buriedjet.com/projects/DMCNN/
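The automated dataset generation described above boils down to pairing each clean image with several degraded copies. A minimal sketch, assuming images are flat lists of 8-bit grayscale values. The `quantize` helper is a hypothetical stand-in for real lossy compression (it just snaps values to coarser steps); in practice you would save each source at several quality settings with an image library, for example Pillow's `Image.save(..., quality=...)` for JPEG.

```python
def quantize(pixels, levels):
    """Hypothetical stand-in for lossy compression: snap 8-bit values to
    `levels` evenly spaced steps (levels should be a power of two <= 256)."""
    step = 256 // levels
    return [(p // step) * step + step // 2 for p in pixels]


def make_training_pairs(clean_images, levels_list=(64, 16, 4)):
    """Pair every clean image with degraded copies at several strengths.

    Returns (degraded, clean) tuples: the network is trained to map the
    first element back to the second.
    """
    pairs = []
    for clean in clean_images:
        for levels in levels_list:
            pairs.append((quantize(clean, levels), clean))
    return pairs


sources = [[0, 64, 128, 255], [10, 20, 30, 40]]
pairs = make_training_pairs(sources)
print(len(pairs))  # 6: two sources x three degradation levels
```

Because every degraded input is derived from a known clean image, the dataset can be made as large as the pool of source images allows.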

Credit to Christian Ledig, Lucas Theis, Ferenc Huszar, Jose Caballero, Andrew Cunningham, Alejandro Acosta, Andrew Aitken, Alykhan Tejani, Johannes Totz, Zehan Wang and Wenzhe Shi for their arXiv paper "Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network". I suggest you give it a read if you're interested in this kind of thing.

Hey! Thanks for using my Resident Evil image in the OP. I just made an account because I was googling around a bit and found this post. This upscaling thing is insane. I have been doing a lot of work over the past few days, trying out different approaches, and I have seriously learned so much in such a short time.

Oh, here are 80 more Resident Evil Remake images processed with Kingdomakrillic's Manga109 model.

https://imgur.com/gallery/N0RgHOt

Great stuff!

Hold it in front of a mirror and magic happens!

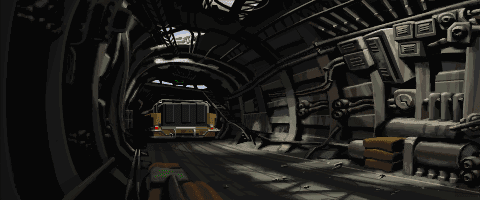

Is this real-time post-processing, or just screenshots that then get processed via the effect?

It's screenshots and original artwork, not real-time unfortunately. I'm using an RTX 2080 Ti and the longest processing time I've seen is about 8 seconds, so there would be a very long way to go to have this working in real time. Maybe one day.
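To put "a very long way to go" in numbers: a frame at a given frame rate has a budget of 1/fps seconds, so the speedup needed is the per-image time divided by that budget. A rough sketch using the 8-second worst case quoted above; it ignores that a game would also need the GPU for rendering, so the real gap is larger.

```python
def required_speedup(seconds_per_image, target_fps):
    """How many times faster processing must get to fit one frame's budget.

    The budget per frame is 1 / target_fps seconds, so the speedup is
    seconds_per_image / (1 / target_fps) = seconds_per_image * target_fps.
    """
    return seconds_per_image * target_fps


print(required_speedup(8, 60))  # 480
print(required_speedup(8, 30))  # 240
```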


Damn, so the only implementation in a game will be texture mods.

I mean, what else would you want, if the AI is for image processing and upscaling?

Look into ReShade for a post-process implementation.

For retro content, i.e. 240p, I still use a trusty CRT and an OSSC for scaling to modern displays.

I don't see it.

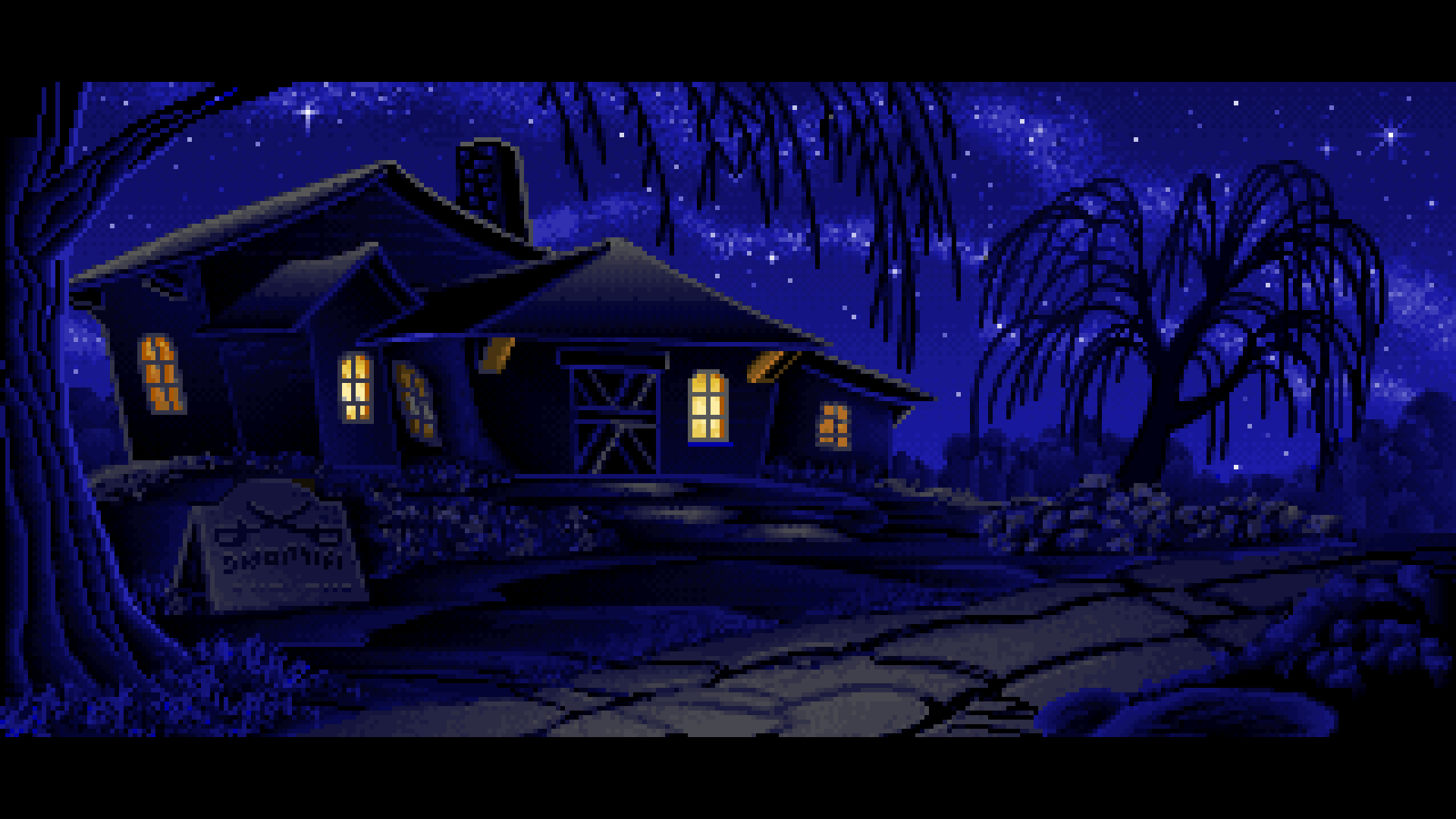

Or backgrounds and assets redone in a point-and-click adventure like Monkey Island, or REmake.

Going through this thread, the best way I can describe my reaction is: "what the fuck". It's like damn magic.

Especially impressed with those pics of Morrowind early in this thread. I never cared for texture packs for that game because they never seem to retain the original's style, but if someone were to make a pack with these things? I might actually be interested.


In a world with infinite processing capability, not needing to have everything preprocessed and stored at several times the disk space of the original images would be useful. In more modern games that already have high-res textures, it would also be useful (and take less RAM) if it could selectively upscale only the specific textures it knows are being displayed very zoomed in.

Doesn't Hexadrive have this very tech (or at least the same intended result)? Didn't they use it in Okami HD and Wind Waker HD?

With and without 16x anisotropic filtering enabled :p

I'm trying to install it. I get to the Windows command line, but it says:

'pip3' is not recognized as an internal or external command,
operable program or batch file.

I installed Python 3.7 and CUDA 10. Does anyone know what I did wrong?

Run the command from Python's Scripts folder.

Ta, I actually had to reinstall, and noticed there's a tiny "Add Python to PATH" checkbox you have to tick.
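The "'pip3' is not recognized" error means Windows can't find `pip3.exe`, which lives in Python's Scripts folder; ticking the PATH checkbox fixes that. An alternative that avoids PATH entirely is `python -m pip`, which runs pip through the interpreter. A small sketch of that check; `pip_command` is a hypothetical helper name, and it takes `which` as a parameter only so it can be tested:

```python
import shutil
import sys


def pip_command(which=shutil.which):
    """Suggest a pip invocation that works on this machine."""
    if which("pip3"):
        # pip3 is on PATH, so the command from the install guide will work.
        return "pip3"
    # Fall back to running pip through the current interpreter; this works
    # whenever Python itself can be launched, even if Scripts isn't on PATH.
    return f'"{sys.executable}" -m pip'


print(pip_command())
```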

Anyone know where I can find some good rips of Baten Kaitos assets?


If you are on mobile, I noticed it scales all the images to the same size, so I don't think you can really see the size/resolution difference between them.

If you're viewing on desktop, I think the difference shows immediately.

Now that I've had more time, I've tested ESRGAN, SFTGAN and Waifu2x on a particular Legend of Mana sprite to see how each network performs. On each of them, I magnified the image 4x.

Original Image:

The results can be found here.

My favorite result (ESRGAN trained on Manga109 dataset):

My Observations:

- Before going into this, I expected Waifu2x (urgh, why the name?) to do best because it was trained on illustrations that I think are closest to the image I was trying to upscale.

- However, to my eye, I think the ESRGAN trained on the Manga109 dataset did the best. It had the fewest artifacts and I was very impressed with the result.

- The results from Waifu2x were disappointing: the image just looked as if someone had rescaled the original 4x using nearest-neighbor interpolation and run a blur filter over it. I tried every available pre-trained model and tried combining them with de-noising, but frankly everything came out looking very similar.

- The default ESRGAN model did not work so well, with lots of artifacts, especially in the vine region in the center of the image.

- I ran into a bunch of random issues getting SFTGAN to work. I don't remember all the specifics, but there were times it had trouble detecting CUDA, crashed my PC, used a lot of resources, etc. I didn't really have time to figure out what was going on, so I'm not sure if it's on my end.

- I actually find the artifacts from SFTGAN quite aesthetically pleasing. They look like oil-painting textures.
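For reference, here is the naive baseline the Waifu2x output was compared to: a nearest-neighbor 4x upscale followed by a 3×3 box blur, on a grayscale image stored as a list of rows. This is only the non-neural comparison point, not what Waifu2x does internally; it shows why that look is "soft but with no new detail".

```python
def nearest_neighbor(img, scale):
    """Repeat each pixel `scale` times horizontally and vertically."""
    out = []
    for row in img:
        wide = [p for p in row for _ in range(scale)]
        out.extend(list(wide) for _ in range(scale))
    return out


def box_blur(img):
    """3x3 mean filter; edge pixels average only the neighbors that exist."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[j][i]
                    for j in range(max(0, y - 1), min(h, y + 2))
                    for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = sum(vals) // len(vals)
    return out


sprite = [[0, 255],
          [255, 0]]
big = nearest_neighbor(sprite, 4)  # 8x8 image made of flat 4x4 blocks
soft = box_blur(big)               # block edges smeared, no new detail added
```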


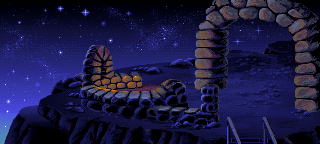

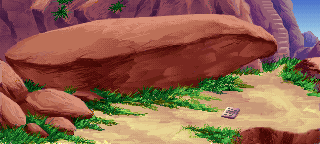

CMI screenshot scaled up using the Anime model:

I first scaled the original screenshot down to 75% of its size, then did the upscale. Otherwise, my computer could not process it.
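Scaling down to 75% per side helps more than it sounds: the network's working memory grows roughly with the number of input pixels, and 0.75 × 0.75 leaves only 56.25% of them. A sketch of the sizes involved; the 1920×1080 input is a hypothetical example, since the screenshot's actual resolution isn't given:

```python
def plan_upscale(width, height, prescale=0.75, scale=4):
    """Return (input size, output size, fraction of original pixels fed in)."""
    in_w, in_h = round(width * prescale), round(height * prescale)
    out_w, out_h = in_w * scale, in_h * scale
    pixel_fraction = (in_w * in_h) / (width * height)
    return (in_w, in_h), (out_w, out_h), pixel_fraction


# Hypothetical 1920x1080 screenshot, downscaled to 75% and then upscaled 4x.
print(plan_upscale(1920, 1080))  # ((1440, 810), (5760, 3240), 0.5625)
```

The final image still ends up 3x the original size per side (0.75 × 4), so some resolution is traded for being able to run at all.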


This is unbelievable.

That's kind of the point, though. I am using the Manga109 model, which is meant for illustrations more than anything. It's intentionally a lot softer. The model that comes with ESRGAN just doesn't compare, especially when it comes to backgrounds of LucasArts adventure games. If you want me to go back to using what I was using before, then by all means I will, but I just wanted to mess around with this new model a bit.

Still though, if this isn't impressive then I don't know what is.

Versus the remaster multiple human beings had to put real work into (youtube screengrab):

That's honestly so dope, great job dude.

Makes a world of difference.

I think the remaster image is more polished overall, since the AI one has a couple of aliasing errors from the upscaling, but goddamn, that is fucking impressive considering that not a single human was involved in the process (well, I guess one had to press the button, lol). This stuff is going to streamline remaster development and high-resolution content (for 4K, for example) to a significant degree.


Transparency issues in the textures. But man, it's pretty easy to use this.
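One common workaround for transparency problems (an assumption here, not something the poster described) is to split off the alpha channel, upscale the color image and the alpha mask as two separate images, then recombine them. A minimal sketch of the split and merge steps on a flat list of RGBA tuples; the upscaling step in between is left out, and the helper names are hypothetical:

```python
def split_rgba(pixels):
    """Separate RGBA tuples into an RGB image and a grayscale alpha image."""
    rgb = [(r, g, b) for r, g, b, _ in pixels]
    alpha = [p[3] for p in pixels]
    return rgb, alpha


def merge_rgba(rgb, alpha):
    """Recombine processed RGB and alpha channels (they must match in size)."""
    if len(rgb) != len(alpha):
        raise ValueError("RGB and alpha images differ in size")
    return [(r, g, b, a) for (r, g, b), a in zip(rgb, alpha)]


texture = [(200, 30, 30, 255), (0, 0, 0, 0)]
rgb, alpha = split_rgba(texture)
# ...upscale `rgb` and `alpha` separately here...
restored = merge_rgba(rgb, alpha)
```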

> So you took the video, converted every frame into a PNG, then reconstructed it with audio? That's really impressive.

Yeah, it doesn't work so well like that :D

It needs to be done on each texture.
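The frame-by-frame video workflow asked about above can be scripted with ffmpeg (an assumption: the post doesn't say which tool was used). A sketch that only builds the two command lines, one to dump every frame as a numbered PNG and one to rebuild the video from the upscaled frames while copying the audio from the original; file and folder names are hypothetical, and `fps` must match the source video:

```python
def extract_frames_cmd(video, frame_dir):
    """Dump every frame as a numbered PNG (000001.png, 000002.png, ...)."""
    return ["ffmpeg", "-i", video, f"{frame_dir}/%06d.png"]


def rebuild_video_cmd(frame_dir, original_video, fps, output):
    """Encode the upscaled frames and copy the audio track from the original."""
    return ["ffmpeg",
            "-framerate", str(fps), "-i", f"{frame_dir}/%06d.png",
            "-i", original_video,
            "-map", "0:v", "-map", "1:a?",  # video from frames, audio if present
            "-c:v", "libx264", "-pix_fmt", "yuv420p",
            "-c:a", "copy",
            output]


print(" ".join(extract_frames_cmd("intro.mp4", "frames")))
print(" ".join(rebuild_video_cmd("upscaled", "intro.mp4", 30, "intro_4x.mp4")))
```

Each list can be run with `subprocess.run(cmd, check=True)`. Since every frame is upscaled independently, nothing keeps the results consistent from one frame to the next, which fits the reply that it doesn't work so well.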

The FF7 result wasn't perfect, but I wonder if it would work with, say, a higher-resolution FFX FMV. One of my biggest gripes with remasters is that they often leave the FMVs untouched. If this could eliminate macroblocking and other compression artifacts, it would be a godsend.

Sekiro: Shadows Die Twice. 1080p promotional screenshot. ESRGAN model. 4K downsampled for wallpaper. Cropped, full scale comparison:

Bicubic resampling:

ESRGAN:

With some interpolation with the PSNR model, I'm sure it could soften some of the harsher edge enhancement on the arm bands, though it's not really apparent when downsampled.
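The "interpolation with the PSNR model" mentioned above refers to network interpolation: ESRGAN ships a smoother PSNR-oriented model alongside the GAN model, and the two can be blended parameter by parameter as (1 − α)·PSNR + α·ESRGAN. A minimal sketch with each model's parameters as plain lists of floats; in the real tools they are PyTorch tensors loaded with `torch.load`, where the same weighted sum applies to whole tensors.

```python
def interpolate_weights(psnr_net, esrgan_net, alpha):
    """Blend two models with identical architectures, parameter by parameter.

    alpha = 0 gives the smoother PSNR-oriented model, alpha = 1 the sharper
    ESRGAN model; values in between trade sharpness against artifacts.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if psnr_net.keys() != esrgan_net.keys():
        raise ValueError("models have different parameters")
    return {
        name: [(1 - alpha) * p + alpha * e
               for p, e in zip(psnr_net[name], esrgan_net[name])]
        for name in psnr_net
    }


psnr = {"conv.weight": [0.0, 2.0]}
gan = {"conv.weight": [1.0, 4.0]}
print(interpolate_weights(psnr, gan, 0.5))  # {'conv.weight': [0.5, 3.0]}
```

Lowering α toward the PSNR model is what would tone down harsh edge enhancement like that on the arm bands.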


Incredible.