> Considering how low quality the textures in Dishonored and Dishonored 2 are, I wonder if it would be worth looking into converting them using this..

Huh? Textures in Dishonored 2 are extremely high quality. Dishonored's textures are low quality, sure, but it's got this watercolor-paint look going on that would be ruined if it received the upscale treatment. IMO it'd look worse as a whole.


AI Neural Networks being used to generate HQ textures for older games (You can do it yourself!)

- Thread starter vestan



Man I hope the developers doing the Metroid Prime Trilogy conversion at Nintendo are aware of this technology... it could very well be that this fan conversion will look miles better than the real thing! What about Silent Hill 2? That could work amazingly!

I've only found a Dead Maria model and I'm not sure how representative it is of the overall texture quality in the game... because it's already very good!

http://screenshotcomparison.com/comparison/128304

Barely a difference. The skirt is messed up because I set the interpolation too high with Manga and ESRGAN - 04 (it should have been 3 or 2).
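For context, "interpolation" between two models usually means blending their trained weights; the ESRGAN repository ships a `net_interp.py` script that does this for its PSNR and GAN models. Below is a minimal sketch of the same idea, assuming both checkpoints share one architecture and have been loaded as dicts of NumPy arrays (real checkpoints would be PyTorch state dicts). The function name and the toy one-layer weights are hypothetical.

```python
import numpy as np


def interpolate_weights(weights_a, weights_b, alpha):
    """Blend two checkpoints that have identical layer names and shapes.

    alpha = 0.0 returns weights_a unchanged, alpha = 1.0 returns weights_b.
    """
    if weights_a.keys() != weights_b.keys():
        raise ValueError("checkpoints have different layers")
    blended = {}
    for name, a in weights_a.items():
        b = weights_b[name]
        if a.shape != b.shape:
            raise ValueError(f"shape mismatch for {name}")
        # Linear blend of every parameter tensor
        blended[name] = (1.0 - alpha) * a + alpha * b
    return blended


# Toy example with a single made-up layer
manga = {"conv.weight": np.array([0.0, 1.0])}
esrgan = {"conv.weight": np.array([1.0, 3.0])}
print(interpolate_weights(manga, esrgan, 0.3)["conv.weight"])
```

A higher alpha pushes the result toward the second model, which is why a value that is too high can bring out that model's artifacts.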

Maybe with environment textures there is more of a difference?


Silent Hill 2 textures work really well, but unfortunately I couldn't find a way to get them in game. The one tool that can insert textures into the PC version only accepts textures of the same size, and with Texmod on PCSX2 the texture mapping got messed up.

http://screenshotcomparison.com/comparison/128305


> Pre-rendered backgrounds from Ocarina of Time, all done in Gigapixel:

Gigapixel seems to have the upper hand here from what I can tell. I don't know what options it has available, but maybe the noise reduction is a bit too strong, as some shadow detail is missing (or rather some midtone detail here and there). Still, it recreates the original image more faithfully, as if it has more options, and puts more varied details where necessary. (I've only tried recreating the first image, though; it's not a huge difference depending on the model and how you prepare the source image, but there is a difference.)


Sadly, the texture swap software wants the same texture resolution as the game, so it's hard to swap HQ textures in for the vanilla ones.

The options are very limited at the moment; it just lets you select the level of noise and blur reduction: none, moderate or strong. The first image is with 'none' selected, the second with 'strong':

Take this comparison with a grain of salt:

Topaz Gigapixel vs ESRGAN / waifu2x mix

Using the original small image from the previous page, I was trying to match the Topaz image (the one without denoising... though I assume there is still some denoising going on to get the result shown). I wasn't satisfied with the results from any of the models available to me. If there is a single-step upscale that would do it, it is not obvious to me.

In the end I had to run the original through waifu2x's deJPEG (highest setting but no upscaling) and then run that through ESRGAN. Then I overlaid a Manga109 upscale at 50% over that layer in Photoshop. There are parts of the Topaz image which I still prefer (details on the walls and the sink, etc.), and the Topaz image is also sharper, which is due to its upscale being bigger and to my Photoshop butchering. There are often these thick noodly lines or harsh scratches in images where only ESRGAN itself was used, and I really don't like those!

(I'm hoping for someone with enough time to train more images for an improved model for ESRGAN that would get me there in just one single step)
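The final layering step above (a Manga109 upscale placed over the ESRGAN result at 50% opacity) amounts, for fully opaque layers in Photoshop's Normal blend mode, to a per-pixel weighted average. A minimal NumPy sketch, assuming both upscales are already loaded as same-sized 8-bit arrays (loading and saving via Pillow or OpenCV is left out, and the function name is hypothetical):

```python
import numpy as np


def overlay_at_opacity(base, top, opacity=0.5):
    """Composite `top` over `base` in Normal blend mode at the given opacity."""
    if base.shape != top.shape:
        raise ValueError("both upscales must have the same size")
    # Weighted average of the two layers, computed in float to avoid overflow
    mixed = (1.0 - opacity) * base.astype(np.float64) + opacity * top.astype(np.float64)
    return np.clip(np.round(mixed), 0, 255).astype(np.uint8)
```

Usage would be something like `overlay_at_opacity(esrgan_up, manga_up, 0.5)`; lowering the opacity keeps more of the ESRGAN detail.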


> What would people say is (most of the time?) the best way to upscale the alpha channel for a picture done with ESRGAN/manga109? Also doing it with ESRGAN/manga109, waifu2x, bicubic?

I know Why would you do that? was working on a script to upscale the alpha channel separately, but I just sorted out the textures with an alpha channel and used waifu2x-caffe for them instead for the Metroid Prime pack.

Use the same settings if you want to keep the mask clean. There is an argument for changing settings if you have jaggy edges; there is a multitude of things you can do to the original mask as a black-and-white image before you upscale it, from using different models or upscalers to applying anti-aliasing, blur, denoisers, etc.

It just becomes a lot of work if you have a lot of images.

Thanks collige & Laser Man.

I was asking because I was writing two quick scripts, probably similar to what Why would you do that? is/was working on.

The first browses through all the PNGs in a given folder and saves the alpha channel (only if the alpha is not empty/all white) as a separate image in a separate folder, allowing you to upscale it with your preferred method.

The second browses through the folder of upscaled alpha images and merges each one with the corresponding upscaled picture.

Things should be pretty efficient if you select the right folder (say, the ESRGAN and/or waifu2x result folder) and maybe write a .bat file to chain the alpha extraction, the image and alpha upscaling, and then the merging.

It's probably far from perfect (I've only tested it on a very small sample so far), but that's just a few hours' work and, to be honest, I'd never written any Python before. If people are interested I can share it; I'm sure people can improve it.
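The core of the two scripts described above can be sketched in NumPy as follows. This is not the posted code: it assumes images are already loaded as H x W x 4 uint8 arrays (Pillow or OpenCV would handle the folder walking, loading and saving), and the function names are hypothetical.

```python
import numpy as np


def split_alpha(rgba):
    """Return (rgb, alpha), or (rgb, None) when the alpha is fully opaque."""
    if rgba.ndim != 3 or rgba.shape[2] != 4:
        raise ValueError("expected an H x W x 4 array")
    rgb = rgba[:, :, :3].copy()
    alpha = rgba[:, :, 3].copy()
    # An all-white (all 255) alpha carries no transparency, so skip it
    if np.all(alpha == 255):
        return rgb, None
    return rgb, alpha


def merge_alpha(rgb, alpha):
    """Recombine an upscaled RGB image with its separately upscaled alpha."""
    if alpha.ndim == 3:
        # Upscalers often return the mask as three identical grey channels
        alpha = alpha[:, :, 0]
    if rgb.shape[:2] != alpha.shape:
        raise ValueError("RGB and alpha upscales differ in size")
    return np.dstack([rgb, alpha]).astype(np.uint8)
```

Between the two calls, the RGB part and the saved alpha image would each go through the upscaler of your choice at the same scale factor.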


I'd be interested in that script (if you want to share it that is?)

Edit: Thanks!

Sure, I did a quick pastebin of both:

https://pastebin.com/My87ymGi

https://pastebin.com/rNsMCqya

No problem. I'd love to hear if it proves useful and/or if you find problems with it or things to improve.

Laser Man @elytis I've also added the script I used to GitHub: https://github.com/rapka/dolphin-textures

It requires Node.js rather than Python, but the big advantage is that the library I used is blazing fast. For reference, my full texture dump of Metroid Prime had 49k files and this sorted them in about an hour.

The main file (sort.js) also has commented out lines to do the alpha channel extraction that's described above. I haven't written anything to join the files back together yet as I'm waiting for a proof of concept to show that it actually works. In the meantime, waifu2x-caffe provided good enough results for me. Let me know if you have any questions/problems/whatever.

Also, coming soon: Viewtiful Joe!


Just letting you know, in case you missed it and want it, that I did release Java code doing the same thing. See post 974 for that, and feel free to use it to do whatever.

I also briefly worked on code to sort textures based on a number of parameters, but I didn't get a chance to finish it. Honestly I don't know anything about image processing, but basically I'm trying to determine the texture type (e.g. photograph, cartoony, or flat art/fonts) by looking at various parameters like the number of colors, the number of color changes between adjacent pixels, contiguous pixels, and the like.
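A rough NumPy sketch of the kind of features described above (color count and how often adjacent pixels change color). This is not the unfinished Java code; the thresholds and labels in `guess_texture_type` are invented placeholders that would need tuning against real texture dumps.

```python
import numpy as np


def texture_features(rgb):
    """Cheap statistics for guessing a texture's style (H x W x 3 uint8 array)."""
    pixels = rgb.reshape(-1, rgb.shape[2])
    unique_colors = len(np.unique(pixels, axis=0))
    # Boolean maps of horizontally / vertically adjacent pairs that differ
    h_changes = np.any(rgb[:, 1:] != rgb[:, :-1], axis=2)
    v_changes = np.any(rgb[1:, :] != rgb[:-1, :], axis=2)
    pairs = h_changes.size + v_changes.size
    change_ratio = (h_changes.sum() + v_changes.sum()) / pairs if pairs else 0.0
    return unique_colors, float(change_ratio)


def guess_texture_type(rgb, flat_max_colors=16, photo_min_change=0.6):
    """Very rough heuristic; both thresholds are arbitrary starting points."""
    colors, change = texture_features(rgb)
    if colors <= flat_max_colors:
        return "flat art / font"
    if change >= photo_min_change:
        return "photographic"
    return "cartoony"
```

Few colors suggests flat art or fonts; many colors that change at almost every pixel suggests photographic or noisy detail; anything in between lands in "cartoony".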

Also, for upscaling the alpha textures, I honestly don't know what the best algorithm would be, but based on my tests with ESRGAN it's probably not that one, lol. Just try playing around with it and see.


I've been playing around with F-Zero GX, nothing to show yet. Thought I'd share my PowerShell scripts I'm using to separate alpha from images and then combine after processing (yet another solution :) ). Uses PoshRSJob for faster parallel processing and ImageMagick does all the actual work. These have only been tested on my Windows 10 machine.


ImageAlphaUtils.psm1

PowerShell:

```powershell
Function Test-ContainsAlpha($sourceImage)
{
    # ImageMagick reports the channel layout, e.g. something like "srgba" when alpha is present
    $result = & "magick" identify -format '%[channels]' $sourceImage | Out-String
    return ($result -like '*rgba*')
}

Function Expand-ImageAlpha($sourceImage, $rgbImage, $alphaImage)
{
    # Write the alpha channel as a grayscale image, then the color image without alpha
    & "magick" convert $sourceImage -alpha extract $alphaImage
    if ($LASTEXITCODE -ne 0) { return $False }
    & "magick" convert $sourceImage -alpha off $rgbImage
    return ($LASTEXITCODE -eq 0)
}

Function Merge-ImageAlpha($rgbImage, $alphaImage, $newImage)
{
    # Use the grayscale image as the opacity of the color image; force 32-bit PNG output
    & "magick" convert $rgbImage $alphaImage -compose CopyOpacity -composite PNG32:$newImage
    return ($LASTEXITCODE -eq 0)
}
```

SeparateAlphas.ps1 (Pass in a folder; all images get separated out to [filename]_RGB.png and [filename]_ALPHA.png. The originals are deleted, so make sure you have copies of them if you want to keep them.)

PowerShell:

```powershell
param
(
    [string] $inDir
)

$alphaUtilsPath = Join-Path $PSScriptRoot "ImageAlphaUtils.psm1"
$filesToProcess = Get-ChildItem -Path $inDir -Include *.png -Recurse

# Process files in parallel runspaces (PoshRSJob); each job imports the helper module
$filesToProcess | Start-RSJob -ScriptBlock {
    Import-Module $Using:alphaUtilsPath

    if ($_.FullName -like "*_ALPHA.png" -or $_.FullName -like "*_RGB.png")
    {
        "Skipping (already processed): $_"
    }
    elseif (-not (Test-ContainsAlpha $_.FullName))
    {
        "Skipping (no alpha): $_"
    }
    else
    {
        # Only replace the extension at the end of the path
        $alphaName = $_.FullName -replace '\.png$', '_ALPHA.png'
        $rgbName = $_.FullName -replace '\.png$', '_RGB.png'

        if (Expand-ImageAlpha $_.FullName $rgbName $alphaName)
        {
            # Deletes the original, so keep a backup of your dump
            Remove-Item -LiteralPath $_.FullName
            "Processed: $_"
        }
        else
        {
            "Failed: $_"
        }
    }
} | Wait-RSJob | Receive-RSJob
```

CombineAlphas.ps1 (Pass in a folder; all images named [filename]_RGB.png and [filename]_ALPHA.png will be recombined to [filename].png, and [filename]_RGB.png and [filename]_ALPHA.png are deleted.)

PowerShell:

```powershell
param
(
    [string] $inDir
)

$alphaUtilsPath = Join-Path $PSScriptRoot "ImageAlphaUtils.psm1"
$alphaFilesToProcess = Get-ChildItem -Path $inDir -Include *_ALPHA.png -Recurse

$alphaFilesToProcess | Start-RSJob -ScriptBlock {
    Import-Module $Using:alphaUtilsPath

    # [filename]_ALPHA.png pairs with [filename]_RGB.png and becomes [filename].png
    $rgbFileName = $_.FullName -replace '_ALPHA\.png$', '_RGB.png'
    $newFileName = $_.FullName -replace '_ALPHA\.png$', '.png'

    if (-not (Test-Path -LiteralPath $rgbFileName))
    {
        "Skipping (no matching _RGB file): $_"
    }
    elseif (Merge-ImageAlpha $rgbFileName $_.FullName $newFileName)
    {
        Remove-Item -LiteralPath $_.FullName -Force
        Remove-Item -LiteralPath $rgbFileName -Force
        "Processed: $_"
    }
    else
    {
        "Failed: $_"
    }
} | Wait-RSJob | Receive-RSJob
```

I also made modifications to the ESRGAN test.py script to:

- Define your own import and export folders.

- Images in import folder are processed recursively and copied to export folder with same folder structure. Original image filenames are unchanged.

- If processing an image fails for whatever reason, the error is caught and logged and the script continues rather than crashing

- Enough consecutive errors will abort processing (5 by default)

- I moved the main logic to a function. I originally did this to try setting up a workflow where if CUDA fails, try with the CPU instead, but I couldn't get this to work properly

Here's the modified script:

Python:

```python
import glob
import os
import sys
import traceback

import cv2
import numpy as np
import torch

import architecture as arch  # architecture.py from the ESRGAN repository

model_path = sys.argv[1]  # models/RRDB_ESRGAN_x4.pth OR models/RRDB_PSNR_x4.pth
device = torch.device('cuda')  # if you want to run on CPU, change 'cuda' -> 'cpu'
# device = torch.device('cpu')

import_folder = 'C:/FilesToProcess/In'
export_folder = 'C:/FilesToProcess/Out'
fail_log_path = os.path.join(export_folder, '_failureLog.txt')
consecutive_failures_to_abort = 5

model = arch.RRDB_Net(3, 3, 64, 23, gc=32, upscale=4, norm_type=None, act_type='leakyrelu',
                      mode='CNA', res_scale=1, upsample_mode='upconv')
model.load_state_dict(torch.load(model_path), strict=True)
model.eval()
for k, v in model.named_parameters():
    v.requires_grad = False
model = model.to(device)

print('Model path {:s}. \nTesting...'.format(model_path))


def process(path, model, device, import_folder, export_folder):
    # read image (fails loudly on unreadable / non-image files)
    img = cv2.imread(path, cv2.IMREAD_COLOR)
    if img is None:
        raise IOError('could not read image: ' + path)
    img = img * 1.0 / 255
    # BGR (OpenCV) -> RGB, HWC -> CHW
    img = torch.from_numpy(np.transpose(img[:, :, [2, 1, 0]], (2, 0, 1))).float()
    img_LR = img.unsqueeze(0).to(device)

    output = model(img_LR).data.squeeze().float().cpu().clamp_(0, 1).numpy()
    # RGB -> BGR, CHW -> HWC
    output = np.transpose(output[[2, 1, 0], :, :], (1, 2, 0))
    output = (output * 255.0).round().astype(np.uint8)

    # Mirror the import folder structure under the export folder
    export_path = os.path.join(export_folder, os.path.relpath(path, import_folder))
    os.makedirs(os.path.dirname(os.path.abspath(export_path)), exist_ok=True)
    cv2.imwrite(export_path, output)


idx = 0
consecutive_failures = 0

for path in glob.glob(import_folder + '/**/*.*', recursive=True):
    idx += 1
    print(idx, path)
    try:
        process(path, model, device, import_folder, export_folder)
        consecutive_failures = 0
    except Exception as e:
        print('Failed to process ({0})'.format(type(e).__name__))
        os.makedirs(export_folder, exist_ok=True)
        with open(fail_log_path, 'a') as fail_log:
            fail_log.write('***Failed image: ' + path + '\n')
            fail_log.write('Error: ' + str(e) + '\n')
            fail_log.write(traceback.format_exc() + '\n')
        consecutive_failures += 1
        if consecutive_failures >= consecutive_failures_to_abort:
            raise RuntimeError('Maximum consecutive failures reached, aborting processing')
```

At this point I've processed all the textures I have with both the interp_07 and manga109 models, and now I have to figure out the best/fastest way to sort through them all and decide which ones to discard and, of those to keep, which version looks better. There were also a few that required too much video memory for my 6 GB card. For those I can probably use ImageMagick to chop the image into sections which can be processed by ESRGAN separately and then reassembled, but I haven't gotten around to that yet...

Does anyone know a good way to get around VRAM limitations? I have an 11 GB 1080 Ti, but ESRGAN still throws CUDA errors with large files because apparently 4 GB are always reserved by the OS.

You can split your images into several smaller tiles first, then recombine them. You can automate that in the python script when openCV output the numpy array quite easily.


It works, but there's a good chance it will yield seams along the edges after recombining them which you'll have to fix by hand.


Indeed. You need to compute the tiles with some overlap. As long as the overlap is big enough it should work, since the overlapping region then comes out the same in both tiles.
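A minimal sketch of the tile-and-overlap approach, assuming `upscale_fn` is a hypothetical wrapper around the model call from the test.py script above that takes and returns H x W x C NumPy arrays at a fixed `scale`. The `tile` and `overlap` defaults are placeholders; if the overlap is too small for the network's context, seams can still appear.

```python
import numpy as np


def upscale_tiled(img, upscale_fn, scale=4, tile=256, overlap=16):
    """Upscale `img` (H x W x C) tile by tile to limit GPU memory use.

    Each tile is cut with `overlap` extra source pixels on every side; after
    upscaling, only the tile's central part is pasted into the output, so the
    borders the network saw without full context are thrown away.
    """
    h, w = img.shape[:2]
    out = np.zeros((h * scale, w * scale) + img.shape[2:], dtype=img.dtype)
    for y in range(0, h, tile):
        for x in range(0, w, tile):
            # Source window including the overlap margin, clamped to the image
            y0, x0 = max(y - overlap, 0), max(x - overlap, 0)
            y1, x1 = min(y + tile + overlap, h), min(x + tile + overlap, w)
            up = upscale_fn(img[y0:y1, x0:x1])
            # Position and size of the un-padded tile inside the upscaled window
            ty, tx = (y - y0) * scale, (x - x0) * scale
            th = (min(y + tile, h) - y) * scale
            tw = (min(x + tile, w) - x) * scale
            out[y * scale:y * scale + th, x * scale:x * scale + tw] = up[ty:ty + th, tx:tx + tw]
    return out
```

Because only tile centers are kept, each output pixel comes from a tile where it had `overlap` pixels of surrounding context, which is what keeps the seams down compared to naive chopping.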

If you have integrated graphics, you could try using that as your primary GPU. That should free up the 1080 Ti for ESRGAN.

I just tried it. It didn't help. I'm not sure if i was doing something wrong or not.


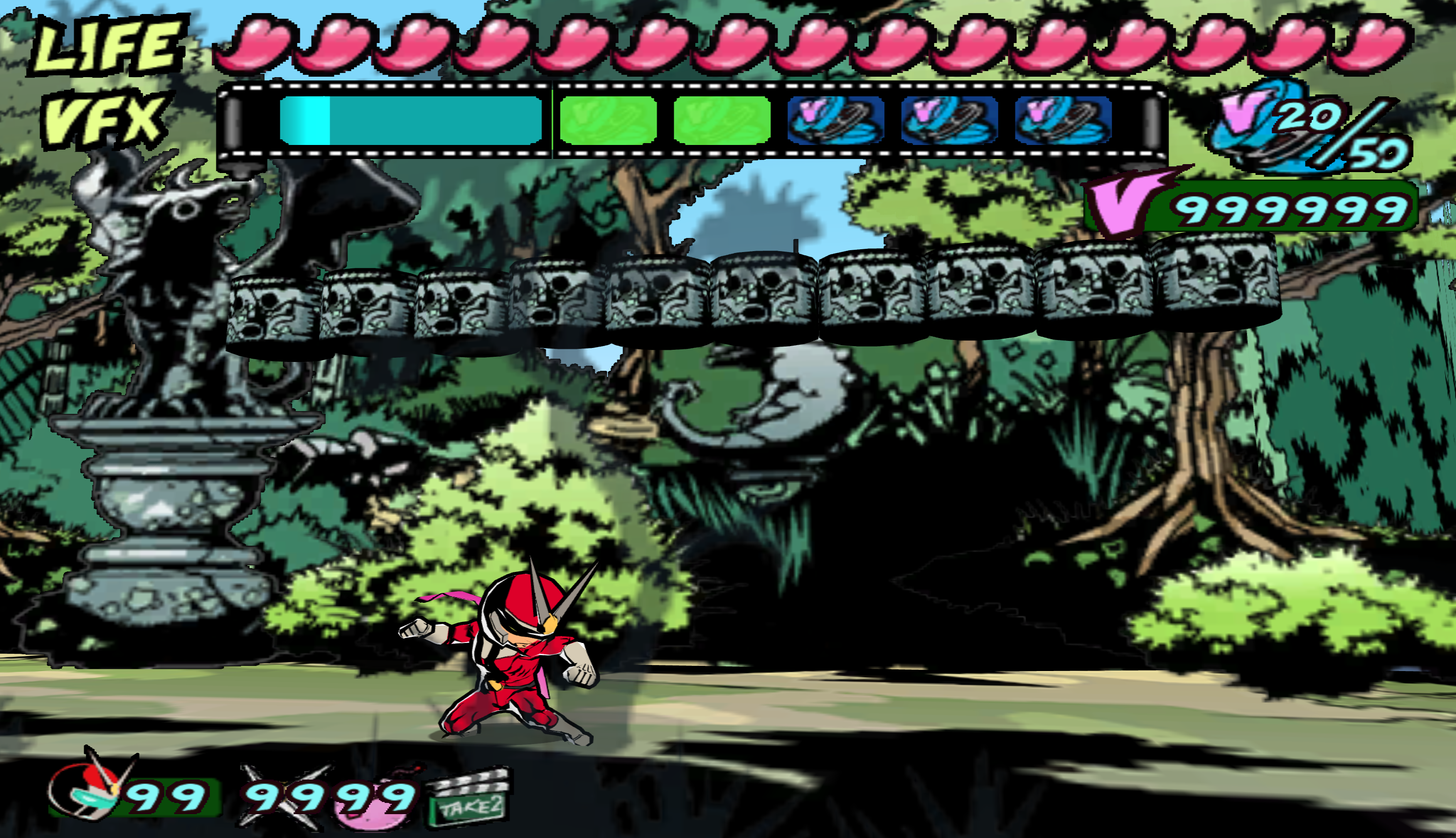

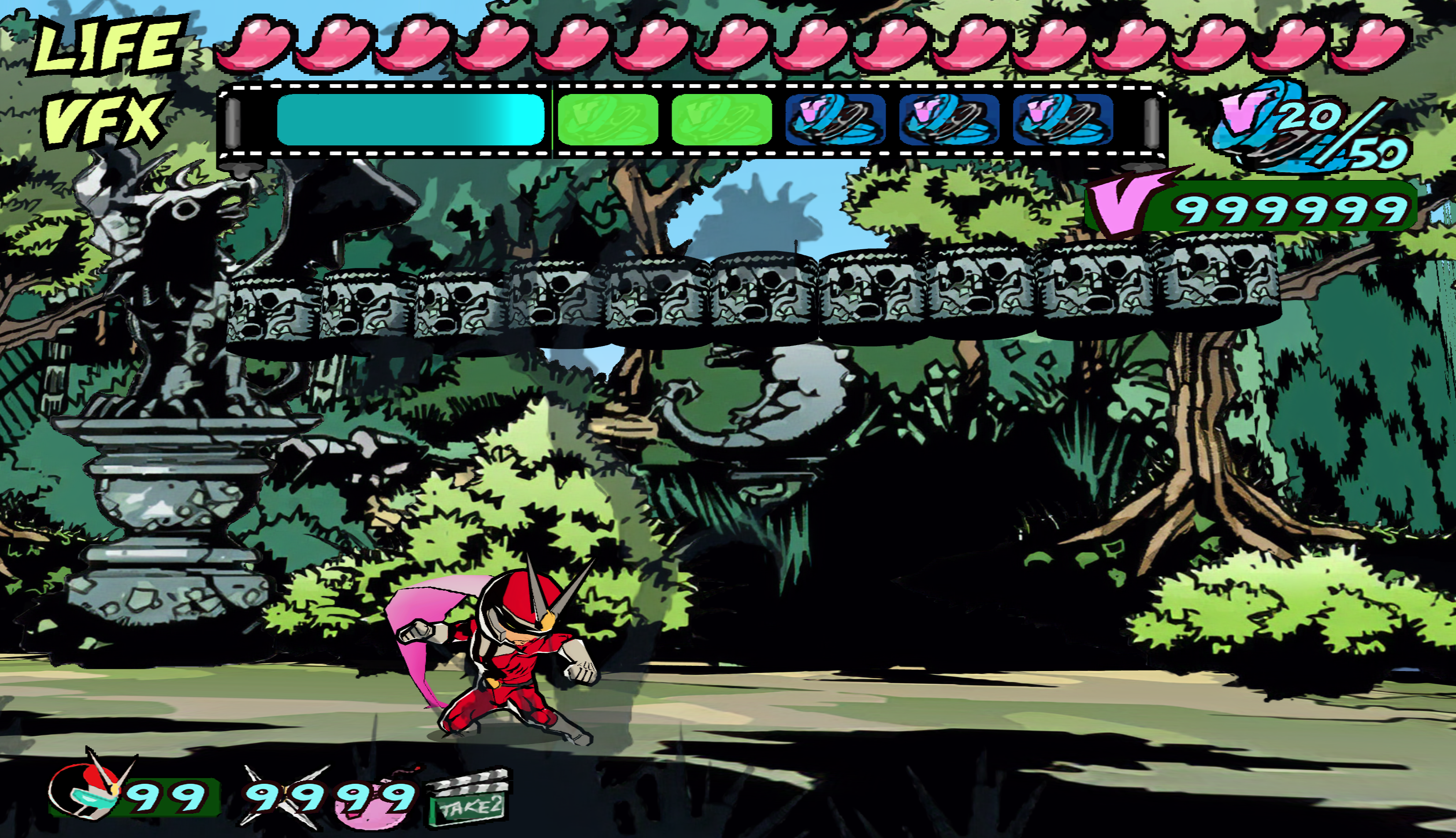

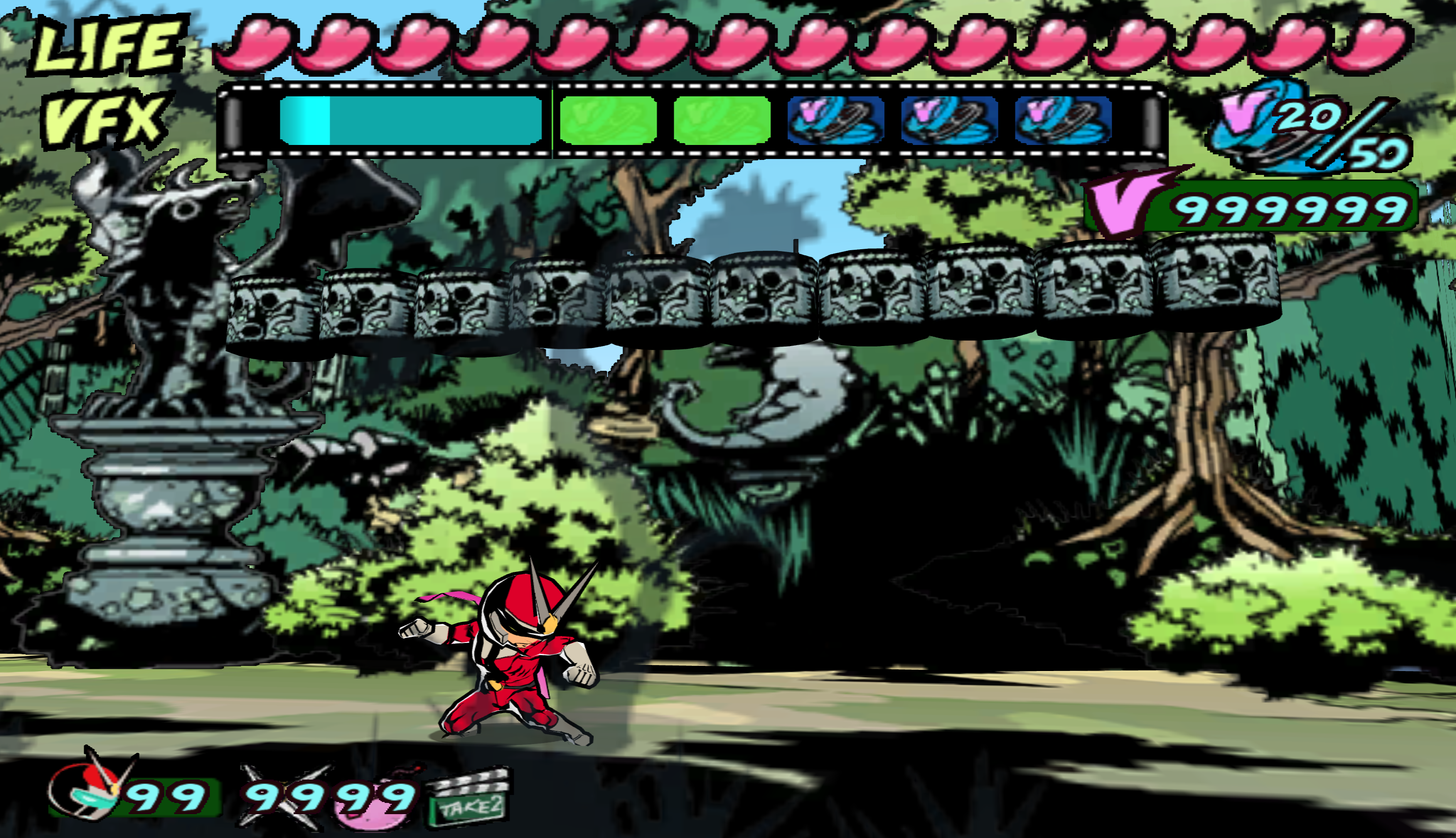

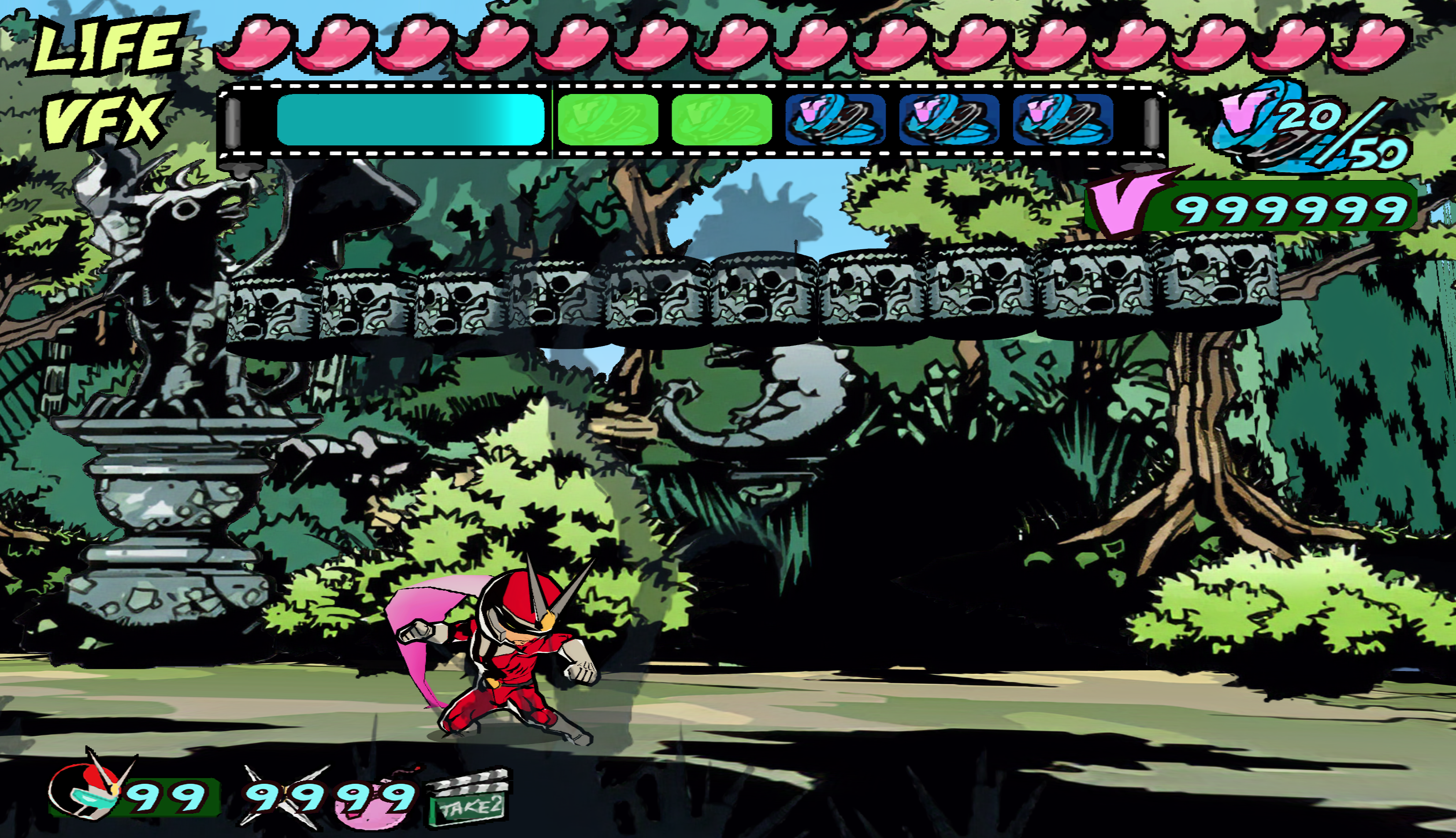

HENSHIN A GO GO BABY

http://screenshotcomparison.com/comparison/128505/picture:0

http://screenshotcomparison.com/comparison/128505/picture:1

A lot of the UI elements still look pretty bad, but thankfully there's already a handmade pack for those that I'll use for the YouTube video. The full pack is coming soon, once I convert everything to DDS.

Edit: That other thread about 16:9 made me realize these widescreen shots might actually be stretched 4:3, but I honestly couldn't tell throughout my entire playthrough. Oops.

Up next: THPS3!


This is just a friendly PSA, if you will... Before upscaling alpha maps, it can be beneficial to take a quick look at them and check whether they have any midtones or are simply bitmaps (100% black and 100% white). If it's the latter, scale them up bicubic to 400% and then scale them down bicubic (or cubic, or whatever) to 25% (this can be automated easily). Now you have anti-aliased your "bitmap", and after running that image through ESRGAN you get a less sawtoothy transition. When you merge it back into your upscaled PNG you can get a more pleasing result overall; before, you had this weird mix of great-looking upscaled textures with a low-res mask applied to them, which was upscaled too, but ESRGAN had different information for the mask so it didn't produce the same smooth result for it. This is more relevant to very low-res alpha maps from old games and less so to higher-res images from new games. Depending on the image it could also have a negative effect, for example if your alpha maps are supposed to mask out rectangular areas of a non-organic image that only shows straight lines with sharp edges. It can depend.
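The "check whether the mask is a pure bitmap" step is the part that is easiest to automate. A minimal NumPy sketch, assuming the masks are already loaded as 2-D uint8 arrays; the function names are hypothetical. The 400% up / 25% down bicubic round trip itself could then be done with something like Pillow's `Image.resize` using bicubic resampling, which is not shown here.

```python
import numpy as np


def is_pure_bitmap(alpha):
    """True if the mask only contains fully transparent (0) and fully opaque (255) pixels."""
    values = np.unique(alpha)
    return bool(np.all(np.isin(values, (0, 255))))


def pick_masks_for_antialiasing(masks):
    """Given {name: alpha_array}, return the sorted names whose masks have no midtones."""
    return sorted(name for name, alpha in masks.items() if is_pure_bitmap(alpha))
```

Masks with midtones are already soft and would be left alone; only the hard-edged ones are candidates for the up/down round trip.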

> Here's Ocarina of Time actually running with some upscaled backgrounds.

The first time ever these backgrounds actually look decent.

Do you have any examples of the recombined final image using this method?

Actually no, lol. I should have prepared something before writing it ... let me look for something where it would show a good difference and I'll get back to you brb

Interesting. I'd guess it would also be pretty easy to do in the other automated solutions people have posted, and it seems easy enough to automate in my extract.py. This part already checks whether the alpha is just white (i.e. no transparency at all):

Python:

```python
white = (255, 255, 255, 255)

# [...] rest of the code

# Get the colors in the alpha channel as (count, color) pairs;
# getcolors() returns None if there are more than 256 distinct colors
colors = bg.getcolors()

# If there is only one color, and it's white (opaque), then do nothing
if colors is not None and len(colors) == 1 and colors[0][1] == white:
    print('no alpha channel')
else:  # else save the alpha channel as a new image with the same name + "_alpha"
    ...  # [...] saving code not shown in the post
```

Here is a rather bad comparison of some palm trees from a SNES Donkey Kong Country sprite sheet:

Mask No AA vs Mask with AA

It can depend on the size of the pixels in the mask. In this case the ESRGAN "Reduced Colors" model was used, and it actually smoothed out the mask OK-ish. The 400%-25% version here includes an additional curves adjustment to increase the contrast of the mask's edges again, because the one-step upscale left them rather fuzzy (a further step that is easily automated, at least in PS; not sure about other image editing software).

The result is not "WOW" in this example but depending on your own standards it can make a difference I think!
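Outside Photoshop, the extra contrast step on the mask edges can be approximated with a levels-style linear stretch. This is a stand-in for a steep curves adjustment, not the exact curve used above; the `low`/`high` defaults and the function name are arbitrary assumptions.

```python
import numpy as np


def sharpen_mask_edges(alpha, low=64, high=192):
    """Map alpha <= low to 0 and >= high to 255, stretching linearly in between."""
    if not 0 <= low < high <= 255:
        raise ValueError("need 0 <= low < high <= 255")
    a = alpha.astype(np.float64)
    stretched = (a - low) * 255.0 / (high - low)
    return np.clip(np.round(stretched), 0, 255).astype(np.uint8)
```

Moving `low` and `high` closer together gives harder edges; keeping them far apart preserves more of the smoothing the upscale produced.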

Thanks. At the moment it looks like there might be too many caveats to use this strategy for batch processing, especially since waifu2x-caffe seems pretty good at handling alpha images to begin with. Ideally, I think it might be even better to modify the ESRGAN script to not throw away the alpha channel, but I have no idea what the data manipulations in the test.py script are doing. Naively, I think simply changing `cv2.IMREAD_COLOR` to `cv2.IMREAD_UNCHANGED` on line 31 of test.py might work, but I'm not at my PC to test it right now.
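On the `IMREAD_UNCHANGED` idea: judging from the test.py code posted earlier in the thread, the model is built with 3 input channels (`RRDB_Net(3, 3, ...)`) and the `img[:, :, [2, 1, 0]]` indexing keeps only three channels, so the alpha would most likely still be dropped (and 16-bit PNGs, which `IMREAD_UNCHANGED` keeps at 16 bits, would no longer match the `/ 255` scaling). One workaround, sketched below under the assumption that `upscale_rgb` is a hypothetical wrapper around the model call, is to run the color and the mask through the model separately and restack them:

```python
import numpy as np


def upscale_keeping_alpha(img, upscale_rgb):
    """Upscale an image that may carry alpha, using a 3-channel-only upscaler.

    `upscale_rgb` takes an H x W x 3 array and returns the upscaled array.
    """
    if img.ndim == 2:
        # IMREAD_UNCHANGED returns a 2-D array for plain grayscale files
        img = np.dstack([img] * 3)
    if img.shape[2] == 3:
        return upscale_rgb(img)
    if img.shape[2] != 4:
        raise ValueError("expected 1, 3 or 4 channels")
    color, alpha = img[:, :, :3], img[:, :, 3]
    up_color = upscale_rgb(color)
    # Feed the mask through the same model as a gray 3-channel image
    up_alpha = upscale_rgb(np.dstack([alpha] * 3))[:, :, 0]
    return np.dstack([up_color, up_alpha])
```

This doubles the model calls for images with alpha, but keeps both halves at exactly the same scale so they line up when restacked.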

I always forget about waifu2x... It's worth trying to see how it handles just the masks and whether they still match an ESRGAN-upscaled color image well enough (I'll try this tomorrow).

I've just been throwing the full color images into it directly without doing any of the alpha channel splitting stuff, and it's been pretty good (see the VJ pic above). I really like the idea of doing the split, but my concern at the moment is that the independently upscaled alpha channel might result in textures being cut off in unpredictable ways, which may or may not be better than just using a naive method.

The limiting factor here is the ability to batch dump and load custom textures/sprites. If you know of a GBA emulator that can do this I would be happy to try, but AFAIK it's way harder to implement for 2D consoles than 3D ones.


If the Joe sprite was done only in waifu2x, then the mask is damn good going by the pic (also the color sprite itself... edit: wasn't he done as polygonal 3D?). The background textures are very well done! My concern is more about detailed images rather than manga/comic-illustration-style images, because I don't like the results waifu2x gives with those; it kills too much detail in my experience. Did you also run the heart HUD elements through waifu2x? Those masks (black border) I could see being improved (if they stem from an alpha mask?). Everywhere I see a sawtooth I feel like it could be improved, but honestly it's a lot of trial & error too.

Every image with an alpha channel went through waifu2x, while those without went through ESRGAN. I just checked in Photoshop, and yeah, it's an alpha mask. Here's the original sprite if you wanna mess around with it.

https://abload.de/img/tex1_61x56_e52cf6d5efggkhf.png

I agree with your point about waifu2x on images, but in practice it seems like games generally don't use photorealistic styles on alpha textures outside of stuff like foliage.

Every image with an alpha channel went through Waifu2x, while those without went through ESRGAN. I just checked in Photoshop, and yeah, it's an alpha mask. Here's the original sprite if you wanna mess around with it.

https://abload.de/img/tex1_61x56_e52cf6d5efggkhf.png

I agree with your point about Waifu2x on detailed images, but in practice it seems like games generally don't use photorealistic styles on alpha textures outside of stuff like foliage.

I checked that sprite and the mask can be improved quite a bit (the reduced color model doesn't fit the art style, though, even if it retains the original pixels more closely, and it's a bit too blurry too). It's still additional steps, of course, so I'm not saying it's a one-click thing (unless it's done as a PS action script, which still complicates matters and increases the time spent on everything).

And yeah, you are right about realistic alpha textures. I only meant the visible texture itself in that case, because (if I didn't misunderstand you) if the mask goes through Waifu2x, the color does too, right? I was thinking about scaling the color with ESRGAN and the mask with Waifu2x, but not today, nap time here :)

I'll report back tomorrow on whether that works or has its problems. It will probably result in seams if the transparency is not replaced with the same color you see at the very edge of the color image's mask border, because that most definitely won't match pixel-perfect anymore. That's sort of the case already when splitting the process so the color goes through ESRGAN and the mask is treated a little differently.
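
The seam issue above is commonly handled by "edge padding" (alpha bleeding): before the color layer is upscaled, the fully transparent pixels are filled outward with the colors of the nearest visible pixels, so the upscaler never sees an arbitrary fill color right at the mask edge. Below is a minimal NumPy sketch, assuming the texture is already loaded as an RGBA `uint8` array; `bleed_edge_colors` and the 4-neighbour averaging rule are illustrative choices, not part of any tool mentioned in the thread.

```python
import numpy as np


def bleed_edge_colors(rgba: np.ndarray, iterations: int = 8) -> np.ndarray:
    """Fill fully transparent pixels with the average color of their already
    filled 4-neighbours, growing outward one pixel per pass. Alpha is untouched."""
    rgb = rgba[..., :3].astype(np.float64)
    filled = rgba[..., 3] > 0  # pixels whose color we trust
    h, w = filled.shape
    for _ in range(iterations):
        if filled.all():
            break
        # Pad with "not filled" so border pixels don't borrow from the far side.
        prgb = np.pad(rgb, ((1, 1), (1, 1), (0, 0)))
        pok = np.pad(filled, 1)
        total = np.zeros_like(rgb)
        count = np.zeros((h, w))
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):  # up, down, left, right
            nb_rgb = prgb[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
            nb_ok = pok[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
            total += nb_rgb * nb_ok[..., None]
            count += nb_ok
        # Only pixels that are still empty but touch a filled pixel grow this pass.
        grow = ~filled & (count > 0)
        rgb[grow] = total[grow] / count[grow][:, None]
        filled |= grow
    out = rgba.copy()
    out[..., :3] = np.round(rgb).astype(np.uint8)
    return out
```

With Pillow, the array would come from something like `np.asarray(Image.open(path).convert("RGBA"))`. A handful of iterations is usually enough, because only the band a few pixels wide around the mask edge affects the upscaled result.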

I just ran my sorting script through all the textures for THPS3 and annoyingly, it seems like pretty much all the textures were dumped with 0.5 transparency so I can't easily tell which ones actually use the alpha channel. I'm thinking that the best solution would be to:

1. Split the alpha channel into its own b&w image

2. Scale both images with ESRGAN independently

3. Merge the two back together

I could just scale the alpha channel with a normal upscaling method to get similar results as waifu2x, but I'm curious. Do you have any other ideas that I could implement in code so that it could be run on 1000's of textures?
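
Here is a rough sketch of those three steps in Python with Pillow. It also includes a check for the "dumped with 0.5 transparency" problem: if every pixel has the same alpha value, the channel carries no mask shape and the texture can take the plain RGB path. This assumes the dump consists of RGBA PNGs. `upscale` is a placeholder, a Lanczos resize standing in for ESRGAN, because how ESRGAN gets invoked depends on your setup. All function names here are hypothetical.

```python
from pathlib import Path

from PIL import Image


def uses_alpha(img: Image.Image) -> bool:
    """True if the alpha channel actually varies. A constant alpha (for example
    every pixel at 128 from a 0.5-transparency dump) carries no mask shape."""
    if img.mode != "RGBA":
        return False
    lo, hi = img.getchannel("A").getextrema()
    return lo != hi


def upscale(img: Image.Image, factor: int) -> Image.Image:
    # Placeholder for ESRGAN: a plain Lanczos resize so the sketch runs standalone.
    return img.resize((img.width * factor, img.height * factor), Image.LANCZOS)


def split_scale_merge(img: Image.Image, factor: int = 4) -> Image.Image:
    rgba = img.convert("RGBA")
    # 1. Split the alpha channel into its own greyscale image.
    color = rgba.convert("RGB")
    alpha = rgba.getchannel("A")
    # 2. Scale both images independently.
    big_color = upscale(color, factor)
    big_alpha = upscale(alpha, factor)
    # 3. Merge the two back together.
    big_color.putalpha(big_alpha)
    return big_color


def process_folder(src: Path, dst: Path, factor: int = 4) -> None:
    """Batch over a dump folder; only textures with a real mask take the split path."""
    dst.mkdir(parents=True, exist_ok=True)
    for path in sorted(src.glob("*.png")):
        img = Image.open(path)
        if uses_alpha(img):
            out = split_scale_merge(img, factor)
        else:
            out = upscale(img.convert("RGB"), factor)
        out.save(dst / path.name)
```

Because `upscale` is the only place that touches the scaler, you can swap in ESRGAN for the color layer only and keep a conventional resize for the alpha without changing the rest of the code.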

I don't know how to implement this in a script (my JavaScript is trash), but it would look something like this in PS as a recorded action.

1. Make alpha channel active - Select alpha channel

2. Channel to selection (either in the alpha itself or one of the color channels with alpha active)

3. Create new layer, make active and fill selection with white.

4. Select none, then create a new layer below or fill the background layer with black.

5. Merge layers down, then export to PNG.

For the color it can be tricky, because what color the transparency is replaced with while scaling could matter. Maybe replace the transparency with 50% gray? Black or white could both end up too visible if the next step is not done properly (or even if it is). When the upscaled mask is merged back into the color, it has to be at least a few pixels smaller than the visible part of the image, so that it cuts away some of the wavy jaggies in the color and none of the replacement color is visible right at the edge.

6. Scale the separate black-and-white (mask) image up to 400% and then back down to 25%. Then scale it with the same method as the color image!

7. Increase contrast with either the curves or the levels tool. Make sure to tip the scale towards "more black" instead of white, or else you could get seams. You probably want to try this on one image first, and if it looks good, run it in bulk. Depending on the image, this gives the mask its sharp edge back.

8. Merge alpha back into color.

9. If you look at a few images and you don't like the mask in all of them (despite having tested this method on one image in the beginning), you can run a script/action on the alpha channel now and change the contrast or even expand/decrease the mask via filters. No need to do everything from scratch.

In my head this is all quite doable and "easy"... but as you may know, in practice it can be something else!
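
For script use, here is a rough Pillow translation of the mask-preparation part of that action. Several details are assumptions: alpha values of 128 and above count as opaque when the black/white mask is built, the transparent area is filled with 50% gray as suggested above, and "a few pixels smaller" is implemented as a minimum (erode) filter. The upscaler itself (the second half of step 6) is left out. The function names are hypothetical.

```python
from PIL import Image, ImageFilter


def binary_mask(img: Image.Image, threshold: int = 128) -> Image.Image:
    """Steps 1-5: turn the alpha channel into a pure black/white image."""
    alpha = img.convert("RGBA").getchannel("A")
    return alpha.point(lambda v: 255 if v >= threshold else 0)


def gray_filled_color(img: Image.Image) -> Image.Image:
    """Color layer with the transparent area replaced by 50% grey before scaling."""
    rgba = img.convert("RGBA")
    out = Image.new("RGB", rgba.size, (128, 128, 128))
    out.paste(rgba.convert("RGB"), mask=binary_mask(rgba))
    return out


def soften_mask(mask: Image.Image, factor: int = 4) -> Image.Image:
    """Step 6 (first half): 400% up, then back down to 25%, which turns the
    hard staircase edge into a short grey ramp."""
    w, h = mask.size
    big = mask.resize((w * factor, h * factor), Image.BICUBIC)
    return big.resize((w, h), Image.BICUBIC)


def shrink_mask(mask: Image.Image, pixels: int = 1) -> Image.Image:
    """Pull the white area in by `pixels` so the grey fill never shows at the
    edge; ImageFilter.MaxFilter would grow it instead (step 9)."""
    return mask.filter(ImageFilter.MinFilter(2 * pixels + 1))
```

After `soften_mask`, both the mask and the output of `gray_filled_color` would go through the same upscaler. The contrast step then re-sharpens the mask, and `putalpha` merges it back into the color (step 8).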

I've got steps 1-6 down, which gives me this image (pre ESRGAN): https://abload.de/img/tex1_61x56_e52cf6d5ef78jf4.png It looks like your "antialiasing" idea works as well.

I'm not really sure how to deal with the contrast, since my library doesn't have that option, unless you think sharpening or gamma correction could accomplish the same effect. I guess I'll have to do some testing.

First scale the image up with ESRGAN, then use the curves tool in PS (or a similar tool in PS or other image-editing software).

https://digital-photography-school.com/understand-curves-tool-photoshop/

To increase contrast, grab the point in the bottom left and drag it to the right until it is somewhere in the middle, but keep it at the bottom; then grab the point in the top right and drag it to the left until it is in the middle, but keep it at the top. Play around with this and watch how the gradient of the black-and-white image changes. You want it sharper and less soft (doing it this way is cleaner and easier than using filters). You can do this while the image is still a separate black-and-white image or once it's an alpha channel; as an alpha channel you can see the effect on the image directly, so that's probably preferable. When you are satisfied with how the mask looks in the image, save the curve and run it on all the other black-and-white mask images (maybe try 5-10 first to see if it looks good on all of them, then on thousands). You can use other tools or reproduce what the tool does inside a script (if you know how?), but I like the curves tool, and the automation you can do in Photoshop is quite good (in GIMP you can probably do the same by writing a Python script).
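
If a library has no contrast or curves function, the adjustment described above can be built by hand. Dragging the bottom-left point right and the top-right point left, with both kept on their edges, is a levels remap: everything at or below the new black point becomes 0, everything at or above the new white point becomes 255, and values in between are stretched linearly. A minimal NumPy sketch follows. The default points are guesses to tune per game, and both function names are hypothetical. Gamma correction alone would only bend the midtones and would not steepen the ramp at both ends in the same way.

```python
import numpy as np


def levels_lut(black: int, white: int) -> np.ndarray:
    """256-entry lookup table: inputs <= black -> 0, inputs >= white -> 255,
    linear in between (the two curve end points dragged toward the middle)."""
    if not 0 <= black < white <= 255:
        raise ValueError("need 0 <= black < white <= 255")
    x = np.arange(256, dtype=np.float64)
    y = (x - black) / (white - black) * 255.0
    return np.clip(np.round(y), 0, 255).astype(np.uint8)


def sharpen_mask(mask: np.ndarray, black: int = 112, white: int = 176) -> np.ndarray:
    """Apply the table to a uint8 greyscale mask. Choosing black + white > 255
    puts the midpoint above 128, tipping the edge toward "more black"."""
    return levels_lut(black, white)[mask]
```

With Pillow, the same table can be applied to an "L" image via `img.point(levels_lut(112, 176).tolist())`.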

So it looks like it works. Left is Waifu2x; right is ESRGAN used for both the main image and the alpha channel. I tried doing some brightness manipulation on the alpha channel like you recommended, but it didn't seem to make a difference. It also looks like ESRGAN adds way more noise than Waifu2x, which makes sense given their natures. ESRGAN also freaks out around the edges, which sucks, and I'm not sure if that's fixable.

I'm thinking I might just keep using waifu2x for Viewtiful Joe specifically since it fits the artstyle better. I'm gonna try throwing all of THPS3 at it overnight and see if it's usable.

I just tried another sample, and the results can vary; it's not as simple as I had hoped (this is purely an example for the black outline, not for the upscale result of the color image):

Left: unaltered mask. Middle: 400% up, then 25% down. Right: extra adjustment.

The big image has some midtones in it from the get-go, and the simple up/downscale in the middle does not make a significant enough difference. The right image is what I got here and there, but in this case I needed to adjust the mask even further (blur and contrast), which can create problems for the mask at the borders.

It's not as cut-and-dried as I'd hoped. It's a shame, really, that the outline looks so rough in some of these images; there has to be a simple way.
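
The extra "blur and contrast" adjustment mentioned above can be scripted as a blur followed by a steep levels curve. The blur turns stair-steps into gentle slopes, and the steep curve makes the edge crisp again, so that it follows a smoother path. Below is a minimal Pillow sketch, assuming an 8-bit greyscale mask. The radius and the black/white points are guesses, and `smooth_jagged_mask` is a hypothetical name. Because the blur spreads the mask before it is re-sharpened, thin details can shrink or disappear, so try it on a few textures first.

```python
from PIL import Image, ImageFilter


def smooth_jagged_mask(mask: Image.Image, radius: float = 1.5,
                       black: int = 100, white: int = 156) -> Image.Image:
    """Blur the mask so stair-steps become slopes, then apply a steep levels
    curve so the edge turns hard again along the smoothed outline."""
    blurred = mask.convert("L").filter(ImageFilter.GaussianBlur(radius))
    span = white - black
    # Linear remap between the two points, clamped to 0..255.
    lut = [min(255, max(0, round((v - black) * 255 / span))) for v in range(256)]
    return blurred.point(lut)
```

A larger radius smooths coarser stair-steps but rounds off more of the shape. Moving `black` and `white` closer together makes the final edge harder.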

Just a thought, but would an FXAA or MLAA filter on the separated alpha fix the rough edge and give a better upscale?

Tried to find the old executable which handled images, but couldn't find it.

I was about to suggest that. FXAA (or, probably even better, SMAA 1x) should do an excellent job on any aliased binary bitmap.

If you want to use this type of method for video (like anime), is there a different program to use for that, or would you have to go frame by frame to do it?

Ideally you'd use a network model that is specific for video (like TecoGAN), as these still image networks won't be temporally stable enough.

That one looks cool, but is it something that's available to use? Or is there anything similar?

TecoGAN hasn't had an implementation made publicly available yet, AFAIK, but there are some other implementations of different models you can look through.

The problem is that simple anti-aliasing is not enough for the upscaling algorithm to smooth out a jaggy line when it upscales it. I'm not exactly sure why... sometimes it works better than other times. Maybe the resolution is not optimal, or the pixels are arranged in rectangles, or whatever. Anyway, just for the fun of playing around, here is a mixed comparison, mostly DKC. Low res to high res using ESRGAN, Waifu2x, EnhanceNet and Photoshop:

Mixed comparison of different game sprites, overlaid in PS