Yes, the system-wide supersampling implemented by Sony is terrible. The earlier games with native downsampling looked much better than these latest ones.

And maybe it's only a placebo effect, but I'm noticing very, very slight IQ losses in other games too (a blurrier image), like UC4 or AC: Origins, i.e. the games with no options and only native SS by default. I think the system-wide supersampling option overrides the native method, and that's not a good thing.

This is something DF should investigate. When the firmware was released, I remember they compared The Last of Us and claimed the image was identical. But that was only one game, and one with user options. Maybe in games with an SS/framerate user choice (God of War, Horizon, The Last of Us, etc.), the native method implemented by the devs takes priority over the system-wide one.

I think games with no options and only native SS should be the subject of further investigation now that the firmware with system-wide downsampling has been released.

The system-wide supersampling mode is not exactly "terrible"... it's just that the way the system works is kind of convoluted, because when you are developing, you basically have to define how the software will work according to the resolution available on the display...

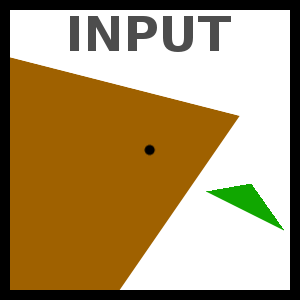

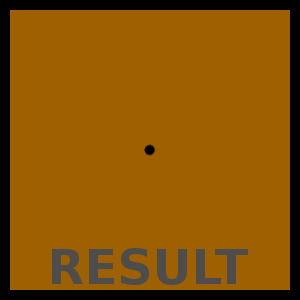

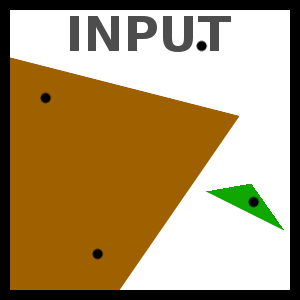

When developing for PS4 Pro, your software must "read" the output resolution of the TV/monitor and then pick a specific profile that the software/game is going to run with... so, for instance, when you boot The Evil Within 2:

TEW2 with system Super Sampling OFF (default)

-if connected to a 1080p display or lower:

profile 1 // game will display native 1080p (no downscale from higher resolutions), and use extra GPU performance to improve frame rate (kind of a "performance mode")

-if connected to a 4k display:

profile 2 // game will display "4k" (basic upscale from a 1260p buffer), targeting 30fps

What the "system-wide supersampling mode" basically does is inform the game that the console is hooked up to a 4k display (when it's not)... and then the final image is downscaled to the lower-resolution output at the end. So in the example above, the game will assume "profile 2"... and then the frame is downscaled to 1080p at the end:

TEW2 with Super Sampling ON

-if connected to a 1080p display or lower:

profile 2 + downscale // game will process a 4k frame (basic upscale from a 1260p buffer), targeting 30fps, which is then downscaled to a 1080p output at the end

-if connected to a 4k display:

profile 2 // game will display "4k" (basic upscale from a 1260p buffer), targeting 30fps (NO CHANGE)

In that situation, you basically lose the "performance mode" when using SS...
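To make the TEW2 walkthrough concrete, here is a minimal Python sketch of the selection logic as described in this post. The names (`Profile`, `reported_display`, `pick_profile`, `tew2_pipeline`) and the "render / upscale / downscale" step model are hypothetical illustrations, not Sony's SDK or the game's actual code; the resolutions are the ones quoted above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Profile:
    name: str
    buffer_height: int  # vertical resolution the game actually renders
    output_height: int  # resolution of the frame the game hands to the system


# Hypothetical profile table for TEW2, using the resolutions quoted in the post.
TEW2_PROFILES = {
    1: Profile("performance", 1080, 1080),
    2: Profile("4k/30", 1260, 2160),
}


def reported_display(display_height: int, system_ss: bool) -> int:
    # With system-wide SS on, the console tells the game a 4k display is
    # connected, whatever is really plugged in.
    return 2160 if system_ss else display_height


def pick_profile(reported_height: int) -> int:
    # The game only sees the reported output: 4k -> profile 2, lower -> profile 1.
    return 2 if reported_height >= 2160 else 1


def tew2_pipeline(display_height: int, system_ss: bool) -> List[str]:
    profile = TEW2_PROFILES[pick_profile(reported_display(display_height, system_ss))]
    steps = [f"game renders {profile.buffer_height}p ({profile.name})"]
    if profile.output_height > profile.buffer_height:
        steps.append(f"game upscales to {profile.output_height}p")
    if profile.output_height > display_height:
        steps.append(f"system downscales to {display_height}p")
    return steps


for ss in (False, True):
    print(f"SS {'ON' if ss else 'OFF'}, 1080p display:", tew2_pipeline(1080, ss))
```

On a 1080p display, SS OFF gives the single "performance" step, while SS ON swaps it for the 1260p render, upscale and system downscale chain; on a 4k display both settings produce the same steps, which is the "NO CHANGE" case.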

Let's now take Uncharted 4, for instance:

UC4 (single player) with Super Sampling OFF (default)

-if connected to a 1080p display or lower:

profile 1 // game will display a 1080p frame, downscaled by the software/game from a 1440p buffer (basically SSAA from a 1440p buffer)

-if connected to a 4k display:

profile 2 // game will display "4k", a basic upscale by the software/game from a 1440p buffer.

So when using system-forced supersampling:

UC4 (single player) with Super Sampling ON

-if connected to a 1080p display or lower:

profile 2 + downscale // game will process a 4k frame (upscaled from a 1440p buffer), which is then downscaled to a 1080p output at the end

-if connected to a 4k display:

profile 2 // game will display "4k", upscaled from a 1440p buffer. (NO CHANGE)

So instead of downscaling directly from a 1440p buffer, "forced" supersampling downscales a 4k image that was previously upscaled from a 1440p buffer... (that's why TLOU showed the same results in DF's analysis: its default profile already runs the game @2160p/30fps in the buffer, unless changed in the settings)
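The difference for UC4 on a 1080p display is easiest to see as a chain of resampling passes. This is a small illustrative Python sketch (the `resample_chain` helper is hypothetical), using only the resolutions from the post; it doesn't model the actual filters, which the post doesn't specify.

```python
from fractions import Fraction
from typing import List, Tuple


def resample_chain(heights: List[int]) -> List[Tuple[int, int, Fraction]]:
    """(from, to, scale factor) for every resampling pass in a chain of vertical resolutions."""
    return [(a, b, Fraction(b, a)) for a, b in zip(heights, heights[1:])]


# UC4 single player on a 1080p display, resolutions taken from the post.
chains = {
    "native SS (profile 1)": [1440, 1080],        # game downscales its 1440p buffer directly
    "forced SS (profile 2)": [1440, 2160, 1080],  # 1440p -> "4k" upscale -> system downscale
}

for name, chain in chains.items():
    passes = resample_chain(chain)
    net = Fraction(chain[-1], chain[0])
    print(name, [f"{a}p->{b}p x{s!s}" for a, b, s in passes], "net", net)
```

Both paths end at the same net 3/4 scale, but the forced path goes through two resampling passes (x3/2 then x1/2) instead of one. The post's point is that this extra upscale-then-downscale round trip can add "noise" rather than detail; how visible that is depends on the scaling filters used.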

That's why the supersampling mode is turned off by default... because it's not how the devs originally intended the software to work... and because downscaling a 4k image (which in most cases was originally upscaled from a lower-resolution buffer) can introduce "noise" and lower the IQ instead of improving it...

Compared to XB1X, the difference is that developers there can't know what kind of display they are connected to... so the system always automatically downscales (or upscales) the buffer to the connected display... So devs are basically forced to render at the highest resolution possible in the buffer when working with the "enhanced mode" (XB1X mode), because in the end the system will always downscale by itself... or to implement separate "performance modes" (forcing lower-resolution frames in the buffer) directly in the software (more or less like the latest Tomb Raider games did)
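For contrast, a rough Python sketch of the XB1X behaviour as described here: the game picks its buffer only from its own in-game option, and the system always fits that buffer to the display. The function names and the 2160p/1080p mode resolutions are hypothetical placeholders, not figures from any specific game or from Microsoft's SDK.

```python
def game_buffer_height(performance_mode: bool, enhanced_height: int, performance_height: int) -> int:
    # The game's only lever is its own in-game option; it has no display size to read.
    return performance_height if performance_mode else enhanced_height


def system_scale(buffer_height: int, display_height: int) -> str:
    # The system, not the game, always fits the buffer to whatever display is connected.
    if buffer_height > display_height:
        return f"system downscales {buffer_height}p -> {display_height}p"
    if buffer_height < display_height:
        return f"system upscales {buffer_height}p -> {display_height}p"
    return f"{buffer_height}p shown as-is"


# Hypothetical numbers: enhanced mode at 2160p, a separate performance mode at 1080p.
for display in (1080, 2160):
    buf = game_buffer_height(False, enhanced_height=2160, performance_height=1080)
    print(f"{display}p display:", system_scale(buf, display))
```

Because `display` never reaches `game_buffer_height`, the game can't switch to a performance profile just because a 1080p TV is connected, which is the trade-off discussed next.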

It's a more streamlined way to work... because it gives developers fewer options to deal with (hardware-wise)... The problem, though, is that devs can't customize the experience according to the connected display, as PS4 Pro allows (for instance, TEW2 does not have a "performance profile" on X like it does on Pro)...

But when we look at what developers in general are doing with PS4 Pro... (like Rockstar, which left RDR2 [when playing on Pro with a 1080p display] on the same quality profile as the base slim/fat PS4, considerably lowering IQ on 1080p displays, and even on 4k displays they could not implement checkerboarding properly), I believe that, even though having more options is usually good, developers in general don't implement them well... unfortunately... (that's why we see lots of games running @1440p/30fps instead of using the doubled GPU performance and the ID buffer to implement CBR, GR or TR to properly run @4k/30 or close to it, as the hardware was originally intended to be used...)