This is the problem right here.

The issue with SDR is that modern displays have capabilities which can far exceed the SDR spec in terms of brightness and color gamut.

Setting color space to "native" stretches out the film's BT.709 color to the display's native color space; i.e. as vivid and saturated as it can possibly make it, disregarding all color accuracy.

Since it's an HDR display, that means it can make things very vivid. Selecting the native color space option would not be nearly as vivid on a non-HDR display.

So you've taken content designed for a small color space and stretched it out to a much larger color space.
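To put rough numbers on that, here is a minimal Python sketch of the two paths for a pure BT.709 red. It assumes the panel's native gamut can be approximated by BT.2020 (real panels cover less, as noted further down) and uses the rounded linear-light BT.709 to BT.2020 matrix from ITU-R BT.2087. The function names are made up for illustration.

```python
# Linear-light BT.709 -> BT.2020 matrix (rounded values from ITU-R BT.2087).
# Assumption: BT.2020 stands in for the display's "native" gamut here.
BT709_TO_BT2020 = (
    (0.6274, 0.3293, 0.0433),
    (0.0691, 0.9195, 0.0114),
    (0.0164, 0.0880, 0.8956),
)


def convert_709_to_2020(rgb):
    """Accurate path: re-express a linear BT.709 color in wide-gamut coordinates."""
    return tuple(sum(m * c for m, c in zip(row, rgb)) for row in BT709_TO_BT2020)


def native_stretch(rgb):
    """'Native' path: feed the same numbers straight to the wide-gamut primaries."""
    return tuple(rgb)


bt709_red = (1.0, 0.0, 0.0)
accurate = convert_709_to_2020(bt709_red)
stretched = native_stretch(bt709_red)

print("accurate :", accurate)   # only partway toward the panel's red primary
print("stretched:", stretched)  # driven all the way to the panel's red primary
```

In the accurate path, the film's most saturated red only uses about 63% of the wide-gamut red channel, with a little green and blue mixed in. In the stretched path, it lands on the most saturated red the panel can produce.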

HDR, on the other hand, is designed to use that large color space. It gives artists a larger palette to work with, rather than automatically making everything more vivid.

And in fact, the HDR color space is so large that none of the displays available today have full coverage. Your KS8500 has 63% coverage, and Samsung's QLED displays still only have 73% coverage.

HDR is about extending the range of brightness and saturation available.

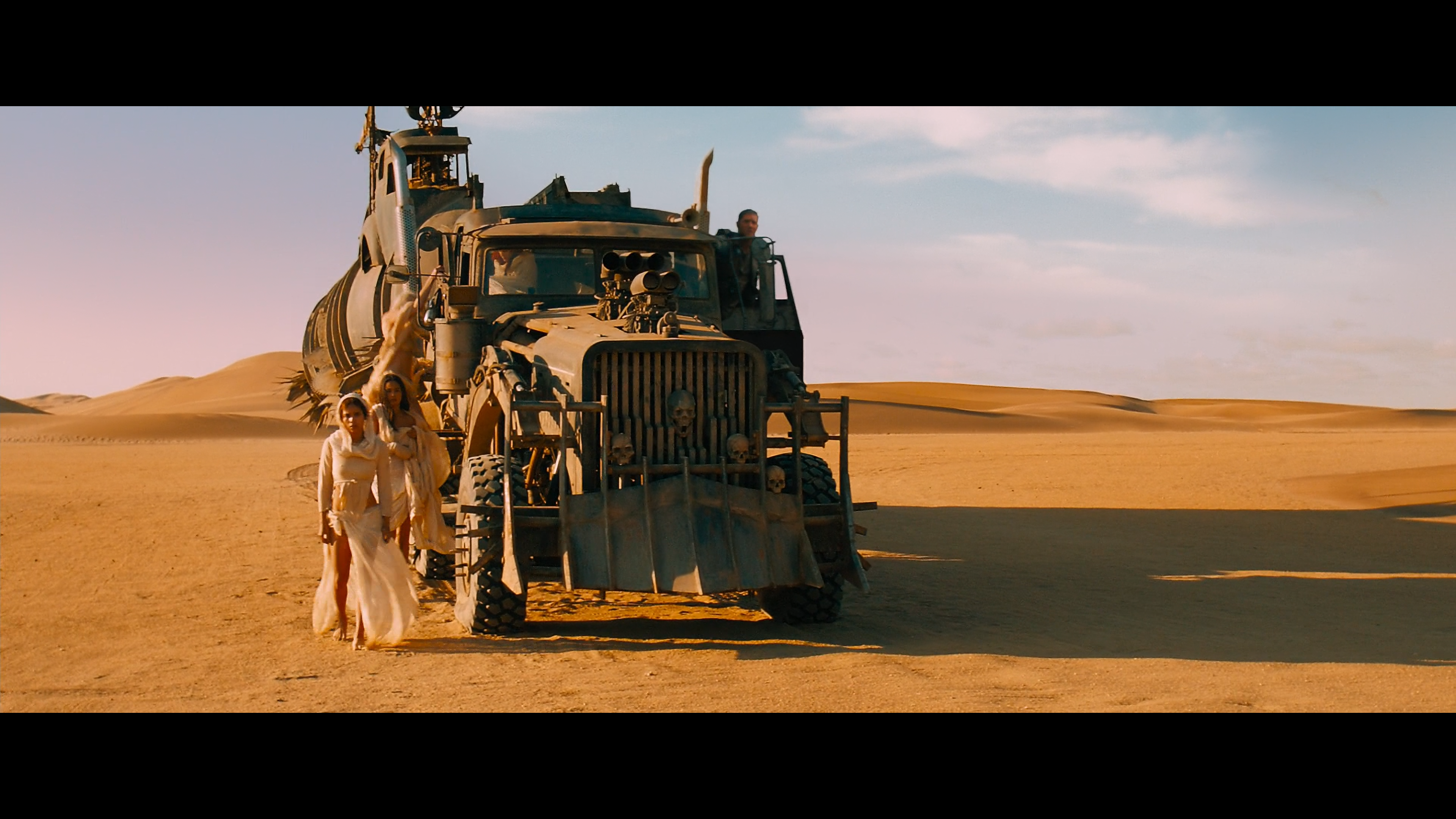

Here's a good comparison of Mad Max: Fury Road on two properly calibrated displays:

Overall scene brightness is the same, and if anything, the HDR image is somewhat muted compared to the SDR image. However, there is a lot more range.

The sky in the SDR image is flat and uniform because there's a limit on how bright things can get. In HDR, the sun and lens flare are very distinct against the sky due to the increased brightness range - and far more obvious in person.

The color of the sand is richer in HDR, rather than being more vivid, with deeper reds than you would get in SDR.

Now HDR content can get more vivid than SDR content is supposed to, but only where appropriate. It doesn't automatically make the picture more vivid than SDR.

Even if you wanted to, you don't have the option of oversaturating the image with HDR like you do with SDR.

Now it would appear that most manufacturers are giving people full control over picture settings in newer HDR displays, which really goes against a lot of what the spec intended to fix, so you are able to increase the color control to 100 and oversaturate the image that way.

But increasing the color control does not increase saturation the same way that increasing the color gamut does.

Increasing the color control will clip colors, so anything which is supposed to be bright and saturated loses all detail and turns into a solid mass of that color. Increasing saturation by expanding the color space does not do this.
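The clipping is easy to see with a toy model. The sketch below assumes the color control behaves like a simple saturation gain that pushes each channel away from the pixel's luma and then clamps to the valid range. Actual TV processing is not documented here and will differ, so treat `boost_color` as a hypothetical stand-in.

```python
def luma_709(rgb):
    """BT.709 luma weights applied to an (r, g, b) triple in the 0..1 range."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def boost_color(rgb, gain):
    """Hypothetical color control: scale each channel's distance from luma, then clip."""
    y = luma_709(rgb)
    return tuple(min(1.0, max(0.0, y + gain * (c - y))) for c in rgb)


# Two different saturated reds, e.g. neighbouring shades in a red object.
red_a = (0.80, 0.10, 0.10)
red_b = (0.90, 0.05, 0.05)

boosted_a = boost_color(red_a, 2.0)
boosted_b = boost_color(red_b, 2.0)

print(boosted_a, boosted_b)
print("detail lost:", boosted_a == boosted_b)
```

Both shades end up as the same fully clipped red, so whatever texture separated them is gone. A wider gamut avoids this because the extra saturation comes from the primaries themselves, not from pushing the signal past its limits.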

It's a similar story with the backlight and dynamic contrast. SDR is intended to be viewed at 100 nits, but most displays can push that to 400 nits or higher. So you can push the brightness to 4x higher than intended.

The HDR spec for brightness is far beyond the capabilities of today's displays, so you don't have the option of using a display which is 4x brighter than the content is designed for.

Since you can't push the brightness as high, you can end up with SDR content that is much brighter on average than HDR content.

It's completely inaccurate and not how it is meant to look at all, but the option is there.
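The brightness arithmetic above can be sketched the same way. This assumes SDR is a relative signal with a 2.4 gamma that scales with whatever peak the backlight is set to, and that HDR is an absolute signal that the display simply clamps at its peak (real sets tone-map more gracefully than a hard clamp). The 1000-nit highlight is an illustrative value, not a figure from any particular film.

```python
SDR_REFERENCE_NITS = 100.0  # level SDR is intended to be viewed at


def sdr_output_nits(signal, display_peak_nits, gamma=2.4):
    """SDR is relative: a 0..1 signal scales with the peak the backlight is set to."""
    return display_peak_nits * (signal ** gamma)


def hdr_output_nits(mastered_nits, display_peak_nits):
    """HDR is absolute: a pixel mastered at N nits is shown at N nits, up to the panel limit."""
    return min(mastered_nits, display_peak_nits)


# SDR white at the intended level vs. on a display pushed to 400 nits.
sdr_white_intended = sdr_output_nits(1.0, SDR_REFERENCE_NITS)
sdr_white_pushed = sdr_output_nits(1.0, 400.0)
ratio = sdr_white_pushed / sdr_white_intended

# HDR on the same 400-nit display: mid-tones stay put, highlights hit the ceiling.
hdr_midtone = hdr_output_nits(100.0, 400.0)
hdr_highlight = hdr_output_nits(1000.0, 400.0)

print("SDR pushed vs intended:", ratio)
print("HDR mid-tone:", hdr_midtone, "HDR highlight:", hdr_highlight)
```

Turning up the backlight lifts every part of the SDR image by the same factor, while the HDR mid-tones stay where they were mastered. That is why the pushed SDR picture can look brighter on average.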

So if you're looking for the brightest, most saturated, and most vivid picture that you can get, you probably want to stick to SDR sources on an HDR display.

Only an HDR display will let you push these things to ludicrous levels without running into issues like clipping, so you are still getting value out of having an HDR display. It's just not what was intended.