Yes, and it's very easy to see why when you realize what's happening:

SDR is mastered to be viewed at 100 nits brightness.

HDR TVs can now push SDR to 500-600 nits, which is 5-6x the intended brightness.

Meanwhile, a scene that is mastered at 100 nits in HDR will be displayed at 100 nits whether your TV is capable of 1000, 2000, or 4000 nits.

So a TV displaying SDR out of spec is going to look brighter and higher in contrast than HDR.
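To put rough numbers on that, here's a minimal Python sketch. It assumes HDR uses the PQ (SMPTE ST 2084) curve, which encodes absolute nits, and that SDR follows a plain 2.4 power-law gamma scaled to whatever white level the TV is set to. The function names and the hard clip at the TV's peak are my own simplifications, not how any particular TV works.

```python
# SMPTE ST 2084 (PQ) constants.
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_encode(nits):
    """Absolute luminance in nits -> PQ signal in [0, 1]."""
    y = max(nits, 0.0) / 10000.0
    return ((C1 + C2 * y ** M1) / (1 + C3 * y ** M1)) ** M2


def pq_decode(signal):
    """PQ signal in [0, 1] -> absolute luminance in nits."""
    e = signal ** (1 / M2)
    return 10000.0 * (max(e - C1, 0.0) / (C2 - C3 * e)) ** (1 / M1)


def hdr_displayed_nits(signal, tv_peak_nits):
    # Assumption: anything above the TV's peak is simply clipped.
    return min(pq_decode(signal), tv_peak_nits)


def sdr_displayed_nits(signal, tv_white_nits, gamma=2.4):
    # Assumption: pure power-law gamma; SDR white lands wherever the TV puts it.
    return tv_white_nits * signal ** gamma


# A scene mastered at 100 nits in HDR, shown on three different TVs.
hdr_signal = pq_encode(100.0)
for peak in (1000, 2000, 4000):
    print(f"HDR on a {peak}-nit TV: {hdr_displayed_nits(hdr_signal, peak):.1f} nits")

# SDR reference white (signal 1.0) at the in-spec and boosted settings.
for white in (100, 600):
    print(f"SDR white with the TV at {white} nits: {sdr_displayed_nits(1.0, white):.1f} nits")
```

The HDR value stays at about 100 nits on every TV because the signal carries an absolute level, while SDR white simply scales with however bright the TV is set.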

Some TVs now have options to brighten the HDR picture by going out of spec, but this is achieved by… compressing the dynamic range. So as you brighten the HDR picture, you are making it closer and closer to that boosted SDR one.

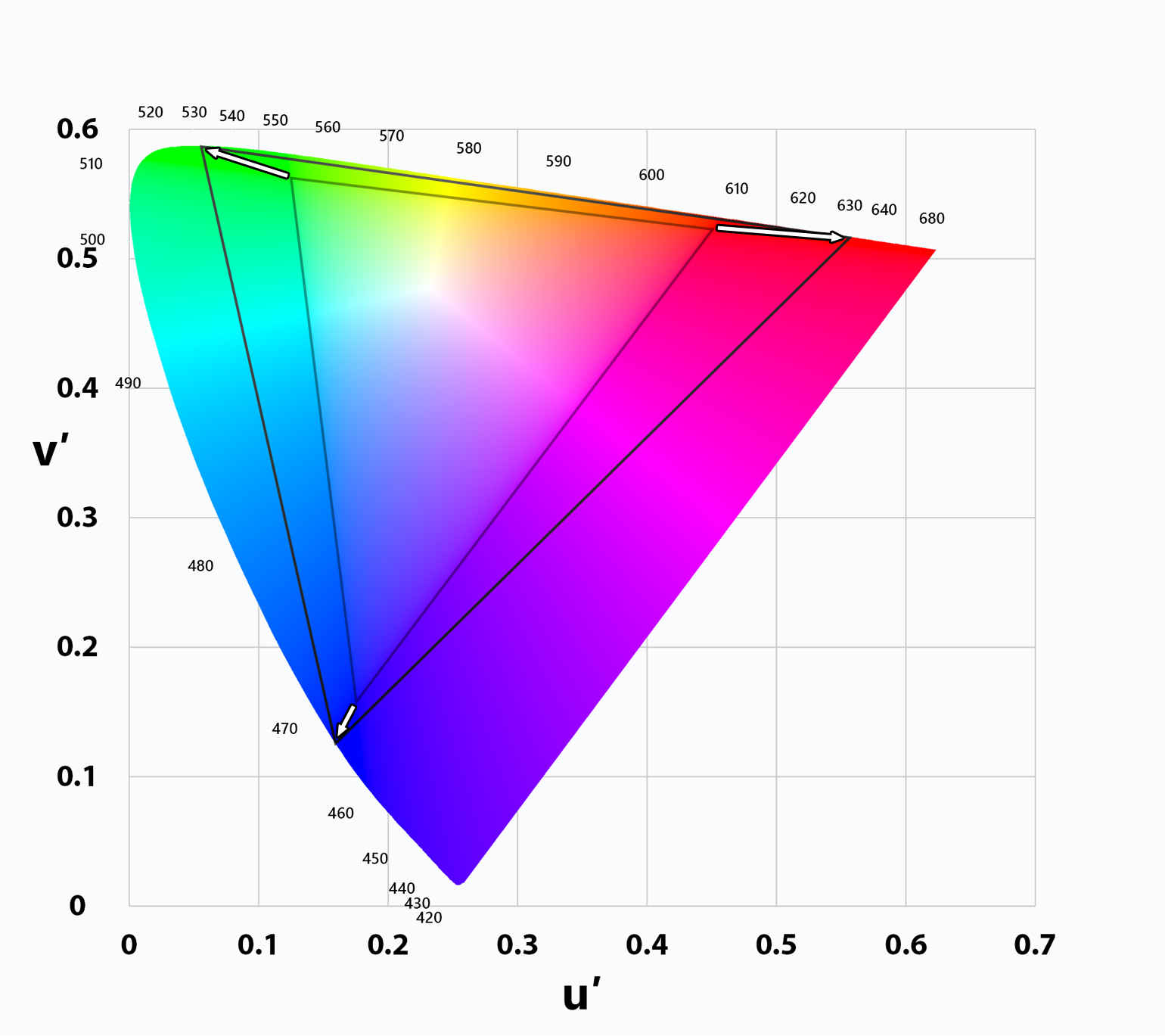
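The range compression mentioned above can be shown with a deliberately crude model: multiply every luminance by a gain and clip at the TV's peak. Real TVs use a smoother roll-off, so treat the function and the numbers here as illustrative assumptions only.

```python
def brighten_hdr(nits, gain, tv_peak_nits):
    # Hypothetical "HDR brightness boost": scale, then clip at the panel's peak.
    return min(nits * gain, tv_peak_nits)


TV_PEAK = 1000.0
midtone, highlight = 100.0, 1000.0  # as mastered, in nits

for gain in (1.0, 2.0, 3.0):
    mid = brighten_hdr(midtone, gain, TV_PEAK)
    high = brighten_hdr(highlight, gain, TV_PEAK)
    print(f"gain {gain}: mid-tone {mid:.0f} nits, highlight {high:.0f} nits, "
          f"ratio {high / mid:.2f}:1")
```

The highlight is already at the panel's limit, so only the mid-tones rise and the highlight-to-mid-tone ratio shrinks as the gain goes up.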

It's the same thing for color gamut.

SDR is mastered to Rec. 709 colors, so it's not possible for an SDR source to display reds, greens, blues etc. that are as bright and saturated as HDR.

But most TVs let you stretch those Rec. 709 colors to the Display-P3 gamut, which over-saturates the picture.

A solid, completely saturated red is going to be the same in HDR or SDR (you can't go beyond the TV's capabilities), but while HDR will be displaying accurate color with all its subtleties, SDR stretched to P3 will be displaying an image that is more saturated and vivid than HDR.
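Here's a rough Python sketch of the difference between mapping Rec. 709 colors accurately into a P3 panel and just stretching them. The matrix is the commonly quoted, rounded linear-light Rec. 709 to P3 (D65) conversion, so treat the exact values as approximate. Modelling the "stretch" as sending the same numbers straight to the P3 primaries is my assumption of the simplest case, and the saturation measure is a basic HSV-style one.

```python
# Approximate linear-light Rec. 709 -> P3 (D65) matrix, rounded values.
REC709_TO_P3 = [
    [0.8225, 0.1774, 0.0000],
    [0.0332, 0.9669, 0.0000],
    [0.0171, 0.0724, 0.9108],
]


def accurate_in_p3(rgb709):
    """Convert linear Rec. 709 RGB so it shows the same color on a P3 panel."""
    return tuple(sum(m * c for m, c in zip(row, rgb709)) for row in REC709_TO_P3)


def stretched_in_p3(rgb709):
    """Assumed 'stretch': the same numbers drive the wider P3 primaries directly."""
    return tuple(rgb709)


def saturation(rgb):
    """HSV-style saturation of the panel drive values (0 = gray, 1 = pure primary)."""
    hi, lo = max(rgb), min(rgb)
    return 0.0 if hi == 0 else (hi - lo) / hi


muted_red = (0.8, 0.4, 0.4)  # linear Rec. 709, an arbitrary example color
for name, convert in (("accurate", accurate_in_p3), ("stretched", stretched_in_p3)):
    drive = convert(muted_red)
    print(name, tuple(round(v, 3) for v in drive), "saturation", round(saturation(drive), 3))
```

The stretched version drives the panel further from gray than the accurate one, which is the over-saturation described above.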

EDIT: An example I forgot about - though not the best showcase for HDR.

Mad Max: Fury Road in calibrated SDR on the left, and HDR on the right.

Color is slightly different (deeper and more natural reds) in HDR, but the main difference is that the bright areas of the picture (above 100 nits) have detail in them instead of washing out to a flat white sky.

You can actually see the sun and the lens flare like you would in real life, though the rest of the picture looks quite similar because it's within the 100-nit range that SDR can display.

This is because HDR can be used to create a subtly more natural image rather than always having to go significantly brighter or more vivid, because that is not always the director's intent.

And this is Fury Road in SDR with the brightness cranked vs HDR.

The boosted SDR is a much brighter and more vivid picture than HDR, but there's still no detail in the sky at all. It's flat and featureless, and colors in the image look unnatural.

But this is how many people watch SDR, and then wonder why HDR looks "worse" or dimmer.

The difference is pretty clear in this example, but viewing SDR only somewhat out of spec is why some people "can't tell the difference" when they switch HDR on.