The main usage of the breakout box iirc was 3d audio for VR, considering PS5 has a 3D audio chip inside, yea i will say no breakout box.Is it still expected, that Sony will incorporate the VR breakout box into the PS5?

-

Ever wanted an RSS feed of all your favorite gaming news sites? Go check out our new Gaming Headlines feed! Read more about it here.

Next-gen PS5 and next Xbox speculation launch thread - MY ANACONDA DON'T WANT NONE

- Thread starter Phoenix Splash

- Start date

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

- Status

- Not open for further replies.

Threadmarks

View all 14 threadmarks

Reader mode

Reader mode

Recent threadmarks

[USE IN CASE OF NO LEAKS BEFORE E3!!] AdoredTV - Analysing Navi part 2 Teraflop Peak Performance Lookup Table Computex: AMD's keynote May 27th Memory bandwidth calculations summary Sony and Microsoft collabotaring for cloud gaming streaming AMD at Computex May 27th livestream time Submit vote for Next thread title and we need a new OPIs it still expected, that Sony will incorporate the VR breakout box into the PS5?

After what happened to Xbox with Kinect, i doubt that they would do that. Do we have any idea on what the costs would be?

Not much actually, the breakout box doesn't really do anything special. The components aren't super expensive, they just were not built-in to PS4.After what happened to Xbox with Kinect, i doubt that they would do that. Do we have any idea on what the costs would be?

I can see backward compatibility with PSVR via breakout box, but PSVR2 would just plug in via USB-C or something.

I know Sony have confirmed that they won't be releasing this FY But do we have any information from MS about their release? Is 2019 still possible for them? I know it's extremely unlikely as we'd expect more leaks etc if it was that close but wondering if they had hinted towards a release window. If they launch prior to ps5 then they would certainly be the most powerful system as they'd hit the market well before ps5.

Not much actually, the breakout box doesn't really do anything special. The components aren't super expensive, they just were not built-in to PS4.

So what kind of size, power envelope and price ramifications would you expect in case they integrate everything?

If MS are been launching this year we would have heard it. Either from developers, production etc.I know Sony have confirmed that they won't be releasing this FY But do we have any information from MS about their release? Is 2019 still possible for them? I know it's extremely unlikely as we'd expect more leaks etc if it was that close but wondering if they had hinted towards a release window. If they launch prior to ps5 then they would certainly be the most powerful system as they'd hit the market well before ps5.

I dont expect much of an effect at all.So what kind of size, power envelope and price ramifications would you expect in case they integrate everything?

Damn bro, that's some bitter hatred right there. Anyway you do you, long as you're happy.How? &#>= Microsoft when it comes to console. Sony can fall and I will just retrogame like I do now. You couldn't pay me any money to game on the Xbox.

Nah, we have no info like that. It is extremely unlikely to happen.I know Sony have confirmed that they won't be releasing this FY But do we have any information from MS about their release? Is 2019 still possible for them? I know it's extremely unlikely as we'd expect more leaks etc if it was that close but wondering if they had hinted towards a release window. If they launch prior to ps5 then they would certainly be the most powerful system as they'd hit the market well before ps5.

Nah, we have no info like that. It is extremely unlikely to happen.

Yeah 2019 is almost certainly no, but I wonder if they could make a March/April release happen, would be interesting for them to have a 6 month headstart.

So back to 8TF again?

The PS4 actually had a weaker GPU than the 1.97TF 260X which came out 3 months before the PS4 and was 115W TDP, priced 139$ at launch. We also have to remember that this price represents a profit margin, the whole PCB and 2GB of GDDR5.It will depend, but the ps4 had a $300 desktop equivalent gpu.

We know for sure Sony is using Navi, so a Navi card with about 185w power consumption is the max we can expect for PS5.

I think you are taking the NVIDIA paper a bit out of context, they are talking about GPUs with orders of magnitude the bandwidth of the next-gen consoles.https://research.nvidia.com/publication/2017-10_Fine-Grained-DRAM:-Energy-Efficient

EDIT: Correct link

I find the study and Nvidia estimate than DRAM consumption problem is the biggest problem to solve for future of GPU.

Future GPUs and other high-performance throughput processors will require multiple TB/s of bandwidth to DRAM.

Last edited:

Nah, we have no info like that. It is extremely unlikely to happen.

I would not be surprised if they Launch Spring 2020.

The PS4 actually had a weaker GPU than the 1.97TF 260X that was 115W TDP and only 139$ at launch (and remember that this price represents a profit margin, the whole PCB and 2GB of GDDR5).

I think you are taking the NVIDIA paper a bit out of context, they are talking about GPUs with orders of magnitude the bandwidth of the next-gen consoles.

Again read my answer and you made yourself the calculation 16GB GDDR 6 with 256 Gbit/s and 24 GDDR6 384 Gbit/s are already huge problem for power consumption on console scale...

8 Gb of GDDR5 was already a huge part of PS4 TDP.

borderline 10.

Anyway on the breakout box. Think it's inside. Well technically.

They could just use embed a multipurpose 2nd processor to deal with interpolating images for VR, amongst other things.

But RAM TDP is a cost. It reduces what you can do with other element of the console.

On PS4, 8Gb of GDDR5 was 40 to 50 watts. 16 Gb of GDDR6 will be more than this probably between 60 to 70 watts and 24 Gb of GDDR6 on a 384 bits would be 90 to 100 watts. This is crazy.

It will limit the potential of growth of the GPU. I will try to find it but I read a Nvidia study about a new type of RAM coming when HBM will reach consumption limit. It was not something recent because it compares HBM against GDDR5 but they said the main challenge for GPU is the RAM power consumption.

8GB HBM 2 ( 2048 bit bus - +400 GB of bandwidth ) in Radeon RX VEGA 56 card consumes around 30 watts.

Not necessarily - if RT is only used for audio that doesn't help visuals right?

To clarify, I'm suggesting there are other uses for RT outside real-time graphics and perhaps the NG consoles will utilise RT predominantly for these cases.

Sure you can use RT for sound but when it comes to saving development time the big aspect about RT is visuals .

5.5 pJ/bit for 800GB/s is ~35.2W. That doesn't include overhead power, but 100W isn't the right ballpark.But it compare HBM and GDDR within a constraint enviromment in term of TDP and cost like in console, this is already a problem and it begins current gen. 8 GDDR5 in PS4 is not a tiny part of the console consumption. If we could stay with 8Gb of RAM, it woud be better but we need to go to 16 GB of GDDR6 with 256 bits bus or 24 Gb of RAM with a 384 bits bus. The difference between current gen and next gen is HBCC.

EDIT: the micron formula find by anexanhume show bus too play a part in TDP and DrKeo calculation he finds nearly nearly 100 watts for 24 Gb of GDDR6 with a 384 bits.

Post it here lolI've come across something, patent wise, that might be relevant to PS5's SSD solution & customisations. It's quite detailed. If that's interesting (?) I'll post about it in another thread.

It's quite a lot, and dunno if everyone's interested in the nitty gritty. But here you go:

I found several Japanese SIE patents from Hideyuki Saito along with a single (combined?) US application that appear to be relevant.

The patents were filed across 2015 and 2016.

Note: This is an illustrative embodiment in a patent application. i.e. Maybe parts of it will make it into a product, maybe all of it, maybe none of it.

But it perhaps gives an idea of what Sony has been researching. And does seem in line with what Cerny talked about in terms of customisations across the stack to optimise performance.

http://www.freepatentsonline.com/y2017/0097897.html

There's quite a lot going on, but to try and break it down:

It talks about the limitations of simply using a SSD 'as is' in a games system, and a set of hardware and software stack changes to improve performance.

Basically, 'as is', an OS uses a virtual file system, designed to virtualise a host of different I/O devices with different characteristics. Various tasks of this file system typically run on the CPU - e.g. traversing file metadata, data tamper checks, data decryption, data decompression. This processing, and interruptions on the CPU, can become a bottleneck to data transfer rates from an SSD, particularly in certain contexts e.g. opening a large number of small files.

At a lower level, SSDs typically employ a data block size aimed at generic use. They distribute blocks of data around the NAND memory to distribute wear. In order to find a file, the memory controller in the SSD has to translate a request to the physical addresses of the data blocks using a look-up table. In a regular SSD, the typical data block size might require a look-up table 1GB in size for a 1TB SSD. A SSD might typically use DRAM to cache that lookup table - so the memory controller consults DRAM before being able to retrieve the data. The patent describes this as another potential bottleneck.

Here are the hardware changes the patent proposes vs a 'typical' SSD system:

- SRAM instead of DRAM inside the SSD for lower latency and higher throughput access between the flash memory controller and the address lookup data. The patent proposes using a coarser granularity of data access for data that is written once, and not re-written - e.g. game install data. This larger block size can allow for address lookup tables as small as 32KB, instead of 1GB. Data read by the memory controller can also be buffered in SRAM for ECC checks instead of DRAM (because of changes made further up the stack, described later). The patent also notes that by ditching DRAM, reduced complexity and cost may be possible.

- The SSD's read unit is 'expanded and unified' for efficient read operations.

- A secondary CPU, a DMAC, and a hardware accelerator for decoding, tamper checking and decompression.

- The main CPU, the secondary CPU, the system memory controller and the IO bus are connected by a coherent bus. The patent notes that the secondary CPU can be different in instruction set etc. from the main CPU, as long as they use the same page size and are connected by a coherent bus.

- The hardware accelerator and the IO controller are connected to the IO bus.

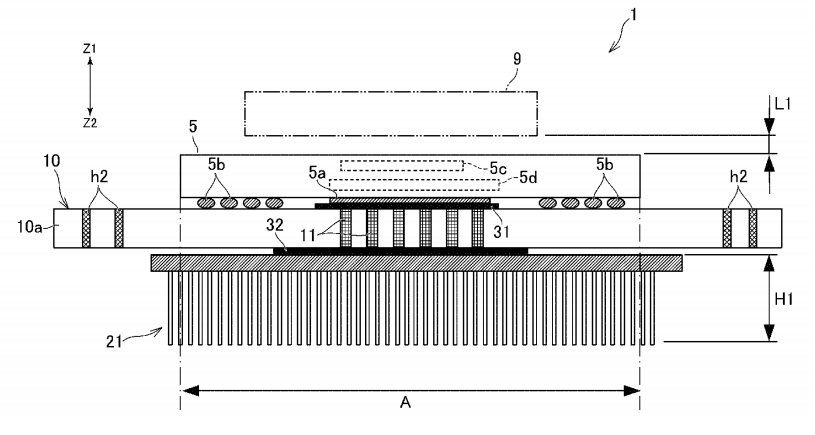

An illustrative diagram of the system:

At a software level, the system adds a new file system, the 'File Archive API', designed primarily for write-once data like game installs. Unlike a more generic virtual file system, it's optimised for NAND data access. It sits at the interface between the application and the NAND drivers, and the hardware accelerator drivers.

The secondary CPU handles a priority on access to the SSD. When read requests are made through the File Archive API, all other read and write requests can be prohibited to maximise read throughput.

When a read request is made by the main CPU, it sends it to the secondary CPU, which splits the request into a larger number of small data accesses. It does this for two reasons - to maximise parallel use of the NAND devices and channels (the 'expanded read unit'), and to make blocks small enough to be buffered and checked inside the SSD SRAM. The metadata the secondary CPU needs to traverse is much simpler (and thus faster to process) than under a typical virtual file system.

The NAND memory controller can be flexible about what granularity of data it uses - for data requests send through the File Archive API, it uses granularities that allow the address lookup table to be stored entirely in SRAM for minimal bottlenecking. Other granularities can be used for data that needs to be rewritten more often - user save data for example. In these cases, the SRAM partially caches the lookup tables.

When the SSD has checked its retrieved data, it's sent from SSD SRAM to kernel memory in the system RAM. The hardware accelerator then uses a DMAC to read that data, do its processing, and then write it back to user memory in system RAM. The coordination of this happens with signals between the components, and not involving the main CPU. The main CPU is then finally signalled when data is ready, but is uninvolved until that point.

A diagram illustrating data flow:

Interestingly, for a patent, it describes in some detail the processing targets required of these various components in order to meet certain data transfer rates - what you would need in terms of timings from each of the secondary CPU, the memory controller and the hardware accelerator in order for them not to be a bottleneck on the NAND data speeds:

Though I wouldn't read too much into this, in most examples it talks about what you would need to support a end-to-end transfer rate of 10GB/s.

The patent is also silent on what exactly the IO bus would be - that obviously be a key bottleneck itself on transfer rates out of the NAND devices.

Once again, this is one described embodiment. Not necessarily what the PS5 solution will look exactly like. But it is an idea of what Sony's been researching in how to customise a SSD and software stack for faster read throughput for installed game data.

Last edited:

5.5 pJ/bit for 800GB/s is ~35.2W. That doesn't include overhead power, but 100W isn't the right ballpark.

How much do you estimate the ballpark for 16Gb of GDDR6 256 Gbit/s and 24Gb of GDDR6 of 384 bits/s. And with the memory controller too.

I've come across something, patent wise, that might be relevant to PS5's SSD solution & customisations. It's quite detailed. If that's interesting (?) I'll post about it in another thread.

You can post here but if you want to make a new thread sure go ahead.

This is the best explanation I have heard in the last few pages of the Next Xbox Vega vs PS5 Navi situation. Thank youNavi will be more efficient, giving more graphical power for the same number of watts. But Vega will apparently continue as AMD's flagship gaming card, with Navi launching as a medium tier. (I don't know specifically why that is, but multiple sources say so.) Microsoft want to be undisputed performance leader, so that might make Vega attractive to them. Also, Vega features effectual FP64 calculation, which is rare for gaming but useful for machine learning AI and other advanced tasks. And Microsoft have officially said they want to use the same hardware in the next Xbox and their gaming servers.

It's important to note that this imagined scenario where Sony use Navi and Microsoft use Vega wouldn't have an inevitable winner. It could go all sorts of ways. Maybe Microsoft does get the highest TF card, and their Xbox One X experience lets them cool it effectively without much extra cost. There's rumors that Navi isn't as effective or power-thrifty as meant to be, and maybe PS5 could end up needing extra cooling redesign and downclocks at the last minute, which make it just as expensive as Xbox but notably less powerful.

Or, maybe Vega has higher TF but a lack of efficiency, and the usage of chip space for new server-specific features makes the next Xbox less performant than expected. Meanwhile, a careful Navi design with smartly customized gaming-relevant features allows PS5 to produce graphics basically indistinguishable from Anaconda, but at a much lower price.

In other words, neither choice is necessarily bad. Both have potential drawbacks, but both also have potential advantages for what the different platforms hope to accomplish. And no matter what they design for, some tech bets simply don't pan out.

yeah, having a sub CPU isn't something a far of a reach for the playstation. PS4 had an ARM processor.It's quite a lot, and dunno if everyone's interested in the nitty gritty. But here you go:

I found several Japanese SIE patents from Saito Hideyuki along with a single (combined?) US application that appear to be relevant.

The patents were filed across 2015 and 2016.

Note: This is an illustrative embodiment in a patent application. i.e. Maybe parts of it will make it into a product, maybe all of it, maybe none of it.

But it perhaps gives an idea of what Sony has been researching. And does seem in line with what Cerny talked about in terms of customisations across the stack to optimise performance.

http://www.freepatentsonline.com/y2017/0097897.html

There's quite a lot going on, but to try and break it down:

It talks about the limitations of simply using a SSD 'as is' in a games system, and a set of hardware and software stack changes to improve performance.

Basically, 'as is', an OS uses a virtual file system, designed to virtualise a host of different I/O devices with different characteristics. Various tasks of this file system typically run on the CPU - e.g. traversing file metadata, data tamper checks, data decryption, data decompression. This processing, and interruptions on the CPU, can become a bottleneck to data transfer rates from an SSD, particularly in certain contexts e.g. opening a large number of small files.

At a lower level, SSDs typically employ a data block size aimed at generic use. They distribute blocks of data around the NAND memory to distribute wear. In order to find a file, the memory controller in the SSD has to translate a request to the physical addresses of the data blocks using a look-up table. In a regular SSD, the typical data block size might require a look-up table 1GB in size for a 1TB SSD. A SSD might typically use DRAM to cache that lookup table - so the memory controller consults DRAM before being able to retrieve the data. The patent describes this as another potential bottleneck.

Here are the hardware changes the patent proposes vs a 'typical' SSD system:

- SRAM instead of DRAM inside the SSD for lower latency and higher throughput access between the flash memory controller and the address lookup data. The patent proposes using a coarser granularity of data access for data that is written once, and not re-written - e.g. game install data. This larger block size can allow for address lookup tables as small as 32KB, instead of 1GB. Data read by the memory controller can also be buffered in SRAM for ECC checks instead of DRAM (because of changes made further up the stack, described later). The patent also notes that by ditching DRAM, reduced complexity and cost may be possible.

- The SSD's read unit is 'expanded and unified' for efficient read operations.

- A secondary CPU, a DMAC, and a hardware accelerator for decoding, tamper checking and decompression.

- The main CPU, the secondary CPU, the system memory controller and the IO bus are connected by a coherent bus. The patent notes that the secondary CPU can be different in instruction set etc. from the main CPU, as long as they use the same page size and are connected by a coherent bus.

- The hardware accelerator and the IO controller are connected to the IO bus.

An illustrative diagram of the system:

At a software level, the system adds a new file system, the 'File Archive API', designed primarily for write-once data like game installs. Unlike a more generic virtual file system, it's optimised for NAND data access. It sits at the interface between the application and the NAND drivers, and the hardware accelerator drivers.

The secondary CPU handles a priority on access to the SSD. When read requests are made through the File Archive API, all other read and write requests can be prohibited to maximise read throughput.

When a read request is made by the main CPU, it sends it to the secondary CPU, which splits the request into a larger number of small data accesses. It does this for two reasons - to maximise parallel use of the NAND devices and channels (the 'expanded read unit'), and to make blocks small enough to be buffered and checked inside the SSD SRAM. The metadata the secondary CPU needs to traverse is much simpler (and thus faster to process) than under a typical virtual file system.

The NAND memory controller can be flexible about what granularity of data it uses - for data requests send through the File Archive API, it uses granularities that allow the address lookup table to be stored entirely in SRAM for minimal bottlenecking. Other granularities can be used for data that needs to be rewritten more often - user save data for example. In these cases, the SRAM partially caches the lookup tables.

When the SSD has checked its retrieved data, it's sent from SSD SRAM to kernel memory in the system RAM. The hardware accelerator then uses a DMAC to read that data, do its processing, and then write it back to user memory in system RAM. The coordination of this happens with signals between the components, and not involving the main CPU. The main CPU is then finally signalled when data is ready, but is uninvolved until that point.

A diagram illustrating data flow:

Interestingly, for a patent, it describes in some detail the processing times required of these various components in order to meet certain data transfer targets - what you would need in terms of timings from each of the secondary CPU, the memory controller and the hardware accelerator in order for them not to be a bottleneck on the NAND data speeds. Though I wouldn't read too much into this, it lays out a table of targets from 1GB/s to 20GB/s. In most examples it talks about what you would need to support a end-to-end transfer rate of 10GB/s.

The patent is also silent on what exactly the IO bus would be - that obviously be a key bottleneck itself on transfer rates out of the NAND devices.

Once again, this is one described embodiment. Not necessarily what the PS5 solution will look exactly like. But it is an idea of what Sony's been researching in how to customise a SSD and software stack for faster read throughput for installed game data.

Which was what I was thinking, what if the VR breakout box and this for the SSD can be done on one multipurpose processor.

OkayI never and will never own any Microsoft consoles. They don't rock with your boy. Ever since the original I was like no thanks.

Conservatively I would say 35W and 50W, respectively.How much do you estimate the ballpark for 16Gb of GDDR6 256 Gbit/s and 24Gb of GDDR6 of 384 bits/s. And with the memory controller too.

This is the best explanation I have heard in the last few pages of the Next Xbox Vega vs PS5 Navi situation. Thank you

The only way Vega is a superior solution is if Navi missed its design targets or MS insisted on having something more capable of double precision and AI for Azure, with Navi being a pure gaming GPU.

No interest of HBM 2 and why people keep saying HBM2 is more interesting than GDDR6? Is the Rambus engineer nuts? For low level HBM2 they have less perfomance per watts than GDDR6.

8gb 2048 8GB and 16GB 4096b hbm2 ?Conservatively I would say 35W and 50W, respectively.

The only way Vega is a superior solution is if Navi missed its design targets or MS insisted on having something more capable of double precision and AI for Azure, with Navi being a pure gaming GPU.

OR. Wait for games that I want that are not on PC and then the PS5 while pouting in the darn store.

That too I guess.

This is certainly interesting! Considering how detailed it is i think it will be worth it to create a thread on thisIt's quite a lot, and dunno if everyone's interested in the nitty gritty. But here you go:

I found several Japanese SIE patents from Hideyuki Saito along with a single (combined?) US application that appear to be relevant.

The patents were filed across 2015 and 2016.

Note: This is an illustrative embodiment in a patent application. i.e. Maybe parts of it will make it into a product, maybe all of it, maybe none of it.

But it perhaps gives an idea of what Sony has been researching. And does seem in line with what Cerny talked about in terms of customisations across the stack to optimise performance.

http://www.freepatentsonline.com/y2017/0097897.html

There's quite a lot going on, but to try and break it down:

It talks about the limitations of simply using a SSD 'as is' in a games system, and a set of hardware and software stack changes to improve performance.

Basically, 'as is', an OS uses a virtual file system, designed to virtualise a host of different I/O devices with different characteristics. Various tasks of this file system typically run on the CPU - e.g. traversing file metadata, data tamper checks, data decryption, data decompression. This processing, and interruptions on the CPU, can become a bottleneck to data transfer rates from an SSD, particularly in certain contexts e.g. opening a large number of small files.

At a lower level, SSDs typically employ a data block size aimed at generic use. They distribute blocks of data around the NAND memory to distribute wear. In order to find a file, the memory controller in the SSD has to translate a request to the physical addresses of the data blocks using a look-up table. In a regular SSD, the typical data block size might require a look-up table 1GB in size for a 1TB SSD. A SSD might typically use DRAM to cache that lookup table - so the memory controller consults DRAM before being able to retrieve the data. The patent describes this as another potential bottleneck.

Here are the hardware changes the patent proposes vs a 'typical' SSD system:

- SRAM instead of DRAM inside the SSD for lower latency and higher throughput access between the flash memory controller and the address lookup data. The patent proposes using a coarser granularity of data access for data that is written once, and not re-written - e.g. game install data. This larger block size can allow for address lookup tables as small as 32KB, instead of 1GB. Data read by the memory controller can also be buffered in SRAM for ECC checks instead of DRAM (because of changes made further up the stack, described later). The patent also notes that by ditching DRAM, reduced complexity and cost may be possible.

- The SSD's read unit is 'expanded and unified' for efficient read operations.

- A secondary CPU, a DMAC, and a hardware accelerator for decoding, tamper checking and decompression.

- The main CPU, the secondary CPU, the system memory controller and the IO bus are connected by a coherent bus. The patent notes that the secondary CPU can be different in instruction set etc. from the main CPU, as long as they use the same page size and are connected by a coherent bus.

- The hardware accelerator and the IO controller are connected to the IO bus.

An illustrative diagram of the system:

At a software level, the system adds a new file system, the 'File Archive API', designed primarily for write-once data like game installs. Unlike a more generic virtual file system, it's optimised for NAND data access. It sits at the interface between the application and the NAND drivers, and the hardware accelerator drivers.

The secondary CPU handles a priority on access to the SSD. When read requests are made through the File Archive API, all other read and write requests can be prohibited to maximise read throughput.

When a read request is made by the main CPU, it sends it to the secondary CPU, which splits the request into a larger number of small data accesses. It does this for two reasons - to maximise parallel use of the NAND devices and channels (the 'expanded read unit'), and to make blocks small enough to be buffered and checked inside the SSD SRAM. The metadata the secondary CPU needs to traverse is much simpler (and thus faster to process) than under a typical virtual file system.

The NAND memory controller can be flexible about what granularity of data it uses - for data requests send through the File Archive API, it uses granularities that allow the address lookup table to be stored entirely in SRAM for minimal bottlenecking. Other granularities can be used for data that needs to be rewritten more often - user save data for example. In these cases, the SRAM partially caches the lookup tables.

When the SSD has checked its retrieved data, it's sent from SSD SRAM to kernel memory in the system RAM. The hardware accelerator then uses a DMAC to read that data, do its processing, and then write it back to user memory in system RAM. The coordination of this happens with signals between the components, and not involving the main CPU. The main CPU is then finally signalled when data is ready, but is uninvolved until that point.

A diagram illustrating data flow:

Interestingly, for a patent, it describes in some detail the processing times required of these various components in order to meet certain data transfer targets - what you would need in terms of timings from each of the secondary CPU, the memory controller and the hardware accelerator in order for them not to be a bottleneck on the NAND data speeds. Though I wouldn't read too much into this, it lays out a table of targets from 1GB/s to 20GB/s. In most examples it talks about what you would need to support a end-to-end transfer rate of 10GB/s.

The patent is also silent on what exactly the IO bus would be - that obviously be a key bottleneck itself on transfer rates out of the NAND devices.

Once again, this is one described embodiment. Not necessarily what the PS5 solution will look exactly like. But it is an idea of what Sony's been researching in how to customise a SSD and software stack for faster read throughput for installed game data.

Also thank you for the great summary of the patent!

Told ya lolNo interest of HBM 2 and why people keep saying HBM2 is more interesting than GDDR6? Is the Rambus engineer nuts? For low level HBM2 they have less perfomance per watts than GDDR6.

I second this.This is certainly interesting! Considering how detailed it is i think it will be worth it to create a thread on this

Also thank you for the great summary of the patent!

Something seems strange it did not correspond to the JEDEC 10% less than GDDR6.

anexanhume and how much for 8gb of GDDR6? But without true mesurement I will believe it.

Am I the only one who haven't bought games for say the last 10 months, waiting for the PS5?

Nope, I haven't picked up Spider-Man, Days Gone yet. Nor will I buy Ghosts of Tsushima, Death Stranding, The Last Of Us Pt II because I will wait to see if there are PS5 versions. It's unlikely the PS store will see another penny from me until the PS5 launches now. I've got a massive backlog on PC which should keep me occupied for the next 18 months (and I'm hoping MS launch Game Pass for PC at E3).

What? Maybe on the very first model using 16 GDDR5 chips in the worst case scenario, but not for a normal RAM configuration.But RAM TDP is a cost. It reduces what you can do with other element of the console.

On PS4, 8Gb of GDDR5 was 40 to 50 watts. 16 Gb of GDDR6 will be more than this probably between 60 to 70 watts and 24 Gb of GDDR6 on a 384 bits would be 90 to 100 watts. This is crazy.

It will limit the potential of growth of the GPU. I will try to find it but I read a Nvidia study about a new type of RAM coming when HBM will reach consumption limit. It was not something recent because it compares HBM against GDDR5 but they said the main challenge for GPU is the RAM power consumption.

24GB GDDR6 in non clamshell will not be close to 100 watts.

Half of that is a better estimate.

https://www.anandtech.com/show/13832/amd-radeon-vii-high-end-7nm-february-7th-for-699

AMD are the stupidiest people in the world paid 320 dollars for HBM2 16 GBits GDDR6 with bus 256 bits or a custom bus would have done better.... Only 36 Gbit/s between the two solutions and 5 watts... So stupid...

AMD are the stupidiest people in the world paid 320 dollars for HBM2 16 GBits GDDR6 with bus 256 bits or a custom bus would have done better.... Only 36 Gbit/s between the two solutions and 5 watts... So stupid...

Last edited:

I think that it will be easier to calculAgain read my answer and you made yourself the calculation 16GB GDDR 6 with 256 Gbit/s and 24 GDDR6 384 Gbit/s are already huge problem for power consumption on console scale...

8 Gb of GDDR5 was already a huge part of PS4 TDP.

If the X has 12 GDDR5 chips in a 384-bit setup, shouldn't GDDR6 with the same setup actually draw less power than the X's setup? Every article I've read about GDDR6 claimed that it draws 15% less power than the same GDDR5 setup. What always felt ambiguous to me is if the 15% less power consumption number is before or after GDDR6 is ramped up to 14 or 16 Gb/s. From what I know about GDDR6, it doubles its' bandwidth mainly because of QDR, that's how a 3000MHz GDDR6 chip is 12Gb/s while a 3000MHz GDDR5 is a 6Gb/s chip.Conservatively I would say 35W and 50W, respectively.

No interest of HBM 2 and why people keep saying HBM2 is more interesting than GDDR6? Is the Rambus engineer nuts? For low level HBM2 they have less perfomance per watts than GDDR6.

The only way Vega is a superior solution is if Navi missed its design targets or MS insisted on having something more capable of double precision and AI for Azure, with Navi being a pure gaming GPU.

So in theory, because the Xbox One X uses 12 GDDR5 chips running at 6.8Gb/s on a 384-bit bus, an Anaconda with 12 GDDR6 chips running at 13.6Gb/s (underclocked 14Gb/s chips?) on the same 384-bit bus should provide 632.4GB/s bandwidth while drawing ~15% less power. I mean, I might have gotten everything totally wrong, but that's what every GDDR6 story that I've ever read has alluded to.

Vega 64 and 56 predate GDDR6 and Radeon VII was a rush job from a server card, I don't think that we've seen a real AMD card in the market while GDDR6 was available. Navi is rumored to use GDDR6, not HBM. In Vega 56 and 64 it made sense, they had to go above 400GB/s and no way in hell that you can get there with GDDR5 with GCN's crazy power draw.https://www.anandtech.com/show/13832/amd-radeon-vii-high-end-7nm-february-7th-for-699

AMD are the stupidiest people in the world paid 320 dollars for HBM2 16 GBits GDDR6 with bus 256 bits or a custom bus would have done better.... Only 36 Gbit/s between the two solutions and 5 watts... So stupid...

Last edited:

I think that it will be easier to calcul

If the X has 12 GDDR5 chips in a 384-bit setup, shouldn't GDDR6 with the same setup actually draw less power than the X's setup? Every article I've read about GDDR6 claimed that it draws 15% less power than the same GDDR5 setup. What always felt ambiguous to me is if the 15% less power consumption number is before or after GDDR6 is ramped up to 14 or 16 Gb/s. From what I know about GDDR6, it doubles its' bandwidth mainly because of QDR, that's how a 3000MHz GDDR6 chip is 12Gb/s while a 3000MHz GDDR5 is a 6Gb/s chip.

So in theory, because the Xbox One X uses 12 GDDR5 chips running at 6.8Gb/s on a 372-bit bus, an Anaconda with 12 GDDR6 chips running at 13.6Gb/s (underclocked 14Gb/s chips?) on the same 372-bit bus should provide 632.4GB/s bandwidth while drawing ~15% less power. I mean, I might have gotten everything totally wrong, but that's what every GDDR6 story that I've ever read has alluded to.

Vega 64 and 56 predate GDDR6 and Radeon VII was a rush job from a server card, I don't think that we've seen a real AMD card in the market while GDDR6 was available. Navi is rumored to use GDDR6, not HBM. In Vega 56 and 64 it made sense, they had to go above 400GB/s and no way in hell that you can get there with GDDR5 with GCN's crazy power draw.

I asked some realworld measurement to a youtuber who can mesure the difference with some card and do the maths. Because it looks like all datacenter engineer and the rambus guy looks stupid.

https://www.anandtech.com/show/13832/amd-radeon-vii-high-end-7nm-february-7th-for-699

AMD are the stupidiest people in the world paid 320 dollars for HBM2 16 GBits GDDR6 with bus 256 bits or a custom bus would have done better.... Only 36 Gbit/s between the two solutions and 5 watts... So stupid...

yes a fortune 500 company are the stupidiest people in the world.

... thats just not how it works.

HBM2 can get to over 1TB/s with less power draw than most GDDR6 setups. If you want to go there with GDDR6, you will need loads of chips and very high clock speed so yeah, HBM2 makes sense for servers. if ~700GB/s is good enough for your product, GDDR is probably a better choice. Two years ago GDDR5's bandwidth was just too low for Vega, but GDDR6 is fast enough for it. I'm guessing that's why Navi will use GDDR6. Someday HMB will be cheaper and the whole GPU indestry will move to HBM but we will probably have to wait until a pretty mature point in HBM3's life cycle to get there so it will take some time.I asked some realworld measurement to a youtuber who can mesure the difference with some card and do the maths. Because it looks like all datacenter engineer and the rambus guy looks stupid.

GDDR6 doubled GDDR5's bandwidth using QDR so it can get double the performance for the same clock speed and 15% less power draw. It doesn't mean that GDDR6 can suddenly go to 1TB/s+ level of bandwidth because its' clocks will probably be too high, but HBM can.

Last edited:

yes a fortune 500 company are the stupidiest people in the world.

... thats just not how it works.

Like I said I am suspicious. I asked realworld mesurement to a guy with a Titan V, a Vega 7 and able to do the measurement for 16 Gb 3200 of DDR4. All with memory controller. I hope the guy will answer he can help me and not give only estimation.

HBM2 can get to over 1TB/s with less power draw than any GDDR6 setup. If you want to go there with GDDR6, you will need loads of chips and very high clock speed so yeah, HBM2 makes sense for servers. if ~700GB/s is good enough for your product, GDDR is probably a better choice. But someday HMB will be cheaper anyway, we will probably have to wait until a pretty mature point in HBM3's life cycle to get there so it will take some time.

Here it is low speed HBM2...

Like I said I am suspicious. I asked realworld mesurement to a guy with a Titan V, a Vega 7 and able to do the measurement for 16 Gb 3200 of DDR4. All with memory controller. I hope the guy will answer he can help me and not give only estimation.

We are derailing the thread.

It doesn't matter, because AMD couldn't have done the Vega VII, like you brilliantly suggested, with 16GB GDDR6, because it was based on the MI60 workstation card which was released earlier and came with HBM2 and wasn't meant for gaming.

Also what has 16GB of 3200 DDR4 to do with any of it? Are you even aware that VRAM and RAM are different?

We are derailing the thread.

It doesn't matter, because AMD couldn't have done the Vega VII, like you brilliantly suggested, with 16GB GDDR6, because it was based on the MI60 workstation card which was released earlier and came with HBM2 and wasn't meant for gaming.

Also what has 16GB of 3200 DDR4 to do with any of it? Are you even aware that VRAM and RAM are different?

It is for one PS5 rumors the 8Gb HBM2 and 16Gb DDR4 with HBCC rumors, compare to 16 Gb/s of GDDR6 256 bits with 14 Gbps module and 24 Gb/s 384 bits with 14 Gbps module.

Let's hope he can get us real numbers.Like I said I am suspicious. I asked realworld mesurement to a guy with a Titan V, a Vega 7 and able to do the measurement for 16 Gb 3200 of DDR4. All with memory controller. I hope the guy will answer he can help me and not give only estimation.

The low-speed HBM2 thing is just a rumor. We have no idea what kind of impact this action will cause and by how much the price will drop if it will happen. Considering that HBM2 is ~x2 the price of GDDR6, the price will have to drop by a huge margin. I'm not saying that it's impossible, but it does seem a bit extreme.

X had 12 GDDR5 chips running @3400MHz. If Anaconda has 12 GDDR6 chips running @3400MHz it will have 24GB with a 652.8GB/s bandwidth while drawing 15% less power than the X. At least that's what the GDDR6 PR claims.It is for one PS5 rumors the 8Gb HBM2 and 16Gb DDR4 with HBCC rumors, compare to 16 Gb/s of GDDR6 256 bits with 14 Gbps module and 24 Gb/s 384 bits with 14 Gbps module.

Threadmarks

View all 14 threadmarks

Reader mode

Reader mode

Recent threadmarks

[USE IN CASE OF NO LEAKS BEFORE E3!!] AdoredTV - Analysing Navi part 2 Teraflop Peak Performance Lookup Table Computex: AMD's keynote May 27th Memory bandwidth calculations summary Sony and Microsoft collabotaring for cloud gaming streaming AMD at Computex May 27th livestream time Submit vote for Next thread title and we need a new OP- Status

- Not open for further replies.