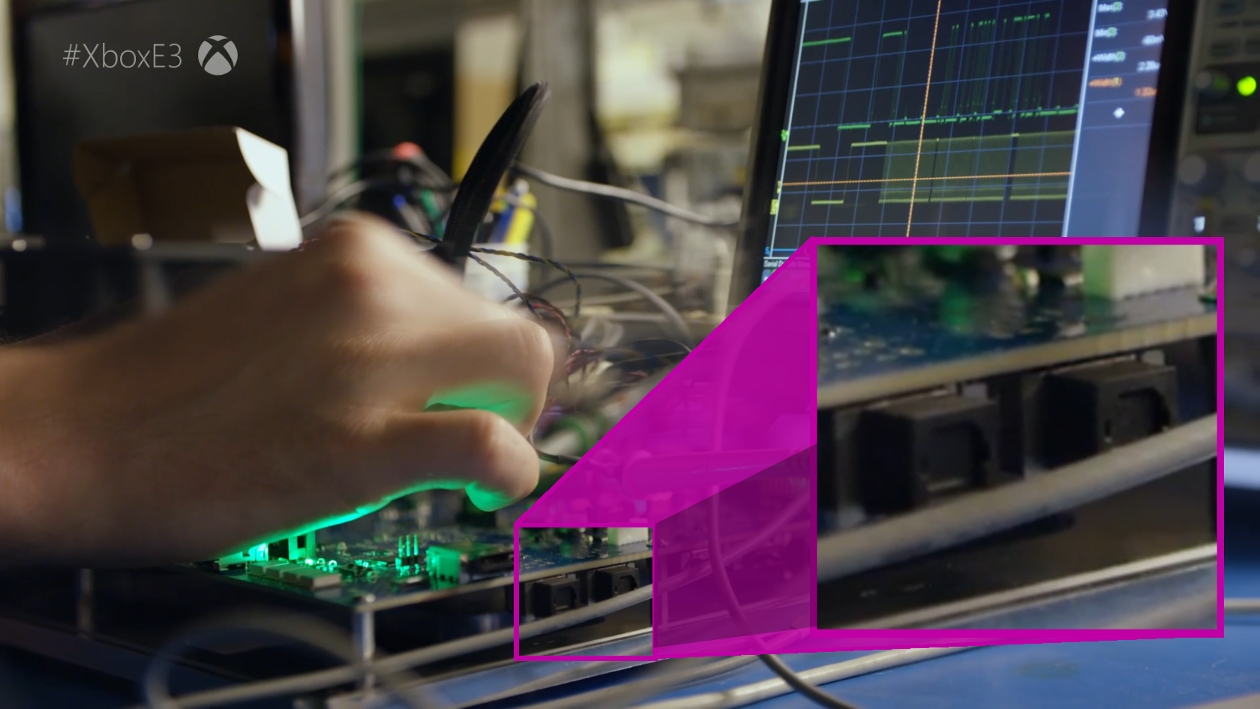

Here's the actual reason I was going through the Scarlett announce video again. I captured the very short sequence panning over the APU package area. There's a focus pull during this shot, so different parts of the image come into focus as it progresses. I pasted multiple frames together into a version that combines each part at its sharpest. Here's the combined shot.

There's no paint-in here--that is, I didn't add any pixels. But obviously, I made choices about where and what to erase and combine, and my decisions may not have preserved accuracy.

This image should definitely not be relied on for size measurement, counting of traces, etc.

But it's good for rough conclusions, and I think it demonstrates the evidence for a 320-bit memory interface well. There are apparently no RAM chips on the far side, so all would be situated to the left, bottom, and (offscreen) the right of the APU package. The 3 chips on the left fit entirely within the length of the package. The 2 chips at the bottom start outside the package edge, and don't reach the center of the die. It would thus make most sense for there to be 4 chips total at bottom, with 2 offscreen. Logically, there'd then be another 3 chips on the right. Though they can't be seen, the view past the top-right package corner shows no RAM in a corner position...and further down at the very edge, there's a mild dark blur that could be out-of-focus RAM in the expected position.

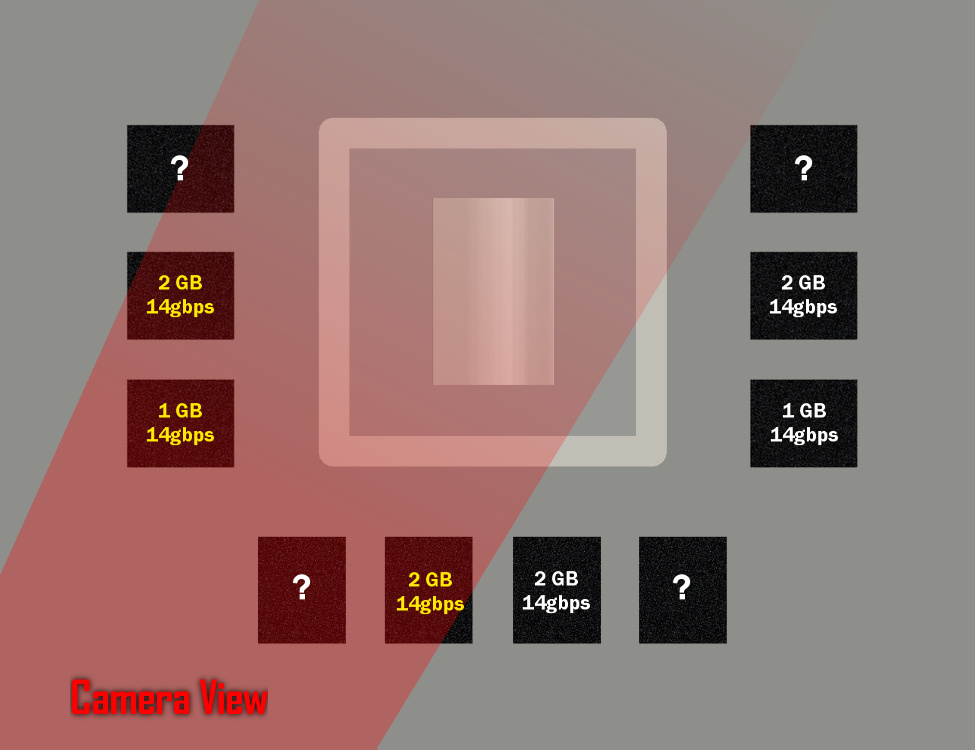

This adds up to very good evidence for 10 RAM chips in a 3-4-3 configuration. At 32 bits per GDDR6 chip, that's a 320-bit bus. Given 14Gbps chips (see below), total RAM bandwidth would be 560GB/s. That's about 72% higher than Xbox One X. It's 25% higher than both the new Navi GPUs and the Nvidia RTX 2080, though in Anaconda this would be shared with the CPU. (Note that this is a max figure for these particular GDDR6 chips. In One X, Microsoft downclocked the RAM slightly, so a repeat of that would give lower results.)
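For anyone who wants to check the arithmetic, here's a quick sketch in Python. The function name and the comparison figures are my own additions for illustration: I'm assuming 326.4GB/s for One X (384-bit bus at 6.8Gbps) and 448GB/s for the RTX 2080 and the top Navi card (256-bit bus at 14Gbps). The 10-chip count is the estimate from the image, not a confirmed spec.

```python
def bandwidth_gb_per_s(chips: int, bits_per_chip: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: total bus width (bits) times per-pin rate, divided by 8 bits/byte."""
    bus_width_bits = chips * bits_per_chip
    return bus_width_bits * gbps_per_pin / 8


# Estimated Anaconda layout from the image: 10 GDDR6 chips, 32 bits each, 14Gbps.
anaconda = bandwidth_gb_per_s(chips=10, bits_per_chip=32, gbps_per_pin=14)

# Comparison points (assumed figures, see the note above).
one_x = bandwidth_gb_per_s(chips=12, bits_per_chip=32, gbps_per_pin=6.8)
rtx_2080 = bandwidth_gb_per_s(chips=8, bits_per_chip=32, gbps_per_pin=14)

print(f"Anaconda estimate: {anaconda:.0f} GB/s")
print(f"vs One X:    +{(anaconda / one_x - 1) * 100:.0f}%")
print(f"vs RTX 2080: +{(anaconda / rtx_2080 - 1) * 100:.0f}%")
```

If the RAM ends up downclocked the way it was in One X, just lower `gbps_per_pin` and the result scales linearly.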

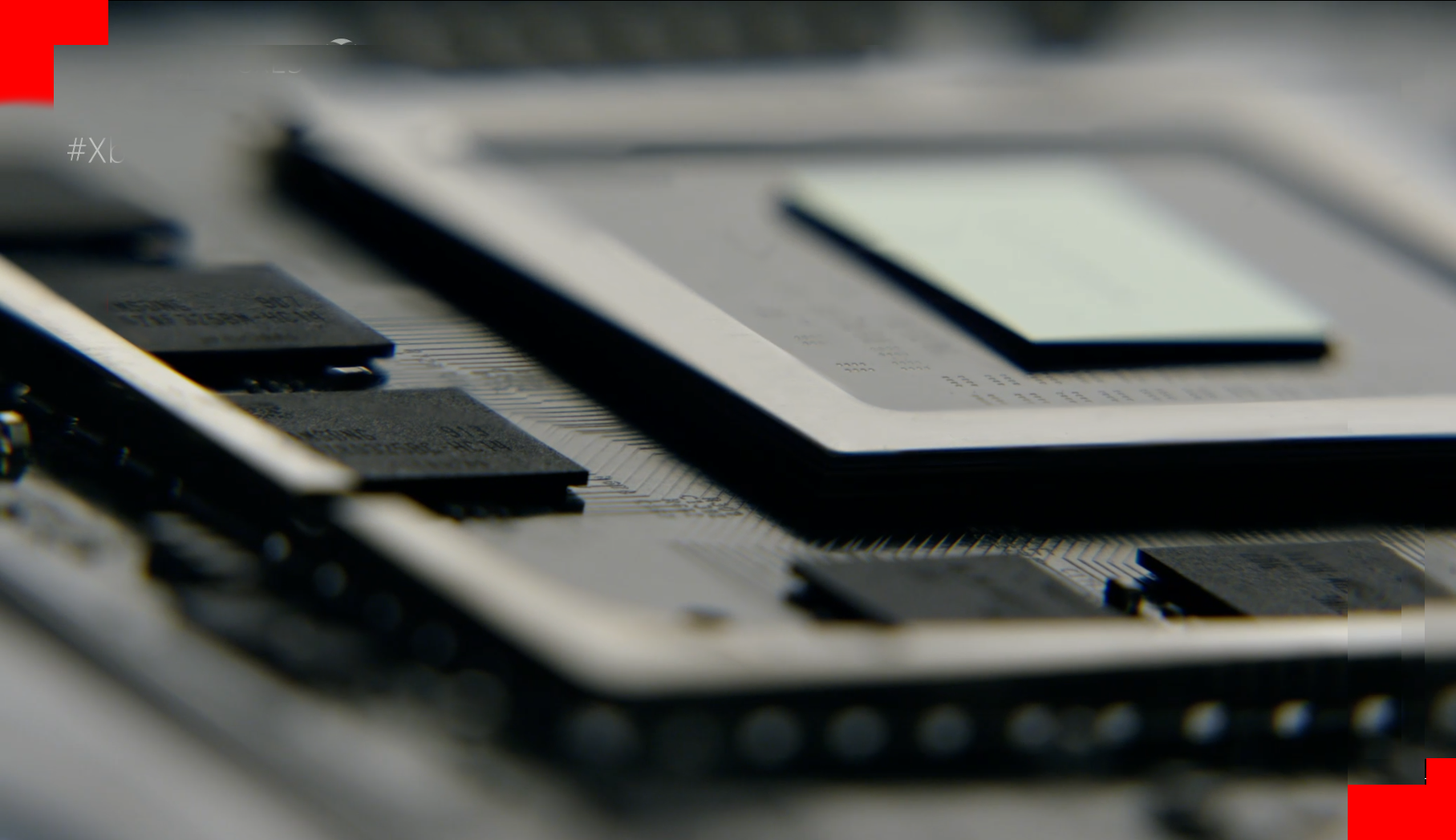

As for size, two different Samsung designations can be seen. The middle chip on the left, and probably the right one at bottom, are code 325BM-HC14. This is 16Gb/2GB capacity and 14Gbps speed. The lower chip on the left is code 325BC-HC14, an 8Gb/1GB capacity at the same speed. Presuming symmetry of the left with the proposed unseen chips on the right, we have 10GB known, plus 4 unknown chips. That'd allow 14, 16, or 18GB total.

Here's a rough overhead schematic showing the situation I described. The RAM labeled in yellow is definite, as we can read the part numbers. The RAM labeled in white is from assumption of symmetry. The remaining chips are unknown, and could be 1 or 2GB each.
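The capacity range can be enumerated the same way. This is only a sketch under the assumptions already stated: the position labels are hypothetical names I made up for the chips, the mirrored entries come from the symmetry guess, and treating the 4 unknown chips as two matched pairs is my reading of why only even totals come out.

```python
from itertools import product

# Capacities in GB. Read directly from part numbers, or mirrored by symmetry.
known_gb = {
    "left_middle": 2,         # 325BM-HC14, read from the image
    "left_lower": 1,          # 325BC-HC14, read from the image
    "bottom_inner_left": 2,   # probably 325BM-HC14
    "right_middle": 2,        # assumed mirror of left_middle
    "right_lower": 1,         # assumed mirror of left_lower
    "bottom_inner_right": 2,  # assumed mirror of bottom_inner_left
}
known_total = sum(known_gb.values())

# The 4 unknown chips, each 1GB or 2GB, grouped as two mirrored pairs
# (upper left/right, and the two outer bottom chips).
paired_totals = sorted(
    {known_total + 2 * a + 2 * b for a, b in product((1, 2), repeat=2)}
)

# Without the pairing assumption, each unknown chip varies independently.
free_totals = sorted(
    {known_total + sum(combo) for combo in product((1, 2), repeat=4)}
)

print(known_total)    # 10
print(paired_totals)  # [14, 16, 18]
print(free_totals)    # [14, 15, 16, 17, 18]
```

Dropping the pairing assumption adds 15GB and 17GB to the list, but an odd total seems unlikely for a layout that otherwise looks symmetric.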

Hopefully this is helpful for folks that hadn't seen this data fully explicated before. Note that apart from the newly enhanced image, none of this analysis is new from me. It was developed by others earlier, notably DukeBlueBall. But I believe a thorough layout like this will show that the memory bandwidth and (in a tight range) amount are probably known for Anaconda, even though not verbally announced by Microsoft.