So what actually is HDR? Do the colours become more colourful than ever before? Like blue becomes more specific like sapphire blue, pink into magenta or live pink?

HDR allows displays to use an extended range of brightness, and an extended color gamut.

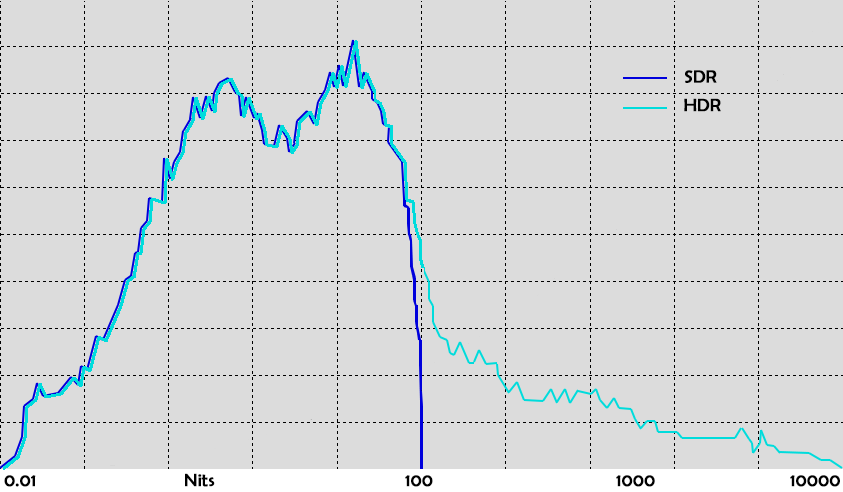

This may only help if you know how to read a histogram, but here's a comparison between HDR and SDR for the same scene:

For all objects below 100 nits brightness in the scene, HDR and SDR look the same. But HDR has additional highlight details that extend beyond 100 nits.

That would be similar to the sun being visible against the sky in this example.

The SDR image on the left cannot go brighter than 100 nits, while the sun in HDR may be 500 nits against a 100 nit sky.

The foreground is roughly similar in brightness on both, since it falls below that 100 nit threshold.
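To put numbers on that, here is a minimal sketch of the comparison. It assumes a hard clip at 100 nits for SDR and a 500 nit HDR display; real displays roll highlights off more gradually, and the 40 nit foreground value is just a made-up figure for illustration.

```python
SDR_PEAK_NITS = 100.0  # SDR limit used in the example above


def sdr_luminance(scene_nits: float) -> float:
    """Hard-clip scene luminance to the SDR range (simplified for illustration)."""
    return min(scene_nits, SDR_PEAK_NITS)


def hdr_luminance(scene_nits: float, display_peak_nits: float) -> float:
    """HDR only clips once the display itself runs out of brightness."""
    return min(scene_nits, display_peak_nits)


# Foreground value is hypothetical; sky and sun follow the example in the post.
scene = {"foreground": 40.0, "sky": 100.0, "sun": 500.0}

for name, nits in scene.items():
    sdr = sdr_luminance(nits)
    hdr = hdr_luminance(nits, display_peak_nits=500.0)
    print(f"{name:10s} SDR {sdr:6.1f} nits   HDR {hdr:6.1f} nits")
```

Everything at or below 100 nits comes out identical in both columns; only the sun differs.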

Of course HDR also permits scenes that have a higher average brightness overall. Not all scenes are going to be kept below 100 nits, so you could have an outdoor scene that is twice as bright as an indoor scene in HDR, while they would both be a similar brightness in SDR due to that 100 nits limit.

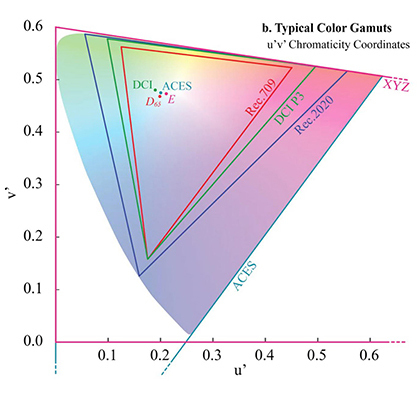

The color gamut is also expanded for HDR, though current displays cannot cover the full Rec.2020 gamut; they only cover the P3 color space.

The outer triangular shape covers the range of colors that the human eye can see, while the inner triangles show the color range covered by SDR (Rec.709), our current HDR displays (P3), and future displays covered by the HDR spec (Rec.2020).

With full P3 coverage, displays can show much deeper and more vibrant reds and greens, and slightly richer blues - but that depends on the content.

HDR content is not automatically more vibrant and saturated than SDR - that only applies if the content itself doesn't fit into the range of colors that Rec.709 can display.

But a lot of filmed content does exceed what the Rec.709 gamut can display, if the camera was capable of capturing it.

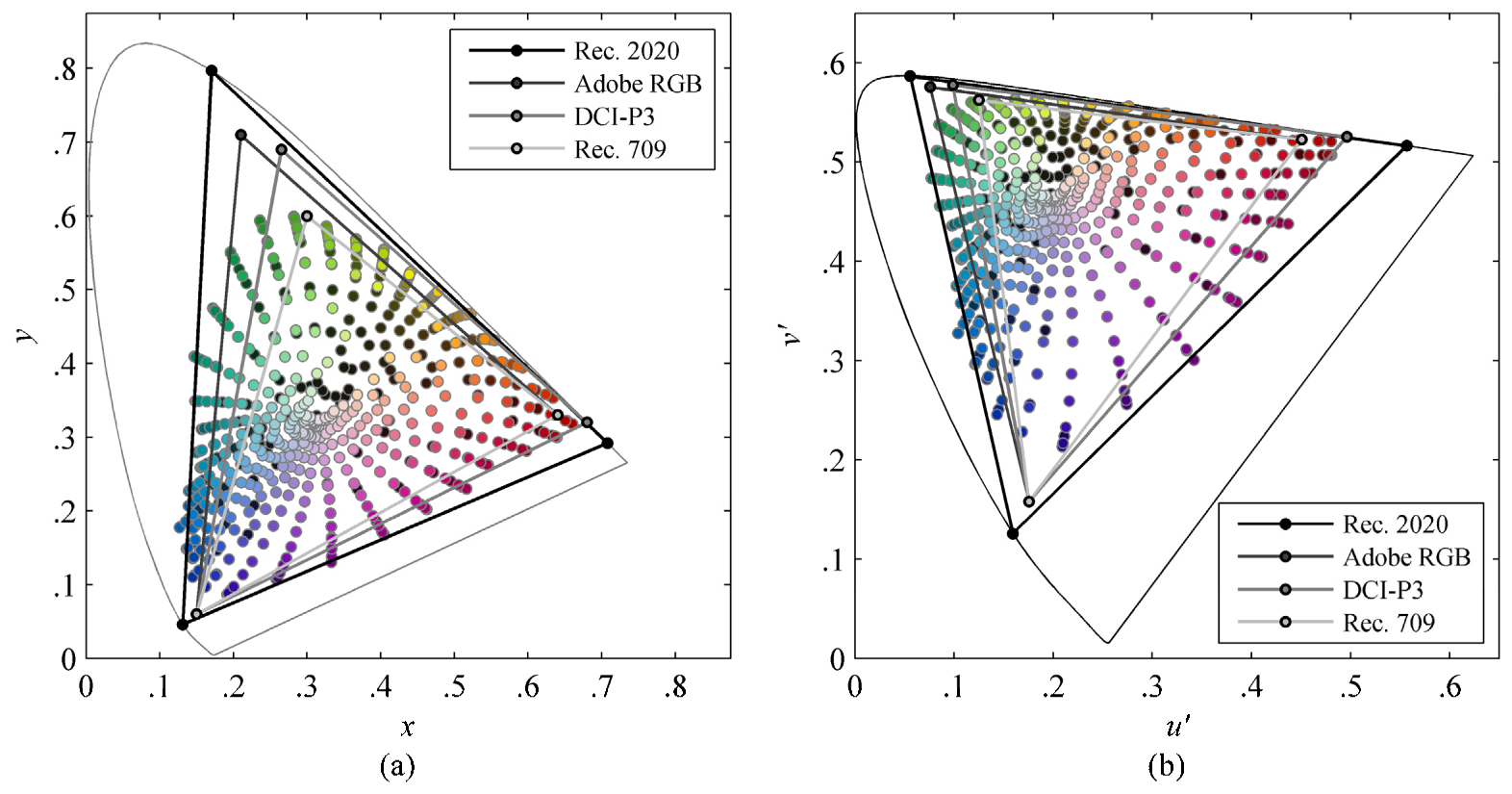

Here we see a chart that shows surface colors (Pointer's gamut), which is essentially all the colors found in nature that are not direct light sources.

Rec.2020 covers most of it, but P3 is still missing a lot in the blue/green region.

It's also worth pointing out that, if you configure an HDR display incorrectly, it's possible to essentially stretch out Rec.709 colors across the P3 or Rec.2020 color space, resulting in an extremely vivid and saturated image at all times with SDR content.

That's not how it is supposed to look, but some people do that and prefer it (and then wonder why HDR looks "dull").
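Here is a rough sketch of the difference between handling Rec.709 content correctly on a P3 display and "stretching" it. The matrix is an approximate, rounded linear-light conversion from Rec.709 primaries to P3 primaries, assuming a D65 white point for both; the function names are made up for illustration, and a real pipeline also has to deal with transfer functions (gamma/PQ), which are ignored here.

```python
# Approximate linear Rec.709 -> P3 (D65) conversion matrix, rounded to 4 places.
REC709_TO_P3 = [
    [0.8225, 0.1775, 0.0000],
    [0.0332, 0.9668, 0.0000],
    [0.0171, 0.0724, 0.9105],
]


def rec709_to_p3(rgb):
    """Correct handling: re-express a linear Rec.709 color in P3 coordinates."""
    return [sum(m * c for m, c in zip(row, rgb)) for row in REC709_TO_P3]


def stretched(rgb):
    """Misconfiguration: Rec.709 values are sent to the P3 primaries unchanged."""
    return list(rgb)


red_709 = [1.0, 0.0, 0.0]
print("converted:", rec709_to_p3(red_709))  # sits inside the P3 gamut
print("stretched:", stretched(red_709))     # lands on the P3 red primary itself
```

With the conversion, the most saturated Rec.709 red ends up as a less-than-maximum P3 red, so it looks the same as it would on an SDR display. Without it, the same value drives the display's most saturated red, which is where the extra vividness comes from.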

I've run across a couple of HDR versions that just feel like the devs have implemented a sunglasses filter. It's crazy frustrating.

That often means your SDR settings are significantly brighter than they're supposed to be, as I've previously demonstrated.

Using the Mad Max example again, let's say that you have a 500 nit HDR display.

The sky is supposed to be displayed at 100 nits, and the sun is supposed to be 500 nits. 500 nits really stands out against a 100 nit background.

But SDR allows you to turn up the brightness as much as 5x what it is supposed to be, so that now the entire sky is 500 nits.

This is only possible because of the limited dynamic range of SDR.

If you were to increase the brightness of the HDR image by 5x to match it, you would need a display capable of 2500 nits for the sun, so that it still stands out against the sky.

Even if you did have the option to increase the brightness of the HDR picture by 5x, without the display having that 2500 nits brightness you would end up compressing/clipping the highlights in the HDR image so that it looks identical to the overbright SDR image.
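The arithmetic above can be sketched like this. It assumes a simple brightness multiplier followed by a hard clip at the display's peak, which is a simplification of what real tone mapping does, and the function names are just for illustration.

```python
def displayed_nits(intended_nits: float, gain: float, display_peak_nits: float) -> float:
    """Scale the intended luminance by a brightness gain, then clip to the display peak."""
    return min(intended_nits * gain, display_peak_nits)


def sun_to_sky_ratio(gain: float, display_peak_nits: float) -> float:
    """How much brighter the 500 nit sun appears than the 100 nit sky."""
    sun = displayed_nits(500.0, gain, display_peak_nits)
    sky = displayed_nits(100.0, gain, display_peak_nits)
    return sun / sky


print(sun_to_sky_ratio(gain=1.0, display_peak_nits=500.0))   # picture as intended
print(sun_to_sky_ratio(gain=5.0, display_peak_nits=500.0))   # 5x brightness, 500 nit display
print(sun_to_sky_ratio(gain=5.0, display_peak_nits=2500.0))  # 5x brightness, 2500 nit display
```

On the 500 nit display the 5x picture has the sun and sky at the same level, so the highlight is gone. Only the 2500 nit display keeps the original 5:1 relationship.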

But if you're looking for an overbright and oversaturated image, an HDR display is going to be more capable of it than an SDR display.

You're just going to be disappointed with how true HDR content looks in comparison to it, since that forces most displays into showing more accurate brightness and saturation.

There was a topic a while back where someone wanted just that:

I bought a KS8500 last year and have had a miserable time trying to figure out what HDR brings to video games. Long story short, i just dont see the stunning difference people claim to see with HDR.

I haven't seen any movies in HDR thanks to Sony's ridiculous decision to ship the Pro without a 4k drive. But with Vudu finally adding HDR10 support to some Samsung TVs and the Roku sticks, i picked up a roku stick + on Black Friday and finally got to see Mad Max in HDR today and yes, i could immediately tell the difference, it looked dull and flat, and just plain wrong.

The Orange hue that made the movie so visually striking is replaced with this dull brown filter. I took some off screen shots to compare, and i know some videophiles here think that's blasphemy, but the difference b/w HDR and non-HDR is so striking, it easily comes across in pictures.

Just look at how boring the so-called HDR shot looks. The orange color is just gone.

The next two shots are from the sandstorm scene, they are blurry but they get the point across.

I just dont understand HDR. With some games, the difference is so subtle, i go 'is that it?' Some games like ratchet and uncharted, i cant even tell the difference. I was really hoping for movies to add that wow factor that justifies this $1k tv and the $8 price tag for hdr movies, but the first impressions of mad max have completely soured me from spending another $8-10 on more hdr movies.

P.S I believe there is something wrong with this HDMI plug that comes with these Samsung tvs. mine might be defective. i have seen demos on the youtube app and some movies look incredible. this roku stick goes into the hdmi slot and while i get the HDR pop up, i just dont get this eye catching stunning image everyone keeps raving about. Mad max was supposed to be this life altering experience, and it just looks wrong. I really hope its an issue with my HDMI slots, but PS4 pro and roku all say my tv supports HDR.

P.P.S Bonus screenshots. guess which one is HDR?

The SDR images are ridiculously bright and oversaturated - but if that's what you want, the option is there on an HDR display (when viewing SDR content).

That's not the case, own a pretty awesome OLED tv which makes everything look great. Can get pretty freaking bright. Movies like IT or MAD MAX have plenty of super bright scenes and my tv doesn't do any funky ABL stuff with those, so that's not the issue. It's the games and their lighting exposure, which sucks. Maybe there's no other way to do it atm, but still sucks

OLEDs can do 150 nits full-screen brightness. Many HDR movies have scenes with an average brightness of 200, 300, 400 nits. Some discs have a maximum frame-average brightness of over 800 nits.

OLEDs are great for lower-brightness HDR content, but they fall short of a proper HDR presentation.

Since they have only gone from ~120 to ~150 nits in the last 5 years (though peak brightness has improved a lot), and they're still using that WRGB pixel structure to cheat brightness measurements, I'm not sure that it's a problem they will be able to solve.

We probably need µLED displays to have per-pixel brightness control and high brightness HDR combined.

That doesn't mean OLEDs are bad for HDR - they're the best display for many HDR sources. But they fall short for high brightness HDR.

Do you know this old article by Nixxes/Nvidia about HDR in Rise of the Tomb Raider?

https://developer.nvidia.com/implementing-hdr-rise-tomb-raider

There they claim games have rendered internally at HDR for years. Maybe Halo 5 uses some tech which is incompatible with the type of conversion detailed there. But even Halo 3 via backwards compatibility managed to produce pretty good HDR, didn't it?

Yes, games have been rendering in HDR and with high bit-depth buffers for a long time now.

The conversion to an output suitable for an HDR display is not necessarily an easy one though, as these engines were designed with an output to SDR in mind.

It would be nice if game developers even started to output >8-bit in SDR, and actually dithered their conversions properly to eliminate banding.

Funnily enough, there's actually a mod for NieR:Automata on PC which does just that, replacing many shaders to add dither and minimize banding.
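For anyone wondering why dither helps with banding, here is a minimal sketch. It uses plain uniform random noise of one quantization step before rounding to 8-bit, which is just one simple form of dither; it is not a description of what that mod or any particular game does, and the target value is an arbitrary example.

```python
import random


def quantize(value: float, levels: int = 256) -> float:
    """Round a 0..1 value to the nearest of `levels` evenly spaced steps."""
    return round(value * (levels - 1)) / (levels - 1)


def quantize_dithered(value: float, rng: random.Random, levels: int = 256) -> float:
    """Add up to +/- half a step of uniform noise, then round as usual."""
    step = 1.0 / (levels - 1)
    noisy = value + (rng.random() - 0.5) * step
    return quantize(min(max(noisy, 0.0), 1.0), levels)


rng = random.Random(0)   # fixed seed so the run is repeatable
target = 0.5010          # a high-precision value that falls between two 8-bit codes
samples = 10_000         # think of these as neighbouring pixels in a smooth gradient

plain = quantize(target)
dithered = sum(quantize_dithered(target, rng) for _ in range(samples)) / samples

print(f"plain error:    {abs(plain - target):.6f}")
print(f"dithered error: {abs(dithered - target):.6f}")
```

Without dither every pixel snaps to the same 8-bit code, which is what produces visible bands in a gradient. With dither, neighbouring pixels land on either side of the true value, so the average over an area stays much closer to the original shade.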