Mesh Shading Pipeline

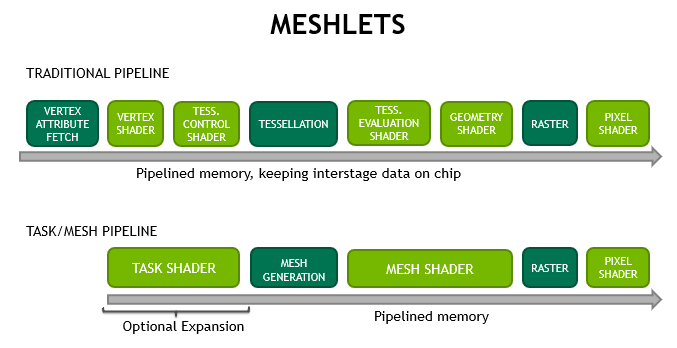

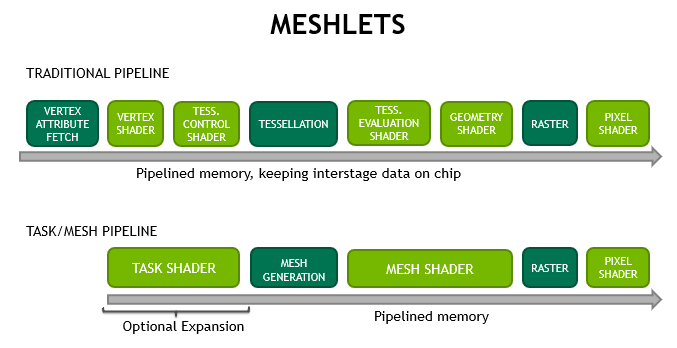

A new, two-stage pipeline alternative supplements the classic attribute fetch, vertex, tessellation, geometry shader pipeline. This new pipeline consists of a task shader and a mesh shader:

- Task shader: a programmable unit that operates in workgroups and allows each workgroup to emit (or not) mesh shader workgroups

- Mesh shader: a programmable unit that operates in workgroups and allows each workgroup to generate primitives

The mesh shader stage produces triangles for the rasterizer using the above-mentioned cooperative thread model internally. The task shader operates similarly to the hull shader stage of tessellation, in that it is able to dynamically generate work. However, like the mesh shader, the task shader also uses a cooperative thread model. Its input and output are user defined, instead of it having to take a patch as input and produce tessellation decisions as output.
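To make the two-stage flow concrete, here is a minimal CPU-side sketch in Python. It is not real shader code and not NVIDIA's API: the `Meshlet` class, the single-plane visibility test, and the function names `task_stage`, `mesh_stage` and `draw` are all assumptions made up for illustration. The task stage decides which mesh workgroups get emitted, and the mesh stage turns each emitted workgroup's data into triangles.

```python
from dataclasses import dataclass


@dataclass
class Meshlet:
    """Hypothetical per-workgroup input: a small chunk of a mesh."""
    center: tuple     # bounding-sphere center (x, y, z)
    radius: float     # bounding-sphere radius
    vertices: list    # vertex positions local to this meshlet
    triangles: list   # index triples into `vertices`


def task_stage(meshlets, plane_normal, plane_offset):
    """Emit (or not) one mesh workgroup per meshlet.

    A meshlet is skipped when its bounding sphere lies entirely
    behind the plane dot(n, p) + offset = 0.
    """
    emitted = []
    for i, m in enumerate(meshlets):
        dist = sum(n * c for n, c in zip(plane_normal, m.center)) + plane_offset
        if dist >= -m.radius:
            emitted.append(i)
    return emitted


def mesh_stage(meshlet):
    """Generate primitives: one tuple of three positions per triangle."""
    return [tuple(meshlet.vertices[i] for i in tri) for tri in meshlet.triangles]


def draw(meshlets, plane_normal, plane_offset):
    """Run both stages; the result is what would go to the rasterizer."""
    triangles = []
    for i in task_stage(meshlets, plane_normal, plane_offset):
        triangles.extend(mesh_stage(meshlets[i]))
    return triangles
```

On a GPU each stage would run as a workgroup of cooperating threads rather than a Python loop; the sketch only shows the data flow, in particular that a meshlet rejected by the task stage never has its vertex data touched.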

The interface with the pixel/fragment shader is unaffected. The traditional pipeline is still available and can provide very good results depending on the use case. Figure 4 highlights the differences in the pipeline styles.

The new mesh shader pipeline provides a number of benefits for developers:

- Higher scalability through shader units by reducing fixed-function impact in primitive processing. Because modern GPUs are used for general-purpose work across a wide variety of applications, there is broad incentive to add more cores and improve the shaders' generic memory and arithmetic performance.

- Bandwidth reduction, as de-duplication of vertices (vertex re-use) can be done upfront and the result reused over many frames. With the current API model, the hardware has to scan the index buffers every time. Larger meshlets mean higher vertex re-use, which also lowers bandwidth requirements. Furthermore, developers can come up with their own compression or procedural generation schemes. The optional expansion/filtering via task shaders makes it possible to skip fetching more data entirely.

- Flexibility in defining the mesh topology and creating graphics work. The previous tessellation shaders were limited to fixed tessellation patterns, while geometry shaders suffered from inefficient threading and an unfriendly programming model that created triangle strips per thread.
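The upfront vertex de-duplication mentioned in the bandwidth point can be sketched as a simple offline pass over an index buffer. This is a greedy illustration in Python, not the method from the linked article: the function name `build_meshlets` and the default limits of 64 vertices and 126 triangles are assumptions chosen for the example, and a production builder would also try to keep meshlets spatially compact.

```python
def build_meshlets(indices, max_vertices=64, max_triangles=126):
    """Greedily split a triangle-list index buffer into meshlets.

    Each meshlet is (unique_vertices, primitives): `unique_vertices`
    holds global vertex indices stored once, and `primitives` holds
    triples of small local indices into that list.
    """
    if max_vertices < 3 or max_triangles < 1:
        raise ValueError("limits must allow at least one triangle")

    meshlets = []
    local_of = {}    # global vertex index -> local index in current meshlet
    unique = []      # global indices of the current meshlet's vertices
    prims = []       # local index triples of the current meshlet

    usable = len(indices) - len(indices) % 3   # ignore a trailing partial triangle
    for t in range(0, usable, 3):
        tri = indices[t:t + 3]
        new_vertices = {v for v in tri if v not in local_of}
        too_many_vertices = len(unique) + len(new_vertices) > max_vertices
        too_many_triangles = len(prims) >= max_triangles
        if prims and (too_many_vertices or too_many_triangles):
            # Current meshlet is full: close it and start a fresh one.
            meshlets.append((unique, prims))
            local_of, unique, prims = {}, [], []
        for v in tri:
            if v not in local_of:
                local_of[v] = len(unique)
                unique.append(v)
        prims.append(tuple(local_of[v] for v in tri))

    if prims:
        meshlets.append((unique, prims))
    return meshlets
```

Because the result depends only on the index buffer, it can be computed once and reused every frame. Vertices shared by triangles inside one meshlet are stored once, while vertices on a meshlet boundary are duplicated across meshlets, which is why larger meshlets tend to give better re-use.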

Mesh shading follows the programming model of compute shaders, giving developers the freedom to use threads for different purposes and share data among them. When rasterization is disabled, the two stages can also be used to do generic compute work with one level of expansion.

More info:

https://devblogs.nvidia.com/introduction-turing-mesh-shaders/

https://www.nvidia.com/content/dam/...

Pretty interesting: the first big change to the rendering pipeline since unified shaders.