This was an interesting experiment for me. Now that we have the specs, the suggestion that these consoles are comparable to a "mid-range" PC struck me as so absurd that I had to check, so I went through the Steam Hardware Survey.

GPU:

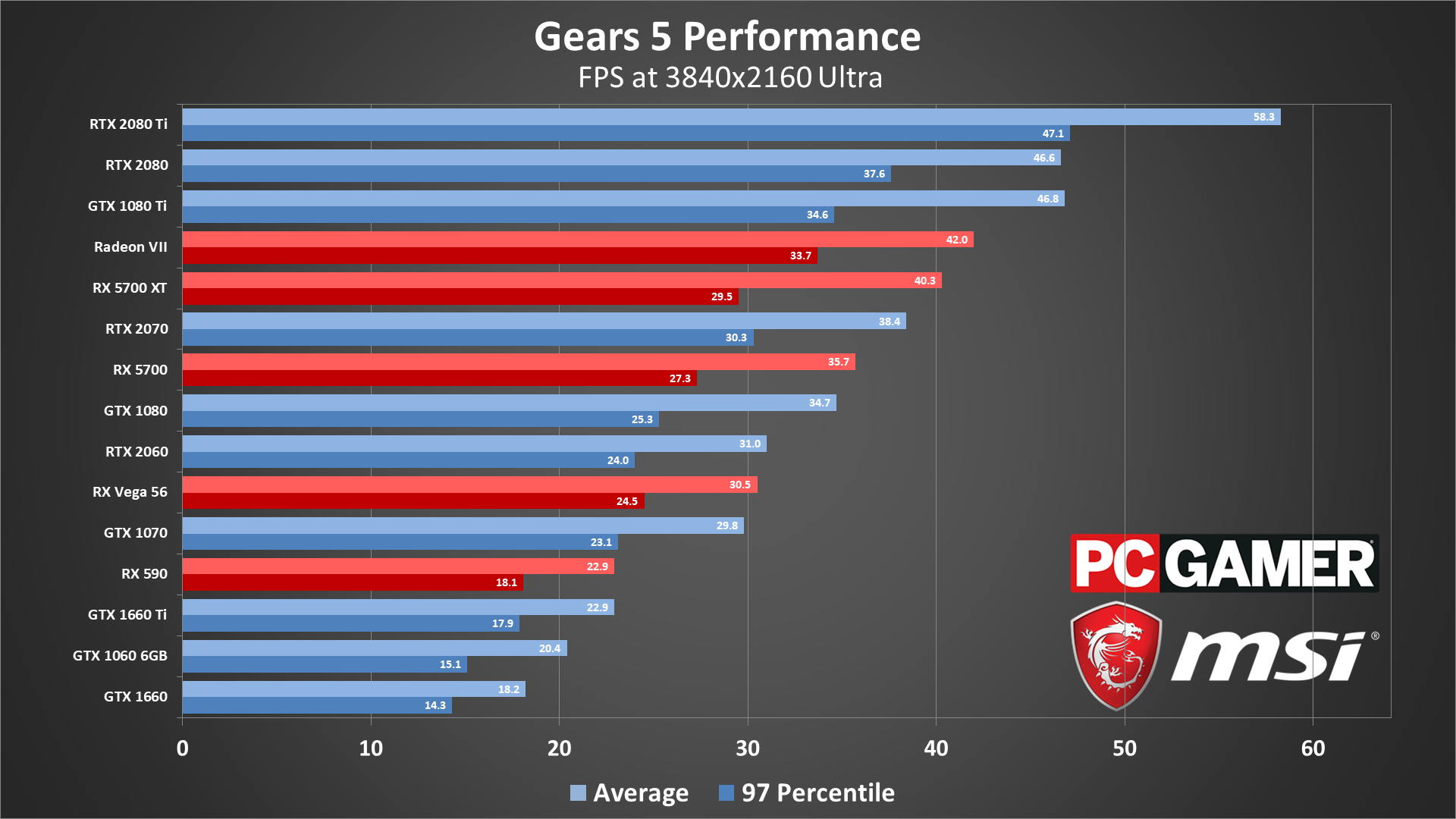

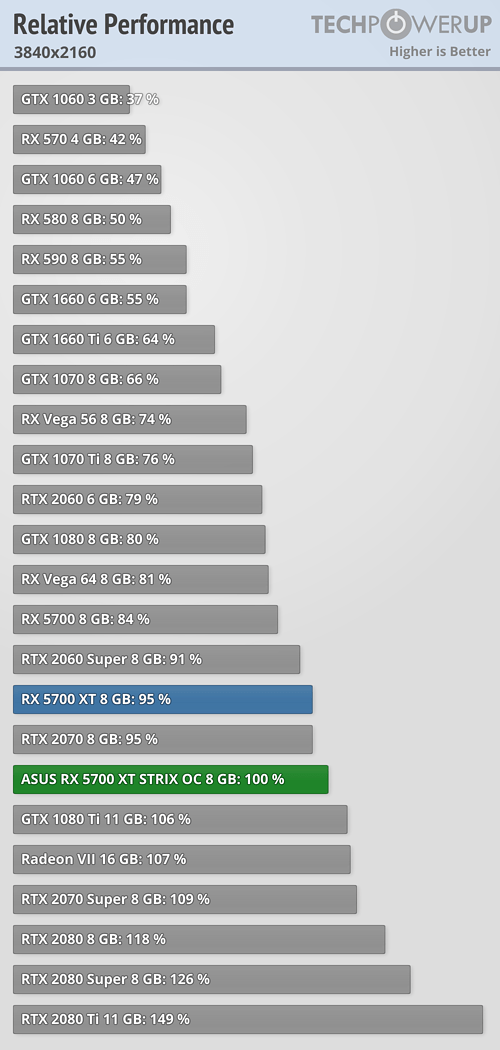

At the moment the best guess/evidence is that the new consoles' GPUs will be around RTX 2080 level. I personally have no doubt they'll soon be performing better than that, just as every past console has, over time, outperformed the PC GPUs it initially seemed most similar to. But anyway:

Percent of users with a 2080 or better:

2.04%

That means these consoles are in the top 2 percent. Better than 98% of PCs out there. That's not mid-range. That's not even high-end. That's top-end.
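For anyone who wants to redo the math with a newer survey, here is a minimal sketch of that step. The function name `better_than` is just something I made up for illustration; the only input is the survey share quoted above.

```python
def better_than(share_at_or_above: float) -> float:
    """Percent of surveyed PCs below a tier, given the percent at or above it."""
    return round(100.0 - share_at_or_above, 2)


# 2.04 is the "2080 or better" share quoted above from the survey.
print(better_than(2.04))  # 97.96, i.e. roughly "better than 98% of PCs"
```

Swap in whatever share a later survey reports and the "top X percent" claim updates itself.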

But perhaps you meant the positioning of the GPU in the range of currently available parts, not actual usage. Well, there is also exactly one graphics card above the 2080: the 2080 Ti. Also, there's the little detail that the 2080 is $600+. That's most likely more than either of these consoles will cost in their entirety. Again, that's not high-end, that's extremely high-end.

As for what to consider "mid-range", the most popular card is the GTX 1060 at 12.68%. Needless to say, that is nowhere near the performance bracket the 2080 is in.

CPU:

This was pretty interesting to me.

72.51% are 4 cores or less.

93.68% are 6 cores or less. Only 6.32% are 8 cores or more, and not all of those have hyperthreading. The survey's CPU breakdown is not as detailed as the GPU one, so even this 8-core percentage includes things like the AMD FX-8350, which, well... lol.

These consoles are in the top 6 percent, better than 94% of PCs (and that's on core count alone; they rank even higher once you consider architecture).
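The core-count shares are cumulative, so it can help to split them into buckets. This is a rough sketch using only the two cumulative figures quoted above; `core_buckets` is a hypothetical helper, and it assumes that everything above 6 cores counts as "8+", which is how the numbers above add up to 100%.

```python
def core_buckets(at_most_4: float, at_most_6: float) -> dict:
    """Split cumulative core-count shares (in percent) into separate buckets."""
    return {
        "<=4": at_most_4,
        "5-6": round(at_most_6 - at_most_4, 2),
        # Assumption: everything above 6 cores is treated as 8 or more.
        "8+": round(100.0 - at_most_6, 2),
    }


# 72.51 and 93.68 are the cumulative shares quoted above.
for bucket, share in core_buckets(72.51, 93.68).items():
    print(f"{bucket}: {share}%")
```

The "8+" bucket is the 6.32% the consoles fall into.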

For clock speeds again it's tough to get detailed info, but 73.33% are 3.29 GHz or lower and 26.67% are 3.3 GHz or higher.

The consoles are somewhere in the middle of the top 26% for clock speed, again with the latest, most efficient architecture.

The console CPUs are similar to the 3700X (probably a little slower). That's a $300 CPU, with the only higher-end parts being the 3800X (which is similar, just clocked a little higher), the 3900X, and the 3950X.

Those Ryzen 9s are $500+. That's not high-end, that's EXTREMELY high-end, enthusiast/professional level. So again, the consoles are basically top-end for a gaming PC.

=============

Now as silly as it seemed to claim next-gen consoles would be "mid-range", this was even better:

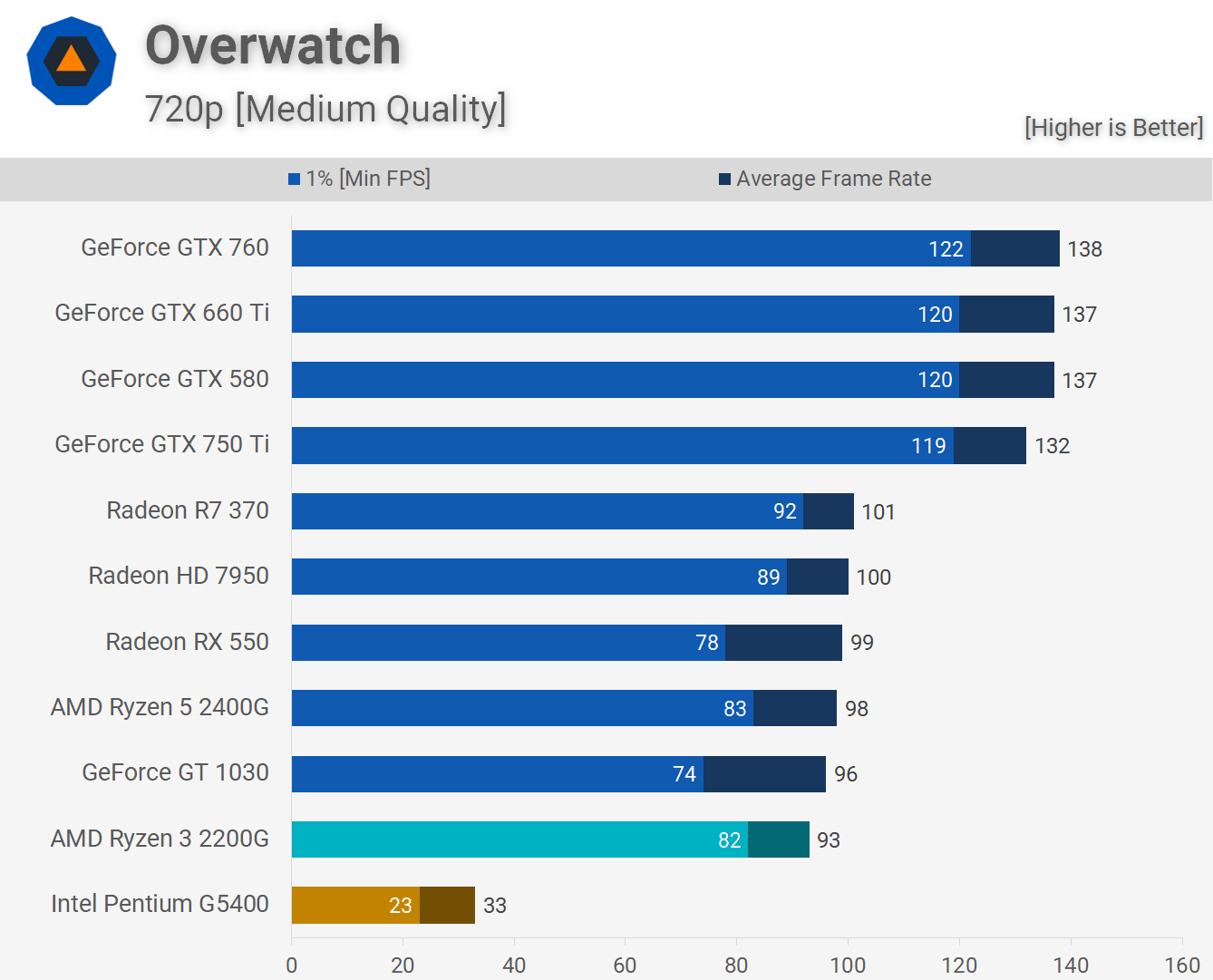

A low-end PC is something like the Intel Pentium G5400 or the AMD Ryzen 3 2200G, and... well:

These aren't even close to last-gen consoles, not by a long shot. You seriously think that in a couple years, low-end integrated graphics will be 2080 level? No integrated graphics will be even close to next-gen console level at the end of that generation, let alone a couple years in.

Anyway, this was fun, thanks for giving me the idea. I knew these consoles were powerful, but even I didn't realize how extraordinarily high-end they really were. This isn't even counting the bespoke customizations for I/O where especially the PS5 will be far beyond anything possible on the PC, and likely for a good long while. Even when PC SSDs get to the base speed of the PS5, that doesn't include all the customizations that remove the bottlenecks and slowdowns PCs will still have. Remember Cerny said the decompressor was worth what, 9 Zen 2 cores? The very highest-end PC CPUs and GPUs will surely be faster, but they will also have to be used for these other functions that the consoles have dedicated hardware for.