Are people still trying to spin the narrative that Sony upped their clocks at the last minute because of the XsX? That's ridiculous, and it really doesn't work that way. You can't just change everything at the last minute. These things are planned out long in advance, and in the case of Sony and the PS5 it is clear that they decided on affordability while still pushing for a powerful machine, which resulted in the situation we have now. The thing is, besides affordability there were other reasons why narrow and fast could prove useful, and Cerny clearly talked about those things. I think he even said that they were aiming even higher but ran into limitations elsewhere.

NX Gamer: PS5 Full Spec Analysis | A new generation is Born

- Thread starter Equanimity

Makes sense, thanks!

The frame rate, while locked, is never actually stable. This is most common when looking at games with an uncapped framerate. If a dev is aiming for a locked 60fps, then they design an engine that can sustain something like 65-70fps on average, so even in taxing scenes it doesn't drop below 60fps. And with frame pacing, their engine would basically just spit out a frame roughly every 16.7ms.
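To put numbers on this: at a locked 60fps each frame has a budget of 1000/60 ≈ 16.7 ms, and an engine averaging 65-70fps finishes frames roughly 1.3-2.4 ms early. The Python sketch below assumes an idealized, vsync-style presenter in which a finished frame waits for the next 16.7 ms boundary; real engines and display pipelines are more involved, and the function names are made up for illustration.

```python
import math


def frame_budget_ms(target_fps: float) -> float:
    """Milliseconds available per frame at a given target frame rate."""
    return 1000.0 / target_fps


def headroom_ms(average_fps: float, target_fps: float) -> float:
    """Slack per frame when an engine averages `average_fps` but is capped at `target_fps`."""
    return frame_budget_ms(target_fps) - frame_budget_ms(average_fps)


def presented_intervals_ms(render_times_ms: list[float], target_fps: float) -> list[float]:
    """Idealized frame pacing (a simplified vsync model, an assumption):
    rendering starts when the previous frame is shown, and each finished
    frame is held until the next refresh boundary."""
    interval = frame_budget_ms(target_fps)
    last_present = 0.0
    intervals = []
    for render in render_times_ms:
        finished = last_present + render
        # Round up to the next refresh boundary.
        present = math.ceil(finished / interval) * interval
        intervals.append(present - last_present)
        last_present = present
    return intervals


if __name__ == "__main__":
    print(round(frame_budget_ms(60), 2))  # 16.67
    print(round(headroom_ms(65, 60), 2))  # 1.28
    # Three frames under budget, then one 20 ms spike that misses a refresh.
    print([round(t, 1) for t in presented_intervals_ms([14.3, 15.4, 14.9, 20.0], 60)])
    # [16.7, 16.7, 16.7, 33.3]
```

At a 120fps target the same budget shrinks to about 8.3 ms, which is why high-framerate modes leave so little slack.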

Hey man, just so you know, I'm not trying to start any fights here, just trying to enlighten. I have a lot of experience doing GPU performance profiling, and I have experienced the power difference between essentially every GPU on the market. I can tell you straight up that while most casual users probably won't be able to tell the difference (and likely wouldn't have been able to tell the difference between their PS4 Pro and X1X), even the 20% difference between these two cards will be very noticeable in many titles.

To give you an example of what I mean, we can use PC GPU performance profiling. On PassMark, a sub-20% difference is essentially (and correct me if my math is wrong) the difference between an RTX 2080 and a 2070.

PassMark Video Card (GPU) Benchmarks - High End Video Cards (www.videocardbenchmark.net)

RTX 2080 - 19371

RTX 2070 - 16615
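Checking the math: the direction of the comparison matters. Measured against the 2070, the 2080's PassMark score is about 16.6% higher; measured against the 2080, the 2070 is about 14.2% lower. Either way it is under 20%. A minimal Python check (the helper names are illustrative):

```python
def percent_higher(a: float, b: float) -> float:
    """How much higher score `a` is than score `b`, as a percentage of `b`."""
    return (a / b - 1.0) * 100.0


def percent_lower(a: float, b: float) -> float:
    """How much lower score `b` is than score `a`, as a percentage of `a`."""
    return (1.0 - b / a) * 100.0


RTX_2080 = 19371  # PassMark score quoted above
RTX_2070 = 16615  # PassMark score quoted above

if __name__ == "__main__":
    print(f"2080 vs 2070: +{percent_higher(RTX_2080, RTX_2070):.1f}%")  # +16.6%
    print(f"2070 vs 2080: -{percent_lower(RTX_2080, RTX_2070):.1f}%")   # -14.2%
```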

We can look at any number of game benchmarks between these two, and immediately see performance differences (feel free to pick any PC game on the market and try this out).

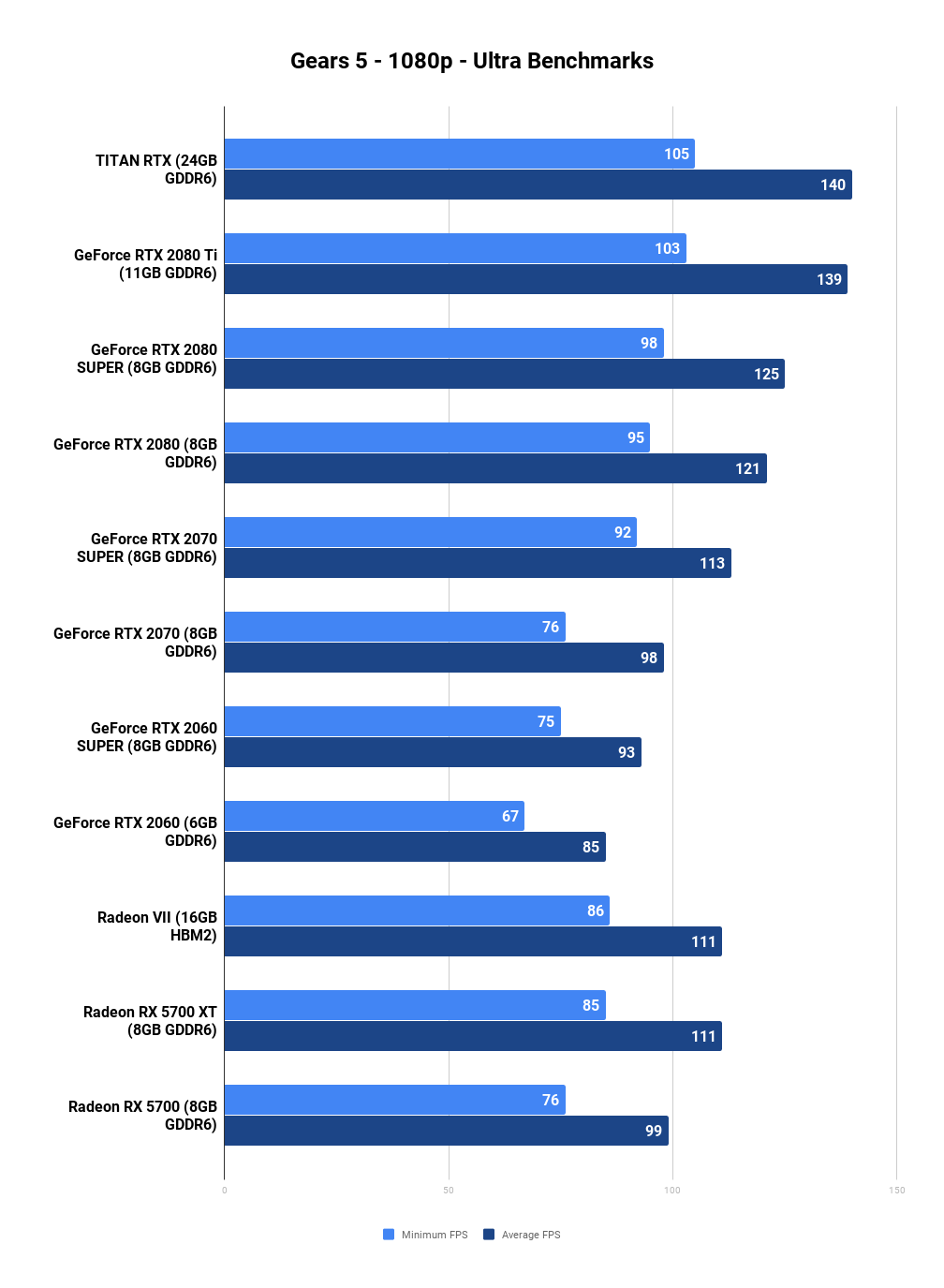

Take Gears 5 as an example:

(Chart source: TweakTown, https://www.tweaktown.com)

| Resolution | RTX 2080 avg (fps) | RTX 2080 min (fps) | RTX 2070 avg (fps) | RTX 2070 min (fps) |
|---|---|---|---|---|
| 1080p | 125 | 98 | 98 | 76 |
| 1440p | 89 | 72 | 71 | 57 |
| 4K | 51 | 43 | 40 | 33 |

If you feel the difference shown above won't matter to you, then fair enough, more power to you. In this coming generation, though, I'm assuming we are going to see developers target all three of these scenarios, sometimes on a regular basis.

As you can see, the gap in absolute frames per second grows as the resolution goes down (27fps at 1080p, 18fps at 1440p, 11fps at 4K), even though the relative gap in average framerate stays at roughly 25-28%. For games that target 120fps at 1080p or 1440p (this could be a popular choice when using resolution scaling), PS5 will need to make up a significant performance difference by adjusting graphical settings, tweaking resolution, or whatever else developers can do.
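To separate the absolute and relative gaps in the Gears 5 numbers above, here is a short Python sketch that simply re-tabulates the quoted TweakTown figures (the data layout and function name are illustrative):

```python
# Gears 5 (average, minimum) fps, as quoted from the TweakTown chart above.
GEARS5_FPS = {
    "1080p": {"RTX 2080": (125, 98), "RTX 2070": (98, 76)},
    "1440p": {"RTX 2080": (89, 72), "RTX 2070": (71, 57)},
    "4K": {"RTX 2080": (51, 43), "RTX 2070": (40, 33)},
}


def gaps(table: dict, fast: str = "RTX 2080", slow: str = "RTX 2070") -> dict:
    """Absolute (fps) and relative (%) gaps between two cards at each resolution."""
    result = {}
    for resolution, cards in table.items():
        fast_avg, fast_min = cards[fast]
        slow_avg, slow_min = cards[slow]
        result[resolution] = {
            "avg_fps_gap": fast_avg - slow_avg,
            "avg_pct_gap": round((fast_avg / slow_avg - 1) * 100, 1),
            "min_pct_gap": round((fast_min / slow_min - 1) * 100, 1),
        }
    return result


if __name__ == "__main__":
    for resolution, g in gaps(GEARS5_FPS).items():
        print(resolution, g)
```

The average-fps gap comes out at 27, 18 and 11fps in absolute terms, but about 27.6%, 25.4% and 27.5% in relative terms, so the "bigger gap at lower resolution" is mostly an absolute-fps effect.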

Another way to measure this would be to compare graphics presets between the RTX 2070 and 2080 in games that expose them well (Gears 5, once again, does this incredibly well), to see how developers tend to prioritize visual settings to get the most out of a GPU.

I think a closer - if not perfect - comparison would be to use the existing Navi cards, and see how they scale with extra compute.

They don't scale quite as well as the RTX2070 to 2080 does in Gears 5 - there you're seeing a real world gain of up to 80% of the compute ALU difference between the two chips.

If you compare the 5700 XT to the 5700 - looking at it across 12 games at max settings at 1440p and 4K - you realise only about 60% of the paper compute difference. 23% higher teraflops is typically buying you ~13% higher framerate, for the same resolution/settings.
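The "realised fraction" here is just the performance gain divided by the paper compute gain. A tiny Python sketch using the ~23% TFLOPs and ~13% framerate figures from this post (the helper name is hypothetical):

```python
def realized_fraction(perf_gain_pct: float, compute_gain_pct: float) -> float:
    """Share of a paper compute advantage that shows up as extra framerate."""
    return perf_gain_pct / compute_gain_pct


if __name__ == "__main__":
    # RX 5700 XT vs RX 5700: ~23% more TFLOPs buying ~13% more fps.
    print(f"{realized_fraction(13, 23):.0%}")  # 57%
```

That is the "about 60%" quoted above; the same ratio applied to the Gears 5 2070/2080 numbers is what gives the higher ~80% figure.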

Of course in both of these cases - the 2070/2080, 5700XT/5700 - bandwidth is the same among all the GPUs in question. XSX will have a bandwidth advantage that scales relatively closely to its compute advantage. However I think it's interesting that even at the lower 1440p resolution, where presumably games may be less bandwidth bound, the 5700XT doesn't do much relatively better vs the 5700 than it does at 4K. It's a pretty steady difference. That's another difference vs the nVidia chips, there's not quite the same apparent scaling of perf difference between lower and higher resolutions.

It will be interesting to see if RDNA2 can scale better with more compute (and bw), and how a faster-clocked top/tail of the gpu pipeline might affect the real differences too. I think those benches are a good example, though, of how all else being equal, real world performance doesn't scale perfectly with compute increases.

Name the people making such an argument. Too often, posters like yourself come into the middle of arguments and start nursing lines they themselves haven't sourced. Clocks are some of the "easiest" parts of a GPU/CPU to adjust.

You are saying they upped the clocks to be competitive with the XsX. Obviously you are one of the people I'm talking about.

I never said it was a last minute thing. Re-read the comment. I said it was a necessity because of where 2GHz/9.2TF would have left them in the next-gen conversation. It's about perception; marketing 101 teaches this.
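For reference, the teraflop figures in this argument come from a simple formula: for RDNA GPUs, peak FP32 TFLOPs = CUs × 64 shaders × 2 FLOPs per clock (fused multiply-add) × clock in GHz / 1000. A short Python sketch using the 36-CU count from this thread, plus the Xbox Series X's published 52 CUs at 1.825 GHz (that last figure is not stated in the thread):

```python
def rdna_tflops(compute_units: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPs: 64 shaders per CU, 2 FLOPs per shader per clock (FMA)."""
    return compute_units * 64 * 2 * clock_ghz / 1000.0


if __name__ == "__main__":
    for clock in (1.8, 2.0, 2.23):
        print(f"36 CUs @ {clock} GHz: {rdna_tflops(36, clock):.2f} TF")
    print(f"52 CUs @ 1.825 GHz: {rdna_tflops(52, 1.825):.2f} TF")
```

So 1.8GHz, 2GHz and 2.23GHz on 36 CUs correspond to roughly 8.3, 9.2 and 10.3TF, versus about 12.15TF for 52 CUs at 1.825GHz.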

Maybe you haven't spent any time overclocking in the PC realm, but 2.2GHz is obscene for GPU clocks. Heat is the enemy of component life, and the higher your clocks, the more heat you produce. I do not believe Cerny opted for 2.2GHz from the get-go. In the PC space, you're hard-pressed to overclock that high on discrete GPUs, let alone consoles. We'll see how cooling works for PS5, but I have my doubts.
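The "more clock, more heat" point follows from the usual dynamic-power relation P ≈ C·V²·f. If you additionally assume voltage has to rise roughly in proportion to frequency near the top of the curve (a common simplification, not a measured figure for these chips), power grows roughly with the cube of the clock. A rough Python sketch under that assumption:

```python
def relative_dynamic_power(new_clock_ghz: float, base_clock_ghz: float) -> float:
    """Dynamic power ratio under the simplifying assumption V ∝ f, so P ∝ f**3."""
    return (new_clock_ghz / base_clock_ghz) ** 3


if __name__ == "__main__":
    for clock in (2.0, 2.23):
        ratio = relative_dynamic_power(clock, 1.8)
        print(f"{clock} GHz vs 1.8 GHz: ~{ratio:.2f}x the dynamic power")
```

Under this model, 2.0GHz costs about 1.37x and 2.23GHz about 1.90x the dynamic power of 1.8GHz. Real chips have static leakage and non-linear voltage curves, so treat these as illustrations of the trend only.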

It's ridiculous to assume that Sony went for, as you call it, an obscene clock just because of the XSX. They would have had to rework the whole cooling system and casing, test everything again, and so on; you don't do that on a whim. Over time, as chips, cooling and so on got better, they looked at how high they could go with their approach, which differs from what PCs and other consoles do and has been explained in depth not only by Cerny but also by several members in this very thread.

What you're doing is guesswork and only that. If you're right, we will most likely see lots of PS5s break pretty early due to overheating, which would cause far more damage to Sony's reputation and sales over the course of the gen than an 8TF console would have. Now, what are the odds that Sony designed their system the way you claim?

Upping your speed to be competitive and upping your speed at the last minute is not the same thing. Which did I say? Be specific.

Dude, it comes down to the same thing. Sony didn't choose to go for such high clocks after MS released the info that the XsX is 12TF.

You are kind of saying it: that they changed because of marketing and perception vs the XsX. That is not how things work; you don't compromise the design and reliability of a product you have to manufacture and sell in the millions for years because it doesn't fit marketing.

If it was that easy to adjust, MS could easily boost theirs and widen the gap again.

Also important to observe how one gets to the theoretical performance. Both the 2080 and the 5700XT are clocked faster than 2070 and 5700, respectively, and perf scales better with clocks than with extra logic.
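One way to see how much of the 5700 XT's paper advantage comes from clocks versus extra CUs is to split the TFLOPs ratio into its two factors. This sketch uses AMD's published reference specs (40 CUs at 1905 MHz boost for the 5700 XT, 36 CUs at 1725 MHz for the 5700), which are not quoted in the thread; sustained in-game clocks differ:

```python
import math

# AMD reference specs (boost clock in MHz); real in-game clocks vary.
RX_5700_XT = {"cus": 40, "boost_mhz": 1905}
RX_5700 = {"cus": 36, "boost_mhz": 1725}


def split_compute_ratio(fast: dict, slow: dict) -> dict:
    """Split a TFLOPs ratio into CU and clock factors (TFLOPs ∝ CUs × clock)."""
    cu_ratio = fast["cus"] / slow["cus"]
    clock_ratio = fast["boost_mhz"] / slow["boost_mhz"]
    total = cu_ratio * clock_ratio
    # Share of the (logarithmic) gain attributable to the clock difference.
    clock_share = math.log(clock_ratio) / math.log(total)
    return {"cu_ratio": cu_ratio, "clock_ratio": clock_ratio,
            "total_ratio": total, "clock_share": clock_share}


if __name__ == "__main__":
    parts = split_compute_ratio(RX_5700_XT, RX_5700)
    print({k: round(v, 3) for k, v in parts.items()})
```

Roughly half of the ~23% paper gap comes from clocks rather than CUs, which is exactly why the scaling comparison is muddied.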

Thing is, Sony didn't just up the clocks like what MS did with the Xbox One back in 2013. Sony's whole design is based around a power management system that dynamically adjusts CPU and GPU clocks, and even though it's based on AMD technology, it is still different from the AMD chips that are on the market. So no, you don't just build a system like that out of the blue, and it's not just "adjusting the clocks".
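To illustrate the general idea of a fixed power budget with variable clocks (this is a toy model, not Sony's actual algorithm, and every number in it is hypothetical): estimate power from workload activity and clock, then pick the highest clock that fits the budget. Heavier workloads get a slightly lower clock; lighter ones can run at the cap.

```python
def estimated_power_w(activity: float, clock_ghz: float, watts_per_ghz3: float) -> float:
    """Toy power model: activity (0..1) times a constant times clock cubed."""
    return activity * watts_per_ghz3 * clock_ghz ** 3


def pick_clock_ghz(activity: float, budget_w: float, clocks_ghz: list[float],
                   watts_per_ghz3: float) -> float:
    """Highest available clock whose estimated power fits within the budget."""
    for clock in sorted(clocks_ghz, reverse=True):
        if estimated_power_w(activity, clock, watts_per_ghz3) <= budget_w:
            return clock
    return min(clocks_ghz)  # Fall back to the lowest state.


if __name__ == "__main__":
    steps = [2.23, 2.15, 2.0, 1.8]  # hypothetical clock states
    for activity in (0.8, 1.0):
        print(activity, pick_clock_ghz(activity, budget_w=180.0,
                                       clocks_ghz=steps, watts_per_ghz3=18.0))
```

With these made-up parameters, a workload at 80% activity runs at 2.23GHz while a worst-case workload drops to 2.15GHz, which shows why such a design has to be planned around its power budget from the start.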

Some people love overlooking details these days when they try comparing the PS5 to the XsX but have no issue sticking on one flashy TF number.

Good spot, that's another complicating factor in the comparison for sure.

It would be interesting if someone could 'somehow' replicate the setups in PS5/XSX with Navi cards, and investigate the perf scaling on PC with them. That might be easier said than done for the moment though.

Exactly.

I'll admit some things I assumed turned out wrong. In the end, it seems Sony really did factor in an affordable price. We shall see when they reveal the price.

Not to mention that besides the extra CUs, the 5700XT also has a clock advantage over the 5700. Since we know that clock increases improve performance more linearly than the extra parallelism of more CUs, the gap between theoretical performance advantage and actual performance advantage would be even higher.

To come back to the example of @theAllseeingEYE, if you compare TFLOPs instead of benchmark results (and the entire discussion here has been about what TFLOPs translate to, mind you!), you get a 34-38% advantage for the 2080 (depending on which clock speeds you're comparing), but only an 18% advantage in games. You've actually proven our point here.

Edit: Beaten to it lol.
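The 34-38% TFLOPs range depends on which clocks you plug in. For Turing, peak FP32 TFLOPs = CUDA cores × 2 FLOPs per clock × clock. Using Nvidia's published core counts and clocks (2944 vs 2304 cores; base 1515/1410 MHz, reference boost 1710/1620 MHz, Founders Edition boost 1800/1710 MHz), none of which are stated in the thread, a quick Python check:

```python
def turing_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Peak FP32 TFLOPs: each CUDA core does 2 FLOPs (one FMA) per clock."""
    return cuda_cores * 2 * clock_mhz / 1e6


CLOCKS_MHZ = {  # (RTX 2080, RTX 2070)
    "base": (1515, 1410),
    "reference boost": (1710, 1620),
    "Founders Edition boost": (1800, 1710),
}

if __name__ == "__main__":
    for label, (clk_2080, clk_2070) in CLOCKS_MHZ.items():
        gap = turing_tflops(2944, clk_2080) / turing_tflops(2304, clk_2070) - 1
        print(f"{label}: 2080 has {gap:.1%} more TFLOPs")
```

That gives roughly 34.5-37.3% on paper; against an 18% average gap in games, about half of the paper advantage is realised.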

"Clearly, Sony felt the need to up clocks to raise teraflops so they would compare more favorably because both of the previous configurations are a major perception blow in the face of the XSX."

The burden of proof for this statement is on you

"No amount of denial from you lot will change this. Sony had no choice to be aggressive with clocks because the machine is lacking in grunt comparatively."

You sound very confident. Let's see the receipts.

Well you know, at the last minute they decided to go with their cooling solution.

That V shaped dev kit? Last minute design decision.

Yup, it checks out.

I never said anything about them choosing their clocks after Microsoft released the XSX specifications on March 16, 2020, or even in February. Look at how ridiculous that reads. This would have been a decision made many months ago.

But again I argue.

1.8GHz is not a "low" clock speed.

2GHz is high.

2.2GHz is nuts.

We're talking about consoles here; they have a lower TDP than discrete GPUs by design. I reiterate what I originally stated in my discussion with Phoenix: Sony wanted to eke out every ounce of power possible from a 36CU chip while also being aware of public perception of the power differential.

Some of you behave as if Sony operates in a bubble. They would have been aware of the rumors of a 12TF next-gen Xbox for some time now. It's not something they woke up to overnight and reacted to, but you can be sure they factored it into their design decisions for PlayStation 5.

The real gap in the Gears example on the 2080/2070 is higher than 18%; it's more like 25-30%-ish, except at 4K where I guess bandwidth bottlenecks kick in. That's about an 80% realisation of the compute difference.

But scaling seems a bit different to that example on Navi cards - it doesn't seem to be as good - and there isn't the same predictable change with a looser bandwidth bound.

All this is to say: be cautious about drawing too many conclusions about performance on XSX/PS5, as the variables change a bit again. But PC Navi GPUs would be a closer point of comparison if one is minded to look at PC GPUs for guidance.

My post has both 1440p and 4k performance numbers, I assume that is what you are asking for?

Also, since people generally either have 1080p or 4k TVs, I think it's possible, especially on PS5, that developers targeting 120fps could reduce resolution down that far (hopefully with some sort of temporal reconstruction to bring it back up towards 4k or 1440p).

These are just raw performance numbers, though. I am certain that we will see developers use every trick in the book to get the most out of both GPUs.

Ugh, I misread, sorry about that.

I mean, the difference is definitely there, and if they want to lock resolution at 4K, there will be noticeable framerate differences. I think it also kind of shows, though, that if you use dynamic resolution, the differences are unlikely to be significant.
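A common way dynamic resolution works is to measure GPU frame time and scale the render resolution toward the frame budget. The sketch below is a minimal, assumption-heavy version: it treats GPU time as proportional to pixel count, which is only roughly true in practice, and the function name and clamp values are hypothetical. For example, a GPU taking 20 ms for a native-resolution frame against a 16.7 ms (60fps) budget would need about 91% of native resolution per axis, or roughly 17% fewer pixels.

```python
import math


def next_render_scale(current_scale: float, gpu_time_ms: float, target_ms: float,
                      min_scale: float = 0.5, max_scale: float = 1.0) -> float:
    """Per-axis render scale for the next frame.

    Assumes GPU time is proportional to pixel count (scale squared), so the
    pixel count is scaled by target/measured and the per-axis scale by its
    square root, then clamped to [min_scale, max_scale].
    """
    pixel_ratio = target_ms / gpu_time_ms
    proposed = current_scale * math.sqrt(pixel_ratio)
    return max(min_scale, min(max_scale, proposed))


if __name__ == "__main__":
    budget = 1000.0 / 60
    scale = next_render_scale(1.0, gpu_time_ms=20.0, target_ms=budget)
    print(f"per-axis scale {scale:.3f}, about {round(2160 * scale)} lines of a 2160p target")
```

A modest per-axis drop like this is often hard to spot with good reconstruction, which is the point about dynamic resolution narrowing visible differences.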

Oh, I was just using the TechPowerUp chart that gives one big average for all the games tested on a given card.

Which rumors? The announcement of the XSX's specs that MS made in December?

No doubt it was deliberate

Highest power per cost, high emphasis on speed (clocks). You can see that approach everywhere in the design. They had a certain power footprint and wanted to fab the smallest chip possible at the highest speed to bring down cost and increase volume of chips they could produce

2.23 GHz isn't too crazy with RDNA2

They also prioritized SSD speed as something unique they can do that will radically improve memory management; basically, that component is their 8GB GDDR5 announcement.

You don't seem to understand that even "many months ago" is very recent when it comes to the design of machines like this. System design takes a long long time.

They obviously planned to go with very high clocks from the start or else they would not have been able to do so since it requires some serious cooling. Besides the cooling it's obvious that the way their variable speeds and their set power budget works is to allow for speeds like this.

The one obvious thing is that yes, Sony wanted to get as much performance as possible out of a 36CU GPU, and they have obviously achieved that goal. Why did they go with 36 CUs? Most likely with affordability in mind.

It's extremely logical... but not last minute. More like last year, given the rumors of a 2019 release and the way they went ahead with pushing the GPU/CPU when that approach is not the SAFE way to build a console.

Also, nobody can say this is a last minute thing. The way these are built is a YEARS-long process with commitments to suppliers.

I'd also like to clarify that I think it's a smart move on their part if their cooling is good. I question it, though. These are not overclocked home PCs; they're mass-market consoles that can't afford to overheat.

You are kind of contradicting yourself with your first and last lines.

And what do you mean it's not SAFE? It might not be the usual way to build but that does not make it unsafe? They have obviously designed and built their console with these high frequencies in mind.

I went back and fixed it to clarify.

I believe this is a smart FIX that Sony has.

I believe they were pushed into this corner by MS.

It's definitely up to Sony to prove that their cooling is good enough and hopefully silent enough this time. Having watched Cerny say that they were aware of the cooling and sound problems with the PS4, and that they wanted to fix these things, I think we can assume things will be fine.

Yes, Windows Central posted a rumor in early December 2019 that Project Scarlett (Xbox Series X) would have a 12TF GPU. Sony would likely have known that beforehand. But I wasn't in those meetings with Mark Cerny and the team, so I can't say with 100% certainty when they found out or how they reacted to it.

Both Xbox and PlayStation engineers are testing these systems on the daily, making tweaks and adjustments. Why is it silly for me to assume that Sony obviously knew they had to up the clocks to be competitive, but it's not silly to assume that 2.2GHz was all according to keikaku from the very beginning?

Or maybe we're both off.

Maybe Sony planned to release a console in 2019 that had lower clocks but still needed an above-average cooling solution, had to push their timeline back to 2020, and determined more speed was required, especially in light of internal news of their competitor's GPU.

Who knows. My biggest concern with PS5 is the heat that a high-speed SSD and a highly-clocked GPU will produce in tandem, in close proximity, in a small space. I've dealt with a noisy PS4 Pro with cheap cooling before trading in for a new, quieter model. Cerny said they were aware of noise and sound issues with PS4. We'll see how PS5 addresses this.

I would imagine everyone wants to eke out every last bit of performance from their product. It's a lot more likely that they both set out with fairly specific performance and price targets. Saying Sony had no choice but to go for 10TF is like saying MS had no choice but to go for 12TF.

You realize MS officially announced the XSX in December? With 12TF, an NVMe SSD, and so on? If all you do is guesswork, you should at least inform yourself about the facts.

Leak: https://www.windowscentral.com/xbox-scarlett-anaconda-lockhart-specs (December 9, 2019)

Announcement: https://www.windowscentral.com/next-gen-xbox-project-scarlett-revealed-xbox-series-x (December 12, 2019)

Not to mention we were discussing this 12TF number for quite some time in the next-gen speculation threads. Odd, I don't remember seeing you there. Yet you assume that I'm doing "guesswork."

Sony might not have known what kind of frequencies they would end up with (Cerny stated that they tried to get higher but it got limited by another part) but again it's obvious that they were aiming for high frequencies from the start.

Were they aiming for 2.23GHz from the start? I don't know. Maybe they were even aiming higher.

Did they up their frequencies because of inside spy info on the XsX? I very, very much doubt it.

In the next-gen threads you guys were talking about all sorts of things, lmao. Don't make it sound like people were in the know about the 12TF or some nonsense now. It's OK to admit you jumped the gun with your initial comment. FYI, I didn't post in the next-gen thread, but I read it basically daily, lol.

Yes, you ARE doing guesswork.

I think a closer - if not perfect - comparison would be to use the existing Navi cards, and see how they scale with extra compute.

They don't scale quite as well as the RTX2070 to 2080 does in Gears 5 - there you're seeing a real world gain of up to 80% of the compute ALU difference between the two chips.

If you compare the 5700 XT to the 5700 - looking at it across 12 games at max settings at 1440p and 4K - you realise only about 60% of the paper compute difference. 23% higher teraflops is typically buying you ~13% higher framerate, for the same resolution/settings.
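The "about 60% of the paper difference" figure is just the ratio of the two percentage gains. A minimal sketch using only the 23% and 13% figures quoted above; the helper name `scaling_efficiency` is hypothetical:

```python
def scaling_efficiency(compute_gain_pct: float, fps_gain_pct: float) -> float:
    """Fraction of a paper compute advantage that shows up as extra framerate.

    Both arguments are percentage gains of the faster card over the slower one.
    """
    if compute_gain_pct <= 0:
        raise ValueError("compute gain must be positive")
    return fps_gain_pct / compute_gain_pct


# 5700 XT vs 5700: ~23% more TFLOPs buying ~13% more fps (figures from the post)
eff = scaling_efficiency(23.0, 13.0)
print(f"{eff:.0%} of the compute difference realised")
```

Strictly this works out to roughly 57%, which the post rounds to "about 60%".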

Of course, in both of these cases (the 2070/2080 and the 5700 XT/5700), bandwidth is the same among all the GPUs in question. XSX will have a bandwidth advantage that scales relatively closely to its compute advantage. However, I think it's interesting that even at the lower 1440p resolution, where games are presumably less bandwidth-bound, the 5700 XT doesn't do much better relative to the 5700 than it does at 4K. It's a pretty steady difference. That's another difference vs the Nvidia chips: there isn't quite the same apparent scaling of the perf difference between lower and higher resolutions.

It will be interesting to see if RDNA2 can scale better with more compute (and bandwidth), and how a faster-clocked top/tail of the GPU pipeline might affect the real differences too. I think those benches are a good example, though, of how, all else being equal, real-world performance doesn't scale perfectly with compute increases.

Unfortunately, there aren't any Navi cards on the market that give as direct a performance comparison as the RTX 2080 vs the 2070, imo. I don't think the RX 5700 vs 5700 XT is a great example, since they are essentially identical cards, one just binned lower. They have identical CU counts, which is why I went with the Nvidia comparison. There are much greater architectural differences between the PS5 and XSX versions of Navi. I'm really looking forward to seeing more about Big Navi and how the next-gen AMD (and Nvidia) cards stack up.

It's not like it can be proven either way. Believe what you want to believe. Any speculation is fair game.

Dude, it comes down to the same thing. Sony didn't choose to go for such high clocks after MS released the info that the XsX is 12TF.


What you're doing is guesswork. You say Sony upped their clocks because of the XSX and for marketing reasons, yet you have no receipts that your claim is true. You even go as far as to say that no denial is going to change that, as if your guesswork were fact. You're not stating facts; you made up a fictional story that fits your narrative, that's all.

Having watched Cerny say that they were aware of the cooling and sound problems with the PS4...

At a time when most PR talk (in any domain) ignores problems or mistakes, I was glad he acknowledged it.

He even "bragged" about the cooling solution, so I have big hopes for a silent PS5. My launch Pro is really, really loud.


Unfortunately, there aren't any Navi cards on the market that give as direct a performance comparison as the RTX 2080 vs the 2070, imo. I don't think the RX 5700 vs 5700 XT is a great example, since they are essentially identical cards, one just binned lower. They have identical CU counts, which is why I went with the Nvidia comparison.

There's a CU difference: 36 vs 40. Of course, that's not like the CU difference on the consoles. The 5700 XT's FLOP advantage also comes from higher clocks, so it's not like the PS5/XSX situation, where the advantage comes purely from the CU increase and there's an inverse relationship on clock.
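To make the distinction concrete: peak FP32 throughput is proportional to CU count times clock, so a TFLOPs ratio factors exactly into a CU ratio and a clock ratio. A minimal sketch, assuming AMD's published boost clocks for the desktop cards (1725 MHz for the RX 5700, 1905 MHz for the RX 5700 XT) and the announced peak console clocks (2.23 GHz PS5, 1.825 GHz XSX); these clock figures are assumptions added here, not numbers from the posts above.

```python
from typing import NamedTuple


class Gpu(NamedTuple):
    name: str
    cus: int
    clock_ghz: float  # assumed peak/boost clock


def ratios(fast: Gpu, slow: Gpu) -> tuple[float, float, float]:
    """Return (CU ratio, clock ratio, TFLOPs ratio) of `fast` over `slow`.

    FP32 TFLOPs is proportional to CUs * clock, so the TFLOPs ratio is
    exactly the product of the other two.
    """
    cu_ratio = fast.cus / slow.cus
    clock_ratio = fast.clock_ghz / slow.clock_ghz
    return cu_ratio, clock_ratio, cu_ratio * clock_ratio


pairs = [
    (Gpu("RX 5700 XT", 40, 1.905), Gpu("RX 5700", 36, 1.725)),
    (Gpu("XSX", 52, 1.825), Gpu("PS5", 36, 2.23)),
]
for fast, slow in pairs:
    cu, clk, tf = ratios(fast, slow)
    print(f"{fast.name} vs {slow.name}: CUs x{cu:.3f}, clock x{clk:.3f}, TFLOPs x{tf:.3f}")
```

For the desktop pair, both factors favour the 5700 XT (about x1.11 on CUs and x1.10 on clock). For the consoles, the XSX's ~x1.44 CU advantage is partly offset by a ~x0.82 clock ratio, netting roughly x1.18.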

Granted though, of course these aren't perfect analogies either.

We might actually get a closer comparison with the upcoming mobile Navi chips vs last year's (5600M vs 5500M). Last year's went narrow/fast, while this year's go wider/slower, and they also have a bandwidth advantage over last year's that is similar-ish to XSX's over PS5. Of course, the performance levels aren't remotely the same, the CU and clock ratios aren't the same... and the 5600M will have twice the memory, which will undoubtedly affect performance... but it might be a basis for a closer look at how Navi scales narrower/faster vs wider/slower, if you can remove memory footprint from the equation.

I said no denial was going to change the fact that the PS5 is lacking in grunt comparatively (52CU vs 36CU). Those were my exact words. And saying that the PS5's high core clock is a mitigating move on Sony's part is no more guesswork than NXGamer surmising that the PS5 OS will only use half or less of GDDR6.

No, your exact words were:

An actual falsehood. You think Sony clocked the PS5 GPU up so high for kicks and giggles?

36 CUs vs 52 CUs is a large, noticeable gap. It's not like the XSX GPU's clock of 1.8GHz is low.

At 1.825GHz (the XSX's clock), PS5 comes out to 8.4TF

At 2.0GHz, PS5 comes out to 9.2TF
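Both figures follow from the usual peak-FP32 formula for RDNA: CUs × 64 shader lanes × 2 FLOPs per clock (one fused multiply-add) × clock. The sketch below reproduces them; 8.4TF corresponds to the XSX's 1.825GHz, while a flat 1.8GHz works out to about 8.3TF. The function name is illustrative.

```python
def rdna_tflops(cus: int, clock_ghz: float) -> float:
    """Peak FP32 TFLOPs for an RDNA GPU: CUs * 64 lanes * 2 FLOPs (FMA) * clock."""
    lanes_per_cu = 64
    flops_per_lane_per_clock = 2  # one fused multiply-add counts as two FLOPs
    # cus * lanes * flops * GHz gives GFLOPs; divide by 1000 for TFLOPs
    return cus * lanes_per_cu * flops_per_lane_per_clock * clock_ghz / 1000.0


for clock in (1.8, 1.825, 2.0, 2.23):
    print(f"PS5 (36 CUs) at {clock} GHz: {rdna_tflops(36, clock):.2f} TF")
print(f"XSX (52 CUs) at 1.825 GHz: {rdna_tflops(52, 1.825):.2f} TF")
```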

Clearly, Sony felt the need to up clocks to raise teraflops so they would compare more favorably, because both of the previous configurations are a major perception blow in the face of the XSX.

No amount of denial from you lot will change this. Sony had no choice but to be aggressive with clocks because the machine is lacking in grunt comparatively.

When you said that no denial is going to change the fact that the XSX has more power than the PS5, who were you talking to? I don't see the post you quoted there, or anyone here arguing that the PS5 is stronger or equal in power.

I never said it was a last-minute thing. Re-read the comment. I said it was a necessity because of where 2GHz/9.2TF would have left them in the next-gen conversation. It's about perception; marketing 101 teaches this.

Maybe you haven't spent any time overclocking in the PC realm, but 2.2GHz is obscene for GPU clocks. Heat is the enemy of component life, and the higher your clocks, the more heat you produce. I do not believe Cerny opted for 2.2GHz from the get-go. In the PC space, you're hard-pressed to overclock that high on discrete GPUs, let alone consoles. We'll see how cooling works for PS5, but I have my doubts.

I don't think back in 2016 (or whenever they started making the PS5) Cerny said to his team, "OK, let's get this thing to 2.2GHz" either.

I do believe that being able to clock as high as 2.2GHz was a result of whatever work they put into their cooling solution. So basically, we are at an impasse. If the PS5 comes out sounding like a jet engine and we have overheating issues, then you are right. If it comes out whisper quiet with no overheating issues, then I guess we can put the whole "clocked that high for PR's sake" thing to bed.

This is not a Pro vs 1X situation; the relative difference is much smaller, and that's just the GPU part. The 1X had a substantial advantage in RAM, allowing games to have higher-resolution textures, which further extended the perceptible gap in IQ and sharpness.

This time it's going to be mostly a resolution difference, and it's a bit early to talk about graphical features and settings. RT will scale with the resolution difference, so I'm not sure where the "advantage" will come from; its RT performance is also 18-20% better. VRS and Mesh Shaders are RDNA2 features (just like a lot of other features MS talked about).

Also, there is a reason why Sony decided to dedicate so much silicon to custom storage and IO hardware instead of going with a larger GPU. We'll have to wait and see the underlying benefits there.

I don't want to get into a back and forth. Both are the same architecture; one is a class above the other. There will be differences. MS also has a huge advantage IMO with ray tracing via DXR 1.1. They basically already have two years' experience with the first iteration of DXR.

VRS is going to be huge next-gen; people keep understating this. We're talking something like a 30% improvement in frame rates when it's used. Factor in that XBSX is using DX12 Ultimate, which supports Tier 2 VRS. MS also has a huge advantage on the API level IMO.

The SSD is not going to make up for the lower CU count that PS5 has. Worlds might be drawn in further, and an object may stay on screen further into the distance. Loads may be a few seconds quicker than on XBSX. PS5 is less capable, and there will be a difference.

That being said, I have no doubt that Sony first-party will showcase some fantastic-looking games. Time to accept the truths and just await the games Sony will show. I wholeheartedly believe both companies are going to battle for our gaming dollars. Great games will come from both consoles!

When exactly do you think Sony found out about the XSX TF number?


Mind cluing us in on the MHz sweet spot for RDNA2?

Why is every PS5 thread a shit show?

Not to mention that besides the extra CUs, the 5700XT also has a clock advantage over the 5700. Since we know that clock increases improve performance more linearly than the extra parallelism of more CUs, the gap between theoretical performance advantage and actual performance advantage would be even higher.

To come back to the example of @theAllseeingEYE, if you compare TFLOPs instead of benchmark results (and the entire discussion here has been about what TFLOPs translate to, mind you!), you get a 34-38% advantage for the 2080 (depending on which clock speeds you're comparing), but only an 18% advantage in games. You've actually proven our point here.

Edit: Beaten to it lol.

As I mentioned above, the 5700 vs 5700 XT is a poor comparison for the XSX and PS5 GPUs. The 5700 vs 5700 XT is likely much more indicative of the performance advantage Sony gets from overclocking the PS5 GPU. If there are points where the performance gap between the two is narrower than expected, it's likely because they are essentially identical cards. That also shows that overclocking won't help performance in every scenario, which will cause a bigger performance divide between the systems at times.

As for your TFLOPs point, I think you're right. Even though it's still not a perfect example, perhaps the 2070 Super vs the 2080 Super is a better comparison:

2080 Super: 11.2 TFLOPs, 48 SMs (an SM is comparable to an AMD CU), 496GB/s memory bandwidth

2070 Super: 9.1 TFLOPs, 40 SMs, 448GB/s memory bandwidth

Gears 5 benchmarked at 1080p, 1440p, and 4K (average / minimum fps):

1080p: 2080 Super 125 / 98, 2070 Super 113 / 92

1440p: 2080 Super 93 / 76, 2070 Super 82 / 67

4K: 2080 Super 53 / 45, 2070 Super 47 / 39
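A small sketch that turns the Gears 5 figures above into percentage gaps, so they can be set against the ~23% TFLOPs gap (11.2 vs 9.1). The dictionary layout and helper names are illustrative only.

```python
# Gears 5 (average, minimum) fps quoted above, 2080 Super vs 2070 Super.
BENCH = {
    "1080p": {"2080S": (125, 98), "2070S": (113, 92)},
    "1440p": {"2080S": (93, 76), "2070S": (82, 67)},
    "4K": {"2080S": (53, 45), "2070S": (47, 39)},
}
TFLOPS = {"2080S": 11.2, "2070S": 9.1}


def pct_gain(fast: float, slow: float) -> float:
    """Percentage by which `fast` exceeds `slow`."""
    return (fast / slow - 1.0) * 100.0


paper = pct_gain(TFLOPS["2080S"], TFLOPS["2070S"])
print(f"Paper TFLOPs gap: {paper:.1f}%")
for res, cards in BENCH.items():
    avg_gap = pct_gain(cards["2080S"][0], cards["2070S"][0])
    min_gap = pct_gain(cards["2080S"][1], cards["2070S"][1])
    print(f"{res}: avg +{avg_gap:.1f}%, min +{min_gap:.1f}%, "
          f"{avg_gap / paper:.0%} of paper gap (avg)")
```

On these numbers the average-fps gap lands around 11-13% at every resolution, roughly half of the ~23% TFLOPs gap.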

That is still quite a performance gap, and it doesn't take into account the greater CU difference between PS5 and XSX, the PS5's variable clocks, the XSX's wider memory bandwidth, or the XSX's CPU advantages.

My point, once again, is that once developers start tweaking visual settings, they can and will choose to prioritize visual quality over resolution and framerate in many scenarios. I also assume MS is going to try to heavily leverage ray tracing, lighting, and performance-enhancing graphics features such as variable rate shading and temporal resolution. For games like Metro, games from Remedy and Epic Games, and teams that want to leverage ray tracing and lighting, we will also see greater performance gains on the XSX.

Don't get me wrong, though: I'm not here to convince anyone that the PS5 doesn't sound like a great system. I think it's a very exciting system, and I hope the SSD speeds amount to some great features. Price will be a big selling point for both systems.


I went back and fixed it to clarify.

I believe this is a smart FIX that Sony has.

I believe they were pushed into this corner by MS.

OK... here is a thought. It may be totally crazy, but here goes.

What if you are right? What if we are both right? Sony always had a good idea of what MS was going to do: they were going to build a bigger APU than Sony. So Sony figured they would just stick to their smaller APU plan and clock as high as possible. In 2019, as high as possible with their cooling solution meant clocks of up to 2GHz. But they didn't stop there; they kept working on it and ultimately got it up to 2.2GHz, again based on their cooling solution.

How is any of that a reaction to MS? How can the entire basis of your argument be that because it's never been done before, they were forced to do it and it's a "fix" as opposed to an engineering accomplishment?

But let's even say it was a "fix": if they still managed to accomplish it, then what does it matter? Is the rez dirtier or the fps choppier because they are doing the blasphemous thing of running a chip at 2.2GHz?

'Cause there are people who seem to insist on making the point that the significant difference in power between the two consoles would have PS5 running at 1080p to the XSX's 4K. Well, if you read some of what they are saying, that's how it sounds.


Oh, and these people also believe Sony is lying and just desperately trying to save face... and yet not a single game has been shown on the platform yet.


Well, Cerny DID say that they had to cap the clocks at 2.23GHz or else the logic would start failing (consistent with what we've seen with some extreme OCing), so it seems 2.23GHz doesn't really max out their power supply and cooling setup. They probably had some higher number in mind.


With the way some have hyped the gap, if XSX games don't end up with a 40%+ advantage in resolution, better frame rates, and better ray tracing, I'll consider it a pretty big disappointment.

And if it is whisper quiet and cool, I will gladly accept that my hypothesizing was in vain and be pleased to play the next Spider-Man and God of War in peace. AND give Mark and his team praise for clearly going above and beyond what I thought was possible in the console space.


Or they had no number in mind and just kept pushing the clocks until they had to finalize everything.

The 2080 has more shader processors than the 2070, though: 46 CUs' worth in the 2080 and 36 in the 2070. That roughly translates to a 28% difference in CUs/shader processors, which is in line with their performance difference in games.

Also important to observe how one gets to the theoretical performance. Both the 2080 and the 5700 XT are clocked faster than the 2070 and 5700, respectively, and perf scales better with clocks than with extra logic.
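Splitting the 2080/2070 TFLOPs gap into its two factors makes this visible. A sketch assuming Nvidia's reference (non-Founders Edition) specs: 2944 CUDA cores at a 1710 MHz boost for the 2080, and 2304 at 1620 MHz for the 2070. Each Turing SM holds 64 FP32 cores, which gives the 46 and 36 counts mentioned above. The spec values and helper name are assumptions added for illustration.

```python
CORES_PER_SM = 64  # FP32 CUDA cores per Turing SM


def turing_tflops(cuda_cores: int, boost_mhz: float) -> float:
    """Peak FP32 TFLOPs: cores * 2 FLOPs per clock (FMA) * boost clock."""
    # cores * 2 * MHz gives MFLOPs; divide by 1e6 for TFLOPs
    return cuda_cores * 2 * boost_mhz / 1e6


# Assumed reference specs: (CUDA cores, boost clock in MHz).
rtx2080 = (2944, 1710)
rtx2070 = (2304, 1620)

sm_ratio = (rtx2080[0] // CORES_PER_SM) / (rtx2070[0] // CORES_PER_SM)
clock_ratio = rtx2080[1] / rtx2070[1]
tf_ratio = turing_tflops(*rtx2080) / turing_tflops(*rtx2070)

print(f"SMs: {rtx2080[0] // CORES_PER_SM} vs {rtx2070[0] // CORES_PER_SM} (x{sm_ratio:.3f})")
print(f"Clock: x{clock_ratio:.3f}")
print(f"TFLOPs: {turing_tflops(*rtx2080):.2f} vs {turing_tflops(*rtx2070):.2f} (x{tf_ratio:.3f})")
```

With these specs, the SM ratio (~x1.28) times the clock ratio (~x1.06) gives the ~35% TFLOPs gap, so the extra units account for most, but not all, of the paper advantage.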