looking at all these elitists with their + $1000 setups crying fps digits while i am here more than satisfied with rocking a gt1030 everything on low at 720p 30fps living my best life

So you list one game with supposedly solid performance on consoles (on one of them, and at 30fps), then you use games that run like dogshit on console to discredit PC versions?

Hyperbole is so strong in this thread. I personally do not remember the last time I spent more than 5 minutes tweaking graphics settings in a game.

And people tweak settings because they want to play the game on the settings that fit them best, but you only do it once.

This is the dumbest take I have ever seen. xD

PC has been my main platform since 2009 and I STILL don't know what those are. I know they're AA methods, but I couldn't tell you how they differ. Same with AO, screen space reflections, subsurface scattering, and a bunch of other nonsense terms. All I know is if I want more performance, I just start turning stuff off.

That's why I linked Guru3D's benchmark, they have a whole page dedicated to the options they used. Intense options like Hairworks are disabled and others like AO, toned down.

The game was demanding and there are no two ways about that. The same is true of RDR2 now, and the same will be true of Cyberpunk.

I've skimmed through the article and - correct me if I'm wrong - but I don't see any benchmarks for lowest/medium settings? That's what I mean about these benchmarks not telling you the whole story. Of course it's going to perform poorly if you run it at "ultra" on a GTX 760!

Also, as the article points out, VRAM usage is really low even at 4K. That's impressive compared with some other VRAM guzzlers I could mention.

I can run all those games (except RDR2 - not tried it yet) at 4k/60fps on my PC. I can't say the same about any console.

The game is a locked 30fps at Native 4k on the X...

I feel the same as the OP between Dark Souls, AC Unity, Ryse, Arkham Knight, Watch Dogs 2, Mafia 3 and now RDR 2. DS will be the same. AAA developers don't care about PC.

Really?

It's also gotten a lot better than it used to be, in my experience. I felt the same way you did a few years ago, but I've been primarily PC gaming over the last two years as the experience has just been that much more enjoyable to me than consoles.

Playing RDR on my 1X last year was very unenjoyable.

It will be quieter.

Vapor chamber cooling isn't magic. You wouldn't get dramatically better temps.

I can promise you I have friends who have done exactly this. Complain that a game runs like trash on their pc when they don't bother lowering the graphics settings. Like the beauty of pc gaming is the settings are fully scalable.

Why is this always the first thing that gets fixated on, even if it's not actually the real problem? Many are having problems even with everything at medium settings. I'm also rereading the OP, and I don't see the word "ultra" anywhere in it.

First of all we need to stop the bs on this thread and misinformation.

The game is a locked 30fps at Native 4k on the X...

I feel the same as the OP between Dark Souls, AC Unity, Ryse, Arkham Knight, Watch Dogs 2, Mafia 3 and now RDR 2. DS will be the same. AAA developers don't care about PC.

You have to do it once. There are guides available for the most taxing settings. I recently tried out a game that didn't run at 60fps, lowered from Ultra to High preset. Could probably squeeze a bit more performance but honestly the difference isn't that large to be bothered about it. The fan noise difference is more bothersome than the graphical difference.

There are even programs that can automatically give you recommended settings for your setup. I don't use them because I don't find them that useful, but you can, if you wish, avoid what you consider the worst aspect of PC gaming with a couple of mouse clicks so I find it hard to sympathize with this issue. It's not as if console games are getting easier to play and you don't have to "faf" around with them either. Some are getting graphical settings, just like PC, others require long and tedious installation times not to mention the mandatory patches or sign ins that some require. Console gaming is moving closer to PC gaming in terms of complexity whereas PC gaming has never been as accessible as it is now.

With that said, I can't say more other than I completely, but respectfully, disagree.

I disagree with this. One thing I love about pc gaming is booting up a 10 year old game I didn't play on release, cranking up all the settings, and experiencing it with modern hardware. Including settings that are too taxing for current hardware is forward-thinking. I recall The Witcher 2 putting big red warning signs on specific graphics settings that are more taxing, but today, 8 years later, they're a breeze for current hardware. It's future-proofing your tech. The Witcher 2 looks better today thanks to those post process effects that nobody was using at launch.

Though I would argue that if pushing settings beyond consoles does not give you a noticeable visual difference (one that you can see without zooming way in) then it is a questionable choice to include such settings. Pushing hardware for the sake of pushing hardware is not needed... you can cripple any machine with unnecessary precision without any visual gain.

If a game can't deliver console performance/settings on ~similar pc hardware then it's a bad port, otherwise people should just lower their damn settings. That is, if there is no other stuff like random crashing, framepacing and streaming issues etc.

What a weird post.

The game is a locked 30fps at Native 4k on the X...

I feel the same as the OP between Dark Souls, AC Unity, Ryse, Arkham Knight, Watch Dogs 2, Mafia 3 and now RDR 2. DS will be the same. AAA developers don't care about PC.

This made me curious about how well The Witcher 3 runs on older or lower end hardware at the bottom end of the settings scale. So I've just installed it on such a system: i7-4770k (not overclocked), 16GB DDR3 RAM, GTX 780, so that's a system from 2013, two years old when The Witcher 3 released. At 1920x1080 and low settings, running around the city of Novigrad I'm getting framerates between the 70s and 90s. Textures look awful on low but popping them up to ultra doesn't hurt the framerate too much.

You can't really claim a game is demanding without also testing how it performs on the lower end and it still doesn't seem that demanding to me. I would expect Cyberpunk 2077 to follow suit, in that, while hardware is going to break a sweat on highest or near highest settings, there will be plenty of opportunity to get it running well with lower settings.

You have to wonder if a lot of this could be solved by developers having console-equivalent presets and labeling them as such; even just "console low/console high" without naming names.

You are reacting with your gut and not your mind, to be honest. Think of low as high and there you have it.

It is not a Bad port - rather the audience is 'Bad' here and is honestly not even thinking

That in itself is a problem - and why it doesn't seem to be scaling well to older systems. But you're right that the main issue here is many people's insistence on running games at "ultra" no matter what.

The game is not a mess. It's just not targeting to be limited by current hardware. It has a different graphics scale. Nobody wants to play at "low" settings but they don't realize that those "low" settings are equivalent to medium-high in other AAA games. Everyone wants to play at high and ultra. Those settings equal "extreme" settings in other games.

This is what the game looks like on the lowest possible settings (except textures on ultra but anisotropic filtering is off so they look muddy):

Are you kidding me? This is the lowest graphics settings. Draw distance, shadows, volumetric clouds and lighting, etc. are equivalent to high settings in other games. Look at the clouds and trees in the distance casting shadows! Which game has those on "LOW" settings?

CPU utilization does not have to be anywhere close to 100% to bottleneck the GPU. A single thread, if it's important enough, can bottleneck the entire rendering pipeline.

I have no trouble hitting 60fps at lower resolutions and the CPU utilization is low too.
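To put rough numbers on the point about CPU utilization and single-thread bottlenecks, here is a minimal sketch. The per-core load figures are made up for illustration (an 8-core CPU with one saturated core is an assumption, not a measurement from any game), and the helper names are hypothetical.

```python
# Hypothetical per-core load figures, chosen only to illustrate the idea.

def overall_utilization(per_core_percent):
    """Average load across all cores, i.e. the single headline percentage."""
    return sum(per_core_percent) / len(per_core_percent)

def busiest_core(per_core_percent):
    """Load on the most heavily used core."""
    return max(per_core_percent)

# One saturated core (say, the thread feeding the renderer) and seven mostly idle ones.
cores = [100, 10, 10, 10, 10, 10, 10, 10]

print(f"overall utilization: {overall_utilization(cores):.2f}%")
print(f"busiest core: {busiest_core(cores)}%")
```

With these made-up figures the headline number sits a little over 20% even though one core is pegged, which is why a low overall CPU reading does not rule out a CPU bottleneck.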

That's arguably the worst PC port, in terms of performance, released this entire generation. Still looks and runs better than it does on console though.

It's worse when developers release crappily optimised versions of their games and NEVER patch them.

I'm looking at you Evil Within 2. Has awful CPU and GPU utilization and stutters like a bastard.

Did they fix it? Did they fuck?

I'll just point out here that GTA IV was literally unbeatable on PlayStation 3 for me because the final mission of the game would break in the same place every time (the jump onto a helicopter).

Managed to play 10 hours or so, but the game kept crashing randomly and now at a specific mission. Really tired of jumping through hoops just to get my game to play properly. Even worse than having to jerry rig older games just to get them playing on modern systems.

GTA IV ran terribly if you maxed-out the draw distance sliders. It still runs poorly on most of today's systems because it really only takes advantage of three CPU cores, if I recall correctly.

Isn't this normal for Rockstar games? I didn't bother with GTA V, but I remember GTA IV running like dog shit for no reason.

The thing with ultrawide displays is that the worst-case scenario is having to play a game in 16:9… which is how you would have to play them if you didn't have an ultrawide monitor anyway.

On the other hand PC gaming can be outright bad if you're unlucky (even with a beefy pc). I wanted to play The Outer Worlds on my pc (9900K/2080ti) but thanks to my ultrawide gsync monitor I was fucked. The ultrawide resolutions are zoomed in, sadly the developers didn't take the time to do them right (Gears 5 and many other games are working perfectly). Even with ini changes it didn't really get much better.

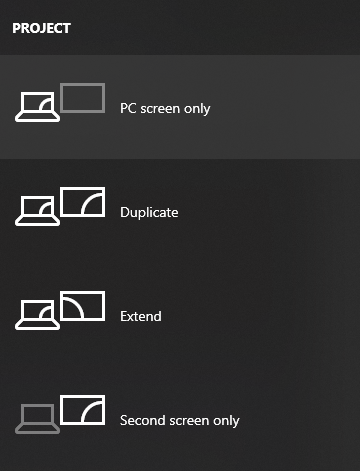

WIN+P is a shortcut you should learn, as it brings up this menu (and switches after a moment, if the screen you're in front of is currently disabled).

So I tried to play on my big ass 4K HDTV but (again) the developers couldn't get the settings right for release. In other games (Gears 5, etc.) you can switch the display so that you don't have to switch cables to play on something other than your main monitor. Because of that the game refused to let me play in more than 1080p on my 4K TV. The game still thought I was playing on my ultrawide monitor and that can't display 4K.

BSODs are generally a sign of a hardware fault. User-mode software, like a game, should not be capable of taking down the entire OS. A certain game may be a consistent trigger for a hardware issue, but that does not mean the game itself is the problem.

I tried PC gaming years ago and during the installation of a game got the blue screen of death. I had to call support and got connected to someone in Eastern Europe. After 20 minutes I finally got the game to run but had to restore a bunch of shit.

I went back to consoles and never looked back. To me the best rig and setup doesn't compare with the ease of plug and play. Sure consoles can't technically compete with PC in terms of setting but the hassle free experience of consoles more than makes up for it.

I have literally never entered the BIOS on my system after initially setting it up, and it's extremely rare that updating the BIOS is necessary unless you are adding new hardware to the system like a newer generation of CPU or GPU.

Yeah... BIOS settings. Kind of an integral part of maintaining your PC. Never realized mentioning BIOS could be misinterpreted as sarcasm, but I guess I shouldn't underestimate the power of denialism. Here's a fun exercise for you: go to the PC performance thread and count how many times BIOS is mentioned.

I did not say that this game is a bad port, I was talking in general. I am waiting for DF comparisons since I do not have the PC version.

Who said the game can't deliver console performance on similar PC hardware? No one here knows what console settings are and most people are targeting 60fps while it's 30fps and drops to the 20s on consoles. Why are you comparing it to console performance again?

Graphics settings are optional. If they are "not needed" just ignore them and play on low settings instead. Consoles run most likely with low settings and some on medium.

I don't understand why you want the devs to deliberately restrict the graphics options to current hardware maximum capabilities. Why don't they offer more scalability for future hardware?

What a weird post.

It's like listing like 5 badly optimized games this gen and saying that's evidence that developers don't care for consoles.

Are we back in 2005?

I am shaking right now, that's how upset I am.

They were five games that I was really interested in and decided to buy then later upgrade my PC to play them at 1080p/60fps.

They were incredibly poorly optimised / lazy ports of big AAA games which have been well documented. I'm sorry if that upsets you.

I did not say that this game is a bad port, I was talking in general. I am waiting for DF comparisons since I do not have the PC version.

Like I said in a later post, if there is no visual gain from "future proofing" your game then what's the point? Only confusion in the community and wasted hardware horsepower/electricity.

You can make an untextured 3D cube run at 1fps on a 2080Ti if you want... it won't make it look better though. I am not saying that the RDR2 PC version does that, but from what I have seen so far there is not a big difference. Would be great to get good comparisons and analysis.

Same, the only reason I ever went to my BIOS is because I specifically made the choice of getting a 2500k, and now 8700k, that I wanted to overclock. And that was exclusively because of how core speed is/was important for emulation, I never cared about the performance gain it would bring to PC games.

I set my BIOS when I installed my motherboard/CPU. The only reason I've ever played around with it is to overclock. Otherwise it's left alone for the duration of the time I have that hardware.

I agree that PC ownership requires some tinkering, but I wouldn't even consider setting up the BIOS as part of that tinkering.

Where did I say or even imply that? In fact in the post you quoted I did say the higher the settings the more hardware is going to break a sweat.

Thank you for your time in setting this up! Only proving my point with that comparison, tho. The GTX 780 has ~20% better performance than the recommended GTX 770 for Witcher 3, so let's say a 770 in your test would net FPS in the 60-80s. Same goes here for RDR2 - the recommended 1060 gets 60-70FPS at 1080p/lowest preset (at least going by the performance thread and some YouTube videos, I don't have the chance of testing it myself).

How does that prove the game(s) (W3 and RDR2) are not demanding the more you crank up the visual goods? And even more, what's making you think that Cyberpunk won't be even more demanding (not counting the raytracing implementation), and what would stop people going crazy again over this stuff, the same as here now?

Occasionally there are games that push the top of the current hardware to the limit, be it through overtaxing graphical goodies (tessellation, MSAA, raytracing), futureproof options (think ubersampling in Witcher 2, I believe they even added a 'note' saying this option is for the hardware of the future), etc. That's not a reason to hate the game, the dev or PC gaming as a whole.
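For anyone who wants to redo the GTX 780 to GTX 770 estimate from earlier in this post, here is a rough sketch of the arithmetic. It assumes framerate scales linearly with GPU performance, which is a simplification, and it takes the ~20% gap and the 70-90fps range straight from the posts above rather than from any benchmark. The function name is made up for illustration.

```python
def scale_fps(measured_fps, relative_speed):
    """Estimate fps on a slower card, assuming fps scales linearly with GPU speed.

    relative_speed is how much faster the tested card is (1.20 means ~20% faster).
    """
    return measured_fps / relative_speed

# Figures quoted in the thread: 70s to 90s on a GTX 780, which is ~20% faster than a GTX 770.
gtx780_low, gtx780_high = 70, 90
speed_gap = 1.20

gtx770_low = scale_fps(gtx780_low, speed_gap)
gtx770_high = scale_fps(gtx780_high, speed_gap)

print(f"estimated GTX 770 range: {gtx770_low:.0f}-{gtx770_high:.0f} fps")
```

Under that linear assumption the estimate comes out a bit under the "60-80s" ballpark, at roughly high 50s to mid 70s.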

Yeah and I'd call locked 30 awful performance. Shit is sluggish as hell.

The game is a locked 30fps at Native 4k on the X...

I feel the same as the OP between Dark Souls, AC Unity, Ryse, Arkham Knight, Watch Dogs 2, Mafia 3 and now RDR 2. DS will be the same. AAA developers don't care about PC.

I had to look this up on YouTube, and was rather impressed. That's still playable.

looking at all these elitists with their + $1000 setups crying fps digits while i am here more than satisfied with rocking a gt1030 everything on low at 720p 30fps living my best life

I am not speaking to the PC performance or the 30fps difference.

Missing the conversation point then, because whatever hassle I deal with in a PC game, at least PC players can find workarounds and get more ideal performance.

With the console you're often stuck with the wack performance, and however the dev left the game, with maybe a few tweaks to trash like motion blur and, god willing, a FOV slider.

I have to admit that between Modern Warfare and RDR2 I'm really starting to question my PC first approach to new games. I couldn't play the game the night that Modern Warfare was unlocked because the PC version was screwed, and I can't play RDR2 because the launcher is broken.

I got lucky with Gears 5 and was able to finish it before all the servers went to shit.

As someone whose primary focus is single player campaigns, the PC launches of this year have been buggy as can be. A year from now I don't know if my PC first approach will still be intact.

They both do the exact same thing in the exact same way, only in different packaging. Ease of manufacturing is probably the bigger factor in deciding between the two.

It's not that simple. It also depends on your fans. If vapor chamber cooling made that much sense no matter what, it'd be featured in every high-end GPU. Yet a lot of high-end cooling systems prefer heat pipes. Why?

What? Mate what? You're the one taking it as a slight, whereas I am just discussing what is as it is. PCs generally require more work from the player, because of any number of reasons that may come up. But in the good ol' age of Google search, one can figure out some workarounds and customize the game to their needs, which is the main perk of spending all the money someone does on a good rig.

Hundreds of games release just fine on PC and when one game is a slightly questionable port it's the fault of PC gaming

2 minutes of setting up a game within the first hour is 'a lot of minutes'? And once you set it up correctly, you do not touch it again.

"Like every 10 minutes". Is that normal on PC? That's a lot of minutes of not playing, imo. It's fine some people don't mind tweaking a little, but I don't want to try every setting in order to "discover" what causes certain problems. That's not hyperbole, just like my Dutch forum comment is not hyperbole.

Are they cheaper than vapor chamber cooling?

It's not that simple. It also depends on your fans. If vapor chamber cooling made that much sense no matter what, it'd be featured in every high-end GPU. Yet a lot of high-end cooling systems prefer heat pipes. Why?

It doesn't bother me what you're doing pal but well done for ignoring the rest of my last post.

It is in no way as easy as people on forums make out to get a locked 60fps in big name AAA ports on PC, especially at high settings and especially at 1440p, 1800p or 4k (which are the resolutions now offered by the mid gen consoles), without much of the hassle PC gaming brings.

On top of the farmed out / low budget AAA ports, the rise of exclusive deals and multiple digital stores, the death of cheaper keys and the gargantuan leap in CPU performance of the next gen consoles which are arriving in just over a year were the final nails in the coffin of PC gaming for me personally.

Anyone know how much I'd get for a boxed RTX 2070 in the U.K. second hand market?