You are mischaracterizing what Mark Cerny said. How can he downplay CUs when every GPU has them?

What he said was that he preferred clocking higher over adding more CUs to achieve the same theoretical TF, because a rising tide raises all boats. Meaning that if the GPU is clocked higher, everything inside the GPU gets clocked higher, not just the CUs.

52 CU at 1.6GHz = 10TF

36 CU at 2.23GHz = 10TF
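For anyone who wants to check the arithmetic behind those two lines, here is a rough sketch. It assumes the usual GCN/RDNA accounting of 64 FP32 lanes per CU and 2 FLOPs per lane per clock (one fused multiply-add); the function name is made up for illustration. By that formula the two configurations come out near 10.6 and 10.3 TF, so "10TF" above is a rounded figure.

```python
def peak_tflops(cus: int, clock_ghz: float,
                lanes_per_cu: int = 64, flops_per_lane: int = 2) -> float:
    """Theoretical FP32 throughput in TFLOPS.

    Assumes 64 lanes per CU and 2 FLOPs per lane per clock (one FMA).
    """
    return cus * lanes_per_cu * flops_per_lane * clock_ghz / 1000.0


wide = peak_tflops(52, 1.6)     # more CUs, lower clock
narrow = peak_tflops(36, 2.23)  # fewer CUs, higher clock
clock_ratio = 2.23 / 1.6        # how much faster every clocked unit runs

print(f"52 CU @ 1.60 GHz: {wide:.2f} TF")     # 10.65 TF
print(f"36 CU @ 2.23 GHz: {narrow:.2f} TF")   # 10.28 TF
print(f"clock ratio: {clock_ratio:.2f}x")     # 1.39x
```

The clock ratio is the part the argument hinges on: anything whose rate is set purely by core clock scales by that 1.39x, while anything tied to unit count or to memory does not.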

The latter 36 CU GPU will have higher rasterization rate, higher pixel and texture fill rate, and higher cache bandwidth, even though both have the same theoretical 10TF. That is not downplaying CUs; it is talking about the effects of clocking higher. The downside of clocking high is latency, but the benefits outweigh the downside, assuming you can cool the GPU well enough to sustain that clock.


But overclocking the gpu core only is not a rising tide that lifts all boats.

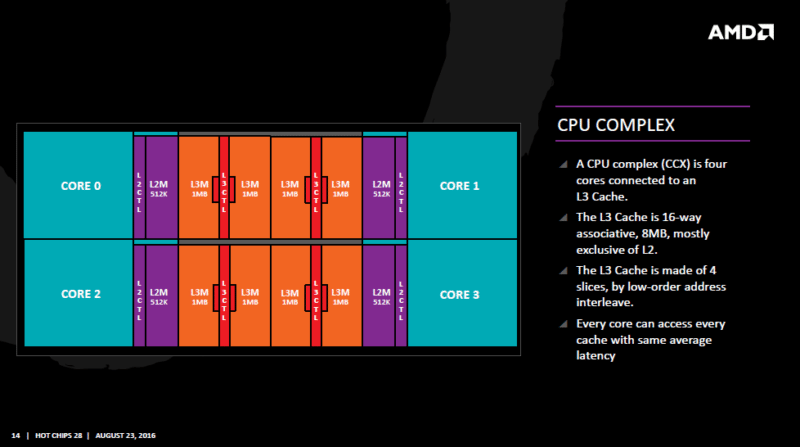

Neither the amount of on-chip cache nor the memory bandwidth increases (unless you overclock the memory, which they aren't)...

Right there, that's two super important components for the target performance that see zero improvement from the overclock, and that often even limit how much of the higher clock's effect is actually realized.
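A quick way to picture this is a roofline-style estimate (my framing, not anything Sony or AMD published): attainable throughput is the smaller of peak compute and memory bandwidth times the work done per byte fetched. The sketch below assumes a fixed 448 GB/s of memory bandwidth, a hypothetical 1.8 GHz baseline for the same 36 CU part, and two made-up workload intensities; none of those numbers come from the posts above.

```python
def attainable_tflops(peak_tflops: float, bandwidth_gbs: float,
                      flops_per_byte: float) -> float:
    """Roofline estimate: limited by compute or by memory traffic."""
    bandwidth_bound = bandwidth_gbs * flops_per_byte / 1000.0
    return min(peak_tflops, bandwidth_bound)


BANDWIDTH_GBS = 448.0                    # assumption: unchanged by the core overclock
base_peak = 36 * 64 * 2 * 1.80 / 1000.0  # hypothetical baseline clock
oc_peak = 36 * 64 * 2 * 2.23 / 1000.0    # overclocked core

results = {}
for intensity in (8.0, 40.0):            # illustrative FLOPs per byte of memory traffic
    base = attainable_tflops(base_peak, BANDWIDTH_GBS, intensity)
    oc = attainable_tflops(oc_peak, BANDWIDTH_GBS, intensity)
    results[intensity] = (base, oc)
    gain = (oc / base - 1.0) * 100.0
    print(f"{intensity:4.0f} FLOP/byte: {base:.2f} -> {oc:.2f} TF ({gain:.0f}% gain)")
```

Under these assumptions the bandwidth-bound case (8 FLOP/byte) gains nothing from the higher clock, while the compute-bound case (40 FLOP/byte) gets the full clock uplift. Real workloads sit somewhere in between, and caches shift where each one lands.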

Pixel and texture fillrate are no longer a problem in any modern GPU. In fact, their theoretical values are usually impossible to reach in reality because there's simply no bandwidth to feed them (this was the case on PS4, and to an insane extent on Pro).
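To put rough numbers on that, here is a back-of-the-envelope sketch. The ROP counts, clocks and bandwidth figures are the commonly quoted specs for PS4 and PS4 Pro, used here as assumptions rather than anything stated in this thread, and the estimate ignores color compression and on-chip caching, which reduce real memory traffic.

```python
def fill_bandwidth_gbs(rops: int, clock_ghz: float,
                       bytes_per_pixel: int = 4, blending: bool = False) -> float:
    """Memory traffic (GB/s) needed to sustain peak pixel fill rate.

    Assumes one pixel per ROP per clock and a 32-bit color target.
    Blending reads the destination as well as writing it.
    """
    gpixels_per_s = rops * clock_ghz
    traffic_per_pixel = bytes_per_pixel * (2 if blending else 1)
    return gpixels_per_s * traffic_per_pixel


# Assumed specs: (ROPs, core clock in GHz, memory bandwidth in GB/s)
consoles = {"PS4": (32, 0.800, 176.0), "PS4 Pro": (64, 0.911, 217.6)}

needs = {}
for name, (rops, clock, bandwidth) in consoles.items():
    write_only = fill_bandwidth_gbs(rops, clock)
    blended = fill_bandwidth_gbs(rops, clock, blending=True)
    needs[name] = (write_only, blended)
    print(f"{name}: needs {write_only:.1f} GB/s (write) / "
          f"{blended:.1f} GB/s (blend), has {bandwidth:.1f} GB/s")
```

With these assumed figures, PS4 would need about 205 GB/s to blend at peak rate against 176 GB/s available, and Pro would need about 233 GB/s just for plain writes against roughly 218 GB/s.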

Rasterization is not an issue either, because what's the point in being able to draw billions of triangles if the entire GPU stalls whenever you render a small triangle? Hence the creation of mesh shaders, and earlier attempts such as primitive shaders, to make geometry processing more efficient and flexible instead of basically making a super outdated pipeline super fast. That is what Epic used in the UE5 tech demo, and it's why, despite achieving pixel-sized triangles, the time it took to process them was similar to Fortnite on current-gen consoles (a 60fps game, even).

And the importance of cache for overall performance cannot be overstated, to the point that Nvidia, instead of chasing AMD in the TFLOP battle, decided to focus on having much bigger caches on their GPUs, and despite the lower TF simply destroyed them in performance. It was also one of AMD's highlights for RDNA, which added a whole level of cache compared to GCN and again saw huge performance gains (so much that the "Nvidia flops" vs. "AMD flops" talk when comparing GPU performance has all but withered since RDNA was introduced).

I mean, sure, compared to the exact same gpu with a lower clock the new overclocked one will perform better, but you'd achieve higher performance overall by actually raising all boats.