> Yeah, took me a while to realise that too. Seems Sony went all in with the Shakespearean lingo. I like it. PSVR2 will be called something like Project Midsummer (not to be confused with Midsommar, that's a wholly different nightmare).

The SSD is "The Dream." Short-hand?

PlayStation 5 System Architecture Deep Dive |OT| Secret Agent Cerny

- Thread starter vestan

- Start date

- Status

- Not open for further replies.

Yeah, took me a while to realise that too. Seems Sony went all in with the Shakespearean lingo. I like it. PSVR2 will be called something like Project Midsummer (not to be confused with Midsommar, that's a wholly different nightmare).

I've heard PSVR2 is codenamed Caesar.

Edit: realised this might have sounded insidery, not an insider, I think I heard that from BGs on the other place.

> Who's saying MS is damned?
>
> I think it's fair to point out and understand the differences in system design and how they may be advantageous on both sides. TFs are not the only measure of theoretical performance; is that not okay to say?

A GPU's job is to output what you see on screen. It is resolution-based for the most part and all tied to the power and efficiency of the GPU.

lmao at your avatar.

Agreed about RDNA2 scaling - everything we know so far sounds amazing.

If full and optimal utilization of all 52 CU is happening most of the time, PS5 will be behind. I imagine the difference is going to come down to a dynamic resolution delta (as in time that can be spent at highest res due to on screen dynamics) in most titles.

For Sony's sake, i hope that Cerny's secret sauce in the gpu overcomes that tflops advantage because i am pretty sure its going to cost them sales. i used to visit gamespot forums back in the day and almost everyone switched from sony to ms that gen. they did come back later. but the initial power delta really did have an effect.

of course MS had some amazing exclusives in the first two years like Bioshock, Gears, Oblivion, Halo 3 and Mass Effect so that made it easier for them, if Sony can show off some exclusives on par with those classics, it might slow the tide.

> The power talk worked well for them, but it was also because they were cheaper.
>
> We have to find out the price of these consoles, because if the XSX is more expensive it will affect things.

thats true. but i think MS knows that too which is why they revived the lockhart. if sony is $100 (by some miracle i should add) then lockhart could be too. at which point, it becomes a whole lot more difficult for sony to sell the cheaper console.

AMD is making an 80 CU gpu. i highly doubt that the scaling of CUs is still awful after a complete redesign plus an extra year of R&D for RDNA 2.0.

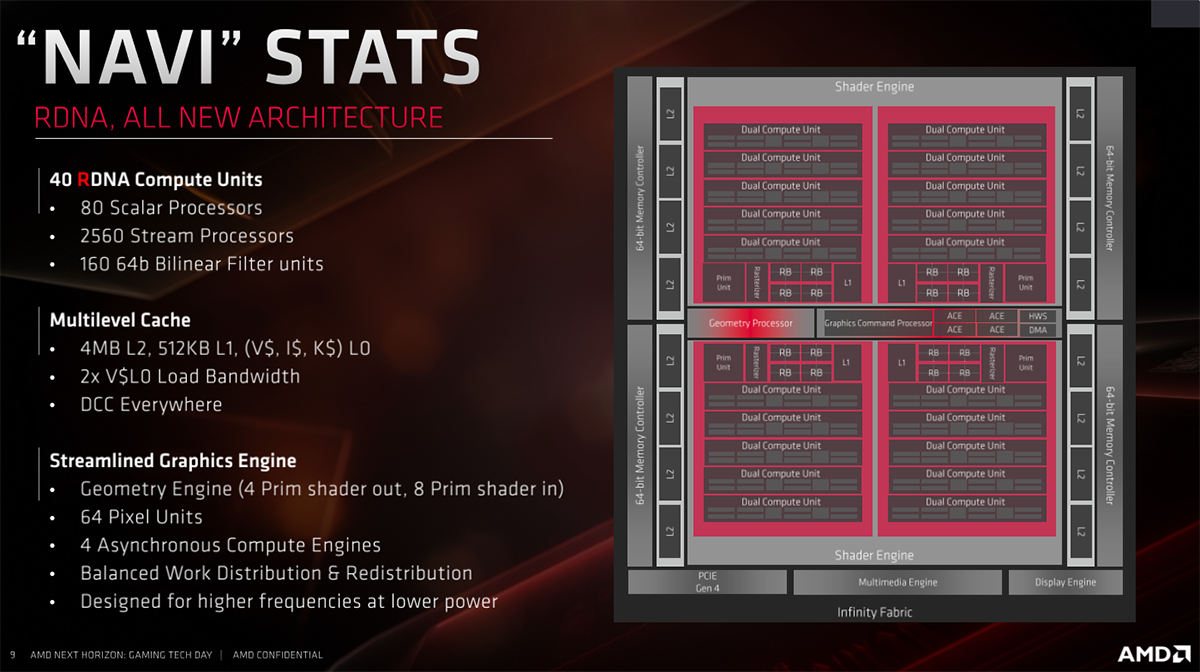

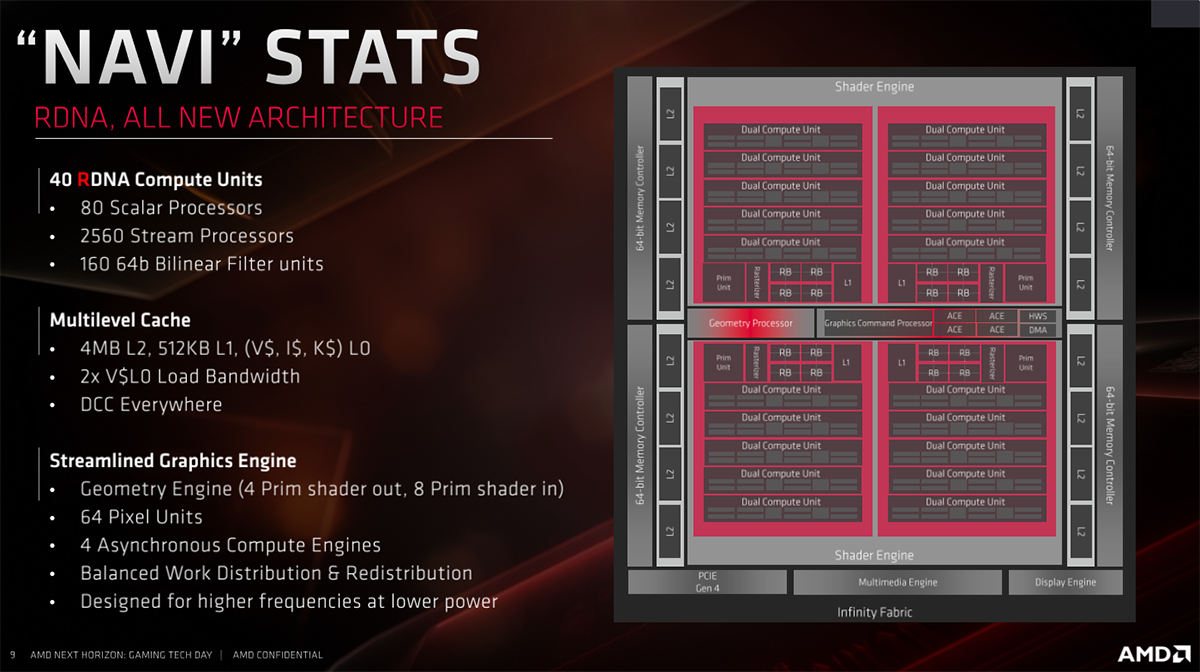

It depends on how the GPU is scaled. It's very clear RDNA is a lot more flexible when it comes to scaling its resources than GCN. If they make an 80 CU GPU, then they most likely will go with 8 SAs, which means 10 CUs/SA and is equivalent to two geometry units of a 40 CU GPU. That's very different from scaling to a 56 CU GPU though. The most common building block is the SA, which contains a primitive unit/rasterizer/ZROPs/ROPs and CUs. They need to scale that block by a discrete number, which means MS either went with 14 CUs/SA, 8 CUs/SA (if RDNA supports odd numbers of SAs) or 7 CUs/SA. There is no magic about this. They most likely went with 14 CUs/SA as they still need to keep the chip area small.
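To make the "discrete number" point concrete, here is a rough sketch that lists the ways a CU count splits evenly across shader arrays. The function name and the cap of 8 arrays are illustrative assumptions, not anything AMD or MS has confirmed.

```python
def shader_array_layouts(total_cus, max_arrays=8):
    """List (shader_arrays, cus_per_array) pairs that split total_cus evenly.

    max_arrays=8 is an assumed upper bound used only for illustration.
    """
    return [
        (arrays, total_cus // arrays)
        for arrays in range(1, max_arrays + 1)
        if total_cus % arrays == 0
    ]


# 56 CUs: includes 4 x 14, 7 x 8 (an odd SA count) and 8 x 7.
layouts_56 = shader_array_layouts(56)

# 80 CUs: includes 8 x 10, the layout suggested in the post above.
layouts_80 = shader_array_layouts(80)

for arrays, per_array in layouts_56:
    print(f"{arrays} SA x {per_array} CUs/SA")
```

The three options named in the post (14, 8 or 7 CUs per SA) are exactly the even splits of 56 with more than two arrays.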

regardless, the point i was trying to make was that the damage is already done because even if it is 10% like you said, the conversation will revolve around that for the rest of the launch and then the rest of the gen. sony has conceded the power talk which worked so well for them, and will be playing catch up the rest of the gen just like MS did this gen. Pheonix asked whats more important? resolution/framerate/performance vs loading and based on the initial response to the cerny reveal, no one seems to care about the ssd at all. everyone wanted more tflops.

I agree with you that Sony will lose a lot of hardcore gamers, who are important to them, since they are very passionate/vocal and work as ambassadors/influencers for the platform. IMO, that's unfortunate, but that's no reason to be overly pessimistic about the performance delta. Let's not forget that we don't know the price of the machines, and that can reverse the sentiment entirely. Also, I don't doubt Sony's first party output will be superior to MS.

thats true. but i think MS knows that too which is why they revived the lockhart. if sony is $100 (by some miracle i should add) then lockhart could be too. at which point, it becomes a whole lot more difficult for sony to sell the more cheaper console.

Not really. Being the middle ground might end up being the best thing for them.

There is a whole bunch of sales and marketing data showing that, a lot of the time, the middle-priced item ends up selling the best.

> I've heard PSVR2 is codenamed Caesar.
>
> Edit: realised this might have sounded insidery, not an insider, I think I heard that from BGs on the other place.

"Friends, players, lend me your eyes" will be the slogan?

> I'm an idiot. I just realized The Tempest is yet another Shakespeare reference.

What's the first?

Custom flash controller is the flop for ps5

Like when ESRam flop for xbo

I'm not following...

Ariel, Gonzalo, Flute, Oberon (and supposedly Prospero) were already GPU and platform codenames.

> A GPU's job is to output what you see on screen. It is resolution-based for the most part and all tied to the power and efficiency of the GPU.

Yes, but GPU performance is not strictly equal to CUs x clock.

No one is making the argument that the PS5 GPU is overall more powerful than the SX GPU. Just that you can't look at TFs as the only measure of performance. Parts outside the CU will scale with clocks, not CU count.

> Ariel, Gonzalo, Flute, Oberon (and supposedly Prospero) were already GPU and platform codenames.

Neat. Thanks :)

I'm an idiot. I just realized The Tempest is yet another Shakespeare reference.

I thought this during the presentation but thought the other names were an AMD thing and not a Sony thing.

Yeah, took me a while to realise that too. Seems Sony went all in with the Shakespearean lingo. I like it. PSVR2 will be called something like Project Midsummer (not to be confused with Midsommar, that's a wholly different nightmare).

That (or Project Night's Dream) would go great since PSVR was Project Morpheus (the god of dreams).

Also, now I want Midsommar VR.

I've heard PSVR2 is codenamed Caesar.

Edit: realised this might have sounded insidery, not an insider, I think I heard that from BGs on the other place.

Now I'm hyped to see what's in store for Project Titus or Project Andronicus.

You play the game as a bear :D

Yes, but GPU performance is not strictly equal to CUs x clock.

No one is making the argument that the PS5 GPU is overall more powerful than the SX GPU. Just that you can't just look at TFs as the only measure of performance. Parts outside the CU will scale with clocks, not CU count.
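For anyone wondering where the TF numbers come from, here is a minimal sketch of the usual peak-compute formula: CUs x 64 lanes x 2 ops per clock (one fused multiply-add) x clock speed. The 2.23 GHz and 1.825 GHz clocks are the publicly announced figures and are an assumption here, since the thread only quotes the resulting ~10.3 and ~12.1 TF.

```python
def peak_tflops(cus, clock_ghz, lanes_per_cu=64, ops_per_lane=2):
    """Theoretical peak FP32 throughput in TFLOPS.

    ops_per_lane=2 counts a fused multiply-add as two operations.
    This is a paper number; it says nothing about rasterizers, ROPs,
    caches or anything else outside the CUs.
    """
    return cus * lanes_per_cu * ops_per_lane * clock_ghz / 1000.0


# Assumed inputs: announced CU counts and peak clocks.
ps5_tf = peak_tflops(36, 2.23)
xsx_tf = peak_tflops(52, 1.825)

print(f"PS5: {ps5_tf:.2f} TF, XSX: {xsx_tf:.2f} TF")
```

The formula only has two inputs, which is the whole point being argued: anything that does not live inside a CU is invisible to it.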

Vega 56 x Vega 64 is a good example of that: iso-clock they performed mostly the same, despite 64 being considerably bigger. Not saying that is going to be the case here, but going wider virtually always has some loss in performance.

Why on earth are we getting bigger GPU's or ever increasing efficiency gains then?

The increased efficiency comes from architectural improvements, which is something completely different from what we are talking about here. As long as all the resources of the GPU are scaled correspondingly, there is no efficiency loss when scaling a GPU up or down. A GPU is divided into sub-blocks and those sub-blocks need to come in a discrete number, which means it's not possible to scale all the resources proportionally for every possible CU configuration. A 56 CU configuration gives an indication of which resources have been scaled and which haven't.

> Yes, but GPU performance is not strictly equal to CUs x clock.
>
> No one is making the argument that the PS5 GPU is overall more powerful than the SX GPU. Just that you can't look at TFs as the only measure of performance. Parts outside the CU will scale with clocks, not CU count.

That same argument was made in favor of Xbox One / XBS vs PS4 when Microsoft upped the GPU clocks. PS4's overall CU advantage and memory bandwidth still produced higher resolutions and graphical performance despite the XB1/S clock speed advantage.

Good, because there's nothing to follow.

Eventually when the hot takes die down, more info is known, the consoles finally get released we will see why Sony went in on the SSD.

I'm just waiting on the OS, features to be revealed.

The increased efficiency comes from architectural improvements, which is something completely different from what we are talking about here. As long as all the resources of the GPU are scaled correspondingly, there is no efficiency loss when scaling a GPU up or down. A GPU is divided into sub-blocks and those sub-blocks need to come in a discrete number, which means it's not possible to scale all the resources proportionally for every possible CU configuration. A 56 CU configuration gives an indication of which resources have been scaled and which haven't.

> Yes, but GPU performance is not strictly equal to CUs x clock.
>
> No one is making the argument that the PS5 GPU is overall more powerful than the SX GPU. Just that you can't look at TFs as the only measure of performance. Parts outside the CU will scale with clocks, not CU count.

In a separate debate yesterday, I asked why the gains being talked about here did not help the base Xbox One with 12 CUs @ 853MHz or the Xbox One S with 12 CUs @ 913MHz compared to an 18 CU @ 800MHz PS4.

You are limited by power and bandwidth, and if you want to sustain high frame rates, the CPU has to be competent. The laws by which GPUs perform are not changing.

Vega 56 x Vega 64 is a good example of that: iso-clock they performed mostly the same, despite 64 being considerably bigger. Not saying that is going to be the case here, but going wider virtually always has some loss in performance.

Yes, that's a good example. When only the CU count is scaled, only the operations that are shader bound will be affected, which is typically long shaders applied during the main render pass, i.e. when rendering the frame-buffer. There are a lot of other types of rendering going on though. For instance, when rendering shadow maps or a depth pre-pass, the shaders are typically very short and the operations are entirely geometry/rasterizer bound. Scaling up the CUs will have no effect on those kinds of operations. It's possible to run compute or async compute workloads during those operations, but it's difficult to find suitable workloads at that point of the frame since the main render pass is yet to be executed.
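A toy model of that argument, purely as a sketch: treat a frame as one shader-bound part that scales with CUs x clock and one fixed-function part (shadow maps, depth pre-pass) that scales with clock only. The 50% split below is a made-up number for illustration; real frames vary a lot and nobody in this thread has measured it.

```python
def relative_frame_time(shader_fraction, cu_ratio, clock_ratio):
    """Frame time relative to a baseline GPU (1.0 = same speed).

    shader_fraction: share of baseline frame time that is shader bound
                     (hypothetical input, not a measured value).
    cu_ratio:        CU count relative to the baseline.
    clock_ratio:     GPU clock relative to the baseline.
    """
    fixed_fraction = 1.0 - shader_fraction
    shader_time = shader_fraction / (cu_ratio * clock_ratio)
    fixed_time = fixed_fraction / clock_ratio  # geometry/rasterizer bound
    return shader_time + fixed_time


# Vega 56 -> Vega 64 at the same clock, assuming half the frame is shader bound:
# about 14% more CUs only shortens the frame by about 6%.
vega_example = relative_frame_time(0.5, 64 / 56, 1.0)
print(f"relative frame time: {vega_example:.4f}")
```

With `shader_fraction=0` the extra CUs do nothing at all, which is the shadow-map case described above.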

> In a separate debate yesterday, I asked why the gains being talked about here did not help the base Xbox One with 12 CUs @ 853MHz or the Xbox One S with 12 CUs @ 913MHz compared to an 18 CU @ 800MHz PS4.
>
> You are limited by power and bandwidth, and if you want to sustain high frame rates, the CPU has to be competent. The laws by which GPUs perform are not changing.

Who says they didn't help? By how much? Who knows. The numbers are much different this time around, as noted below.

> That same argument was made in favor of Xbox One / XBS vs PS4 when Microsoft upped the GPU clocks. PS4's overall CU advantage and memory bandwidth still produced higher resolutions and graphical performance despite the XB1/S clock speed advantage.

I agree. BUT the TF gap is much smaller this time, the clock gap is much greater this time, and the difference in memory bandwidth (considering the average speed of all 16GB) is MUCH smaller as well. PS4 had nearly 3X the bandwidth.

Again, not saying PS5 is magically outperforming the SX on the basis of clocks here. I have no idea how impactful it will actually be. Just trying to understand the capabilities of the machine, and it sure isn't boiled down to TF only.

I hate to get in on the system wars, especially in a dedicated thread so I'll only make one post on the matter..

We seem to be going round in circles going from one extreme to another. The answer will no doubt lie somewhere in between

Will the XSX have a compute advantage over PS5? Yes..

Will the 16% to ~20% difference between the system's TF counts represent the real world performance differential? No..

When the entire GPU is clocking much higher everything outside the CUs is running faster; in addition you can make slightly more efficient use of those CUs.

The CPU difference is ~3x smaller than the difference between the OG X1 and PS4.

The SSD won't magically give you more frames but it does remove the most pressing bottleneck games have been facing for years.

I expect the real-world performance differential to be about half the GPU TF differential. So perhaps 8-10% in resolution as a rough example. Think 2052p vs 2160p.

The question you need to ask yourself as a developer or consumer is, is that tiny real-world difference worth the gains in I/O and the possibilities that brings in changing the fundamentals of the games we get to play or develop? I without a doubt think it is.
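As a quick sanity check on the 2052p vs 2160p example, here is a small sketch that converts the two heights into pixel counts, assuming a 16:9 frame (the aspect ratio is my assumption; the post only gives the heights).

```python
def pixels_16_9(height):
    """Pixel count of a 16:9 frame with the given height."""
    width = height * 16 // 9
    return width * height


pixels_2052p = pixels_16_9(2052)  # 3648 x 2052
pixels_2160p = pixels_16_9(2160)  # 3840 x 2160

ratio = pixels_2052p / pixels_2160p
print(f"2052p renders {100 * (1 - ratio):.2f}% fewer pixels than 2160p")
```

So a 5% drop in height works out to roughly 10% fewer pixels, which lines up with the top of the 8-10% range in the post.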

In a separate debate yesterday, I asked why these gains being talked about here did not help the base Xbox One with 12CU's@853Mhz or The Xbox One S with 12CU at 913Mhz compared to an 18CU@800Mhz PS4.

You are limited by power, and bandwidth, and if you want to sustain high frame rates, the CPU has to be competent. The laws around which GPU's perform are not changing.

Of course it helped, but it's not reasonable to expect a 6% clock frequency advantage will negate a 50% CU advantage, especially when the ESRAM of the XB1 was way too small, which resulted in a lot of unnecessary copying of data. XB1 also only had half the amount of ROPs.

In the same way, it's not reasonable to expect that a 22% clock frequency advantage will negate a 44% CU advantage, but it will make the performance delta less than what the TF delta implies.
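The percentages in the two paragraphs above can be reproduced with a few lines. This is only a sketch: the Xbox One and PS4 numbers are the ones quoted in the thread, while the 2.23 GHz and 1.825 GHz clocks are the announced PS5/XSX figures and are assumed here.

```python
def advantage_pct(a, b):
    """How much larger a is than b, in percent."""
    return (a / b - 1.0) * 100.0


# Last gen: 853 MHz vs 800 MHz clocks, 18 vs 12 CUs.
xb1_clock_adv = advantage_pct(853, 800)
ps4_cu_adv = advantage_pct(18, 12)

# This gen (clocks are assumed from the announced specs).
ps5_clock_adv = advantage_pct(2.23, 1.825)
xsx_cu_adv = advantage_pct(52, 36)

# TF scales with CUs x clock, so the TF gap lands between the two.
xsx_tf_adv = advantage_pct(52 * 1.825, 36 * 2.23)

print(f"XB1 clock +{xb1_clock_adv:.1f}% vs PS4 CUs +{ps4_cu_adv:.1f}%")
print(f"PS5 clock +{ps5_clock_adv:.1f}% vs XSX CUs +{xsx_cu_adv:.1f}%")
print(f"XSX TF +{xsx_tf_adv:.1f}%")
```

The comparison shows why the two generations are not the same situation: last time the clock advantage was about an eighth of the CU advantage, this time it is about half.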

> I'm an idiot. I just realized The Tempest is yet another Shakespeare reference.

wait, where did they mention tempest?

3D Audio.

it's the name of the 3D audio chip.

When matt revealed xbo he focus on ESRam and praised it as the revolution... But it flop

Now cerny is praising CFC as its the revolution...

Will it cause ps5 to flop

Nice logic there warrior.

He already has our ears, what is he up to in that bathroom?.

I see y'all are still acting up. Meanwhile I've got the big brain master plan. Getting the Switch this summer, the PS5 for exclusives. Then building a maxed out PC with an RTX 3080Ti AND one of the PS5 certified SSDs when available since I can play Xbox exclusives there.

Checkmate gamers😏

> It seems Cerny and Co's goal is to push the experience forward rather than just pixels. Bespoke 3D audio, haptics, SSD and I/O throughput are very hard things to sell to people; and it takes balls to go for that over the higher numbers.
>
> Would I have liked an extra flop and some 16Gbps RAM chips? Sure.. but even then it's not a case of ~10.3 vs 12.1, the vast difference in clocks will likely tighten the raw compute gap to 5-10% along with more efficient utilization, cache speed and rasterization. The small compromise at the top-end is worth everything else we're getting. My only major point of concern is VRS; although I would be amazed if it isn't in there as it seems to be inherent to the RDNA2 base architecture.
>
> Sony's biggest mistake here is promoting this GDC-esque talk on their consumer facing channels and doing it before a more generalised reveal. I personally liked the talk but Sony could have placed it better in their schedule and conveyed the nature of it better beforehand.

Yeah, it seems to me that a lot of the new features need a show-not-tell type of presentation, which is why I think they said they were planning on doing hundreds of events in the coming year. That way people can experience everything -- such as the new controller and 3D audio -- firsthand, but the coronavirus outbreak kind of threw a wrench in those plans.

XSX does not have a fast SSD?

Why would XSX game design not change too?

When matt revealed xbo he focus on ESRam and praised it as the revolution... But it flop

Now cerny is praising CFC as its the revolution...

Will it cause ps5 to flop

The Xbox One didn't "flop."

The 360s ownership numbers were hugely inflated by a shit ton of casual owners that jumped in late gen because of Kinect. Something insane like 25 million kinects and copies of Kinect adventures got sold.

These people bought almost nothing else, but they DID watch a lot of TV- which explains the kinect integration and TV centric approach of the launch XBO.

Zhuge mentioned on Twitter that the XBO has hit 50 million units, and it's not unreasonable to think it will sell a few million more before MS ends the platform.

That being the case, the majority of the sales gulf between the 360 and XBO will be chalked up to Kinect owners who didn't come back. They retained most of their core audience.

> When matt revealed xbo he focus on ESRam and praised it as the revolution... But it flop
>
> Now cerny is praising CFC as its the revolution...
>
> Will it cause ps5 to flop

PS5 is doomed

> I see y'all are still acting up. Meanwhile I've got the big brain master plan. Getting the Switch this summer, the PS5 for exclusives. Then building a maxed out PC with an RTX 3080Ti AND one of the PS5 certified SSDs when available since I can play Xbox exclusives there.
>
> Checkmate gamers😏

what's a playstation certified ssd gonna do on a PC?

> I see y'all are still acting up. Meanwhile I've got the big brain master plan. Getting the Switch this summer, the PS5 for exclusives. Then building a maxed out PC with an RTX 3080Ti AND one of the PS5 certified SSDs when available since I can play Xbox exclusives there.
>
> Checkmate gamers😏

This is actually the only correct response to this (and also my general plan).

> XSX does not have a fast SSD?
>
> Why would XSX game design not change too?

Don't they still need to take into consideration the base Xbox One? They won't be making next-gen exclusives for a year or so. Unless that stays throughout the generation.

There's no reason to suspect it will stay for the generation, surely if it was going to Booty wouldn't have said "year, two years" in his response to the question.

Unite the kingdoms

> Whether or not AAA publishers will be able to fully utilize Sony's proprietary technology remains to be seen. Maybe it really will be just faster loading times and better LOD transitions.
>
> The only thing I am confident in saying is that the PS5 will be super easy to develop for. The extremely fast SSD will help the smaller developers spend less time optimizing their games.

If this is the case, with only 1st party titles really taking advantage of the tech, then I would assume Sony has a bunch of 1st party titles ready for launch to show it off. Otherwise, what is their plan? Let people imagine all these supposed game changers?

> AMD is making an 80 CU gpu. i highly doubt that the scaling of CUs is still awful after a complete redesign plus an extra year of R&D for RDNA 2.0.
>
> ps5 will have a 22% higher fillrate compared to a 36 cu series x. a 56 cu 1.82 ghz gpu will be on par if not better in every single way.
>
> Cerny did say that 36 fast cus are better than 48 slower CUs so maybe you are right, but that remains to be seen.
>
> regardless, the point i was trying to make was that the damage is already done because even if it is 10% like you said, the conversation will revolve around that for the rest of the launch and then the rest of the gen. sony has conceded the power talk which worked so well for them, and will be playing catch up the rest of the gen just like MS did this gen. Pheonix asked whats more important? resolution/framerate/performance vs loading and based on the initial response to the cerny reveal, no one seems to care about the ssd at all. everyone wanted more tflops.

PS5 will likely continue where the Pro left off and again be bandwidth limited.

There hasn't been much talk on cooling in the PS5 yet, and how heat could impact real world sustained throughput of the SSD, especially for expansion drives.

If expansion drives won't require a heatsink, it's going to be interesting to see what the heat dissipation will look like along with the subsequent throughput. NVME SSDs can get pretty damn hot and will throttle, so I can only imagine how hot they could get with sustained use at the speeds Sony wants.

Cerny said inner SSD has 6 priority levels and NVME SSD spec has only 2 priority levels.

This means games that make use of more than 2 priority levels (most 1st party) HAVE to run from the built in SSD, not directly from the NVME.

When you want to game, the game will be copied from the external NVME to the internal drive.

Fortunately, at 5GB/s, this would take mere seconds to accomplish, and can be hidden from the player.

Thus the external NVMEs should have 0 overheating problems.
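Taking that premise at face value, the "mere seconds" claim is easy to estimate. A minimal sketch, where the 50 GB game size and the drive speeds are hypothetical inputs chosen for illustration and the copy is assumed to be limited by the slower of the two drives:

```python
def copy_seconds(size_gb, read_gb_per_s, write_gb_per_s):
    """Estimated time to copy size_gb from one drive to another.

    Assumes the transfer runs at the slower of the source read speed
    and the destination write speed, with no other overhead.
    """
    effective = min(read_gb_per_s, write_gb_per_s)
    return size_gb / effective


# Hypothetical 50 GB game, both ends sustaining 5 GB/s.
full_speed = copy_seconds(50, 5.0, 5.0)

# Same game if the expansion drive throttles to 2.5 GB/s.
throttled = copy_seconds(50, 2.5, 5.0)

print(f"{full_speed:.0f} s at full speed, {throttled:.0f} s if throttled")
```

Whether a copy of that length can really be hidden from the player depends on how much of the game is needed before play starts, which the post does not say.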

Didn't Cerny say that the I/O cluster would arbitrate the extra priority levels from the expansion SSDs?

Yeah. And that is why there needs to be some extra speed on the expansion SSD to compensate. Validating SSDs for expansion will be about testing that they're up to the task of matching or exceeding the internal SSD's performance and behaviour for running games.

XSX does not have a fast SSD?

Why would XSX games design not change too?

MS have a very fast SSD and, from everything we've been told, a great I/O stack and set of optimisations; in itself it deserves praise (particularly their texture solution). I don't question whether their solution will change game design; it will. But the systems have clear differences in priorities and Sony's solution has simply gone further. When Cerny refers to "the dream" from a developer perspective, he's referring to lifting the bottleneck and reaching a greater threshold where you can just load what you want on the fly; they seem to have surpassed that threshold.

I have been thinking about this.

Tempest is an AMD GPU CU modified to work like an SPU, right?

...

Tempest is the second GPU!

Jk but it's funny that the theory has come true on a technical level, albeit obviously for a different purpose.
