Right. Even on my beefy-ass work laptop, I know that when the fans kick in, CPU usage hitting 100% only happens in certain instances, and the same goes for the GPU. So I just don't understand this concern that it won't give the exact power when needed in 90% of scenarios.

PlayStation 5 System Architecture Deep Dive |OT| Secret Agent Cerny

- Thread starter vestan

- Status

- Not open for further replies.

>Yeah, and the important thing people need to realize is that these worst case scenarios are usually not areas where a game is even running out of performance. These kinds of "power virus" loads, as AMD has described them in the past, are usually caused by very simple scenes that start running at hundreds of frames per second. The example Cerny uses is a simple map screen. On PCs the FurMark application was notorious for being able to set video cards on fire until GPUs added thermal protection routines to defend against that kind of process. So it doesn't matter if the clock speed drops under 2 GHz in such a scenario, as you aren't losing any meaningful game performance, just saving wasted power consumption.

That's an excellent point. This is probably a dumb question, but is there a reason why some developers don't add frame rate caps to pause screens and the like? My PS4 Pro sounds the worst on menu screens and map screens because I think the frame rate is uncapped. Is there something preventing them from capping the frame rate on those screens?

The higher fps helps with smoothness, so they let it happen, but there is nothing stopping them from capping it.
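For what it's worth, the logic of a cap is tiny. Here is a minimal sketch, assuming a hypothetical render loop that measures how long each frame took. The function name `idle_time_for_cap`, the 2 ms frame time and the 60 fps target are all made up for illustration and are not taken from any real engine.

```python
def idle_time_for_cap(frame_seconds: float, target_fps: float) -> float:
    """Seconds to wait after a frame so the loop never exceeds target_fps."""
    budget = 1.0 / target_fps  # time each frame is allowed to occupy
    return max(0.0, budget - frame_seconds)

# Hypothetical map screen that renders in 2 ms: 500 fps if left uncapped.
frame_seconds = 0.002
uncapped_fps = 1.0 / frame_seconds
wait = idle_time_for_cap(frame_seconds, target_fps=60)
capped_fps = 1.0 / (frame_seconds + wait)
print(f"uncapped: {uncapped_fps:.0f} fps, capped: {capped_fps:.0f} fps")
```

The GPU sits idle during the wait instead of redrawing the same simple scene hundreds of times per second, which is where the saved power and fan noise would come from.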

>He said that with the old method.

>Where are you seeing him say it's not possible with the method they're using now?

>With the new method they can downclock the GPU to use less power. Like I said, 200 MHz would be 10%, and that is if the CPU is also using its full power.

>We are talking about during gameplay, not something as simple as, let's say, a map screen, which was his example.

Yeah, they can lower the clock, and that's why they can reach higher peak clocks, but that's not what I'm arguing about.

Regarding power consumption, yeah, it decreases exponentially, but that also means it increases exponentially from the base 1.7-1.8 GHz the GPU was first designed to run at.

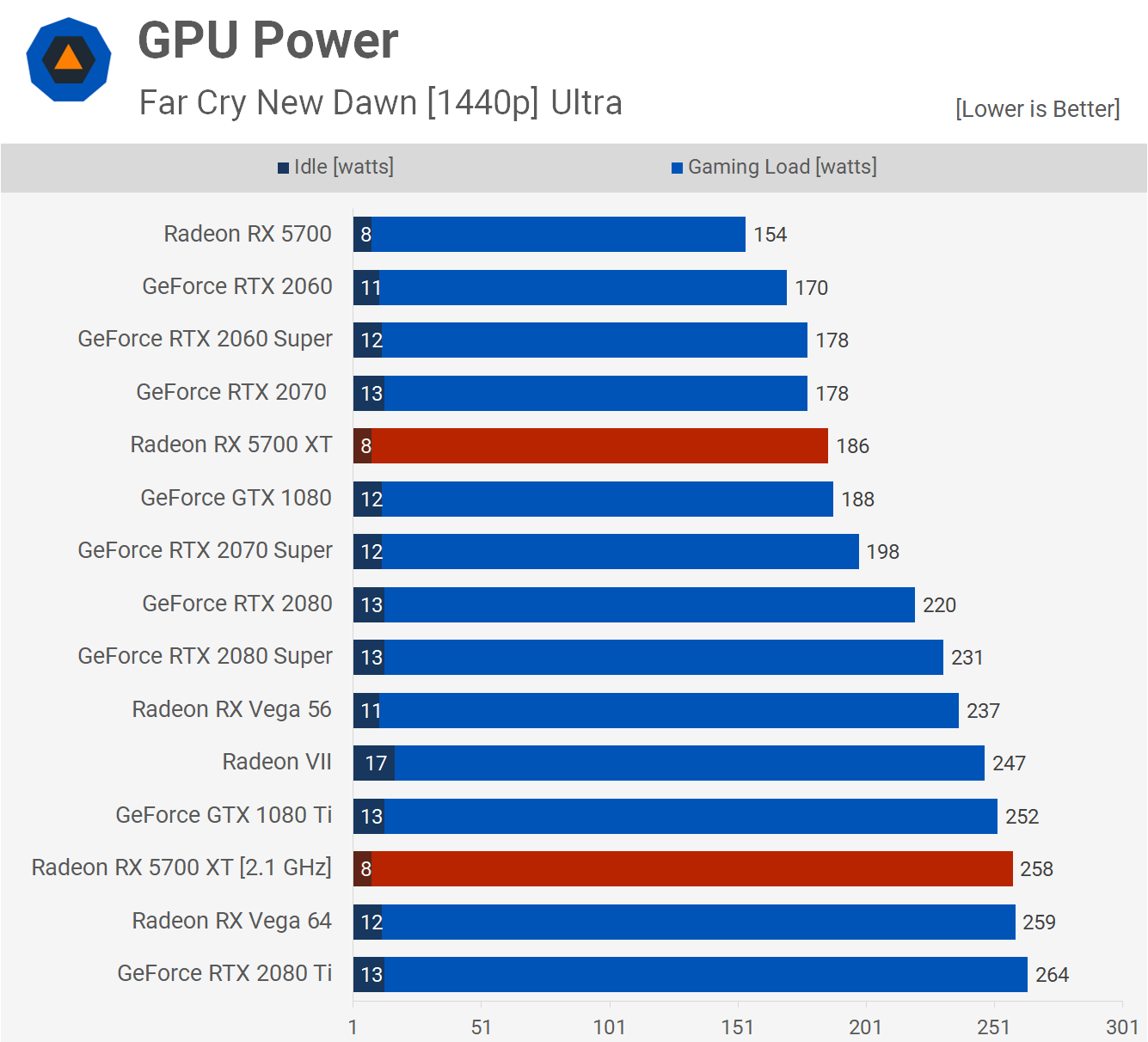

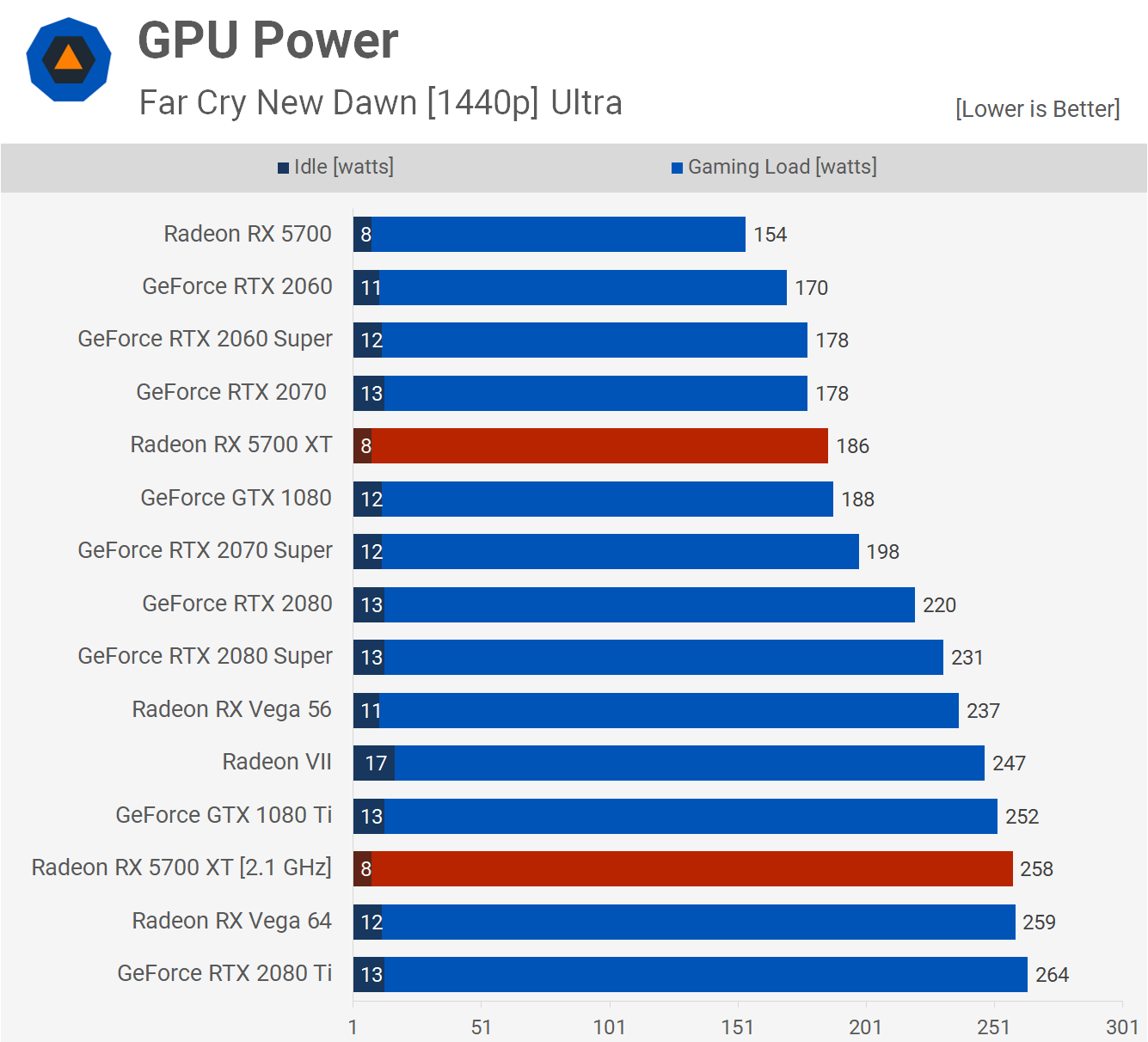

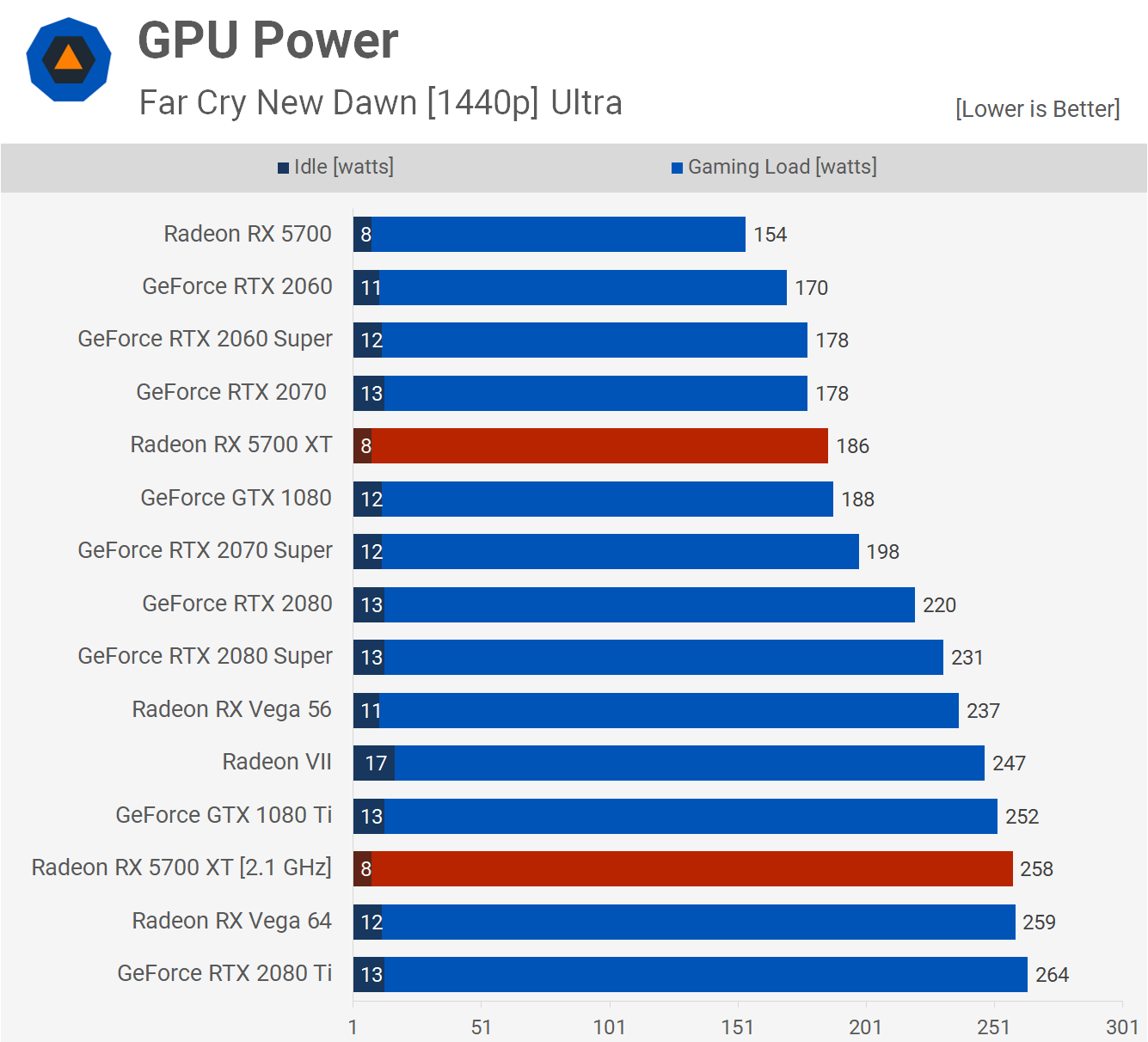

As I posted before, this is the power consumption of a 5700 XT (RDNA1) at 2.1 GHz:

I'm assuming close to 100% load because it's running Far Cry at 1440p at 80-100 fps. But look at the power drawn.

RDNA2 will bring these values down, sure, but we are way past what's reasonable on a console. Which is why I'm saying we don't actually have nearly enough data to make claims about how PS5 clocks will vary, because we don't know any of the variables (base and peak wattages, etc.).

It really seems like Sony made a huge mistake putting a stock RX 5700 XT in the PS5. What a blunder.

Well, maybe we should go by what the people who are using RDNA 2 parts are saying.

If we go by AMD, it would be half of that.

If RDNA 2 was just like RDNA 1, there is no way MS could have put a 52 CU GPU at 1800 MHz in a console either.

Just work out how much power that would have used on RDNA 1 with your chart.

>I wouldn't say that the fan issue is what drove them to varying clocks. We know from the leaked tests that they were trying to make a fixed 2 GHz work and went through several revisions to try to make it work.

>Varying clocks was definitely the solution they found to the problem of 2 GHz not being sustainable.

It's not just that. The entire paradigm is basically designed so that the worst case heating issue is essentially the same as the best case. Constant power instead of constant frequency. This way fan speed is a constant.

>I honestly don't think anything but something extremely poorly coded would draw that much power. I honestly think at most you could see power drop 15%, and that's a highly extreme scenario, possibly something that happens in the rarest of cases late as hell in the generation. For all intents and purposes we can assume, based on all given information, that those instances are rare enough to have us stop debating it.

Simply has to do with the types of instructions needed.

>We literally are arguing about, at worst, a 10% chance of it happening, and not looking at the 90% of the time it works exactly as designed.

Exactly. It's good news I think. I think it suggests that 2 GHz will be about what we could expect for the worst case of the worst case.

I suggested a page or two back, that we will probably see something like:

90% of the time: 2.23 GHz

9.5% of the time: 2.1 GHz

0.5% of the time: 2 GHz
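As a rough sanity check on what a split like that would mean, here is a small sketch. The percentages are the guess above, not measurements. The 36 CU count follows from the PS5 having four fewer CUs than a 5700 XT, and 64 lanes per CU with 2 FLOPs per clock (one fused multiply-add) is the usual way the headline TF figure is computed. The names `split` and `tflops` are just for this example.

```python
# Guessed time split from the post above: (fraction of time, clock in GHz).
split = [(0.90, 2.23), (0.095, 2.10), (0.005, 2.00)]

CUS = 36             # PS5 compute units
LANES_PER_CU = 64    # shader lanes per CU
FLOPS_PER_CLOCK = 2  # one fused multiply-add counts as two FLOPs

def tflops(clock_ghz: float) -> float:
    """Headline single-precision TFLOPS at a given GPU clock."""
    return CUS * LANES_PER_CU * FLOPS_PER_CLOCK * clock_ghz / 1000.0

# Time-weighted average clock under the guessed split.
avg_clock = sum(share * clock for share, clock in split)
print(f"average clock: {avg_clock:.4f} GHz")
print(f"peak: {tflops(2.23):.2f} TF, time-weighted: {tflops(avg_clock):.2f} TF")
```

Under that guess, the time-weighted figure lands within about 1% of the peak number.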

Also, you are taking his 2 GHz quote out of context. He said that if they were trying to keep the speed at 2 GHz using the same conventional means they used with PS4, PS5 wouldn't have been able to sustain it.

All_the_THANKYOU.gifs

That's been bugging me for a while across different threads but you've put it better than I could have.

People have to stop thinking in existing PC terms. What's happening this generation is evolutionary.

Yup, and that's more than likely exactly what we should expect. The super interesting thing in ALL of this is what this translates to for future Sony consoles. I don't know how prevalent a solution like this (power draw vs. board temps) is. But this could mean crazy-high GPU MHz in the future with fewer CUs, which would allow them to add more things to chips.

Right. These consoles are meant to do one thing, so they have way more liberty to get a little crazy and evolutionary.

The best thing about these advancements is that they are applicable across the board, regardless of platform or maybe even application (eg gaming). This gen will be literally epic. (I didn't see what I did there. No pun intended.)

Right. I'm actually curious how long using power draw to determine cooling has been a theory. It definitely has more applications too.

Some of us were talking about this a while back, and Matt even said that if it was possible and MS had this set up, they would have gotten an even more powerful console.

Of course they would have had to change certain things, so this was all hypothetical.

But we do have mid-gen consoles now, so it's going to be interesting to see what happens going forward.

10.28 TF - 1.08 TF = 9.2 TF

Guys, I did it. I solved how they're gonna hit 9.2 TF

What do I win?

Oh hell yeah they would've, which is pretty crazy to think.

While there are more pros than cons for Sony's power solution, the drawback is that it will require additional optimization work for devs. So while MS left performance on the table, perhaps they felt the trade-off wasn't worth it. There's no guarantee a mid-gen refresh adopts this approach.

A $50 PSN card. Cancel the raffle.

He said 2 GHz at all times was not sustainable. That literally means that there are workloads where they had to go below 2 GHz or the consumption was too high. And apparently often enough that they were not willing to keep it.

There are often synthetic workloads that exercise designs far beyond what will ever happen in meaningful, productive use. Exploiting full parallelism with FMAD instructions using three constants over and over in a tight loop without ever using the results will demand far more power than doing anything useful, but you have to account for it when validating a design. No game will ever intentionally ship with this code, so whether or not it can run sustained at 2GHz is an academic discussion at best. The history of computing has several examples of similarly non-productive use cases sometimes referred to under the general banner of "Halt and Catch Fire" instructions.

So no, you can't execute these kinds of useless workloads at the default clock speed. Check. Optimizing for running malicious code strikes me as meaningless compared to designing a product to deal well with actual workloads. How often these come up is irrelevant - your product needs to deal with the worst case even if it's code nobody should ever ship, partly because the same consoles will be used in test environments where ill-behaved code could well get caught in a meaningless loop. Not to mention, if it occurs in a shipping game you'd want the console to survive even if the game locks up doing this kind of meaningless work.

The proof is in the pudding, so to speak, so given that we're going to know in a week or two what some games will look like? I think some of the alarmist claims can be put aside for a bit. I can't say I blame regular posters in the thread for being tired of Yet Another Account aggressively pursuing the exact same concern that has seen multiple accounts banned in the past. It's not that this subject hasn't been discussed ad infinitum: it has. If you're interested, there's plenty of historical reading to catch up, complete with implied cautionary tales.

>While there are more pros than cons for Sony's power solution, the drawback is that it will require additional optimization work for devs.

I don't think this is true. Pretty sure Cerny said devs won't have to do anything.

Thank you so much for sharing that. It's a phenomenal overview not just of where MM landed, but some of the abandoned approaches show incredible promise for future exploration. Not that it's particularly consumable without some background in a bunch of relevant areas, but it can also serve to suggest where folks of a technical nature might want to brush up on related reading. I had seen some high level overviews in the past and could fill in some of the gaps with a little imagination but nothing that came close to the kind of detail and insight presented here.

This is what I'm most excited about as hardware advances. Not just more of what we do today, but experimental new rendering strategies that make whole new aesthetics and experiences possible that aren't just revisiting well trodden paths at higher resolutions and frame rates.

Glad you found it useful! Yeah, this line of work with volumetric geometry representations and polygonization opens up a whole new field of interesting and novel possibilities for rendering. It's not "solved" as cleanly as our traditional meshes yet, but that's part of the beauty.

Incidentally, that's why I'm still pretty stoked about Texture Space Shading too, because it basically upends the whole shading pipeline. By decoupling rendering and shading you can do so much crazy stuff that goes way beyond squeezing a few extra frames here and there (also, VR - I mean, nothing will ever beat being able to render a second viewport pretty much for free).

Your supposition that only synthetic workloads caused frequency drops below 2 GHz is baseless.

Now where did she say that? She was using that as an example to show you still have to think about that sort of stuff when it comes to power allotment.

I don't think I actually made that claim. More to the point, any supposition to the contrary would be equally baseless.

Oh, that's interesting...

Well, the reason I was going with the harsh locked/unlocked thing is so it would be tied to the console and not based on some sort of agreement between seller and user, which would require qualifications to be met.

What I am suggesting basically would mean anyone from anywhere in the world can buy a locked console and would just have to load a fixed amount onto it every year for up to three times/years, regardless of where they are in the world. Sony/MS wouldn't care who bought the console, and anyone buying one isn't tied to a 2/3 year agreement. They can sell it whenever they want to. And no commitments need to be made.

I want the reverse.

Buy 10 years of PS+, get a free PS5.

>I don't think I actually made that claim. More to the point, any supposition to the contrary would be equally baseless.

No argument from me on your second sentence. We don't know yet. Which is why anyone arguing with certainty deserves to be chastised. (Not saying that's you.)

It's a bit silly to make comparisons to the 5700 XT. We have no idea about the PS5's power consumption figures, and it's a different architecture, likely on a different process node, that has probably been designed from the beginning with high frequency in mind. The power curve sweet spot is not going to be the same.

>Yeah, and the important thing people need to realize is that these worst case scenarios are usually not areas where a game is even running out of performance. These kinds of "power virus" loads, as AMD has described them in the past, are usually caused by very simple scenes that start running at hundreds of frames per second. The example Cerny uses is a simple map screen. On PCs the FurMark application was notorious for being able to set video cards on fire until GPUs added thermal protection routines to defend against that kind of process. So it doesn't matter if the clock speed drops under 2 GHz in such a scenario, as you aren't losing any meaningful game performance, just saving wasted power consumption.

Yeah, I think this might in fact be the case: PS5 actually can't stay above 2 GHz at all times, but the only times it drops below are these power virus moments like the Horizon map screen. That would match what Cerny was saying very well.

>But those FFB control devices are much larger as well, so I don't expect that the particularities around the cost of those devices can apply to the DualSense. Unless you have something in mind other than FFB racing wheels?

Wheels, joysticks, the Novint Falcon, VR controllers, laparoscopy training rigs... there are lots of things with haptics. They're not directly comparable, of course, and some of these devices are very expensive to build in total. But the point isn't the total cost, just the incremental cost to add FFB, as a percentage of the overall BOM.

Also, there's at least one Sony patent that could apply very much to the DualSense. (I recall another with a different mechanism, but I can't find it now.) And as I said, it shows very little extra cost of components.

>Texture sampling is a much simpler problem to solve: you're casting to a point on a known triangle and then working out which parts of a texture should be drawn there, based on a known set of UV coordinates.

>In the case of a super high poly model we're dealing with geometry first, so you have to perform many, many tests to work out which polys you need.

Of course, but obviously Epic have cracked that. The demo was running on an actual PS5. So I'm not sure where your concern comes from about fitting so much geometry into memory. They certainly can, even if we don't know precisely how that's achieved.

>This is definitely not false. Yeah, power draw is not linear, so the claim that a 2% clock reduction cuts power draw by 10% might be true, but at 2.23 GHz the GPU would be far past the point where a 10% drop in power consumption might be enough.

You don't know this for sure, because you're basing that statement on RDNA1. Improved power consumption is a bullet point feature for its successor, and this is very liable to change the exact shape of the curve.

>For reference, a 5700 XT at 2.1 GHz (4 more CUs than PS5, but at lower clocks) is already at 258W, up 100W from its base clock consumption.

No, it's up 72W. And again, this is RDNA1. We don't know what RDNA2 will look like.

>BTW, that's another eye opener for the "clocks will make PS5 flops more efficient" argument: they used liquid cooling to get the 5700 XT to 2.1 GHz and saw at most a 7% increase, making the card more expensive than a 2070 Super with around the same performance while consuming 90W more... Definitely not efficient. Like, at all.

People saying the PS5 design is more efficient aren't necessarily speaking in power draw terms. They may be referring to faster caches, rasterization, etc.

>There are often synthetic workloads that exercise designs far beyond what will ever happen in meaningful, productive use. Exploiting full parallelism with FMAD instructions using three constants over and over in a tight loop without ever using the results will demand far more power than doing anything useful, but you have to account for it when validating a design. No game will ever intentionally ship with this code, so whether or not it can run sustained at 2GHz is an academic discussion at best. The history of computing has several examples of similarly non-productive use cases sometimes referred to under the general banner of "Halt and Catch Fire" instructions.

I'll take any opportunity to post this opening.

>I don't think I actually made that claim. More to the point, any supposition to the contrary would be equally baseless.

I actually don't know if I'd agree with that. We have Cerny outright saying that you only need a 2% drop in frequency to get a 10% TDP drop off - aka you would only need to drop to maybe 2.18GHz for that. I know that the ratio will compress the lower you go, but it seems like needing to go all the way down to 2GHz would result in at least a 25-30% TDP drop. I'm hardly an expert but that sounds tremendously unlikely outside of the extreme power virus events like the map screen. Literally the only reason why anyone even suspects the console going below 2GHz is a thing is because of what he said about how it couldn't sustain that at fixed clocks - it doesn't match up with his other statements.
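To put rough numbers on that, here is a sketch that assumes power scales as a fixed power of frequency, with the exponent fitted to the single data point quoted from Cerny (a 2% frequency drop for a 10% power drop). The constant-exponent model is an assumption for illustration only. As noted above, the real ratio compresses at lower clocks, so treat the output as a ballpark and nothing more.

```python
import math

PEAK_GHZ = 2.23

# Fit P ~ f**n to "a 2% frequency drop gives a 10% power drop".
n = math.log(0.90) / math.log(0.98)

def power_fraction(clock_ghz: float) -> float:
    """Power relative to peak under the assumed constant-exponent model."""
    return (clock_ghz / PEAK_GHZ) ** n

for clock in (PEAK_GHZ * 0.98, 2.10, 2.00):
    drop = 1.0 - power_fraction(clock)
    print(f"{clock:.3f} GHz -> {drop:.0%} less power than at {PEAK_GHZ} GHz")
```

Under this model, 2 GHz comes out at a bit over 40% less power than the peak clock, comfortably above the 25-30% floor estimated above.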

Everyone is also assuming that PS5/RDNA2 is on 7nm. It could be one of the improved nodes which help the power consumption by 10-15%.

Most likely it'll be on whatever node RDNA2 is using in general, which is responsible for some part of the 50% PPW increase.

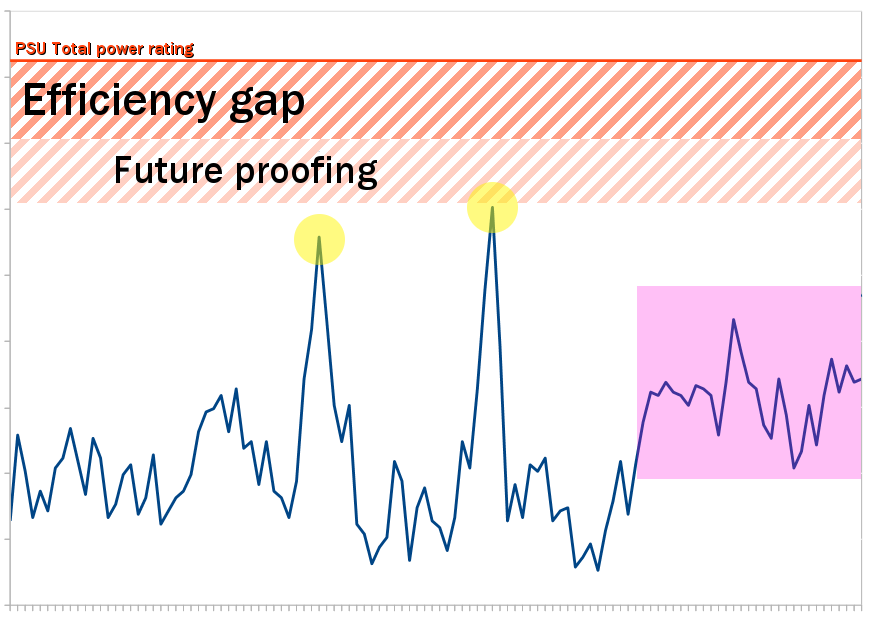

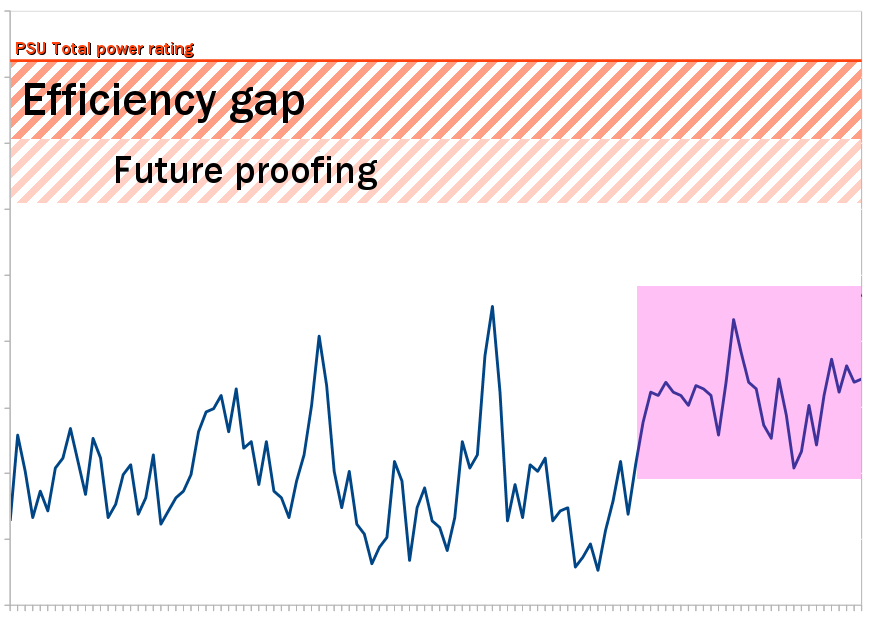

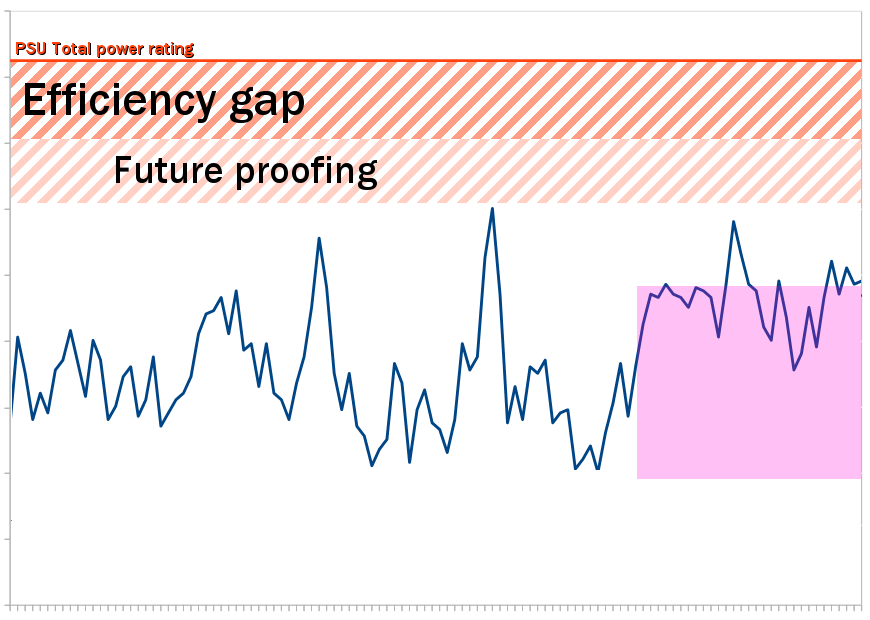

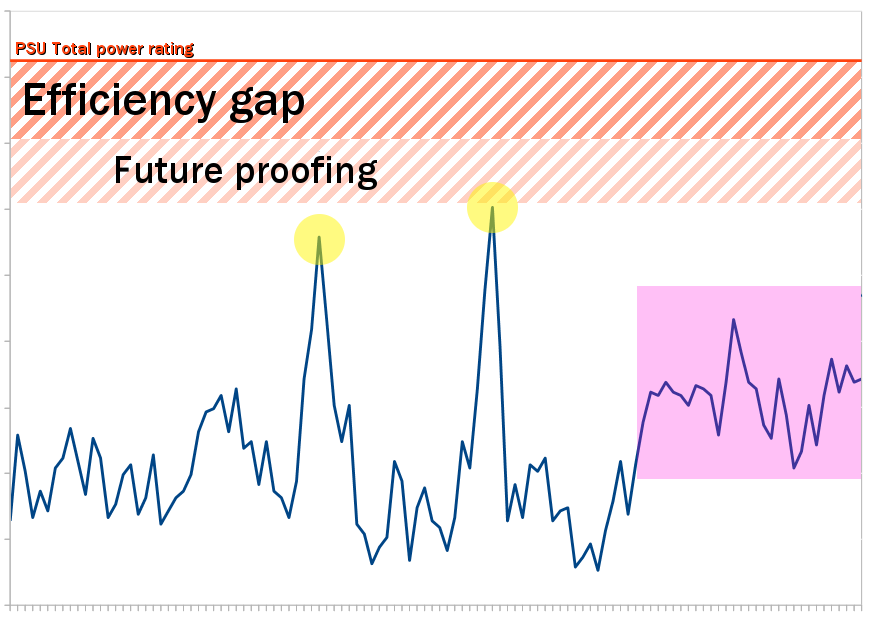

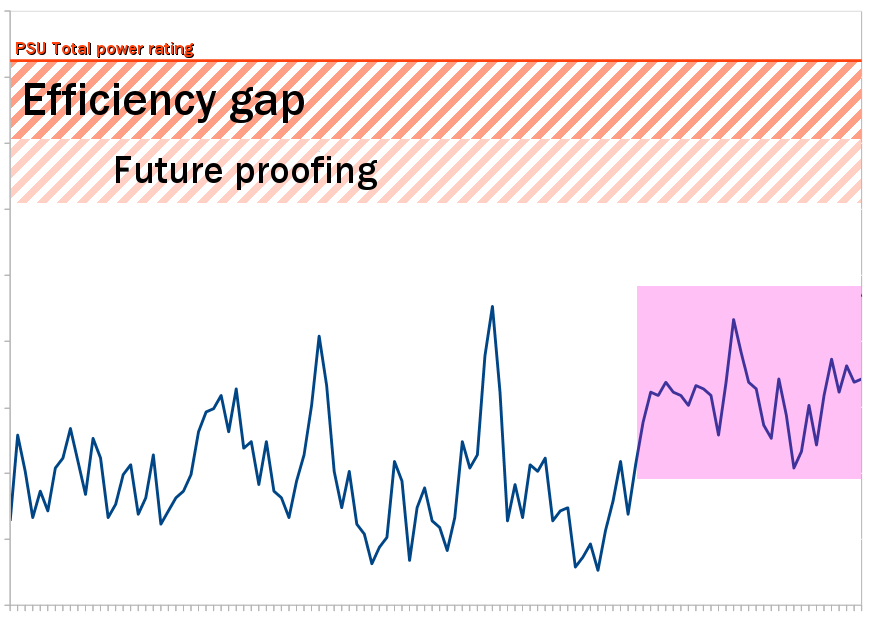

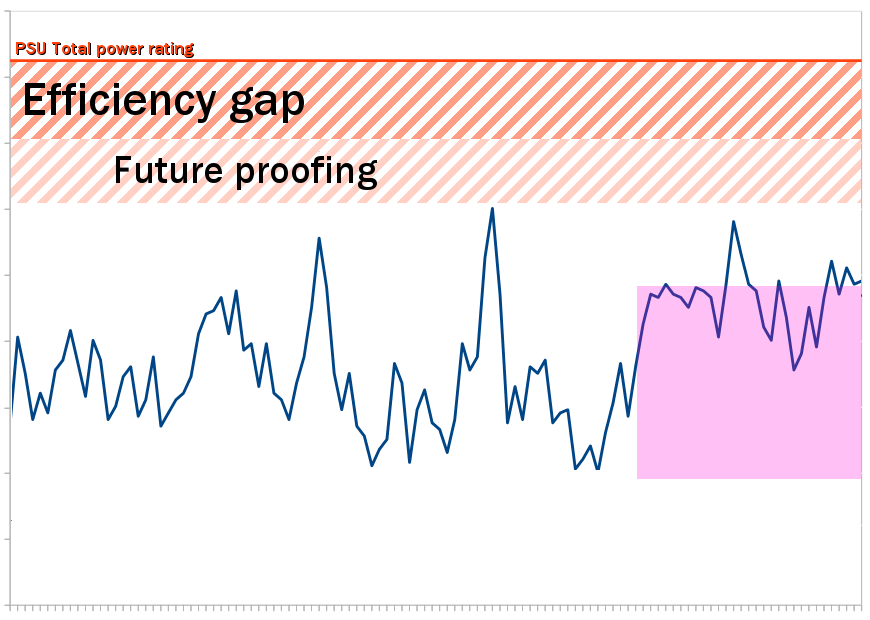

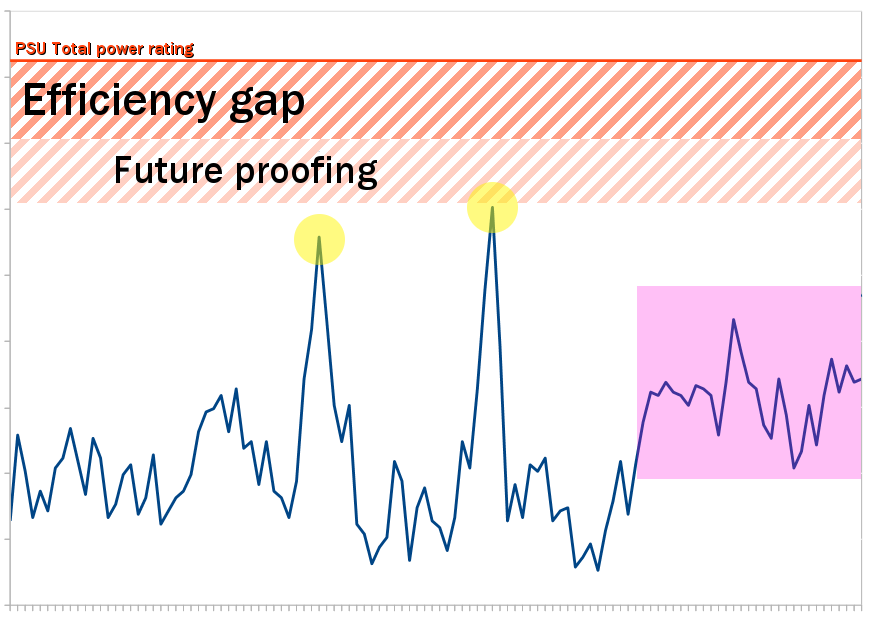

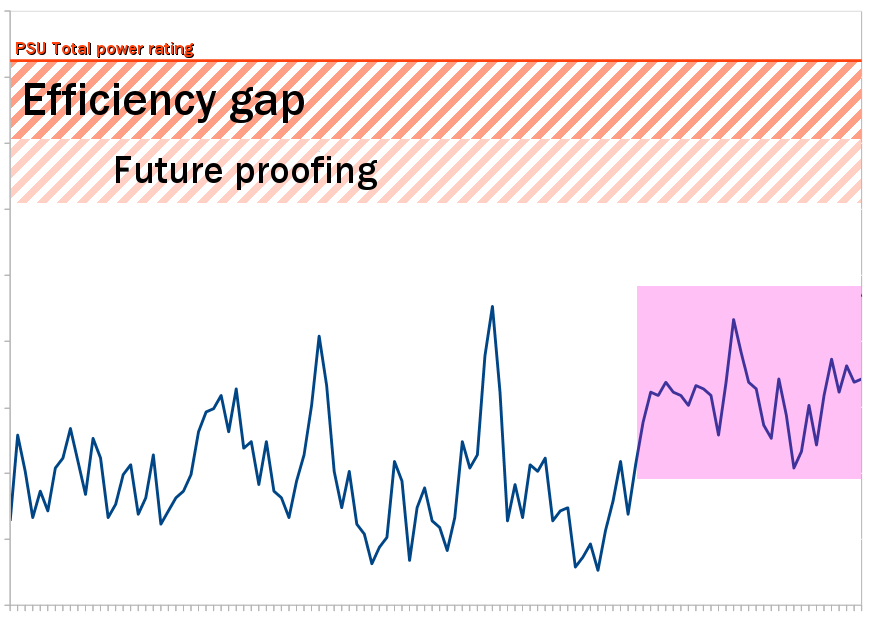

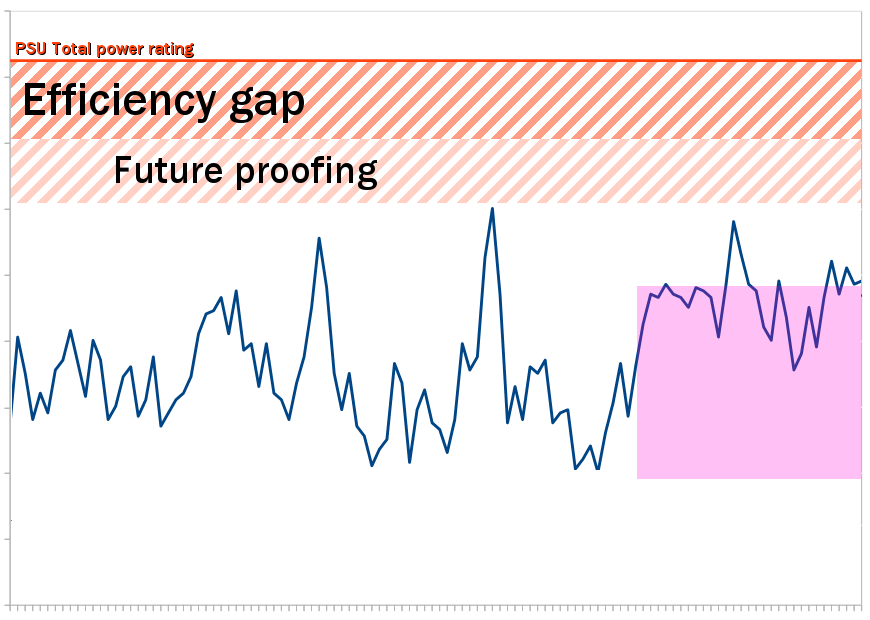

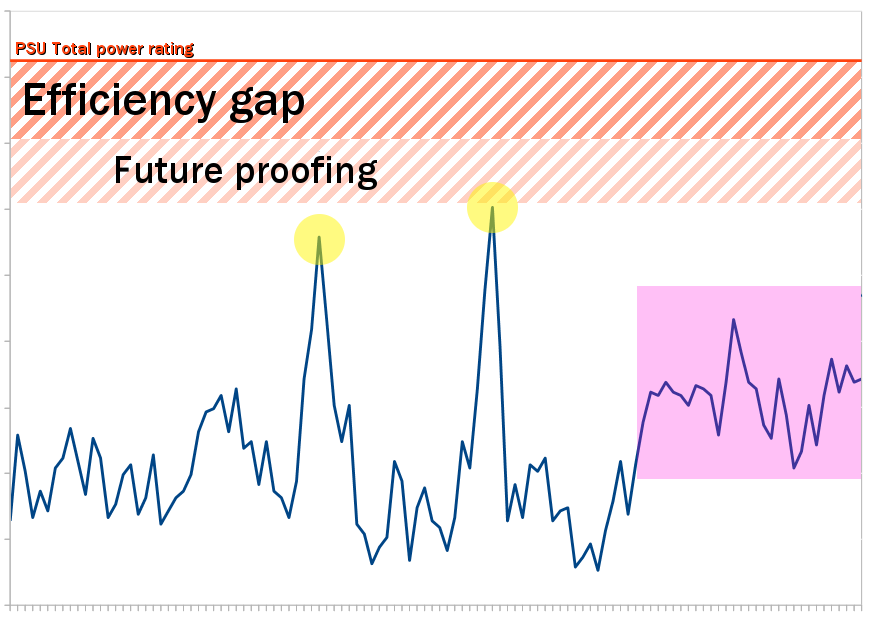

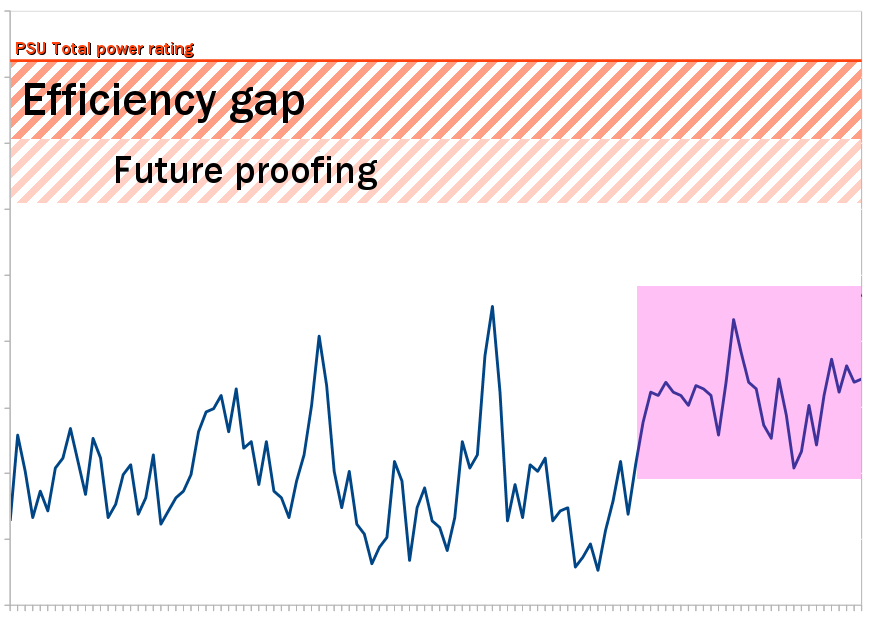

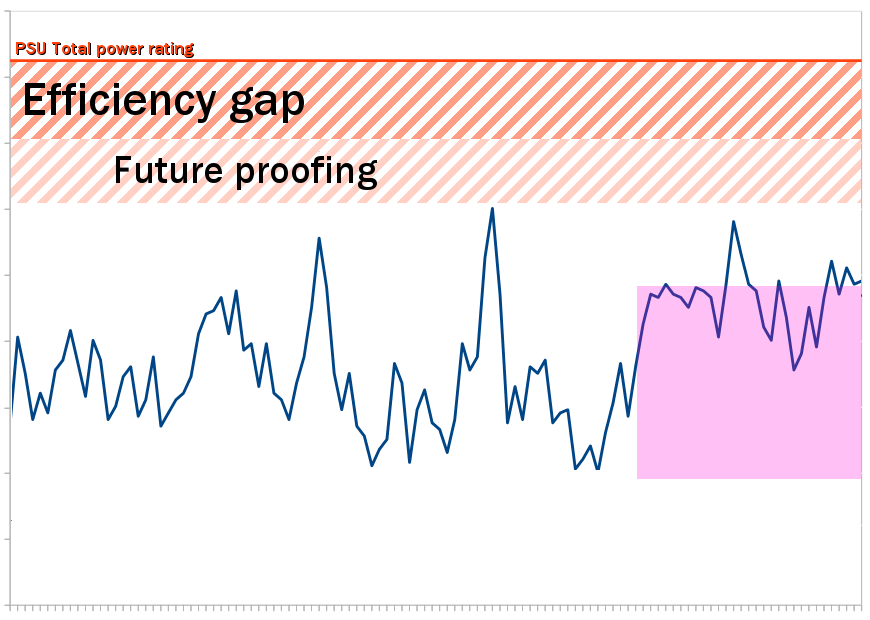

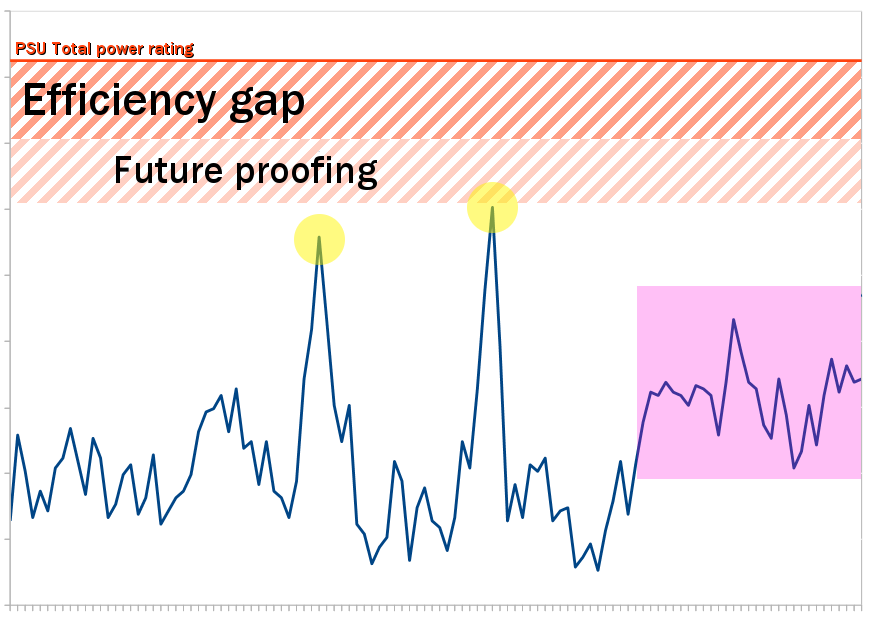

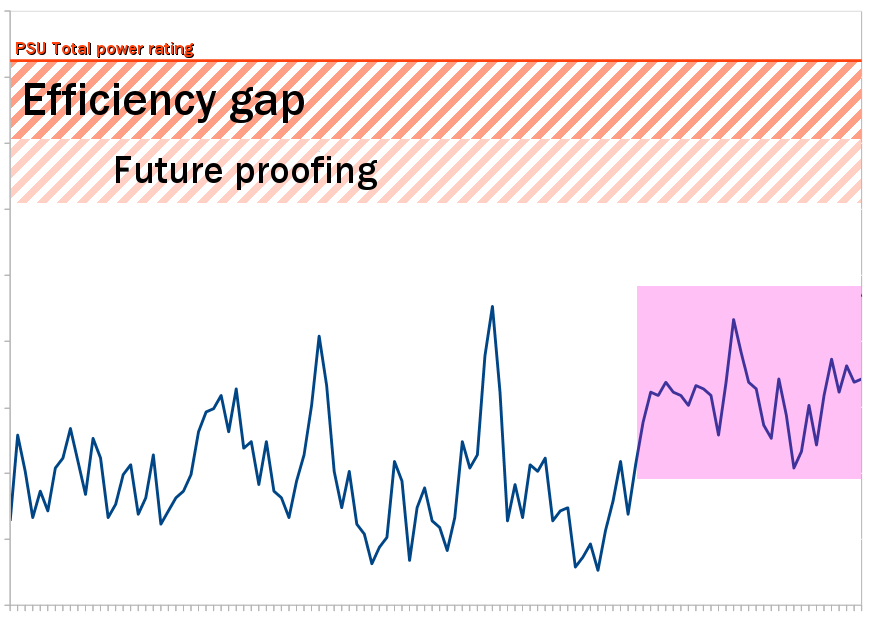

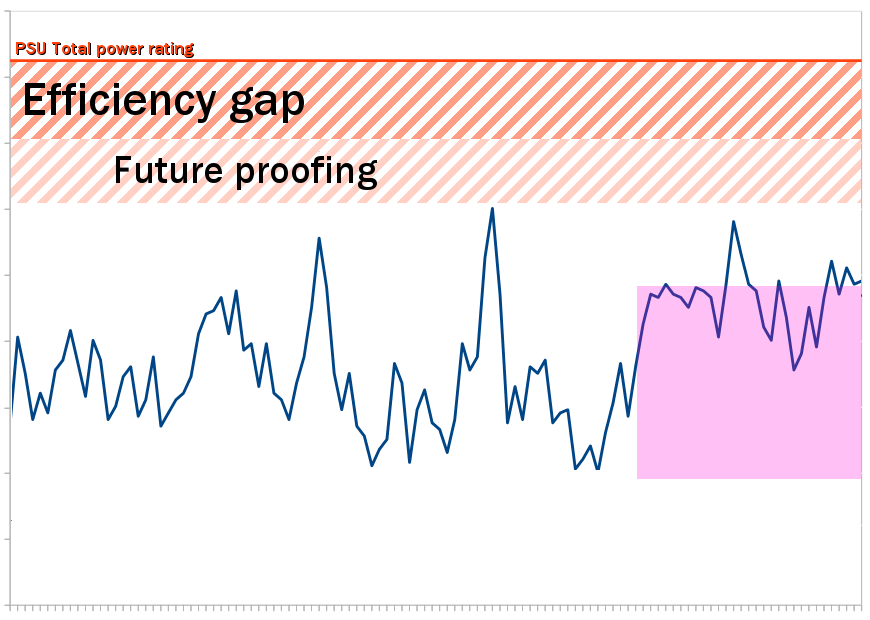

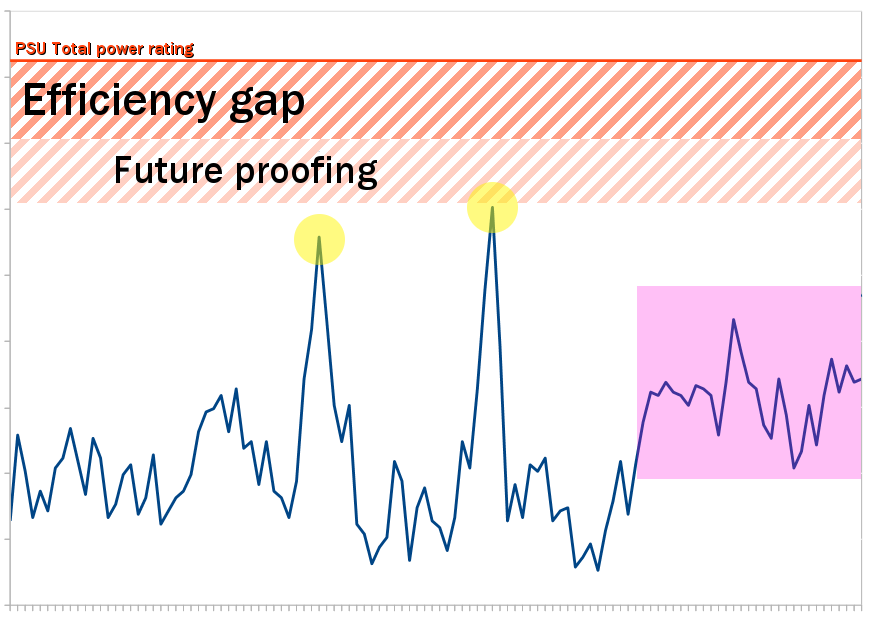

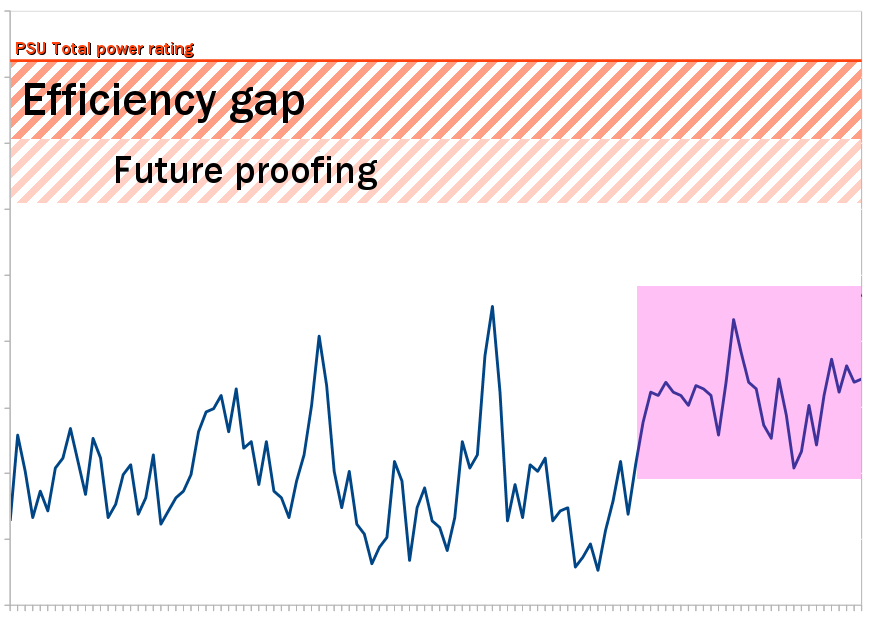

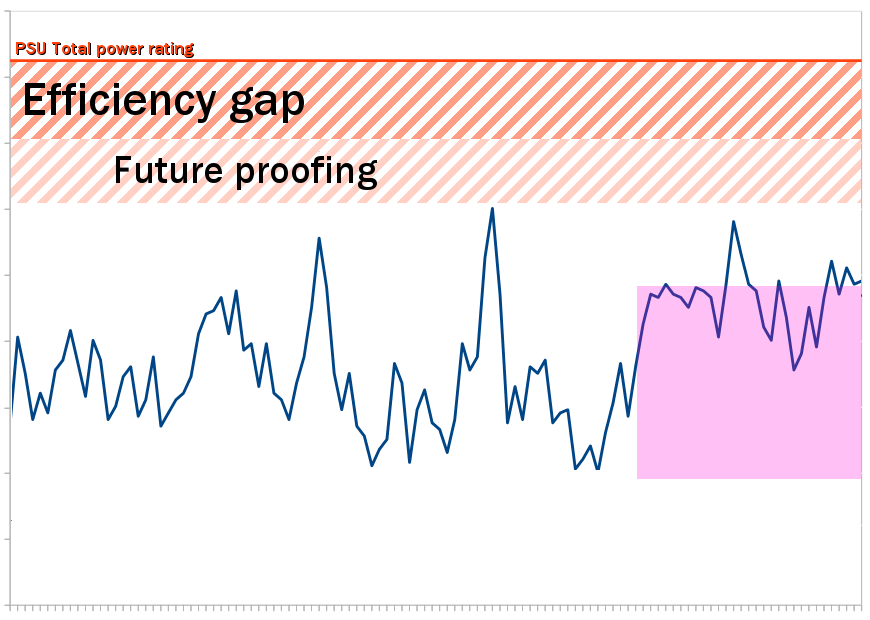

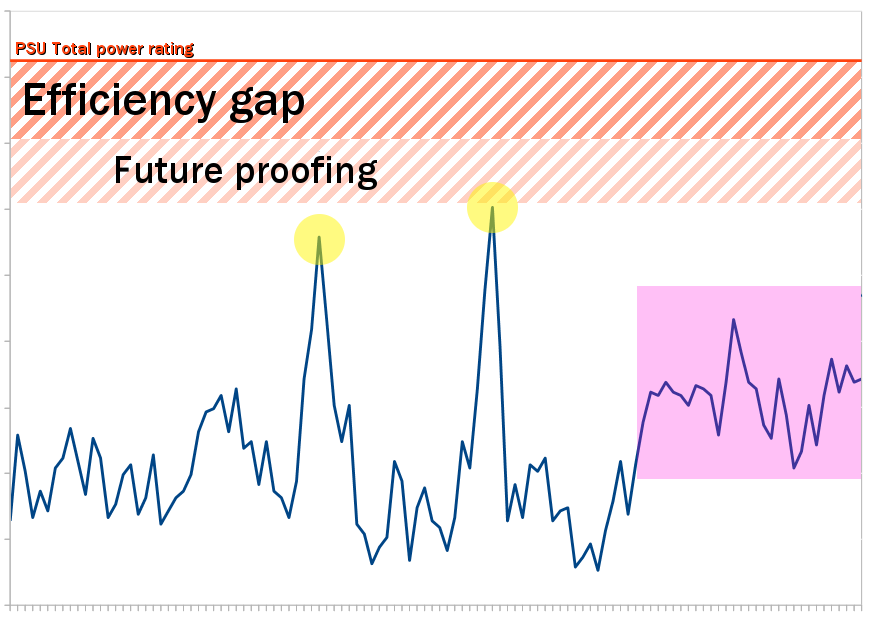

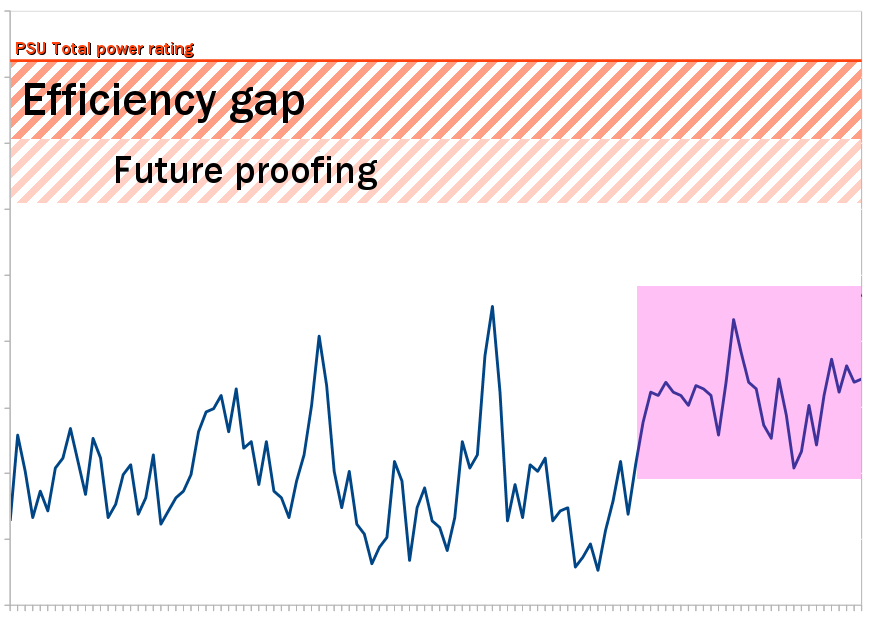

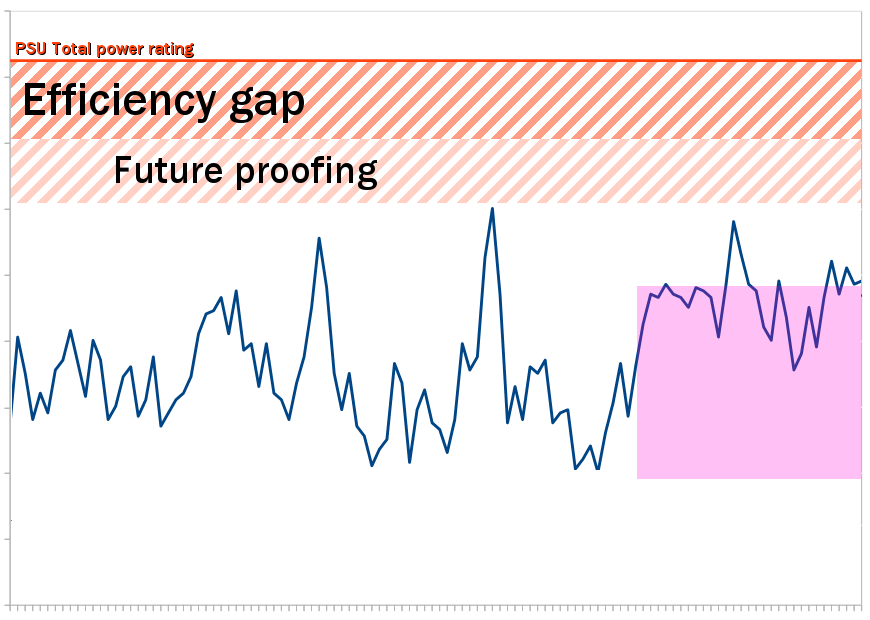

Visual Explanation of Power Rating

>How do they work then?

>Because Sony definitely did not tell us. But I don't see how they could magically never drop below 2 GHz if it can't sustain 2 GHz at all times, please enlighten me. I'm seriously open to learning if there is a way.

Sony did tell us how their design works. The thing you're missing is that the PS5 approach is not just letting clocks be variable, like uncapping a framerate. That would indeed have no effect on the lowest dips in frequency. But they've also changed the trigger for throttling from temperature to silicon activity. And that actually changes how much power can be supplied to the chip without issues. This is because the patterns of GPU power needs aren't straightforward.

Here's a depiction of the change. (This is not real data, just for illustrative purposes of the general principle.) The blue line represents power draw over time, for profiled game code. The solid orange line represents the minimum power supply that would need to be used for this profile. Indeed, actual power draw must stay well below the rated capacity. Power supplies function best when actually drawing ~80% of their rating. And when designing a console the architects, working solely from current code, will build in a buffer zone to accommodate ever more demanding scenarios projected for years down the line.

You'd think the tallest peaks, highlighted in yellow, would be when the craziest visuals are happening onscreen in the game: many characters, destruction, smoke, lights, etc. But in fact, that's often not the case. Such impressive scenes are so complicated, the calculations necessary to render them bump into each other and stall briefly. Every transistor on the GPU may need to be in action, but some have to wait on work, so they don't all flip with every tick of the clock. So those scenes, highlighted in pink, don't contain the greatest spikes. (Though note that their sustained need is indeed higher.)

Instead, the yellow peaks are when there's work that's complex enough to spread over the whole chip, but just simple enough that it can flow smoothly without tripping over itself. Unbounded framerates can skyrocket, or background processes cycle over and over without meaningful effect. The useful work could be done with a lot less energy, but because clockspeed is fixed, the scenes blitz as fast as possible, spiking power draw.

Sony's approach is to sense for these abnormal spikes in activity, when utilization explodes, and preemptively reduce clockspeed. As mentioned, even at the lower speed, these blitz events are still capable of doing the necessary work. The user sees no quality loss. But now behind the scenes, the events are no longer overworking the GPU for no visible advantage.

But now we have lots of new headroom between our highest spikes and the power supply buffer zone. How can we easily use that? Simply by raising the clockspeed until the highest peaks are back at the limit. Since total power draw is a function of number of transistors flipped, times how fast they're flipping, the power drawn rises across the board. But now, the non-peak parts of your code have more oomph. There's literally more computing power to throw at the useful work. You can increase visible quality for the user in all the non-blitz scenes, which is the vast majority of the game.

Look what that's done. The heaviest, most impressive scenarios are now closer to the ceiling, meaning these most crucial events are leaving fewer resources untapped. The variability of power draw has gone down, meaning it's easier to predictively design a cooling solution that remains quiet more often. You're probably even able to reduce the future proofing buffer zone, and raise speed even more (though I haven't shown that here). Whatever unexpected spikes do occur, they won't endanger power stability (and fear of them won't push the efficiency of all work down in the design phase, only reduce the spikes themselves). All this without any need to change the power supply, GPU silicon, or spend time optimizing the game code.

Keep in mind that these pictures are for clarity, and specifics about exactly how much extra power is made available, how often and far clockspeed may dip, etc. aren't derivable from them. But I think the general idea comes through strongly. It shows why, though PS5's GPU couldn't be set to 2GHz with fixed clocks, that doesn't necessarily mean it must still fall below 2 GHz sometimes. Sony's approach changes the power profile's shape, making different goals achievable.

I'll end with this (slowly) animated version of the above.
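The same idea can be sketched as a toy model. Everything here is an assumption for illustration, in the same spirit as the pictures: power is modelled as activity times clock cubed (on the assumption that voltage rises with clock), the activity trace is invented, and the budget is in arbitrary units. None of it is real PS5 data.

```python
EXPONENT = 3.0  # assumed: power ~ activity * clock**3

def power(activity: float, clock_ghz: float) -> float:
    """Toy power draw in arbitrary units."""
    return activity * clock_ghz ** EXPONENT

def clock_at_budget(activity: float, budget: float) -> float:
    """Highest clock that keeps this activity level within the power budget."""
    return (budget / activity) ** (1.0 / EXPONENT)

# Made-up activity trace (share of transistors flipping per tick).
# 1.0 marks the "blitz" spikes; 0.8 is the heaviest real gameplay scene.
trace = [0.60, 0.70, 1.00, 0.80, 0.65, 1.00, 0.75]
budget = power(1.0, 2.0)

# Fixed clocks: one frequency has to be safe for the worst spike.
fixed = clock_at_budget(max(trace), budget)

# Activity-based: run at a higher ceiling and back off only during spikes.
ceiling = clock_at_budget(0.80, budget)
variable = [min(ceiling, clock_at_budget(a, budget)) for a in trace]

for a, c in zip(trace, variable):
    print(f"activity {a:.2f}: fixed {fixed:.2f} GHz, variable {c:.2f} GHz, "
          f"power {power(a, c):.2f} of {budget:.2f}")
```

In this toy trace the spike samples run at the clock a fixed design would have to use all the time, while every other sample runs faster within the same budget. How far real clocks move, and how often, can't be read off a model like this.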

Last edited:

Stellar post.Sony did tell us how their design works. The thing you're missing is that the PS5 approach is not just letting clocks be variable, like uncapping a framerate. That would indeed have no effect on the lowest dips in frequency. But they've also changed the trigger for throttling from temperature to silicon activity. And that actually changes how much power can be supplied to the chip without issues. This is because the patterns of GPU power needs isn't straightforward.

Here's a depiction of the change. (This is not real data, just for illustrative purposes of the general principle.) The blue line represents power draw over time, for profiled game code. The solid orange line represents the minimum power supply that would need to be used for this profile. Indeed, actual power draw needs to stay well below that. Power supplies function best when actually drawing ~80% of their rating. And when designing a console the architects, working solely from current code, will build in a buffer zone to accommodate ever more demanding scenarios projected for years down the line.

You'd think the tallest peaks, highlighted in yellow, would be when the craziest visuals are happening onscreen in the game: many characters, destruction, smoke, lights, etc. But in fact, that's often not the case. Such impressive scenes are so complicated, the calculations necessary to render them bump into each other and stall briefly. Every transistor on the GPU may need to be in action, but some have to wait on work, so they don't all flip with every tick of the clock. So those scenes, highlighted in pink, don't contain the greatest spikes. (Though note that their sustained need is indeed higher.)

Instead, the yellow peaks are when there's work that's complex enough to spread over the whole chip, but just simple enough that it can flow smoothly without tripping over itself. Unbounded framerates can skyrocket, or background processes cycle over and over without meaningful effect. The useful work could be done with a lot less energy, but because clockspeed is fixed, the scenes blitz as fast as possible, spiking power draw.

Sony's approach is to sense for these abnormal spikes in activity, when utilization explodes, and preemptively reduce clockspeed. As mentioned, even at the lower speed, these blitz events are still capable of doing the necessary work. The user sees no quality loss. But now behind the scenes, these events are no longer overworking the GPU for no visible advantage.

But now we have lots of new headroom between our highest spikes and the power supply buffer zone. How can we easily use that? Simply by raising the clockspeed until the highest peaks are back at the limit. Since total power draw is a function of number of transistors flipped, times how fast they're flipping, the power drawn rises across the board. But now, the non-peak parts of your code have more oomph. There's literally more computing power to throw at the useful work. You can increase visible quality for the user in all the non-blitz scenes, which is the vast majority of the game.

Look what that's done. The heaviest, most impressive scenarios are now closer to the ceiling, meaning these most crucial events are leaving fewer resources untapped. The variability of power draw has gone down, meaning it's easier to predictively design a cooling solution that remains quiet more often. You're probably even able to reduce the future-proofing buffer zone, and raise speed even more (though I haven't shown that here). Whatever unexpected spikes do occur, they won't endanger power stability (and fear of them won't push the efficiency of all work down in the design phase, only reduce the spikes themselves). All this without any need to change the power supply, GPU silicon, or spend time optimizing the game code.

Keep in mind that these pictures are for clarity, and specifics about exactly how much extra power is made available, how often and far clockspeed may dip, etc. aren't derivable from them. But I think the general idea comes through strongly. It shows why, though PS5's GPU couldn't be set to 2 GHz with fixed clocks, that doesn't necessarily mean it must still fall below 2 GHz sometimes. Sony's approach changes the power profile's shape, making different goals achievable.

I'll end with this (slowly) animated version of the above.
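For anyone who wants to poke at the idea in the post above, here is a rough toy model of it in Python. It is only a sketch under loud assumptions: power is treated as simply activity times clock (real silicon scales more steeply because voltage rises with frequency), and the budget, the activity profile, and the 130.0 peak clock are all invented numbers, not PS5 figures. The function names are hypothetical as well.

```python
# Toy model only: every number here is made up for illustration.
# "activity" is the fraction of transistors switching per clock tick (0..1).

POWER_LIMIT = 100.0  # hypothetical power budget, arbitrary units


def power(activity, clock):
    """Power ~ (how many transistors flip) x (how fast they flip)."""
    return activity * clock


def fixed_clock_for(profile, limit=POWER_LIMIT):
    """Highest fixed clock at which the worst spike still fits the budget."""
    return limit / max(profile)


def capped_clock(activity, max_clock, limit=POWER_LIMIT):
    """Activity-triggered throttle: lower the clock only when the budget would be exceeded."""
    if power(activity, max_clock) <= limit:
        return max_clock
    return limit / activity


# Activity per time slice: ordinary gameplay, two heavy scenes (0.70, 0.72),
# and one simple "blitz" spike (0.95) such as an uncapped map screen.
profile = [0.50, 0.55, 0.70, 0.72, 0.95, 0.60, 0.50]

fixed = fixed_clock_for(profile)  # dictated by the 0.95 blitz spike
variable_max = 130.0              # hypothetical peak clock for the variable design

for activity in profile:
    clock = capped_clock(activity, variable_max)
    print(f"activity={activity:.2f}  fixed={fixed:6.1f}  "
          f"variable={clock:6.1f}  power={power(activity, clock):6.1f}")
```

In this toy run, only the blitz slice gets its clock pulled down, and every other slice runs at the higher clock while staying inside the same budget. How large the real gain is, and how often real clocks dip, can't be read off a model this crude.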

Thank you, really great post and visual explanations.

Some of you really have the patience of saints and I applaud you.

There are just certain usernames that when they come up in certain threads I just scroll right past.

Great post. One of the best explanations I have read on the subject.

Holy shit. Linus just did a 180 and made a pretty honest apology video. I am impressed.

Respect. I like Linus quite a bit, but he has a flair for the dramatic, and he sometimes talks about things with authority when he doesn't actually have all the details.

This is by far the best explanation I have heard on this topic and should explain in a much better way why this is indeed a good thing and why it allows more utilization of the GPU, not less.

Thanks for putting it together.

Amazing explanation.

Well done Liabe. Unusual concept well illustrated.

This current fly on the ass of this thread pulled the same nonsense back at the start of this gen too. It's the same shit, it's boring.

Holy shit, I didn't even know it worked like this. Appreciate the education.

He did a good job with this, and touched on a number of things I've wondered about too, such as what will happen when it comes to lowest-common-denominator PC storage. I'm guessing at best they might make SATA SSDs their baseline?

OH GOD

I JUST THOUGHT OF THE WORST THING

Gamers: Devs, please design your games around SSDs!

Devs: Sure thing! *designs game around DRAM-less SSDs*

It's not based on power draw at all. Re-read or re-watch carefully what Cerny told us. If it was based on power draw alone, there would be variations between PS5 units because of the silicon lottery.

They told us it was based on power drawn, not temperature.

They told us that, especially at high clocks, a 2% drop in clock can yield a 10% drop in consumption (which was already pretty well known).

They told us that 2 GHz at all times wasn't sustainable.

They told us about race to idle: when the GPU is done in less than 33 ms, they can downclock it to give the CPU room (and likely vice versa).

They told us that the target clocks were chosen as the point where the base consumption for CPU and GPU was the same.

They told us that, from analyzing current games in development, they expect clocks to be close to peak at all times, without clarifying what close means or which workloads cause them to drop.

And none of that explains the operating ranges we can expect for PS5, or under which loads they happen, nor does it refute the fact that it will drop below 2 GHz.

Edit: Forgot one: They also told us that it can't sustain 2 GHz at all times, which is the basis for this fact.
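The "2% clock drop, 10% power drop" figure in the list above can be sanity-checked with a little arithmetic. This is only a sketch under an assumption not stated in the thread: that power scales as clock raised to some exponent, with 3 being the usual textbook approximation (power ~ frequency x voltage squared, with voltage rising roughly in step with frequency near the top of the curve). The function names are made up for the example.

```python
import math


def power_saving(clock_drop, exponent=3.0):
    """Fraction of power saved when the clock falls by clock_drop,
    assuming power ~ clock ** exponent (a simplification)."""
    return 1.0 - (1.0 - clock_drop) ** exponent


def implied_exponent(clock_drop, saving):
    """Exponent that would be needed for clock_drop to produce the given saving."""
    return math.log(1.0 - saving) / math.log(1.0 - clock_drop)


for drop in (0.01, 0.02, 0.03, 0.05):
    print(f"clock -{drop:.0%} -> power -{power_saving(drop):.1%} (cubic assumption)")

print(f"exponent implied by 2% -> 10%: {implied_exponent(0.02, 0.10):.2f}")
```

Under the plain cubic assumption, a 2% drop saves a bit under 6%, so a 10% saving from a 2% drop would imply a steeper curve than cubic at that operating point. That is plausible near the top of a voltage/frequency curve, but the real curve for this chip isn't public, so treat it as a ballpark only.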

At this point, I just want the controller to be released early so I can touch it. That's where I am emotionally. I already know the console will be amazing.

Fantastic and thorough explanation. Love that you've included actual images to help better visualise things.

To lend further credence to your point about how power and heat spikes aren't always a result of the craziest visuals or most complex scenes, Cerny actually touched on this in his presentation.

He used Horizon Zero Dawn as an example and how it was the pause map screen that caused some of the biggest power/heat spikes (anyone who's played the game could attest to their PS4 Pro's fan spinning louder during the map screen), due to the way the engine/system deals with processing more simplistic or much lower triangle count scenes, and ironically consuming less power processing denser geometry (presumably due to more efficient utilisation/saturation of the GPU).

He said they couldn't sustain 2 GHz. With variable clocks they can reach higher with low GPU utilization, but the one fact that you can infer from his statement is that the clocks are definitely going to drop below 2 GHz. So a 2% drop being the worst case is completely unreasonable (plus, IIRC, that's also not what he said).

The real question is for how long and how often that happens, as well as which kind of workload triggers it. Unfortunately, he hasn't answered any of that or given even a small blip of actual information about it.

The weirdness comes from utilisation. They wouldn't be able to sustain a fixed 2 GHz in all situations in case of high utilisation - true. But most high utilisation cases come from low performance - simple things like the GoW map screen with unlocked framerate. A fixed 2 GHz wouldn't be able to handle the increased power there. But in a variable rate system it could lower the clocks to accommodate. The developer doesn't actually need that high frequency at that point so there is no impact to gameplay.

I think most normal utilisation for 'efficient' code was estimated to be below 50%.
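On the unlocked map screen case mentioned above: a software frame cap is the other way to keep a trivial scene from spinning at hundreds of frames per second. Below is a minimal sketch in Python of how such a cap can work. It is an illustration only, not how any console SDK or engine actually does it, and `sleep_needed`, `run_capped`, and the `render` callback are hypothetical names.

```python
import time


def sleep_needed(frame_start, now, target_fps):
    """Seconds left in this frame's time budget (0 if the frame ran long)."""
    budget = 1.0 / target_fps
    return max(0.0, budget - (now - frame_start))


def run_capped(render, frames, target_fps=30.0,
               clock=time.perf_counter, sleep=time.sleep):
    """Call render() `frames` times, idling after each frame instead of
    immediately starting the next one."""
    for _ in range(frames):
        start = clock()
        render()
        sleep(sleep_needed(start, clock(), target_fps))


if __name__ == "__main__":
    # A "map screen" whose render step is nearly free: uncapped it would spin
    # as fast as the hardware allows; capped, it mostly idles.
    began = time.perf_counter()
    run_capped(lambda: None, frames=10, target_fps=50.0)
    print(f"10 frames took {time.perf_counter() - began:.2f}s")
```

The `clock` and `sleep` parameters are only there so the loop can be exercised without real waiting. Real engines typically pace frames against vsync or a platform timer rather than a plain sleep.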

Yeah, and the important thing people need to realize is that these worst case scenarios are usually not areas where a game is even running out of performance. These kinds of "power virus" loads, as AMD has described them in the past, are usually caused by very simple scenes that start running at hundreds of frames per second. The example Cerny uses is a simple map screen. On PCs, the FurMark application was notorious for being able to set video cards on fire until GPUs added thermal protection routines to defend against that kind of process. So it doesn't matter if the clockspeed drops under 2 GHz in such a scenario, as you aren't losing any meaningful game performance, just saving wasted power consumption.

It'd be great if this could get threadmarked - it's a great and simple explanation of one of the primary reasons and benefits of variable clocks. The downclocks will nearly always be these phantom loads - Sony will have profiled all their games and have clear estimations of utilisation across CPU/GPU for PS4 - and extrapolated that for PS5 (allowing for parallel RT in a CU, and increasingly efficient engines). And they still expect the majority of the time to be at max clocks.

It's counterintuitive maybe - high clocks/high fans when low 'load' - and combined with the usual interpretation of 'boost clocks' coming from PC GPU world I can completely understand this method being a difficult one to wrap your head around.

But this paragraph above is a concise and excellent distillation of things.

edit: Fuck - Liabe Brave post is a thing of beauty too. Honestly Cerny should have described it like that.

Question to resident experts/devs.

I'm assuming right now there's some kind of "power overdrawn" warning in PS5's debug tools. Was there anything similar in previous tools, or did the devs just measure unit temperature (that accumulated and was, one assumes, a bit delayed from the actual troublesome code) without full understanding of the cause?
