
PS5 and next Xbox launch speculation - Post E3 2018

- Thread starter Phoenix Splash



So what do y'all think will be in for both consoles CPU-wise? Will they use a modified Zen or will Zen 2 make the cut?

If 2019, Zen.

If 2020 (my guess), Zen 2.

I suspect it will at the very least be Zen+ based. However, as was stated in the post quoted by the OP, there is evidence that the PS5, at least, will support an instruction set not supported by any current version of the Zen architecture. That could mean Zen 2 or even Zen 3, but it's going to be a semi-custom part, so it could very well be Zen+ with an expanded instruction set and other features.

The actual performance difference between Zen+ and Zen 2 will probably come mostly from the new node; IPC improvements will probably be no more than 10-15%. And the Xbox One had a roughly 10% CPU clock-speed advantage this generation, and almost no one seemed to notice or care. Either Zen+ or Zen 2 would represent a huge leap over Jaguar.

TSMC's 7nm+ is expected to have better yields than 7nm (due to decreased use of multi-patterning and increased use of EUV lithography), so there is a fair chance that, if the consoles release in 2020, they will be produced on that node. Both Sony and Microsoft used TSMC this generation.


Zen anything will be a major upgrade over what we have now with the Jaguars. Of all the things I wish for, I hope and pray that the systems are balanced this time. No more Superman GPUs paired with Steve Urkel CPUs.

It's going to be Zen 2 either way.

And yet we had more 60fps titles than in the CPU-beefed previous generation.

This. Zen 2 is just 'Zen but on 7nm' from what I know, and there's no way the node is anything other than 7nm.

Zen 3.

There is no information regarding Zen 2 and its changes.

If AMD pumps up the data paths to support single-cycle AVX instructions, then Zen 2 will be way faster in such cases.

The choice for 60fps games is entirely up to the developer.

Phil: "If you look at the Xbox stuff we are doing right now like variable framerate... I think framerate is an area where consoles can do more just in general. You look at the balance between CPU and GPU in today's consoles they are a little bit out of whack compared to what's on the PC side and I think there is work that we can do there."

That's from Phil Spencer. But maybe you know more than him...

I don't know better than Spencer, but, as you said in your first sentence, 60fps is a developer choice. A beefier CPU isn't going to give more benefits if the game design doesn't match.

It's also worth noting that devs had to adapt to multicore CPUs last gen, so those machines weren't being used to their fullest, especially if you were using a middleware engine without much customisation.


I hope that's the case. Currently all the confirmation I heard was the node shrink, but actual additional features would be great.

Well, theoretically each Zen core can perform four times as many single-precision floating-point operations per clock as a Jaguar core. In actual use the performance difference isn't quite that extreme, but it's still large. And the Zen cores in a next-gen console would likely be clocked faster than the Jaguar cores in the Xbox One X.

Let's compare the theoretical single-precision floating-point operations per second of the CPUs (not GPUs):

| System | CPU peak single-precision GFLOPS |
|---|---|
| Xbox | 2.9 |
| PlayStation 2 | 6.2 |
| Nintendo Switch | 65.3 |
| Xbox 360 | 76.8 |
| PlayStation 4 | 102.4 |
| Xbox One | 112.0 |
| PlayStation 4 Pro | 136.3 |
| Xbox One X | 147.2 |
| PlayStation 3 | 204.8 |
| Zhongshan Subor Z | 384.0 |
| Hypothetical next-gen 8-core Zen @ 3.0 GHz | 768.0 |
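The table values look like the usual theoretical-peak formula: cores × clock × single-precision FLOPs per core per clock. A minimal Python sketch, assuming 8 FLOPs per clock for a Jaguar core and 32 for a Zen core (the 4× ratio mentioned above) and assuming the PS4 CPU runs at 1.6 GHz; these inputs are chosen to reproduce the table, not taken from official documentation:

```python
def peak_gflops(cores: int, clock_ghz: float, flops_per_clock: int) -> float:
    """Theoretical peak single-precision GFLOPS: cores x GHz x FLOPs per core per clock."""
    return cores * clock_ghz * flops_per_clock

# Assumed per-core single-precision FLOPs per clock (Zen = 4x Jaguar, as stated above).
JAGUAR_FLOPS_PER_CLOCK = 8
ZEN_FLOPS_PER_CLOCK = 32

ps4 = peak_gflops(8, 1.6, JAGUAR_FLOPS_PER_CLOCK)            # assumed: 8 Jaguar cores @ 1.6 GHz
xbox_one_x = peak_gflops(8, 2.3, JAGUAR_FLOPS_PER_CLOCK)     # 8 Jaguar cores @ 2.3 GHz
hypothetical_zen = peak_gflops(8, 3.0, ZEN_FLOPS_PER_CLOCK)  # hypothetical 8-core Zen @ 3.0 GHz

for name, value in [("PS4", ps4), ("Xbox One X", xbox_one_x),
                    ("8-core Zen @ 3.0 GHz", hypothetical_zen)]:
    print(f"{name}: {value:.1f} GFLOPS")
```

Peak figures like these ignore memory stalls, branch mispredictions and how well the code is vectorized, which is one reason real-world gaps end up smaller than the theoretical 4×.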

Per Digital Foundry, a Raven Ridge APU (4 Zen cores, 8 threads, 3GHz, 14nm) can deliver 60+ FPS in CPU-limited scenarios where the PS4 Pro (8 cores, 8 threads, 2.1 GHz, 16nm) can't maintain a solid 30 FPS.

It's worth remembering that the Jaguar microarchitecture was fundamentally different from Piledriver or Steamroller (AMD's desktop CPUs at the time), whereas all the Ryzen Mobile APUs released so far are identical to the Raven Ridge desktop APUs (like the Ryzen 5 2400G), just with different clocks and different features or parts of the chip disabled. Piledriver and Steamroller cores could perform three times as many single-precision floating-point operations per clock as a Jaguar core (or three-quarters as many as Zen).

Thanks. I think 2.2 GHz is the maximum we can expect from next gen consoles simply because of power consumption. I am expecting a 2x increase of CPU resources in real world applications going from Jaguars to Zen cores.

Notice that the board supporting the AMD SoC developed for China, with a Zen CPU and a Vega GPU at about 4 TF, has the required number of GDDR5 chips for 8 GB and almost no support chips (everything is in the SoC), except for a large number of buck regulators (the grey square things), with most inductors/transformers having three driver chips. That's way more than in any PS4. I suspect multiple voltages, with each of the 4 Zen cores individually powered so that they can be powered off as required. There appear to be enough for the Vega GPU to be powered on and off with multiple voltages, and perhaps portions of the GPU CUs in blocks. In other words, this design places a premium on power savings, which increases the cost.

Sony has not had power savings as a priority, so a media console using a similar SoC design could be produced with less expense.

- Four Ryzen Cores at 3.0 GHz, with Simultaneous Multi Threading

- 24 CUs of Radeon Vega, at 1.3 GHz, for 4 TFLOPs compute

- 8GB of GDDR5 at 256 GB/s

- OS Option 1 (PC Mode): Windows 10

- OS Option 2 (Console Mode): Windows 10 with Z+ Custom Interface

- Low Power Modes supported, with 30W 'background download' power

- 4.9 liter body, built-in power supply, 'excellent' heat dissipation design

- 'Ultra-Mute' 33 dB at full horsepower

- Customizable appearance for unique designs

- 802.11ac WiFi, Bluetooth 4.1 (WiFi module unknown)

- Storage is supplied through a 128GB M.2 SSD and optional 1TB HDD

- Audio stack supports SPDIF

- HDMI 2.0 is supported, as well as VR, 4K60, and HDCP 1.4

- System has four USB 3.0 ports and two USB 2.0 ports
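The 4 TFLOPs and 256 GB/s figures in the spec list can be sanity-checked with back-of-the-envelope arithmetic. A minimal sketch, assuming each Vega compute unit has 64 shader lanes that each complete one fused multiply-add (counted as 2 FLOPs) per clock, and assuming a hypothetical 256-bit GDDR5 bus at 8 Gbps per pin; the bus width and data rate are not in the spec list and are only one combination that yields 256 GB/s:

```python
def gpu_tflops(compute_units: int, clock_ghz: float, lanes_per_cu: int = 64) -> float:
    """Peak FP32 TFLOPS, counting one fused multiply-add (2 FLOPs) per lane per clock."""
    return compute_units * lanes_per_cu * 2 * clock_ghz / 1000.0

def gddr_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bus width in bytes x per-pin data rate in Gbps."""
    return bus_width_bits / 8 * data_rate_gbps

print(f"GPU: {gpu_tflops(24, 1.3):.2f} TFLOPS")           # 24 CUs @ 1.3 GHz
print(f"Memory: {gddr_bandwidth_gbs(256, 8.0):.0f} GB/s")  # hypothetical 256-bit bus @ 8 Gbps
```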


Hey guys, I just found out about the price of the new Intel 660, so what do you say? Four lanes of PCIe in the PS5, or is there no way they would have predicted prices this low far enough in advance?

$200 for 1TB and $100 for 512GB are good prices. Since I'm sure they sell it at roughly 2.5x what it costs to make, I'm guessing the 1TB costs $75-$80 to make, maybe close to $100.

That could come down but I can't imagine the storage for next gen will take up more than $30-$40 per package. I could see the 1TB getting close in 2.5 years but idk. 512GB for sure tho.
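The cost estimate above is just the retail price divided by an assumed markup. A tiny sketch of that arithmetic; the 2.5× markup is the poster's guess, not a known figure, and `estimated_build_cost` is a hypothetical helper:

```python
def estimated_build_cost(retail_price: float, markup: float = 2.5) -> float:
    """Back out manufacturing cost, assuming retail price = cost x markup."""
    return retail_price / markup

for capacity, price in [("1TB", 200.0), ("512GB", 100.0)]:
    print(f"{capacity}: roughly ${estimated_build_cost(price):.0f} to make")
```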

I know it's arbitrary, but I'd think they'd at least match or surpass the Xbox One X's clock speed of 2.3GHz.

My guess is 12 cores at 2.5GHz.

Hmm. 4 blocks of 4 cores, each block with 1 core disabled for improved yield? 12 cores, 10 for games and 2 for the OS, should be enough of a split? Worst case you'd have an 8/4 split, so still more cores for games. What are we at currently on Jaguar, 6 cores for games, with one disabled for yield and one for the OS?

I'm expecting more like a 40-60GB cache (about $12-15 per console), not a full-fat disk.

3 blocks with 4 cores on one chip, not 4 chips with 4 cores each.

Currently we have 8 Jaguar cores: 6 are exclusive to games, and the 7th core is also available to games, at least 50-80% of it on the Xbox One.

There are no numbers on how Sony does the sharing, but they also allow the 7th core to be used for games.

One core is always exclusive to the OS/System.

There are 8 physical cores on the chip and they are always active, there is no redundancy.

I think 8 at 3GHz is more likely, and cheaper than 12 at 2.5GHz.

I'd accept that!

But the Zen mobile chips (2500U/2700U), which are 14nm, have a TDP of 15W for their 4 cores and 8 threads at around 3.6-3.8GHz.

Someone explain to me why 8 cores/16 threads at 3GHz or above wouldn't be possible on Zen 2's 7nm process in the next-gen consoles.

I'm pretty sure this is what it's going to be. 3.0GHz seems to be a sweet spot for heat/power consumption on Zen, and 8 physical cores will make BC easier and be about the same size as the current Jaguars.

That's their boost frequency you're quoting, which is most likely throttled after a short period of time. Their base frequencies are 2.0 and 2.2GHz respectively.


We can't, the same as you can't tell us it will be possible. We're speculating and really it boils down to how cautiously you want to extrapolate from current data*.

*And that data includes tighter power constraints coming in for consoles next year.


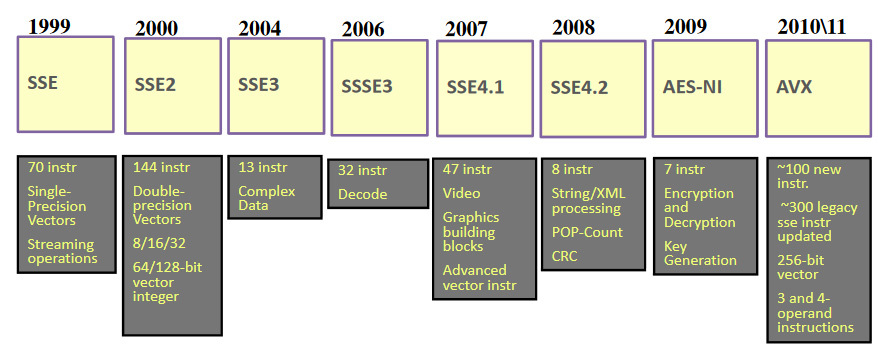

You mean single-cycle AVX2 (i.e. AVX256), as even Jaguar supports AVX128 in a single cycle and, I think, double-pumps for AVX2...?

Their max TDP is 15W, which is what they'd be at on boost, so it makes more sense to base it on that. They can't be hitting anywhere near that on base clocks.

It is in their interest to release the consoles in late 2020 or in 2021. So idk.


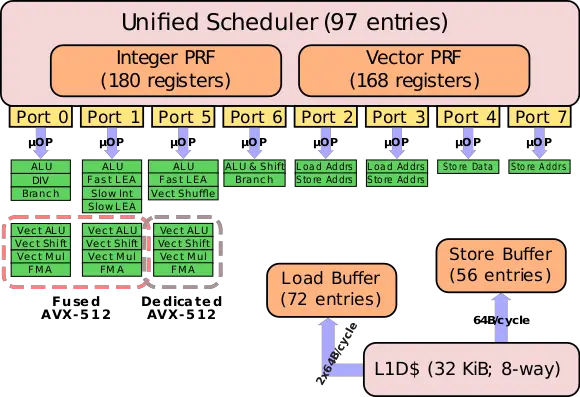

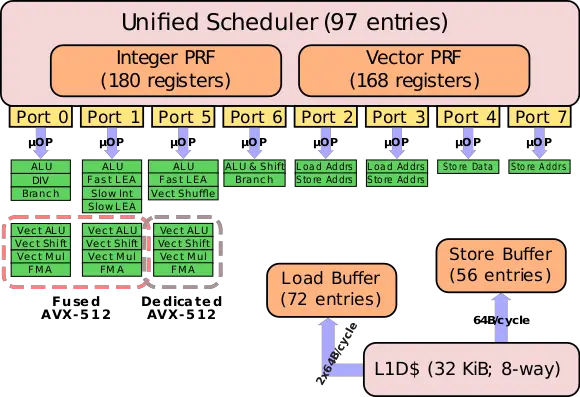

I think the question for Zen 2 is if single cycle AVX512 will be supported.

AVX1 already supports 256-bit instructions, but there is also a 128-bit mode; Jaguar supports AVX1 in both modes.

You can either execute 128-bit AVX1 instructions each cycle or one 256-bit AVX1 instruction across two cycles.

Jaguar doesn't support AVX2; for AMD, that extension first came with Excavator.

And Zen also only has 128-bit pipes, so no AMD architecture that supports AVX can so far execute 256-bit instructions in one cycle.

For AVX512 (previously called AVX3.x) there is another extension (AVX-512VL) which allows for 128/256-bit operations, so I believe it should be possible to support AVX512 even with 128-bit pipes.

Server Skylake, for example, can issue two 512-bit AVX512 instructions per cycle, but there is only one native 512-bit pipe; the other 512-bit instruction comes from ganging two 256-bit pipes together:

https://en.wikichip.org/wiki/intel/microarchitectures/skylake_(server)

So Server Skylake can do 2x512-bit but executes it internally as 2x256 + 1x512.

Cannon Lake was also doing 2x256-bit per cycle, if I remember correctly.
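The pipe-width discussion boils down to simple arithmetic: a vector op wider than the pipe gets split into pipe-width chunks, and narrow pipes can be ganged to act as one wide pipe. A deliberately simplified sketch; the helper names are hypothetical, and real throughput also depends on ports, latencies and instruction mix, which this ignores:

```python
import math

def cycles_per_op(op_bits: int, pipe_bits: int) -> int:
    """Passes one pipe needs for a single vector op when wide ops are split into pipe-width chunks."""
    return math.ceil(op_bits / pipe_bits)

def wide_ops_per_cycle(native_wide_pipes: int, narrow_pipes: int,
                       wide_bits: int, narrow_bits: int) -> int:
    """Wide ops issued per cycle when pairs (or groups) of narrow pipes are ganged into one wide pipe."""
    narrow_per_wide = wide_bits // narrow_bits
    return native_wide_pipes + narrow_pipes // narrow_per_wide

# A 256-bit AVX op on a 128-bit pipe (as described for Jaguar and Zen) takes two passes.
print(cycles_per_op(256, 128))  # 2
# Simplified Server Skylake model from the post: one native 512-bit pipe plus two ganged 256-bit pipes.
print(wide_ops_per_cycle(1, 2, 512, 256))  # 2
```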


Out of interest, Locuza, in your experience, how much are AVX instructions used in game development?

And what types of workloads are the vector instructions used to process?

The benefit of these much more powerful next-gen CPUs is that developers should be able to get good frame rates AND more complex world simulations going at the same time, while the already beefy GPUs continue to draw pretty pictures.

In my non-existent experience it doesn't look like very much.

The first game I know of that uses AVX1 is Codemasters' GRID 2.

Recently I heard that Doom, The Crew 2 and Project Cars 2 are using AVX1; according to a moderator, the last one gained 4-8% in speed overall:

http://forum.projectcarsgame.com/sh...d4e094c2b722&p=1352978&viewfull=1#post1352978

And Path of Exile used AVX1 to dramatically speed up particle calculations:

https://www.pathofexile.com/forum/view-thread/1715146

In order to get these gains, we used the special purpose AVX instructions which were introduced on CPUs since roughly 2011. AVX instructions allow you to apply the same set of mathematical operations on a larger set of data at the same time. For example, instead of calculating the velocity of one particle, we can calculate it on four particles at once with the same number of CPU instructions.

The actual particle subsystem by itself is roughly 4X faster using these instructions. For CPUs without AVX support, we also have an SSE2 implementation which is roughly 2X faster than before, which will still have a fairly significant end result on your frame rate.
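Plain Python can't issue AVX instructions, so the following is only a conceptual sketch of the "same operation on several particles at once" idea from the quote: it walks the data in groups of `lanes` particles and counts one "instruction" per group, plus one per leftover particle. Real code would use compiler intrinsics such as `_mm_add_ps` (SSE) or `_mm256_add_ps` (AVX), or rely on auto-vectorization; the `update_velocities` helper and the lane counts are hypothetical illustrations (4 or 8 32-bit floats per 128-bit or 256-bit register):

```python
def update_velocities(vel, acc, dt, lanes=4):
    """Apply v += a * dt, processing `lanes` particles per step the way a SIMD loop would.

    Returns the new velocities and how many 'instructions' were issued
    (one per full lane group, plus one per leftover scalar particle).
    """
    n = len(vel)
    out = list(vel)
    full = n - n % lanes  # particles covered by whole lane groups
    issued = 0
    for i in range(0, full, lanes):
        # One vector operation: the same multiply-add applied to `lanes` particles.
        for j in range(i, i + lanes):
            out[j] = vel[j] + acc[j] * dt
        issued += 1
    for j in range(full, n):
        # Scalar tail for particles that do not fill a whole group.
        out[j] = vel[j] + acc[j] * dt
        issued += 1
    return out, issued

vel = [0.0] * 10
acc = [2.0] * 10
_, scalar_ops = update_velocities(vel, acc, 0.5, lanes=1)
_, sse_ops = update_velocities(vel, acc, 0.5, lanes=4)  # 4 x 32-bit floats per 128-bit register
_, avx_ops = update_velocities(vel, acc, 0.5, lanes=8)  # 8 x 32-bit floats per 256-bit register
print(scalar_ops, sse_ops, avx_ops)  # 10 4 3
```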

Usage might be much more widespread in games that run PhysX 3.x on the CPU, which apparently uses AVX for cloth simulation if available.

The Havok physics middleware might do the same.

Publicly, there is little information about which extensions games are using and for what exactly.

There are some reasons why usage is so mediocre, even for older extensions like SSSE3 and SSE4.1/2.

SSSE3 isn't supported by AMD's Phenom CPUs, and several games in the recent past got patches to lower their min-spec for them.

Then there is SSE4.1, which arrived with Intel's 45nm Penryn generation, but it took AMD until Bulldozer and Jaguar to support SSE4.1 and SSE4.2.

SSE4.2 came with Intel's Nehalem processors.

Now for games it looks like SSSE3/SSE4.1/2 are establishing themselves as the recent baseline.

Resident Evil 7, Dishonored 2, Mafia 3, No Man's Sky and probably many more are using one or both extensions.

For AVX1 it looks like the penetration is accelerating.

But the reason usage is so limited is that consoles don't really benefit from it; throughput-wise, Jaguar gains nothing from it.

There is the three-operand advantage, but one game uses SSE4.1 on consoles and AVX1 on PC. I forget which one, but that choice might be tied to fetch/decode/instruction-mix/latency considerations?

And with AVX1 on PC you are starting to really chop off the low end of the spectrum.

No Conroe, Penryn, Nehalem, Westmere, Phenom or Bobcat support.

It really is a 2011/12 standard, but in part it's worse because Intel artificially crippled Celeron/Pentium CPUs and disabled AVX1 support.
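One common way to use newer extensions without cutting off older CPUs (as in the Path of Exile quote, with an AVX path plus an SSE2 fallback) is runtime dispatch: read the CPU's feature flags once and pick the best implementation. A minimal sketch; the kernel names are hypothetical, the flag strings follow the names Linux reports in `/proc/cpuinfo`, and real engines typically query CPUID directly instead:

```python
from pathlib import Path

# Hypothetical implementations, ordered from most to least preferred.
KERNELS_BY_PREFERENCE = [
    ("avx2", "particles_avx2"),
    ("avx", "particles_avx"),
    ("sse4_1", "particles_sse41"),
    ("ssse3", "particles_ssse3"),
]
BASELINE_KERNEL = "particles_sse2"  # SSE2 is guaranteed on every x86-64 CPU

def pick_kernel(cpu_flags: set[str]) -> str:
    """Return the most capable implementation the CPU supports, else the SSE2 baseline."""
    for flag, kernel in KERNELS_BY_PREFERENCE:
        if flag in cpu_flags:
            return kernel
    return BASELINE_KERNEL

def read_linux_cpu_flags(path: str = "/proc/cpuinfo") -> set[str]:
    """Read feature flags from the first 'flags' line of /proc/cpuinfo (x86 Linux only)."""
    cpuinfo = Path(path)
    if not cpuinfo.exists():
        return set()
    for line in cpuinfo.read_text().splitlines():
        if line.startswith("flags"):
            return set(line.split(":", 1)[1].split())
    return set()

phenom_like = {"sse2", "sse3", "sse4a"}                            # no SSSE3, no AVX
jaguar_like = {"sse2", "sse3", "ssse3", "sse4_1", "sse4_2", "avx"}
print(pick_kernel(phenom_like))  # particles_sse2
print(pick_kernel(jaguar_like))  # particles_avx
```

The cost of this approach is maintaining several code paths, which is part of why the lowest common denominator is often the default.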

AVX2 and FMA3 came with Haswell, Piledriver (FMA3) and Excavator (AVX2).

They're not supported by consoles; it's a 2013/14 story, and as far as I know no game uses them.

But the next generation of console CPUs can mandate a new standard if there are advantages.

Like AVX1/2 and FMA3, if AMD upgraded their pipes for single-cycle 256-bit execution.

Also, AVX512 is a nice standard even if you only support it with 128/256-bit SIMDs: the number of vector registers is doubled, from the current 16 to 32.

There are also some nice functions coming with the standard, but AVX512 is split into multiple extensions and not everything needs to be supported.

As for Zen 2/3, it will be really interesting to see what AMD does on that side.
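The register-width and register-count differences between the SSE family, AVX and AVX-512 translate directly into how many values one instruction touches. A small sketch; the widths and the 64-bit-mode register counts (16 for SSE and AVX, 32 for AVX-512) are standard x86 figures, but the table deliberately ignores the new instructions and encodings each extension also brought:

```python
# name: (register width in bits, vector registers available in 64-bit mode)
EXTENSIONS = {
    "SSE family": (128, 16),
    "AVX": (256, 16),
    "AVX-512": (512, 32),
}

def lanes(width_bits: int, element_bits: int) -> int:
    """How many elements of a given size fit in one vector register."""
    return width_bits // element_bits

for name, (width, registers) in EXTENSIONS.items():
    print(f"{name}: {lanes(width, 32)} floats or {lanes(width, 64)} doubles per register, "
          f"{registers} registers")
```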

Apologies, I was getting mixed up with the 2200G & 2400G and their 65W draw.

Does anyone know what the power splits are between the CPU and GPU on the PS4, Pro, XB1 and XBX?

Not sure if this helps or confuses things even more, but from the AnandTech Ryzen 2400G review:

https://www.anandtech.com/show/12425/marrying-vega-and-zen-the-amd-ryzen-5-2400g-review/13

I don't think we'll ever get these sorts of figures, but if I were to take a wild guess, I would say the 8 Jaguar cores in the original PS4/Xbox One used ~25W at 28nm? Probably way out, though.


Thanks for the very detailed response. I appreciate it.

I'm curious as to the differences between the vector extensions, i.e. SSE vs AVX. Are the principal differences in SIMD width and numbers of vector registers?

So am I right to say that the main concern for developers in exploiting vector extensions is compatibility over performance? In an age where games are so expensive that they basically need to run on everything to be profitable (enough), the patchwork of supported vector extensions makes broad use by developers less likely, leaving mostly middleware providers like Havok to leverage them, since they're the ones with a direct financial incentive to optimize their codebases around features supported by the major hardware platforms.


I might guess it was closer to 20W, but I think you are about right. Folks need to be careful trying to guess power consumption from the TDP of AMD's APUs.

For one, there are no set rules for calculating Thermal Design Power. Once upon a time AMD's TDP actually reflected peak power consumption, but Intel's did not, and AMD did not want their chips to look power hungry. TDP is now just the manufacturer's way of saying you need about this much cooling. It can be heavily influenced by how they expect a particular part to be used. They might give a part intended for a thin-and-light notebook a lower TDP because they figure it will rarely be used for processor-intensive work, and just set it to throttle its speed if the chip heats up.

Secondly, the Ryzen Mobile chips you're looking at have integrated Vega GPUs. In the case of those with Vega 10, the Vega 10 at 1.3 GHz will draw more power than the Zen cores.

That said, if 7nm cuts power consumption by 50%, 8 Zen cores at ~3.2 GHz should draw about the same amount of power as the 8 Jaguar cores in the PS4.


Zen 2 or Zen 3.

Zen 1/Zen+ are 14nm/12nm, so I think they are out of the question.

TSMC's 7nm is expected to get a 60%+ power reduction or a 40% increase in performance (compared to their 16FF+). 8 Zen cores at 3GHz-ish is what I believe we'll be getting: a significant improvement with, maybe, some power savings.


Odds are neither Sony nor Microsoft will use the process nodes AMD uses for its own chips anyway.

Look at the PS4 Pro, PS4 Slim, Xbox One S, and Xbox One X: they all use one of TSMC's "16nm" variants, not AMD's 14nm or 28nm. AMD only ever released Jaguar on 28nm.

These APUs are all semi-custom parts. All of them have to have their own individual design, so it's entirely up to Microsoft and Sony what process they want to use. They could keep Jaguar on 7nm if they really want, even though there has never been a 7nm Jaguar part.

The changes from extension to extension are always different: sometimes there are new functions, sometimes the SIMD width or the number of available registers increases, and sometimes changes were made for more efficient handling of the operations themselves.

For a rough overview up to AVX1, see page 6 of:

https://www.polyhedron.com/web_images/intel/productbriefs/3a_SIMD.pdf

And yes, as so often, the main concern for developers is the lowest common denominator.

It's not attractive to spend your time and energy on a small(er) subset of your potential customers.
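The lowest-common-denominator problem can be pictured as a set intersection over the CPUs a game must support. A sketch using simplified, illustrative feature sets for CPUs mentioned in this thread (only the extensions discussed here are listed, so these are not complete flag lists, and the `common_baseline` helper is hypothetical):

```python
# Simplified feature sets: only the extensions discussed in this thread.
TARGET_CPUS = {
    "Phenom II": {"sse2", "sse3", "sse4a"},
    "Jaguar (PS4/Xbox One)": {"sse2", "sse3", "ssse3", "sse4_1", "sse4_2", "avx"},
    "Sandy Bridge Pentium (AVX disabled)": {"sse2", "sse3", "ssse3", "sse4_1", "sse4_2"},
    "Haswell Core i5": {"sse2", "sse3", "ssse3", "sse4_1", "sse4_2", "avx", "avx2", "fma"},
}

def common_baseline(cpus: dict[str, set[str]]) -> set[str]:
    """Extensions every target CPU supports: the 'lowest common denominator'."""
    return set.intersection(*cpus.values())

print(sorted(common_baseline(TARGET_CPUS)))  # ['sse2', 'sse3']

without_phenom = {name: flags for name, flags in TARGET_CPUS.items() if name != "Phenom II"}
print(sorted(common_baseline(without_phenom)))
```

Dropping the Phenom II from the target list adds SSSE3 and SSE4.1/4.2 to the common baseline, while the AVX-less Pentium still keeps AVX out of it.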



Bit off topic, though it does at least concern a potential new console - is there any way to figure out what the power or battery life improvements would be like in a Switch 1.5 that had a newly produced 7nm Tegra X1?


Well, TSMC claims that its 16nm process consumes 60% less power than the 20nm (which the Switch's Tegra X1 is built on). And they have claimed that their 7nm process consumes 60-65% less power than the 16nm. So the Tegra X1 would use about 1/6th the power. But I would assume that a Switch Slim or whatever would have a smaller battery, and the screen would still draw just as much power.

And screen brightness would have an even larger effect on battery life.
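The "about 1/6th" estimate comes from multiplying the remaining power fraction of each node step. A minimal sketch, taking TSMC's claimed reductions quoted above at face value (60% for 20nm to 16nm, 60-65% for 16nm to 7nm); it covers only the SoC, not the screen or anything else in the device, and `remaining_power` is a hypothetical helper:

```python
def remaining_power(*reductions: float) -> float:
    """Fraction of the original power left after applying successive per-node reductions."""
    fraction = 1.0
    for r in reductions:
        fraction *= (1.0 - r)
    return fraction

best = remaining_power(0.60, 0.65)   # 20nm -> 16nm (60% less), 16nm -> 7nm (65% less)
worst = remaining_power(0.60, 0.60)  # same, using the lower 60% figure for 7nm
print(f"{best:.2f} to {worst:.2f} of the 20nm SoC power")
```

That works out to roughly 14-16% of the original SoC power, close to the 1/6th figure.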

I wonder if they could maybe put an M.2 SSD access door in? So if a user wanted to add an SSD they could, rather than Sony including one by default and increasing the price. They already have the software in place to manage changing of HDDs. The existing HDD could then be treated as an external drive.


I don't necessarily see it having a smaller battery. They would need to keep it compatible with things like the dock and Joy-Con, so it would all need to be exactly the same size as the normal Switch, with the improvements being internal. Shrinking the battery might make it cheaper and lighter, but the console wouldn't be any smaller.

And if you're right about the improvements, then what I'd be more excited about is the ability of the chip to run games in Docked Mode while handheld, or do a Pro-style Boost Mode, or even both at the same time, along with Boost Mode while docked.

It would be easier to just have a plastic adapter that clips into old docks so they could use a physically smaller Switch 1.5.

I really think keeping a future Switch update the same size is not what they want. Besides lowering the final price, making it truly portable would be a major consideration. The size of the current dock port would not be a problem.

Nintendo WANT the Switch to be the successor to the 3DS. Both the cost and the form factor will be important to make that transition for those who haven't yet bought a current-model Switch.


If Nintendo was going to make a smaller Switch, they would not have made a dock and removable controllers that are precisely designed around the current size. When they did that they restricted themselves to the current size, and they were apparently OK with that. I suppose a plastic adapter for the dock could possibly work, but there's nothing that would fix the Joy-Con issue. They would have to make whole new, smaller Joy-Con, which would be uncomfortable (especially in single-use mode) and expensive, and would massively piss people off when they found that their pricey controllers weren't usable even on the Switch's revision, never mind the successor. Imagine if Sony had made all DS4s unusable on the Pro and you needed DS4.5 controllers instead. It would be so, so much easier to just use a 7nm chip and sell the device on its improved performance and battery life, and even have a pure tablet SKU for current Switch owners.

There is the possibility of a pure-handheld version that some people have brought up, but I doubt they'll make 'the Switch that doesn't switch'. I do see your point about making the Switch 'truly portable', but I'm not sure if that will matter so much. Just by being a handheld at all, the Switch is more portable than Sony or Nintendo's offerings, and it's selling gangbusters as is.

Guys, just a question regarding the Zen CPUs in both next-gen consoles, be it Zen or Zen 2. How much more efficiently do you think they could run Rockstar's RAGE engine and the Euphoria and Bullet middleware on next-gen consoles? Are we finally going to get a stable and solid framerate in a new GTA?

And for those of you with Zen setups on PC, how stable is GTA V at max graphics? Are you able to run GTA V at 60fps with a GPU on par with what we think may be in a PS5? Are the limitations in GTA V CPU- or GPU-bound?
