RE2 essentially caches textures up to whatever VRAM limit you set. If I'm not mistaken, setting it to High (1GB) already gives you the highest-quality textures available in the game. However, you may see more frequent texture streaming, as the engine is only given 1GB of dedicated VRAM for textures. You can set it as high as a whopping High (8GB), but even the High (3GB) setting I tested had no texture pop-in issues whatsoever. I think the engine also detects your GPU's VRAM internally and tries not to go over it regardless of your texture settings, to prevent stuttering.

Yeah, I'm not really sure what is going on in the RE2 remake. I've played with these insane texture settings and everything worked wonderfully, even though it should have been thrashing as hell. I think Dictator talked about this in his video on RE2; it's probably a false estimate by the game.

You are actually totally right and I think I'm going to add DMCV and RE7, just to see what's going on with the RE engine.
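To make the "caches textures up to the VRAM limit you set" idea concrete, here is a minimal sketch of a generic budgeted texture cache with least-recently-used (LRU) eviction. This is an assumption for illustration only, not how the RE Engine actually manages textures; `TextureCache`, `cache_request` and the texture sizes are hypothetical. A smaller budget means more evictions, and every miss is another stream-in.

```c
#include <assert.h>

#define MAX_TEXTURES 64

/* Hypothetical budgeted texture cache (not Capcom's implementation):
   textures stay resident until the budget would be exceeded, then the
   least recently used one is evicted. Every miss counts as a stream-in. */
typedef struct {
    int resident[MAX_TEXTURES];   /* 1 if texture i is currently in VRAM */
    long last_used[MAX_TEXTURES]; /* logical time of the last request */
    int used_mb;                  /* VRAM currently occupied, in MB */
    int budget_mb;                /* the user-selected texture budget */
    long clock;                   /* advances by one per request */
    int stream_ins;               /* misses that required an upload */
} TextureCache;

void cache_init(TextureCache *c, int budget_mb) {
    for (int i = 0; i < MAX_TEXTURES; i++) {
        c->resident[i] = 0;
        c->last_used[i] = 0;
    }
    c->used_mb = 0;
    c->budget_mb = budget_mb;
    c->clock = 0;
    c->stream_ins = 0;
}

/* Evicts the least recently used resident texture; returns 0 if none. */
static int evict_lru(TextureCache *c, const int *size_mb) {
    int victim = -1;
    for (int i = 0; i < MAX_TEXTURES; i++) {
        if (c->resident[i] &&
            (victim < 0 || c->last_used[i] < c->last_used[victim])) {
            victim = i;
        }
    }
    if (victim < 0) {
        return 0;
    }
    c->resident[victim] = 0;
    c->used_mb -= size_mb[victim];
    return 1;
}

/* Requests texture `tex`; size_mb[i] is the size of texture i in MB. */
void cache_request(TextureCache *c, int tex, const int *size_mb) {
    assert(tex >= 0 && tex < MAX_TEXTURES);
    assert(size_mb[tex] <= c->budget_mb);
    c->clock++;
    if (!c->resident[tex]) {
        /* Make room under the budget, then stream the texture in. */
        while (c->used_mb + size_mb[tex] > c->budget_mb) {
            if (!evict_lru(c, size_mb)) {
                break;
            }
        }
        c->resident[tex] = 1;
        c->used_mb += size_mb[tex];
        c->stream_ins++;
    }
    c->last_used[tex] = c->clock;
}
```

For example, cycling three times through 20 textures of 100 MB each, a 1024 MB budget holds only 10 of them, so LRU misses on every request, while a 3072 MB budget streams each texture in once and then only hits. That fits the idea that a bigger budget mainly reduces streaming rather than raising texture quality, though the real engine's policy is unknown here.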


PS5 Speculation |OT12| - Aw hell, Transistor's running this again?

- Thread starter Mecha Meister


- Status: Not open for further replies.


It's the best piece of information we have but it is possible it turns out to not be true.

Some of the achieved values are higher than the theoretical ones, so they can't refer to the same APU.

Wasn't it something about the theoretical values using 14 Gbps RAM while the achieved values were using faster than 14 Gbps RAM?

Yes, the theoretical values were all for Ariel, seemingly.

I am devastated that a bag of walnuts is not winning the poll. You can add walnuts to pancakes! You can add walnuts to almost anything!

Look upon my breakfast and be amazed!

On topic: what are the chances we get a PS5 that is 13 TF+?

Breakfast made in Dreams?

My point is that, unlike CPU usage, almost all GPU usage is scalable, and most of it scales with resolution. Whatever doesn't scale with resolution will still scale "by hand", whether it's SVOGI, particle simulations or voxel-based cloud systems. In the end, if we look at game engines through the ages, it's hard to think of a scenario in which a developer can't scale back GPU usage by lowering resolution and other graphical settings.

Knowing MS and how good they are with abstracting the hardware using a thick API layer, I think it's pretty safe to assume that XSX games will just run on the XSS and all developers really need to do is balance their graphical settings and QA the hell out of it. It's almost like while playing a game, someone replaced your 2080 with a 1660 so you are getting terrible performance. So you go into the settings menu in order to get things running well again. You drop resolution, you lower the SVOGI fidelity, you lower textures to free up VRAM, etc. and in the end, things are running well again.

Again, I'm arguing that non-scalable elements don't get designed into most games precisely because they are not scalable. Most elements are scalable strictly because the platform design dictates it, not because the elements are always going to be scalable. You're taking the fact that things are designed to be GPU-scalable as evidence that almost anything you want to run on the GPU will be.

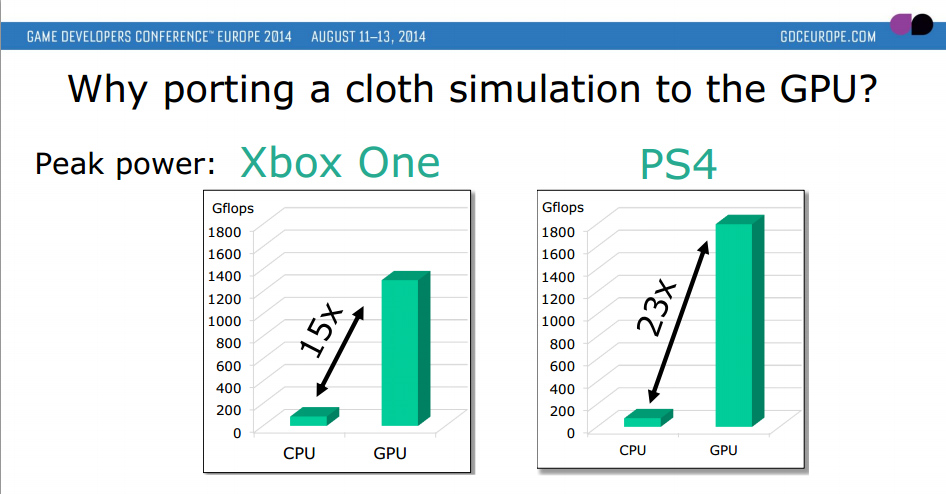

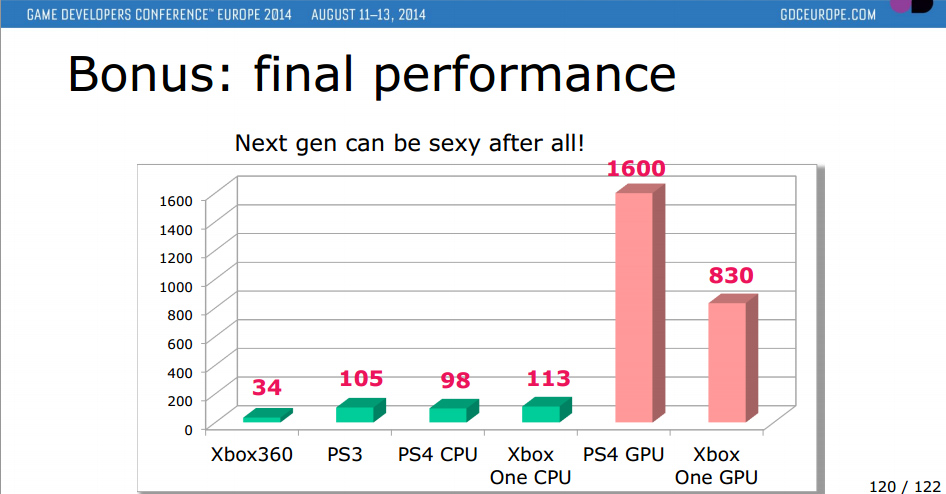

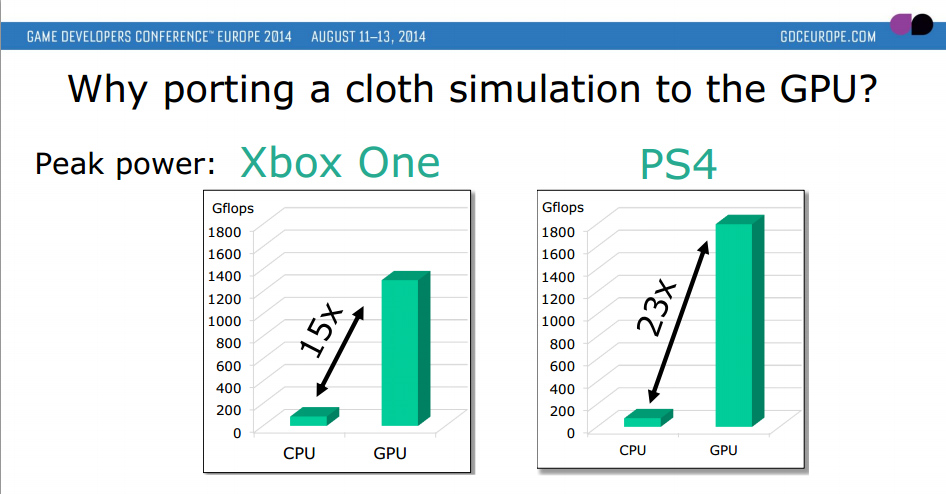

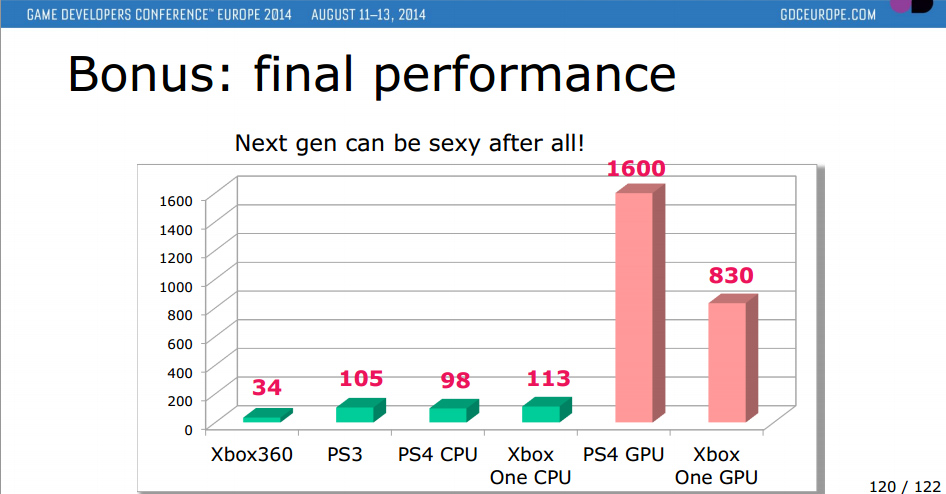

I think I'll just link this Ubisoft test on GPGPU cloth simulation:

Ubisoft GDC Presentation of PS4 & X1 GPU & CPU Performance

Ubisoft's GDC 2014 document provides insights into the performance of the Playstation 4 and Xbox One's CPU and GPU and GPGPU compute information from AMD and other sources.

www.redgamingtech.com

We can see there are distinct workloads that benefit from GPGPU, but this also means we cannot plan a gameplay mechanic around the higher level of GPGPU compute in this cloth simulation, the obvious reason being the vast gap in those workloads. Therefore any use of cloth simulation would have to NOT affect gameplay or design, and be relegated to being visual and scalable only.

The only reason these effects are, as you put it, sliders on a scale is because developers know up front they cannot rely on the higher levels of performance because of the vast disparity between systems and design their gameplay elements around the limitations of their lower baseline systems.

Boost mode for PS4 Pro-patched games? To push those resolutions to native 4K and the unlocked frame rates to 60fps... maybe?

Yeah, I'm gonna press the doubt button on Pro boost. This is 6.3x faster than the OG PS4 and 2.7x faster than PS4 Pro. This is also why it makes sense that it's probably PS5, because XSX is only 2.5x faster than X1X. They actually took a bigger jump than MS did. It's still a generational leap. They probably thought MS overcorrected with X1X.

As for max clock... doubt too. It wouldn't be a round number if they're testing the limits of a processor.

People can't forget power budget is a thing. PS4, PS4 Pro and Xbox One X all targeted a 150W TDP, so Sony probably thought that MS would do the same this time, but MS instead pushed the upper bound... PS5 is supposedly a 150W TDP design but is going to be hitting closer to its peak, like X1X did. MS went above that. Their APU alone will be drawing more power than the entire X1X did.

Like, no joke... the only Sony console that hit 200W was the PS3.

Xbox 100W, PS2 50W, Xbox 360 170W, PS4 135W, PS4 Pro 150W, Xbox One 115W, Xbox One X 170W

PS5 170W projected, Xbox Series X 200W+ projected

Remember when everyone thought over 10 TF was a fantasy? (Well, except me; I thought they could push 18-24 TF just to bring the hammer down because of 7nm.) There was a time when 8 TF was considered the ceiling. Go back and tell your old self that it was 9.2 TF and you'd be happy. MS just went above that, that's all. There's nothing to be sad about... the games are still going to look fantastic. Just look at what 1.8 TF GCN is doing now. Look at what 4.2 TF GCN is doing now. Imagine having a much better CPU and having raytracing *for free*. Imagine having Last of Us 2 but with realistic-looking hair *for free*.

People are just freaking out because one number is higher than the other.

This is kind of a circular argument, especially when we are talking about multiplatform games. You couldn't program use of GPGPU into your game, for obvious reasons, if you are designing for Xbox and PS4. So it would also stand to reason that we would see a significant difference in exclusive titles, which is what we do see. Titles like DriveClub used the extra power in various ways; we also saw Killzone Shadow Fall and Infamous, which used it to perform sound reflections. I think the point I'm making, even with Claybook, is that the extra performance doesn't "have" to be allocated to visuals so that it can scale down to a lower system. I do think the CPUs this time around are much better, but we also have 7 years to go with the hardware and whatever baseline we establish up front.

We went into the generation with a low baseline for CPU that really began to hurt towards the end of this generation; we will be starting a whole new 7-year period with a 4 TFLOP GPU baseline.

Edit: actually, as I'm thinking about it, we are essentially talking about an exact replay of how it worked last gen. Games were designed for the Xbox base system and scaled up, not the other way around. We already have an example in the current gen: with similar CPUs but different GPUs, most games simply get a resolution difference, maybe a frame rate increase and some added effects, and call it a day. We see this already with the Xbox One OG up to the X1X, which is 5x more powerful.

Lockhart is technically too powerful relative to the other consoles. If you make a game maximizing Lockhart at 1080p with zero GPGPU, you would be using dynamic resolution on Xbox Series X, because it wouldn't be able to handle the scene you made on Lockhart at 4K. XSX is 3x Lockhart. Don't forget that. You need 4x, since 4K has four times the pixels of 1080p.

This means that on an Xbox exclusive, you have a GPGPU budget of 1.3 TF. For a multiplatform game, you'd have a GPGPU budget of around 1.9 TF (assuming PS5 @ 9.2 TF); exceed that and the game cannot be ported to Lockhart. Now, last time I checked, dedicating nearly 25% of your GPU budget to GPGPU would be kinda nuts. Lockhart is not going to hold back PS5 or Xbox Series X. Full stop. If Lockhart is higher than 4 TF and closer to 4.8, which I think it might be... the numbers get even crazier: a 2.4 TF GPGPU budget for exclusives and a 3 TF budget for multiplatform. This assumes you are using the rest for shaders/RT in the most optimal way possible (which is why the multiplatform number is higher: XSX gets the IQ benefit and framerate stability with no extra bells and whistles).

I don't know about you but having 1.9-3TF budget for GPGPU usage sounds pretty darn exciting to me, considering we just came from a generation where all consoles are below that mark no matter what.

Edit: apparently I did my math like a lazy ass... 1.9 TF should be around 2.3 TF. Whoops.
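The budget figures above can be reproduced with a simple two-term model, which is my assumption rather than anything stated in the post: each GPU's throughput is split into shading work S that scales with pixel count and a fixed GPGPU budget G that must be identical on every console. With Lockhart at 1080p (S + G = Lockhart TF) and the bigger console at 4K (k·S + G = big TF, with k = 4), solving the pair gives G. The 4 TF and 4.8 TF Lockhart figures are the post's speculation, and `Budget`/`solve_budget` are hypothetical names.

```c
#include <assert.h>

/* Hypothetical two-term cost model: shading that scales with pixel count
   plus a fixed GPGPU budget that is the same on every console. */
typedef struct {
    double shading_1080p_tf; /* S: resolution-scaled work at 1080p */
    double gpgpu_tf;         /* G: work that does not scale with resolution */
} Budget;

/* Ratio of pixel counts between two resolutions (4K vs 1080p gives 4). */
double pixel_ratio(int w_hi, int h_hi, int w_lo, int h_lo) {
    return ((double)w_hi * h_hi) / ((double)w_lo * h_lo);
}

/* Lockhart at 1080p:  S + G = lockhart_tf
   Bigger GPU at 4K:   k*S + G = big_tf
   Subtracting the two: (k - 1) * S = big_tf - lockhart_tf. */
Budget solve_budget(double lockhart_tf, double big_tf, double k) {
    assert(k > 1.0 && big_tf > lockhart_tf);
    Budget b;
    b.shading_1080p_tf = (big_tf - lockhart_tf) / (k - 1.0);
    b.gpgpu_tf = lockhart_tf - b.shading_1080p_tf;
    return b;
}
```

Under these assumptions, `solve_budget(4.0, 12.0, 4.0)` gives G ≈ 1.33 TF and `solve_budget(4.0, 9.2, 4.0)` gives G ≈ 2.27 TF, the corrected ~2.3 TF and about 25% of 9.2 TF. With a 4.8 TF Lockhart the same function gives 2.4 TF against 12 TF and about 3.3 TF against 9.2 TF.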


Regarding RT and VRS, the files show a lot of tests, but you can't infer that anything not tested doesn't exist. The Sparkman and Arden files are very different and have nothing to do with the Ariel and Oberon files, so it's not like they are the same except that Sparkman has RT while Oberon doesn't. So as I see it, GitHub doesn't confirm or deny RT and VRS in Oberon or Ariel; it just doesn't refer to them.

Wasn't there a patent about that made by Cerny?

I don't believe in 36 CUs, or in designing your next gen around BC. And as for no RT and VRS, I still haven't read an explanation of that from the GitHub believers.

For people with knowledge on this: is there a specific constraint on the number of CUs per SE, besides being a multiple of 2? If I follow correctly, Oberon is 2 SEs with 20 CUs each and 2 deactivated per SE, and Arden is 2 SEs of 30 with 2 deactivated per SE?

So, with CUs deactivated through software, could it be 22, 24, 26, 28 or 30, or are there physical/hardware limitations?

Regarding the SEs, the junction setup in RDNA and GCN is highly complicated, and the junctions only converge after the SE level. We still have arguments about the subject, but our general assumption is that if you want to activate and deactivate parts of the GPU without having it shut down and reboot, the smallest part you can shut down in real time is a full SE. Now, it's always possible that Sony and AMD reworked the RDNA architecture to enable real-time shutdown of an individual SA (Shader Array), but we can't really assume it, because it's one hell of a customization job. So the assumption is: if Sony is limited to 18 CUs per SE and wants more than 36 CUs, they will have to add a third SE with its own 18 CUs, which results in 54 CUs.

Obviously all that is deduction work, we don't know RDNA well enough and we sure as hell don't know anything about RDNA 2.

That was the point: the real-world values of Oberon A0 and B0 are different from the theoretical ones, so basically the whole file looks like one big Ariel B0 file with some Oberon data sprinkled inside of it.

I think it's too simplistic for Era to swallow, the same place that still over-analyzes Cerny's words from April 2019 and tries to figure out if PS5 will have RT :)

Is it too simplistic to assume it's being addressed as an RDNA 1 GPU despite being a later generation?

Flute had a Zen 2 with 8 cores; everything in the Flute leak matches GitHub perfectly.

Was the CPU in Flute already Zen 2?

Could the actual platform (I forget the name) be refreshing only the CPU and keeping the GPU until RDNA 2 is available?

It was a dream to eat. But seriously, I made them because of the lack of walnut love in here.

Cloth simulation scales with vertex count, so once again it's a scalable GPU workload that might not scale with resolution but does scale with other parameters. As long as it's graphical in nature (just like the cloth simulation example you brought up), it doesn't matter; it will scale. XSS customers have to accept that they might get games with inferior graphics, which is a given when you buy a cheap SKU.


If something is gameplay related, in 99.99% of the cases it will be simulated on the CPU, not the GPU. That's exactly the reason why as long as CPU, SSD and RAM are comparable to the XSX (after some adjustments considering the XSS will run games at a lower resolution and settings), everything will be fine.
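To illustrate "scales with vertex count" on its own terms, here is a minimal sketch assuming a simple mass-spring cloth on a regular grid (a hypothetical setup, not Ubisoft's GDC test). Only structural springs are counted, and per-step work is assumed to be proportional to vertices plus springs; the function names are made up for illustration.

```c
#include <assert.h>

/* Hypothetical mass-spring cloth on a regular w x h vertex grid. Each
   vertex is joined to its right and lower neighbours by a structural
   spring; shear and bend springs are ignored to keep it simple. */
long grid_vertices(int w, int h) {
    assert(w > 1 && h > 1);
    return (long)w * h;
}

long structural_springs(int w, int h) {
    assert(w > 1 && h > 1);
    /* (w - 1) horizontal springs per row, (h - 1) vertical springs per column. */
    return (long)(w - 1) * h + (long)w * (h - 1);
}

/* Per-step work of an explicit solver, assumed proportional to springs + vertices. */
long step_work(int w, int h) {
    return grid_vertices(w, h) + structural_springs(w, h);
}
```

Going from a 64×64 cloth to a 32×32 one drops the vertex count from 4096 to 1024 and the structural springs from 8064 to 1984, so roughly a quarter of the per-step work. Whether that knob is usable depends on whether the cloth only affects visuals, which is the point being argued.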


I'll continue that with the leftovers of last night's dessert:

Date and walnut log. Goes well with ice cream, to the surprise of no one.


I'm definitely excited for a 12 TFLOP XSX, but I'm not a fan of the extra TFLOPS between XSS and XSX being used primarily for resolution. I'm definitely looking forward to seeing much better deformation and simulation in cloth, hair and foliage, plus general physics upgrades, and the leap will be phenomenal. Like I said, my only concern is a dev who would want to make heavy use of those simulation abilities for gameplay purposes and not having the GPU budget to do it.

Sorry I'm late everyone! I got hung up at the bus stop in an absolute downpour. I made some new friends, though! Say hi everyone!

Well, it's a simple night tonight. JamboGT and EsqBob, please step forward. For the crimes of failing to guess the PlayStation 5 reveal date, I hereby sentence you to the following avatars:

JamboGT, this avatar is courtesy of a certain Era staff member. I just couldn't say no when I saw it.

EsqBob, I think it's time to get yourself a new ride.....

Well, that's it folks. Like I said, easy night. Come back tomorrow for more fun. And now, I sleep.


Ah, a fellow user of culture, I see.

As a side note, I'm not sure if the full Rebirth tutorial on how it was produced has been posted yet, but it's fascinating considering they got it running in real time at 24fps in 4K on a 1080. I'm convinced we will get similar graphics from next gen.

Surprised to already see 6nm designs finalized

www.gizmochina.com


UNISOC's Tiger T7520 5G processor is the world's first 6nm EUV processor - Gizmochina

At a press conference held today, UNISOC announced its second-gen 5G chipset. The Tiger T7520 is its latest 5G SoC for mobile devices following the launch of the Tiger T7510 last year. This is also the first 6nm processor in the world. The T7520 is built on a 6nm EUV process and we are pretty …

Partially because it's really 7nm and is forward compatible with the upcoming 7nm+ variants.


Just some calculations for anyone interested; they don't reflect my thoughts on PS5's power.

2 × 64 × 1.8 × 48 = 11.06 TFLOPS

If we believe that the CU count has to be a multiple of 18 to maintain perfect BC:

2 × 64 × 1.8 × 54 = 12.44 TFLOPS

36 CUs @ 2GHz for 9.2 TFLOPS has been discussed to death, so no need for me to do the calculations there.

Feel free to make your own calculations. The 2 × 64 is 2 FLOPs per clock (one fused multiply-add) for each of the 64 shaders in a CU, 1.8 refers to the clock speed in GHz, and 48/54 refers to the CU count.
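The arithmetic above as a small helper, for anyone who wants to plug in their own numbers. It assumes, as the post does, 64 shader ALUs per CU and 2 FLOPs (one fused multiply-add) per ALU per clock; `peak_tflops` and `print_config` are hypothetical names, and the configurations in the checks are this thread's speculation, not confirmed specs.

```c
#include <assert.h>
#include <stdio.h>

/* Peak FP32 TFLOPS = 2 FLOPs per FMA x 64 ALUs per CU x CUs x clock (GHz) / 1000. */
double peak_tflops(int compute_units, double clock_ghz) {
    assert(compute_units > 0 && clock_ghz > 0.0);
    const double flops_per_fma = 2.0;
    const double alus_per_cu = 64.0;
    double gflops = flops_per_fma * alus_per_cu * compute_units * clock_ghz;
    return gflops / 1000.0;
}

/* Prints one configuration as a table row. */
void print_config(const char *label, int compute_units, double clock_ghz) {
    printf("%-24s %2d CUs @ %.3f GHz = %5.2f TFLOPS\n", label, compute_units,
           clock_ghz, peak_tflops(compute_units, clock_ghz));
}
```

For example, `print_config("48 CUs", 48, 1.8)` reports 11.06 TFLOPS, and 48 CUs at 1.85 GHz comes out at about 11.37 TFLOPS, which is where the 11.4 TF prediction later in the thread comes from. This is a peak figure only; it says nothing about achieved performance.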


Not quite. It's N7 with EUV layers, whereas N7+ isn't compatible.


I'm convinced this is how a Death Stranding 2 would look. Maybe even better.


I see we aren't going to make headway on this so I will just draw your attention to the bolded, explain the core difference between our arguments, and leave it at that:

I'm arguing because GPUs have wide variances in performance, gameplay elements are not run on the GPU.

You're arguing that gameplay elements are not run on the GPU, so there is no reason to worry about wide variances in performance.

While both are true, my proof is that when exclusives are made, gameplay elements are offloaded to the GPU, which happens in a uniform closed system, or in the case of the PS3, GPU elements are offloaded to the CPU. In fact, even in this generation we've seen things such as AI and physics offloaded to the GPU. It's not a rarity at all.

If viewing the XSS/XSX through the lens of multiplatform games, which are designed with wide variance in mind, then no, it wouldn't matter, as the game wouldn't be designed like that in the first place.

If viewed through the lens of platform-exclusive games, then a closed system without performance variance will make better use of its guaranteed resources than a game designed to scale up and down based on performance variances.

How prevalent we will see either of these scenarios is up for debate, maybe only one or two PS5 exclusives take advantage of the higher baseline for gameplay elements, maybe they all do, maybe none of them do, and I guess we won't know until the generation and games release.


If Capcom makes a new, next-gen-only MH, it's going to be really cool seeing what they can do with the higher specs/SSD.

How would this be for a hype moment...

PS5 reveal (PlayStation Meeting, pre-recorded, not live). Cerny presentation, developers talk, controller and console revealed.

11.4 TF.

Demon's Souls Remake. A new Insomniac Ratchet & Clank that looks almost as good as a Pixar film.

FFVIIR BC enhancements (native 4K, 60fps)

A few clips of Gran Turismo 7 with ray tracing.

Monster Hunter World and Iceborne BC enhancements

and finally, Capcom Presents

D E E P - D O W N - showing incredible flame physics and gameplay that visually far surpasses the 2013 trailer that ran on a 3TF GTX 680.

Of course.


So any 7nm design can just be ported to 6nm with (relatively) minimal effort?

More or less, yes. The main issue is that it supposedly won't be in volume until next year.


I'm sure they will; maybe we'll see it in 2022?

I've not seen any confirmation of SMT on them either, although I can't imagine they'll be sticking with "just" 8 physical cores. In fact, has a core count even been confirmed for both?

At this point, both consoles have RDNA2 plus HW raytracing. The questions that remain are the number of CUs and the RAM configuration. The rest is pretty well known. I think Microsoft may increase clock frequency as they did in the last generation.


Are we at all likely to see consoles using it? I assume that slims in 2023 would need a bigger improvement (from something like 5nm or even 3nm), so maybe the regular console manufacturing will just invisibly switch to 6nm after a while.

Sure. It could become more cost effective than 7nm due to reduced mask count.

Nvidia has been putting up some videos explaining ray tracing in a broader overview. They just put up a video about ray tracing hardware. If anyone remembers AMD's RT patent, it seems pretty similar to how Nvidia chose to go about it.

I am very surprised this wasn't posted yet:

www.techradar.com


The Last of Us developer talks up PS5 graphics for rendering hair (and hair gel)

Let there be lighting

It will never end.

I just want it to be over. 😔

More silly avatar bets, less of whatever these last couple of pages were.

My gun-to-my-head prediction, if 3 SEs each with 18 CUs isn't required for BC, is 48 CUs @ 1.85 GHz for 11.4 TF.


I don't believe Sony is going to build a chip as big as XSX's, which is massive. I do think they might clock higher given the rumblings about a robust cooling system. And this fits the idea both systems are really close in power.

They talked to Straley who left ND in late 2017. Makes sense because he's mostly talking about limitations on the older games rather than new capabilities.

At least for hair he is up to date. Hair and vegetation can improve a lot.

Excuse my ignorance, as I've missed the last sixty pages that have been posted in the last two days, but I read a post that said, according to AMD, 2GHz is a sustainable speed for RDNA 2. If that's the case, is there any reason to believe these GPUs in the Series X and PS5 WON'T run at 2GHz? I don't see why they wouldn't, if it doesn't appear to be a roadblock for the technology.


I found that last part extra funny given your avatar badge.

The logical definition of a Shader Engine is nearly a decade old already, starting even with VLIW4 GPUs:

SE stands for Shader Engine. It is something that has been part of AMD's graphics cards since second-gen GCN. Here is the Wikipedia entry:

" GCN 2nd generation introduced an entity called "Shader Engine" (SE). A Shader Engine comprises one geometry processor, up to 44 CUs (Hawaii chip), rasterizers, ROPs, and L1 cache."

The PS4 had one single SE with 18 CUs running at 800 MHz, while the PS4 Pro had two shader engines with 36 CUs running at 911 MHz. When the PS4 Pro was playing original PS4 games that had not received an enhanced patch, it disabled one of the SEs to emulate the actual hardware of the PS4 so that there wouldn't be any bugs or glitches.

For this generation (Navi, instead of GCN), AMD is still using shader engines (SEs), and at this point all of their graphics cards have either 1 SE or 2 SEs, but there are rumors that some of the upcoming big Navi GPUs will have 4 SEs. At this point we are pretty sure that Arden is the Series X GPU and that it uses 2 SEs with 56 CUs running at approximately 1.675 GHz. For Sony, the really old info on Ariel said it used 2 SEs with 36 CUs, and then the later backwards compatibility testing that was tied to PS5 also showed 2 SEs with 36 CUs at 800 MHz, 911 MHz, and 2.0 GHz. This shows that the PS5 will probably use the same method of backwards compatibility: running 1 SE with 18 CUs for PS4 games, running 2 SEs with 36 CUs for PS4 Pro games, and then maybe running 2 SEs with 36 CUs at 2.0 GHz for games that have an engine that can take advantage, or maybe for PS5 games.

We don't have any idea if that is what the final chip is, though, because that leak did not show RT and VRS, while it did show those things for Arden (the XSX GPU). Now that we know PS5 also has RT and VRS, the GitHub leak looks like it may have only been testing for BC, because it is missing final details. The thought, then, is that maybe PS5 has 3 SEs with 54 CUs, and that the GitHub data only showed backwards compatibility testing...
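The backwards-compatibility scheme described in the quoted post can be written as a small mode table. This is a sketch under that post's assumptions (whole SEs switched on or off, 18 CUs per SE, the clocks it lists); the `GpuMode` type and mode names are hypothetical. The reply below disputes the PS4's SE count, but the CU totals and TFLOPS don't depend on how the 18 CUs are split.

```c
#include <assert.h>

/* One GPU operating mode: active SEs, CUs per SE, and clock. */
typedef struct {
    const char *name;
    int active_se;
    int cus_per_se;
    double clock_ghz;
} GpuMode;

/* Modes as described in the quoted post (speculative, not confirmed). */
static const GpuMode kModes[] = {
    {"PS4 BC", 1, 18, 0.800},
    {"PS4 Pro BC", 2, 18, 0.911},
    {"GitHub native", 2, 18, 2.000},
};

int active_cus(int active_se, int cus_per_se) {
    return active_se * cus_per_se;
}

/* Peak FP32 TFLOPS: 2 FLOPs per FMA x 64 ALUs per CU x active CUs x GHz. */
double mode_tflops(const GpuMode *m) {
    return 2.0 * 64.0 * active_cus(m->active_se, m->cus_per_se) * m->clock_ghz / 1000.0;
}
```

The first two rows land on roughly 1.84 and 4.20 TFLOPS, matching the 1.8 TF and 4.2 TF GCN figures mentioned earlier in the thread, and `active_cus(3, 18)` gives the 54 CUs of the hypothetical 3-SE chip.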


https://github.com/torvalds/linux/blob/master/drivers/gpu/drm/radeon/ni.c

case CHIP_CAYMAN:

rdev->config.cayman.max_shader_engines = 2;

rdev->config.cayman.max_pipes_per_simd = 4;

rdev->config.cayman.max_tile_pipes = 8;

rdev->config.cayman.max_simds_per_se = 12;

rdev->config.cayman.max_backends_per_se = 4;

rdev->config.cayman.max_texture_channel_caches = 8;
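A minimal sketch of what those per-SE limits imply chip-wide. `CaymanLimits` is a hypothetical stand-in that mirrors only the fields quoted above; it is not the kernel's own struct. Multiplying the per-SE limits by the SE count gives 24 SIMD engines and 8 render backends, consistent with the full Cayman chip (Radeon HD 6970).

```c
#include <assert.h>

/* Hypothetical mirror of the fields quoted from ni.c; not the kernel type. */
typedef struct {
    int max_shader_engines;
    int max_pipes_per_simd;
    int max_tile_pipes;
    int max_simds_per_se;
    int max_backends_per_se;
    int max_texture_channel_caches;
} CaymanLimits;

static const CaymanLimits kCayman = {
    .max_shader_engines = 2,
    .max_pipes_per_simd = 4,
    .max_tile_pipes = 8,
    .max_simds_per_se = 12,
    .max_backends_per_se = 4,
    .max_texture_channel_caches = 8,
};

/* Chip-wide totals: per-SE limit multiplied by the number of SEs. */
int total_simds(const CaymanLimits *c) {
    return c->max_shader_engines * c->max_simds_per_se;
}

int total_backends(const CaymanLimits *c) {
    return c->max_shader_engines * c->max_backends_per_se;
}
```

The point is that "SE" was already the unit the driver describes the chip in: capacities are given per SE and multiplied out.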

Hawaii (GCN2) used 4 Shader-Engines (Up to 1 Geometry-Engine, Rasterizer, 1 Shader-Array with 11 Compute Units and 16 ROPs per SE).

PS4 and Xbox One 2 Shader Engines, PS4 Pro and Xbox One X use 4.

At runtime, AMD can switch shaders between Wave64 and Wave32, use WGP or CU mode, and reserve CUs or lock them out for a certain task.

I think the real question is whether AMD can power-gate one Shader Array per SE on RDNA 1/2, so it wouldn't burn extra power in BC mode.

Power and thermal envelope of a console is what will hold it back. You can only feed the chip so much juice and dissipate so much heat inside of a small console before it needs to throttle down.

Excuse my ignorance, as I've missed the last sixty pages that have been posted in the last two days, but I read a post that said, according to AMD, 2GHz is a sustainable speed for RDNA 2. If that's the case, is there any reason to believe these GPUs in the Series X and PS5 WON'T run at 2GHz? I don't see why they wouldn't, if it doesn't appear to be a roadblock for the technology.

This seems to be why the Series X is shaped the way it is: to have more volume and better cooling potential.

Also, the big chip in the consoles combines the GPU cores and the CPU cores in one big-ass chip. You can't run both at their desktop counterparts' max speeds in this configuration.

That makes sense for the way we traditionally thought about GPU speeds, but I keep hearing that 2GHz is not that crazy for RDNA 2 specifically. Is that misinformation, and would 2GHz still be a hurdle for RDNA 2 GPUs?

One thing I've been thinking about: if Arden is a hypothetical GPU with 56 active CUs out of 60 in total, taking up 350 mm² of die space, then a GPU with 48 active CUs out of 52 in total would take around 300 mm² if we scale linearly. Wasn't there a rumour a while back that said XSX was 350 mm² and PS5 was 300 mm²? Perhaps it was referring only to the GPU?

I don't believe Sony is going to build a chip as big as XSX's, which is massive. I do think they might clock higher given the rumblings about a robust cooling system. And this fits the idea that both systems are really close in power.

I wonder how much it really matters. You can undervolt because you're massively underclocked, and those SAs are sitting idle, drawing a reduced quiescent current at worst. Clock gating would be the next best thing and is probably easy to achieve thanks to the buffer trees anyway.

Look, Matt said it isn't reliable. This little community went in circles on that stupid little leak for far too long, even after it was called out.

If you're still choosing to believe in that leak, good for you, but clear your plate for the crow.

I swear, if we really do get a pair of largely identical 12TF $499 consoles, it'll be a good thing VallenValiant is banned. He'd choke to death on crow.

SE stands for Shader Engine. It has been part of AMD's graphics cards since second-generation GCN. Here is the Wikipedia entry:

"GCN 2nd generation introduced an entity called "Shader Engine" (SE). A Shader Engine comprises one geometry processor, up to 44 CUs (Hawaii chip), rasterizers, ROPs, and L1 cache."

The PS4 had one single SE with 18 CUs running at 800 MHz, while the PS4 Pro had two shader engines with 36 CUs running at 911 MHz. When the PS4 Pro played original PS4 games that had not received an enhanced patch, it disabled one of the SEs to emulate the actual PS4 hardware so that there wouldn't be any bugs or glitches.

For this generation (Navi instead of GCN), AMD is still using shader engines. So far, all of their graphics cards have either 1 or 2 SEs, but there are rumors that some of the upcoming big Navi GPUs will have 4. At this point we are pretty sure that Arden is the Series X GPU, and it uses 2 SEs with 56 CUs running at approximately 1.675 GHz. For Sony, the really old info on Ariel said it used 2 SEs with 36 CUs, and the later backwards compatibility testing tied to PS5 also showed 2 SEs with 36 CUs at 800 MHz, 911 MHz, and 2.0 GHz. This suggests that the PS5 will probably use the same method of backwards compatibility: 1 SE with 18 CUs for PS4 games, 2 SEs with 36 CUs for PS4 Pro games, and then maybe 2 SEs with 36 CUs at 2.0 GHz for games whose engine can take advantage of it, or for PS5 games.

We don't have any idea whether that is the final chip, though, because that leak did not show RT and VRS, while it did show those things for Arden (the XSX GPU). Now that we know PS5 also has RT and VRS, it looks like the GitHub leak may have only been BC testing, since it is missing final details. The thought, then, is that maybe PS5 has 3 SEs with 54 CUs, and the GitHub data only showed backwards compatibility testing.

Edit: a word

This post didn't get the attention it deserved, imo.

You are right: if they really set the clock at 0.8 or 0.911 GHz, having the rest idle should consume relatively little anyway.

Didn't see this posted, but IGN made a video podcast where multiple developers comment on what gamers can expect from both next-gen graphics and new gameplay possibilities. I felt there was quite a bit of interesting stuff in here, and I'm surprised I didn't see any discussion of it:

What Xbox’s 12 Teraflops ACTUALLY Do - Next-Gen Console Watch

Welcome back to Next-gen Console Watch 2020, our new show following all the news and rumors on the PlayStation 5 and Xbox Series X. Last week we got the full...
