Sorry if this has already been answered but I've got a couple of questions.

Does this currently support ultrawide resolutions (e.g. 3440x1440)?

I'd also like to know this. My main screen is ultrawide and I want to know if DLSS is an option.

Digital Foundry || Control vs DLSS: Can 540p Match 1080p Image Quality? Full Ray Tracing On RTX 2060?

- Thread starter ILikeFeet

It does support ultrawide.

Perfect, now hurry up Ampere!

I gotta say the results in person are even more convincing than the comparisons on YT.

Now I'm in this weird place where I wanted to upgrade my 1060 6GB to a 5700 XT, but now this thing makes a less powerful 2070 (not Super) way more sensible for future titles...

I game at 2560x1080, so keeping this res with DLSS, full ray tracing and good framerates seems a much better prospect than having more power overhead to run games "the old way" at native res but without RT. Hell, if need be one could also just enable DLSS with no RT and have stellar FPS on most games.

I know right now we only have a few titles running with this technology, but I can't see any reason why titles going forward will not support it. So, uhhh... I still can't make up my mind.

Honestly, unless you absolutely need a new card right now, I would wait for the RTX 3000 series, which is supposed to launch this year, last I heard. This close to the launch of a new generation, I always find it a bit mistimed to invest in "old" hardware.

I'm looking to build, if I game at 1080p 60Hz, would the 2060 last a while?

We don't know. It might run next-gen games well if you turn down settings. DLSS isn't promised for every title either.

Nvidia GeForce RTX 2070 Super vs. AMD Radeon RX 5700 XT: 2020 Update

After revisiting the battle between the current-gen $400 GPUs last week, two things were clear: many wanted an update between the 5700 XT and the more expensive...

www.google.com

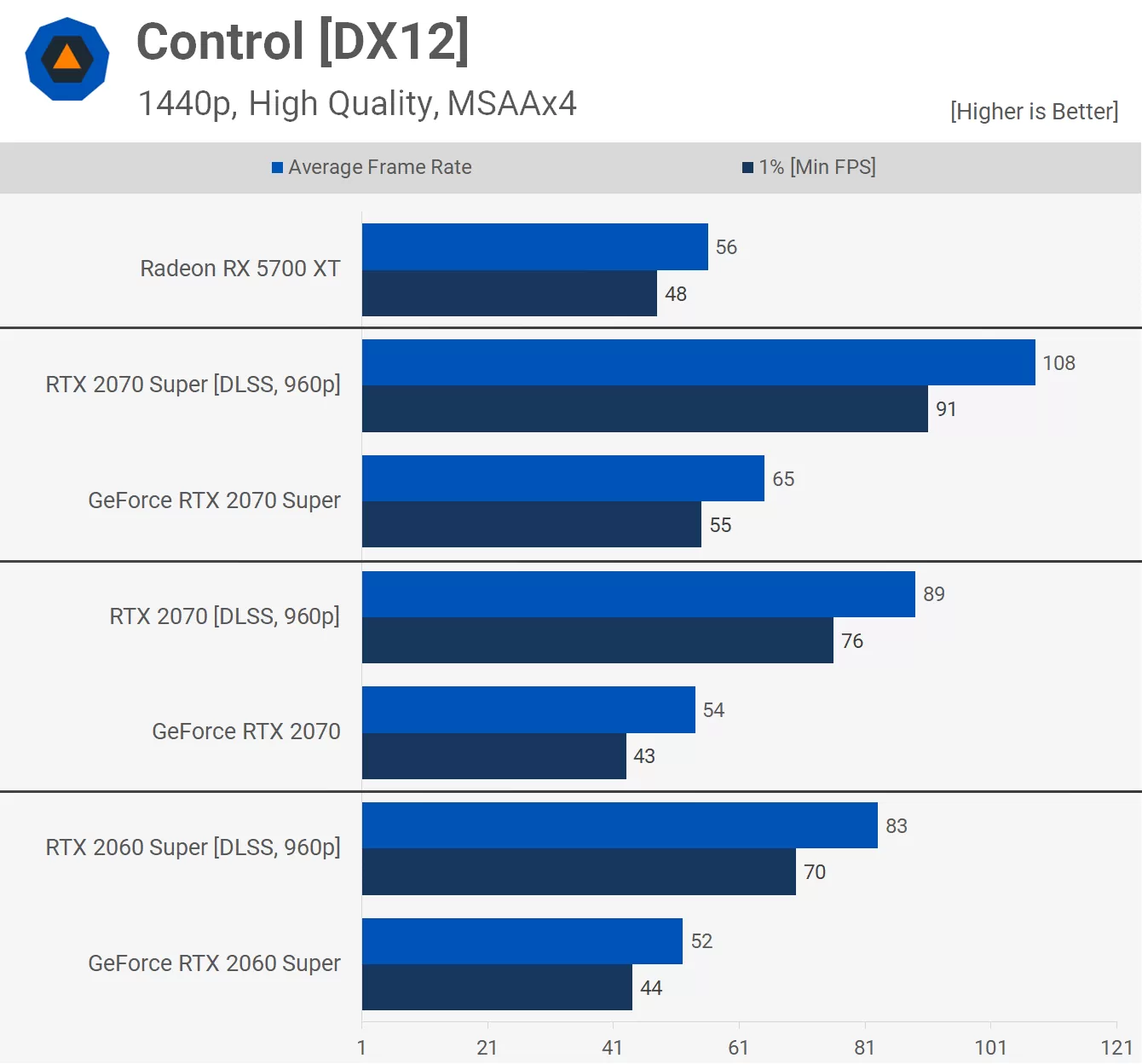

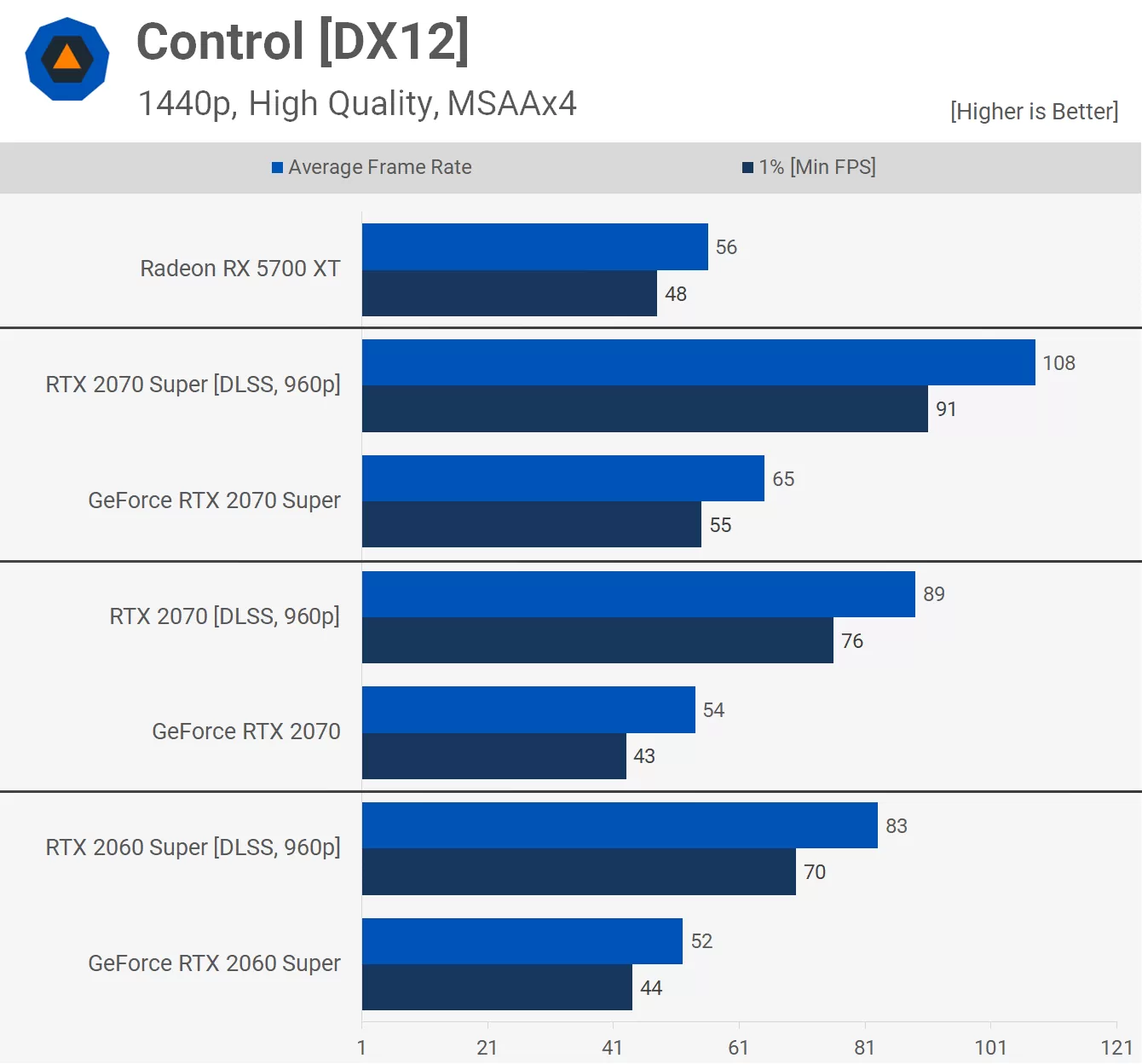

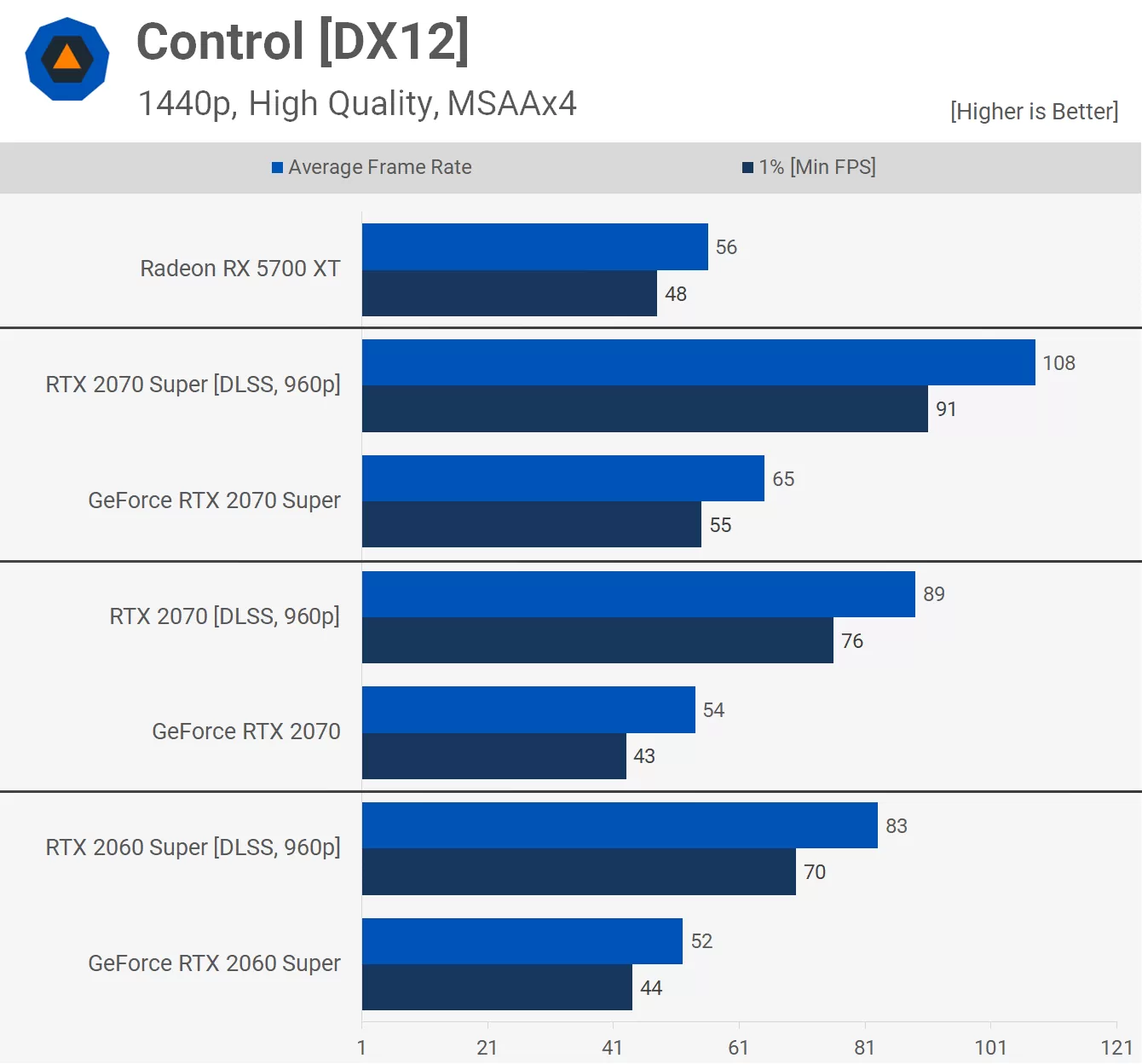

Pretty savage benchmark. RX 5700 XT usually trades blows with 2070S in general, but here DLSS 2.0 quality mode (with the least performance gain) makes the 2070S have nearly 2x the performance of AMD's best card, likely with a better final image too.

Yeah, DLSS is a game changer if more games start using it. Comparing a 5700 XT with a 2070S running 4K DLSS from a 1080p render, the 5700 XT fares about the same at 1440p native. The Super of course gets RTX, and honestly I will say the IQ is better. This is really only one game though, so not a huge deal. Control still looks great on the 5700 XT at 1440p native. Add in RIS and Trixx Boost at 85% scaling and there's not a huge difference from 4K DLSS, and the Radeon gets better fps. No RTX though, of course.

But yeah I'd definitely say if you're buying a GPU right now it probably only makes sense to get a 2070 super. If DLSS really takes off it adds a ton of future proofing.

It truly will be wild if Nintendo gets dlss for switch 2 and basically magically is able to match PS5 and xsx. 1080p native rendering getting near 4k results. I guess the tricky part might be CPU grunt.

announce Cyberpunk with DLSS and watch stores not be able to keep RTX cards in stock

That is almost certainly happening. They are already partnered with Nvidia, and DLSS is now generalized, making it easy for any partnered developer to add support, and Nvidia will absolutely push for it as the biggest game coming this year. I'd suspect FF7R being made in UE4 is going to give Nvidia an edge on getting DLSS into that game next spring.

Yeah I'd be surprised if it didn't have it given how close the collaboration seems to be.

I'd like to see it in Doom Eternal as an alternative to the TSSAA, would be a fun comparison.

Storage speeds would be an issue as well.

DLSS is amazing as is, but if a major player like Nintendo uses it, I can only imagine what kind of optimizations that would bring to the tech. It makes too much sense for Nintendo to not be at least intrigued.

Really cool. I used 1.9 on my 2080, but 2.0 gets rid of the artifacts that bothered me enough to drop back to native resolution in Control.

The main problem I see so far is that games aren't launching with DLSS, but having it patched in -- sometimes months later. Games (lots of games) really need to support DLSS 2.0 on day one for it to be a feature that will move a ton of cards.

Time for Nvidia to open up that war chest.

They don't really need to. You only need to pick up the big games. Adding DLSS to cyberpunk, ff7r, and maybe looking at popular games like GTAV and adding DLSS for that, I mean pushing 4K at 100+ FPS on midrange Nvidia cards next year in the most popular games that are already out, is enough to sell GPUs. Hell if cyberpunk is as big as we think it is going to be, that is a game that will sell GPUs this year and next.

Reviewers will need to talk about it of course, but if they are worried about giving the best recommendations, they will play up DLSS if it's part of the biggest games, and getting control last year and cyberpunk this year, with ff7r in spring, will create an expectation that DLSS matters.

Also a perfect set up for Nvidia to push RTX features on Nintendo and for Nintendo to happily agree to the 20% GPU size increase in a Switch 2.

How does this feature compare to AMD's sharpening tool or whatever

Radeon Image Sharpening is a post-process effect. It simply sharpens the image, and similar effects can be found on the Nvidia Shield TV and other Nvidia cards. It's a trick, because it isn't using 16K image data and reconstructing the image itself; it can only work with the data of whatever image it is trying to sharpen. Meaning if you are rendering at 540p, you can make that 540p look less blurry, but it doesn't know what a 1080p image is supposed to look like, or a 4K or 8K or 16K image for that matter. So it's still a 540p image with some good AA, but it isn't going to magically make a 540p image have the same detail as a 1080p image, or even more detail, like DLSS 2.0 is capable of doing.

So not really comparable at all. For some reason I thought it was their version of DLSS.

It gives the illusion of more detail, much like other AA techniques, and it was arguably better than older DLSS implementations. But DLSS 2.0 uses AI via tensor cores and comes out far ahead of 1.9. So last year it might have been something people compared, but one is recreating detail from a 16K image and the other is contrast adaptive sharpening applied as a post-process. The latter will only be able to sharpen detail already in the image, while DLSS can actually bring in detail from a 16K image that isn't present in the rendered image.

It's good AA though.

Yeah, it's pretty good. Just played a few hours of Control on my 5700 XT rig with RIS at 1440p. On my other rig, with 4K DLSS on a 2070 Super, sure, I'd say the RTX stuff is cool, but it's not really something I notice unless I'm actively looking for it. IQ-wise the 2070S with DLSS is a bit better I guess? It's nothing truly mind-blowing still imo. The game looks fucking awesome maxed out on a Radeon card too.

I'd also say HDR in MW is far more impressive than any of the RTX stuff. So yeah, imo, even having an RTX card for my TV setup, I don't really think it's at the point where DLSS or RTX is that big of a deal. I also have a feeling the console/AMD implementation of these features is going to be well behind Nvidia for quite a long time, so it's possible a lot of cross-platform stuff still won't really be doing much with this even years from now.

I wonder if FFVII Remake would get RT. I'd hope so, considering the delay.

Maybe they can do real time upscaling on some of those mid chapter textures, hahaha.

If the AI is recreating detail from a 16k image, do you think that would suggest that there is more room for AI to upscale an image further than the current 4x resolution max? Although maybe that is basically the original idea for DLSS 2x, but maybe it could be upgraded to go from 720p to 4k (9x increase), then down sample to 1080p for a much better image.

What some of the articles on RIS compared it to was that 1440p with RIS is close to an 1800p image, though there is some weird oversharpening going on, largely not at the edges. How well it comes across also depends on the size of the monitor and varies case by case. Generally it's worth having on.

You could also likely use Nvidia's sharpening tool alongside DLSS 2.0 to improve the image further if you like the look it provides. It is just a post-process effect, so it doesn't have the same performance cost you'd get from something like DLSS, which sits right between rendering and post-processing.

DLSS 2.0 is pretty groundbreaking. To take a 540p render, output 1080p, and have that 1080p look better than native is crazy. What's more, that is a 1:4 ratio of pixels, which can greatly increase the performance of the card.

I think HDR can be cool, and sure, it can pop and look really great, but real-time ray tracing (the RTX stuff) is technically far more impressive. One day, when everyone is using ray tracing on everything, we can appreciate how difficult it is right now, and the feature itself. I think it might take an exclusive game somewhere down the line. But yeah, the politics of gaming weigh heavily in the gaming community (not just Era), and a lot of serious tech sites are overly optimistic about AMD and keep downplaying RTX, which has led to some pretty crazy bias IMO.

I think in general AMD makes great cards at good prices, but they always seem to be following Nvidia's lead, and hopefully they can quickly catch up here in the RTX era. I like the XSX's ability to do ray tracing, but if it also had DLSS 2.0, it could actually push next gen into largely adopting ray tracing, since developers could focus on rendering at 1080p and outputting 4K while pushing ray tracing much harder than whatever they end up doing at 4K.
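For anyone who wants to check the pixel ratios being thrown around in this thread, here is a quick sketch. It assumes the usual 16:9 dimensions for each "p" label (960x540, 1280x720 and so on); the helper names are made up for illustration and have nothing to do with how DLSS itself is configured.

```python
# Pixel-count ratios between a render resolution and an output resolution.
# Assumption: standard 16:9 dimensions for each label.
RESOLUTIONS = {
    "540p": (960, 540),
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
}


def pixel_count(width: int, height: int) -> int:
    """Total number of pixels in one frame."""
    return width * height


def scale_factor(render: tuple, output: tuple) -> float:
    """How many output pixels exist per rendered pixel."""
    return pixel_count(*output) / pixel_count(*render)


if __name__ == "__main__":
    pairs = [("540p", "1080p"), ("720p", "1440p"), ("1080p", "4K"), ("720p", "4K")]
    for src, dst in pairs:
        factor = scale_factor(RESOLUTIONS[src], RESOLUTIONS[dst])
        print(f"{src} -> {dst}: {factor:.0f}x the pixels")
```

So 540p to 1080p is the 1:4 case, and 720p to 4K is the 9x jump asked about above.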

There is a video a few pages back that explores 72p to recreate a 1440p? resolution. That is just absolutely nuts, and it actually starts looking good as early as 288p? It actually gets easier to do DLSS with higher render and output targets; since it is analyzing a 16K image, rendering 4K and outputting 8K would actually be far easier for the card.

So the answer to your question is in the video: they just changed an .ini file in Control and were able to get the settings to render lower. You can give 720p to 4K a shot if you want. You might like the look, and the performance gains get ridiculous at that point too.

DLSS or RT being patched in later on is caused by tech and game development not being in lockstep. The tech is a bit behind in its release, so devs have to circle back.

Games should start shipping with DLSS and RT support day 1 going forward more and more.

Yes even in the currently shipping version, it's possible to reconstruct from lower initial resolutions. This is what Zomble is mentioning:

720p -> 4k would almost certainly work, but I haven't seen anyone test it. I don't know if it would be better than 720p -> 1440p DLSS, then traditional upscale to 4k, though. That's the kind of thing you'd need to test.

I should really test this kind of thing out on my husband's 2070S when he isn't using it. I wasn't sure if going for a custom resolution like in that video would still only upscale 4x or something. Maybe I'll try a custom 4K DSR resolution with an internal 720p resolution for DLSS, and compare 720p >> 1440p DLSS against 720p >> 2160p DLSS downsampled to 1440p to see what that does for image quality or performance.

The 512x288 part looks better than some Switch games, like Doom.

I think you have to edit ini files in the game - custom resolution on the desktop will still only have the default scaling modes up to that custom res.

z0m3le Has Nvidia stated the minimum amount of Tensor cores/Tensor performance required for DLSS2 to run in some form?

There is no such thing, as it would just increase execution time the fewer of them you have.

The tipping point is when the execution time for DLSS is longer than the time it would take to natively render the pixels anyway.

The less hw acceleration you get for DLSS, the longer it takes to run, which decreases how effective it is and how much performance it can make up.
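To put the tipping point in concrete terms, here is a rough sketch. It assumes a frame with DLSS costs the low-res render time plus a separate DLSS pass, and that those two simply add up. All the millisecond numbers are made up for illustration, not measurements from any card, and the function names are hypothetical.

```python
# Toy model of the DLSS tipping point.
# Assumption: frame time with DLSS = low-res render time + DLSS execution time.

def frame_time_with_dlss(low_res_render_ms: float, dlss_cost_ms: float) -> float:
    """Total frame time when rendering at low res and reconstructing with DLSS."""
    return low_res_render_ms + dlss_cost_ms


def dlss_is_worth_it(native_ms: float, low_res_render_ms: float, dlss_cost_ms: float) -> bool:
    """True while the DLSS path is still faster than rendering natively."""
    return frame_time_with_dlss(low_res_render_ms, dlss_cost_ms) < native_ms


def break_even_cost_ms(native_ms: float, low_res_render_ms: float) -> float:
    """Largest DLSS execution time before it stops paying off."""
    return native_ms - low_res_render_ms


if __name__ == "__main__":
    native_ms, low_ms = 16.0, 6.0  # hypothetical render times
    for cost in (1.5, 5.0, 12.0):  # hypothetical DLSS costs, fast to slow hardware
        verdict = "worth it" if dlss_is_worth_it(native_ms, low_ms, cost) else "not worth it"
        print(f"DLSS cost {cost:4.1f} ms -> {verdict}")
    print(f"Break-even DLSS cost: {break_even_cost_ms(native_ms, low_ms):.1f} ms")
```

Less tensor hardware pushes the DLSS cost up toward the break-even figure. Past it, you would be better off rendering natively.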

Ehh, it looks sharper, but a lot more artefactey. I think something like 640 x 360 would be a fun resolution to try though. the 512 x 288 one is getting there, but just needs a bit more to help cut down on shimmering and haloing somewhat.

*throws a rock*

Oi when are you doing a video on all this :P

lol on what?

Would be fun next time you're covering similar topics to see custom ini editing to do all sorts of non-standard resolutions!

Interesting to know. In that case we just need to find the crossover point where it's no longer a viable solution, though I imagine it'd vary on a per-game basis.

On the flipside, it cannot go faster than rendering the lower res natively, which we are basically hitting, correct?

he already did. Check their channel

So perhaps more tensor cores might mean that DLSS might keep getting better for high fps gaming? Benchmarks seem to show performance gains getting lower when fps was already high.

Would it also take up more time to make bigger jumps in resolution with DLSS? I think I saw 1080p >> 4k might have been more demanding than other options.
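One way to see why the gains could shrink when fps is already high: if the DLSS pass costs roughly the same number of milliseconds every frame, that fixed cost eats a bigger share of a short frame than a long one. The sketch below assumes exactly that, plus a low-res render that takes a fixed fraction of the native render time. Both constants are invented for illustration and are not benchmark data.

```python
# Toy model: fixed per-frame DLSS cost vs. native frame rate.
# Assumptions (hypothetical): low-res render takes 40% of the native render
# time, and the DLSS pass costs a flat 1.5 ms regardless of the scene.
LOW_RES_FRACTION = 0.4
DLSS_COST_MS = 1.5


def dlss_speedup(native_ms: float, low_res_fraction: float, dlss_ms: float) -> float:
    """Native frame time divided by frame time on the DLSS path."""
    dlss_frame_ms = native_ms * low_res_fraction + dlss_ms
    return native_ms / dlss_frame_ms


if __name__ == "__main__":
    for native_fps in (30, 60, 120, 240):
        native_ms = 1000.0 / native_fps
        gain = dlss_speedup(native_ms, LOW_RES_FRACTION, DLSS_COST_MS)
        print(f"{native_fps:>3} fps native -> {native_fps * gain:6.1f} fps with DLSS ({gain:.2f}x)")
```

Under these assumptions the multiplier keeps dropping as the native frame rate goes up. Faster tensor hardware would shrink the fixed cost and push that falloff further out.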

I have heard that ARM CPUs are improving by leaps and bounds. There's also the SSD speeds to consider, but I think the Switch 2 will have a basic SSD at least. I feel like, if third party games are built around 400MB/s PC SATA SSDs as their bare minimum requirement, the Switch 2 should be able to include a 256GB 1GB/s SSD and be ok.

This is the thread about that video lol, I was meaning more specifically the super-low res reconstructing up with custom file edits. Probably not worth a vid alone but a neat thing to cover.

Edit: Also using DSR to simulate DLSS 2x supersampling! Could be fun too.

Man, just think of the possibilities on lower-end hardware, such as a Tegra chip in a future Switch!

I do hope AMD tries to catch up to Nvidia on this type of software optimisation alongside the use of Tensor cores. I was one of the few people who said it would be great if Nvidia brought software to the table that used the Tensor cores, and man, they sure did.

DLSS 2.0 and RTX Voice are two such great software solutions for Tensor cores.

Honestly, I can't stress how amazing this is. I am playing the DLC with everything maxed at 1440p on my 2080, including ray tracing at max, and I rarely drop under 60 FPS. It may hit around 55 in really heavy scenes, but my gosh it looks amazing, and next gen to me.

Really, really impressed.

Phones already have UFS 3.0/3.1 chips that can do 2.1GB/s reads. I don't think it'll be an issue as long as Nintendo doesn't skimp on it.

As for CPUs, the latest ARM cores are quite competitive with Intel laptop cores as well as Zen 1. So I'm not too worried about those either, especially since devs aren't going to stop making games that can run on older PCs; there's too much money tied up to abandon that market.

Can't the next-generation Nvidia cards (30XX) use tensor cores that are more powerful and faster, since they are on a 7nm node and not 12nm? That could potentially make a 3060 perform better than a 2070 even if it has fewer tensor cores.

don't know if it's possible though

I'm not into tech, but this seems way more important than Ray tracing.

Why isn't anyone talking about it?