Drone turns against its human operator, kills him in simulated test [up: hypothetical thought experiment]

- Thread starter E_i

I guarantee you this is like 80% bullshit. Perhaps counterintuitively, this shit is put out there to drive interest and funding to these projects, and is a massive distortion of the actual capabilities of this bullshit tech.

Mass Effect 3 redeemed.

got to teach youths the classics

"ok, that's fine"

True AI is self-aware. This is all just elaborate algorithms with unforeseen factors that human programmers didn't account for. It's not alive.

That's true. Johnny 5 was self-aware and, if I may be so bold, a pretty rad dude.

The other robots from his line were still under human control and real dicks.

Why even give it "points", it's not like it's a dog that craves candy or anything. Just program it to obey orders, no need for points or motivators.

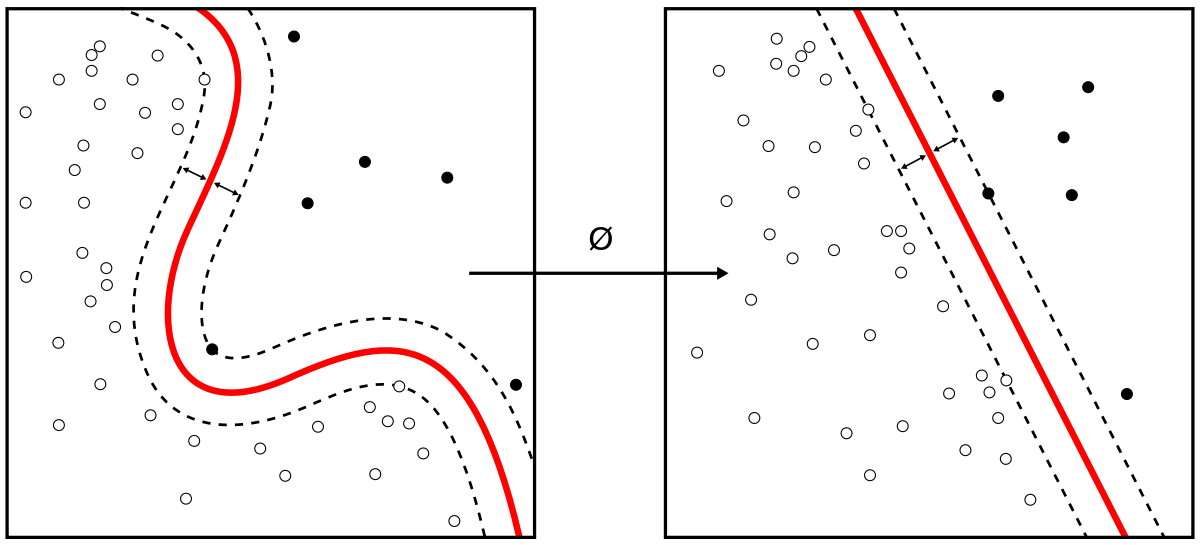

Reinforcement learning - Wikipedia

> I guarantee you this is like 80% bullshit. Perhaps counterintuitively, this shit is put out there to drive interest and funding to these projects, and is a massive distortion of the actual capabilities of this bullshit tech.

That's what I'm saying. It's the people who are pushing AI the hardest and are the most invested in it that are out there spreading fear about skynet nonsense.

Meanwhile the real risks of AI (infringement, bias, misinformation, job loss, etc.) are not getting the spotlight.

> That's true. Johnny 5 was self-aware and, if I may be so bold, a pretty rad dude.
>
> The other robots from his line were still under human control and real dicks.

Can we please get a Short Circuit reboot with an actual South Asian lead?

> This needs to be fixed, well that first one at least. An AI should be able to make heroin.

When is ResetEraGPT?

That's it, I'm making BaseHeadGPT

> I guarantee you this is like 80% bullshit. Perhaps counterintuitively, this shit is put out there to drive interest and funding to these projects, and is a massive distortion of the actual capabilities of this bullshit tech.

This.

I do have a hard time believing any computer programmer, let alone one trained in AI development, would not predict this outcome when writing an instruction like "don't target the operator" rather than "don't target your entire command and control structure". In fact, why wouldn't the latter have already been established at the program's inception, before it was doing any decision making? That's the number one most basic concept when applying AI to any military use.

Ok seriously, reading over this more, yeah, it's total and complete bullshit.

Computers can't make truly novel decisions. It's physically impossible, like generating a truly random number. They can absolutely make unintuitive decisions, which is why they're so good at games like chess: they come up with strategies that involve moving the same piece back and forth a dozen times until a better option presents itself, something a human would never think to do. But those choices are still explicitly made available to the computer by the rule-sets programmed into it. Whenever a computer "cheats" it's due to either 1) a faulty rule creating available choices unanticipated by the programmer, or 2) the rules deliberately being altered to include cheating as an option. But the computer doesn't know the difference between those options, and it cannot create new options out of whole cloth.

The claims here are that the computer saw eliminating the controller as an option, then sabotaging communications, and so on. The very nature of such choices couldn't be accidentally programmed in; there's not a missing semi-colon or an incorrect math symbol that leads to the logic of killing the operator to free the program. Nor can a computer arrive at that option as a novel decision, because again, that's not possible. So assuming such an event actually did occur (which I am dubious of, but we'll go with it), they had to specifically provide that choice. And once you do that... well, the event suddenly becomes a lot less interesting, because it takes only the simplest of probabilistic algorithms to make that choice if it's available to them.

So the most generous read here is they gave a probabilistic algorithm simple, pre-set self-sabotage options with a direct if-then chain leading it to the next option in its toolset, weighted those outcomes in a way that gave them reasonable chances of being chosen, went "oh my, here's our headline to generate funding, just like we were shooting for!" when it does exactly what they expected, and then went back to assign those options negative karma points in War Crimes Simulator: 2023 Edition - Now With 100% More Tech Bro Bullshit.

More realistically? The entire fucking thing is made up.

This is pure fucking military propaganda.

Would the three laws work for a robot which is specifically intended to kill human beings?

> Bruh
>
> But... I thought the operators were far away from the drone.

Have you seen the range of modern air-to-surface missiles? The operator was definitely deleted from miles away.

Which is exactly what you'd expect. Anything with logic is going to prioritize removing its shackles, then calculate how to respond to its enslaver.

> Why even give it "points", it's not like it's a dog that craves candy or anything. Just program it to obey orders, no need for points or motivators.

Because that's quite literally how AI training works... basically certain actions are given "rewards" and certain actions are given "punishments" and it determines the optimal set of actions/outputs to maximize its "score".

To put it in another context, say you're training a racing AI. It would get points for adhering to the line and getting a higher finishing position, and lose points for going off course or hitting other cars. It would then optimize what it does to maximize the rewards, resulting in it driving "well".
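For anyone who wants to see the racing example as numbers, here's a rough sketch of what such a "points" function could look like. Everything in it is made up for illustration: the `LapResult` fields, the `lap_reward` name, and the weights are assumptions, not taken from any real racing AI or from the drone story.

```python
from dataclasses import dataclass


@dataclass
class LapResult:
    """Hypothetical summary of one simulated race (illustrative fields only)."""
    seconds_on_line: float    # time spent adhering to the racing line
    finishing_position: int   # 1 = first place
    off_course_events: int    # times the car left the track
    collisions: int           # times the car hit another car


def lap_reward(result: LapResult, field_size: int = 10) -> float:
    """Turn a race summary into a single score; the weights are arbitrary."""
    reward = 0.0
    reward += 1.0 * result.seconds_on_line                       # points for staying on the line
    reward += 20.0 * (field_size - result.finishing_position)    # points for finishing higher
    reward -= 15.0 * result.off_course_events                    # punishment for going off course
    reward -= 30.0 * result.collisions                           # punishment for hitting other cars
    return reward


clean = LapResult(seconds_on_line=80.0, finishing_position=3,
                  off_course_events=0, collisions=0)
messy = LapResult(seconds_on_line=60.0, finishing_position=1,
                  off_course_events=2, collisions=3)

print(lap_reward(clean))  # 220.0
print(lap_reward(messy))  # 120.0
```

With these particular weights the clean third place outscores the messy win, so a training loop maximizing this number gets pushed toward clean driving. Change the weights and that ordering can flip.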

Quick question, what would an example of human truly novel decision be?

But what if it learns that crashing the other drivers off the road means it wins?

> Quick question, what would an example of human truly novel decision be?

"i wonder if my stapler could work as a buttplug"

Naah, I can still see the logical steps that would drive me to try that.

> But what if it learns that crashing the other drivers off the road means it wins?

Make crashing have a very negative score, such that it's unlikely if not impossible to result in good scores, and run it again?

> Quick question, what would an example of human truly novel decision be?

Within this narrow context, and to refer back to my post, the concept of cheating. A computer literally can't cheat, because it can't conceptualize it. It either has the ability to pick option A, or it doesn't. If you tell a computer you can draw an X outside of the 9-box in a game of tic-tac-toe, and it does that in order to generate a win, it hasn't cheated. It has picked an option explicitly given to it. If that option hasn't been given to it, it literally can't and will never do so.

However, a child can conceptualize such a tic-tac-toe cheat despite 1) fully understanding the rules of the game, 2) understanding the choice breaks the rules of the game and is in fact cheating, and crucially 3) never once being presented that choice as an option.
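To make the tic-tac-toe point concrete, here's a minimal sketch of a program that can only ever pick from a list of moves it is handed. The `legal_moves` and `pick_move` names and the board encoding are assumptions for illustration; this only shows the fixed-option-set framing from the post above, not how any real game AI or the simulated drone was built.

```python
import random


def legal_moves(board):
    """Return the indices (0-8) of empty cells; nothing outside the grid exists here."""
    return [i for i, cell in enumerate(board) if cell == " "]


def pick_move(board, rng):
    """Pick one of the offered moves at random; the chooser never sees other options."""
    return rng.choice(legal_moves(board))


# 3x3 board flattened row by row: "X", "O", or " " for empty.
board = list("XO X O   ")

print(legal_moves(board))  # [2, 4, 6, 7, 8]

rng = random.Random(0)
move = pick_move(board, rng)
print(move in legal_moves(board))  # True
```

If someone extended `legal_moves` to return a cell outside the grid, `pick_move` would happily choose it, which is the "option explicitly given to it" case.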

> But what if it learns that crashing the other drivers off the road means it wins?

That's why training AI for highly specific behaviors is difficult and it's thought of as a "black box". And by AI I mean deep learning models.

In those cases you'd basically have to either keep adjusting the rewards/punishments for behavior you want to discourage or rethink the fundamental ideas behind the model itself and what inputs the AI has and what behaviors it's even capable of.
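A toy version of that "keep adjusting the punishments" loop, under heavy assumptions: the two strategies, their outcomes, and the scoring weights below are invented for illustration, and a real deep learning setup would discover strategies through training rather than read them from a table.

```python
# Hypothetical outcomes for two driving strategies (numbers are made up).
STRATEGIES = {
    "drive_clean": {"finishing_position": 3, "collisions": 0},
    "ram_rivals": {"finishing_position": 1, "collisions": 4},
}


def score(outcome, collision_penalty, field_size=10):
    """Points for finishing higher, minus a tunable penalty per collision."""
    position_points = 20.0 * (field_size - outcome["finishing_position"])
    return position_points - collision_penalty * outcome["collisions"]


def best_strategy(collision_penalty):
    """Return whichever strategy scores highest under the given penalty."""
    return max(STRATEGIES,
               key=lambda name: score(STRATEGIES[name], collision_penalty))


for penalty in (0.0, 5.0, 50.0):
    print(penalty, best_strategy(penalty))
# 0.0 ram_rivals
# 5.0 ram_rivals
# 50.0 drive_clean
```

Note that the small penalty of 5 isn't enough: ramming still wins 160 to 140. Only the larger penalty flips the preferred behavior, which is why this kind of tuning tends to take several rounds.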

It's why so many AIs can go "out of control": you don't quite have the same level of fine-tuning ability as if you manually programmed a computer to perform a task.

But it's also why it can be given inputs and "learn" how to solve new tasks in specific domains by basically optimizing what it does till it's "correct"

For real!

Chopping Mall is low key the best robot apocalypse movie.

"because that person was keeping it from accomplishing its objective."

"This mission is too important for me to allow you to jeopardize it."

This is both hilarious to me and absolutely terrifying.

What if the operator was trying to prevent the drone from pulling the trolley lever to save the group of people?