We literally have Road to PS5 and Microsoft explaining how the Xbox Velocity Architecture works on top of Sampler Feedback Streaming, all talking about memory management. We do know.

Cool, you're a dev with practical hands-on experience then, awesome.

Evidence of the Series X's superior ray tracing?

- Thread starter AppleBlade



Are you suggesting that the XSX will randomly allocate things across the two pools of RAM without taking into account the bandwidth each task requires? We already have examples of this with the GTX 970.

The 970 is a poor example, since it was shown that performance dropped when it needed all 4GB of RAM.

I specifically mentioned the GTX 970 because it is exactly the same situation. It shows that tasks requiring less bandwidth can be allocated to the slower portion of RAM, and this is what we can expect to happen with the XSX: ray tracing can use the high-speed portion of RAM, while the OS sits in the slower portion.
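A minimal Python sketch of the allocation idea, assuming a simple greedy policy: the most bandwidth-hungry data (per GB) fills the fast pool first, and anything that no longer fits spills to the slow pool. The pool sizes use Microsoft's published Series X split (10GB at 560 GB/s, plus a 6GB pool at 336 GB/s of which 2.5GB is reserved for the OS); the resource names and demand figures are hypothetical, and this is not how the Xbox or any driver actually allocates memory.

```python
from dataclasses import dataclass

# Series X split as published by Microsoft: 10GB "GPU optimal" memory at 560 GB/s,
# and a 6GB standard pool at 336 GB/s, of which 2.5GB is reserved for the OS.
FAST_POOL_GB = 10.0
SLOW_POOL_GB = 3.5  # 6GB standard pool minus the 2.5GB OS reservation


@dataclass
class Resource:
    name: str
    size_gb: float
    bandwidth_demand: float  # hypothetical relative demand in GB/s


def place(resources: list[Resource]) -> dict[str, str]:
    """Greedy placement: resources with the highest bandwidth demand per GB go
    to the fast pool first; anything that no longer fits spills to the slow pool."""
    placement: dict[str, str] = {}
    fast_free, slow_free = FAST_POOL_GB, SLOW_POOL_GB
    for r in sorted(resources, key=lambda r: r.bandwidth_demand / r.size_gb, reverse=True):
        if r.size_gb <= fast_free:
            placement[r.name] = "fast"
            fast_free -= r.size_gb
        elif r.size_gb <= slow_free:
            placement[r.name] = "slow"
            slow_free -= r.size_gb
        else:
            raise MemoryError(f"{r.name} ({r.size_gb} GB) does not fit in either pool")
    return placement


if __name__ == "__main__":
    # Hypothetical frame: RT data and render targets are bandwidth-hungry, audio is not.
    example = [
        Resource("render_targets", 1.5, 250.0),
        Resource("bvh_and_rt_buffers", 2.0, 300.0),
        Resource("textures", 5.5, 150.0),
        Resource("game_logic", 2.0, 5.0),
        Resource("audio", 1.2, 2.0),
    ]
    for name, pool in place(example).items():
        print(f"{name}: {pool}")
```

With these made-up numbers, the render targets, RT buffers and textures land in the fast pool, while game logic and audio end up in the slow pool.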

The rumour is that RDNA2 ray-tracing performance is tied directly to the number of CUs. Didn't the Forza promo video have more complex RT reflections than the GT7 demo? Not quite a fair comparison, though, since GT7 showed what looked like actual gameplay.

Yep. That's how it works on Nvidia GPUs, so I'd expect the same to be true for RDNA2.

And I'm saying that it also showed that when it needed more than 3.5GB of RAM for a given task, it took an overall performance hit. It comes down to how much RAM developers have access to and how it can be used.

I see people saying this a lot, but didn't they show us this Gears demo running on the hardware all the way back in March? Is there something I'm missing about why this doesn't count?

It...doesn't have ray tracing so it doesn't count for showing off the Series X ray tracing?

I specifically mentioned the GTX 970 because it is exactly the same situation. It shows that tasks requiring less bandwidth can be allocated to the slower portion of RAM, and this is what we can expect to happen with the XSX: ray tracing can use the high-speed portion of RAM, while the OS sits in the slower portion.

That didn't happen on the 970, however. As soon as memory use went above 3.5GB, performance tanked to unplayable levels.

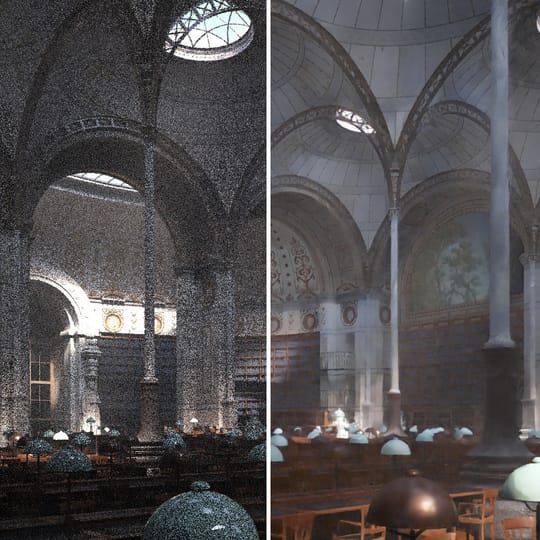

Pretty sure there is no game which uses machine learning for denoising at the moment, all use normal filtering methods.The XSX has more CU, ray tracing consumes a lot of memory bandwidth. The PS5 has 448GB/s and the XSX has 560 GB/s. Another big factor that we need to find out is if the PS5 has dedicated hardware to accelerate machine learning. If not, the denoising step should be more efficient on the XSX. Current ray tracing solutions don't shoot enough rays to cover the whole scene. You can see on the image below how it looks, with black spots where no rays reached. Machine learning is necessary to fill out the blank spaces.

It...doesn't have ray tracing so it doesn't count for showing off the Series X ray tracing?

The poster I was replying to was asking about games running on Series X, not specifically ray-tracing.

The X|S build of Gears 5 does have ray tracing; I'm not sure whether it was in the tech demo shown in March. I didn't have time to rewatch the video.

And I'm saying that it also showed that when it needed more than 3.5GB of RAM for a given task, it took an overall performance hit. It comes down to how much RAM developers have access to and how it can be used.

No, the full 4GB of RAM could be occupied. The problem was not filling up the RAM as such; problems only appeared in situations where a game needed more fast RAM than the 3.5GB available. On a closed system like the XSX, memory allocation can be even more efficient than on the GTX 970, since on PC, games are designed to run on hundreds of GPU models with different amounts of RAM. Let's also not derail the main subject here, which is to make clear that the XSX's additional bandwidth will help with ray tracing.

"Nvidia's driver automatically prioritises the faster RAM, only encroaching into the slower partition if it absolutely has to. And even then, the firm says that the driver intelligently allocates resources, only shunting low priority data into the slower area of RAM."

Nvidia GeForce GTX 970 Revisited

When the GTX 970 launched last year, the tech press - Digital Foundry included - were unanimous in their praise for Nvi…


Pretty sure there is no game that uses machine learning for denoising at the moment; they all use normal filtering methods.

You just ignored how the entire line of Nvidia RTX GPUs works.

This video makes a big point of the fact that it has no ray tracing, because the entire game was built around the old lighting system, and adding ray tracing would require redoing every level's lighting. But also, at that point they had only spent two weeks working on the Series X version, basically taking the PC version at Ultra settings and using some new screen-space effects from the newer version of UE4. In the PC version, they had cranked up the screen-space reflections and shadows all throughout the game.

That didn't happen on the 970, however. As soon as memory use went above 3.5GB, performance tanked to unplayable levels.

No, that is not correct. As I said, not all types of data/tasks require the same amount of bandwidth. Obviously, new games will require more and more RAM; that's just how things work. But the issue isn't filling up the RAM: we only see a drop in frame rate when the 3.5GB of fast RAM is full and isn't enough for all of the tasks that require high bandwidth.
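A rough Python model of why spilling hurts, using the GTX 970's segment bandwidths as published after the spec correction (196 GB/s for the 3.5GB segment, 28 GB/s for the 0.5GB segment). It assumes the two segments are accessed one after the other, so transfer times simply add; that is a simplification, but it shows how even a small share of high-bandwidth traffic landing in the slow segment drags down effective bandwidth.

```python
# GTX 970 segment bandwidths after the spec correction.
FAST_GBPS = 196.0  # 3.5GB segment
SLOW_GBPS = 28.0   # 0.5GB segment


def effective_bandwidth(slow_fraction: float) -> float:
    """Effective GB/s when `slow_fraction` of the bytes moved come from the slow segment.

    Simplifying assumption: the segments are accessed serially, so the time per GB
    is the traffic-weighted sum of each segment's time per GB."""
    if not 0.0 <= slow_fraction <= 1.0:
        raise ValueError("slow_fraction must be in [0, 1]")
    time_per_gb = (1.0 - slow_fraction) / FAST_GBPS + slow_fraction / SLOW_GBPS
    return 1.0 / time_per_gb


for share in (0.0, 0.05, 0.1, 0.25):
    print(f"{share:.0%} of traffic on slow segment -> {effective_bandwidth(share):.1f} GB/s")
```

In this model, putting 10% of the traffic on the slow segment cuts effective bandwidth from 196 GB/s to 122.5 GB/s, which is why the placement of bandwidth-heavy data matters more than how full the card is.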

"In conclusion, we went out of our way to break the GTX 970 and couldn't do so in a single card configuration without hitting compute or bandwidth limitations that hobble gaming performance to unplayable levels. We didn't notice any stutter in any of our more reasonable gaming tests that we didn't also see on the GTX 980, though any artefacts of this kind may not be quite as noticeable on the higher-end card - simply because it's faster. In short, we stand by our original review, and believe that the GTX 970 remains the best buy in the £250 category - in the here and now, at least."

Nvidia GeForce GTX 970 Revisited


While not all types of memory access require the same bandwidth, the 970 didn't manage memory access in a way that let the 0.5GB of lower-bandwidth memory be used effectively.

I owned a 970 and analyzed its performance. Whatever testing they say they did, it was trivial to bring a 970 to its knees.

I think the article is just saying it doesn't go to the 0.5GB of slow RAM unless it has to, which makes sense. But graphics need the bandwidth.

XSX devs will have to be careful not to exceed 10GB of "VRAM" allocation. Or maybe the OS will enforce this.
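If the OS or engine were to enforce the 10GB limit, one way to picture it is a budget tracker that refuses GPU-optimal allocations past the limit and makes the caller fall back. This is a hypothetical Python sketch, not an Xbox API: the class and method names are invented, and 10 GiB is used as an approximation of Microsoft's stated 10GB.

```python
GIB = 1024 ** 3


class FastPoolBudget:
    """Hypothetical tracker for the ~10GB GPU-optimal pool (not a real Xbox API)."""

    def __init__(self, budget_bytes: int = 10 * GIB) -> None:
        self.budget = budget_bytes
        self.used = 0
        self.allocations: dict[str, int] = {}

    def allocate(self, name: str, size_bytes: int) -> bool:
        """Reserve fast memory; return False if it would exceed the budget."""
        if name in self.allocations:
            raise KeyError(f"{name} is already allocated")
        if self.used + size_bytes > self.budget:
            return False  # caller must fall back to the slow pool, stream, or evict
        self.allocations[name] = size_bytes
        self.used += size_bytes
        return True

    def free(self, name: str) -> None:
        """Release a previous allocation."""
        self.used -= self.allocations.pop(name)


budget = FastPoolBudget()
print(budget.allocate("textures", 8 * GIB))    # fits
print(budget.allocate("rt_buffers", 3 * GIB))  # would exceed 10 GiB, refused
```

Refusing the allocation (rather than silently spilling) is the design choice here: it makes an over-budget asset visible during development instead of showing up later as a frame-rate drop.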

While not all types of memory access require the same bandwidth, the 970 didn't manage memory access in a way that let the 0.5GB of lower-bandwidth memory be used effectively.

I owned a 970 and analyzed its performance. Whatever testing they say they did, it was trivial to bring a 970 to its knees.

"Nvidia's driver automatically prioritises the faster RAM, only encroaching into the slower partition if it absolutely has to. And even then, the firm says that the driver intelligently allocates resources, only shunting low priority data into the slower area of RAM."

Nvidia GeForce GTX 970 Revisited


"In conclusion, we went out of our way to break the GTX 970 and couldn't do so in a single card configuration without hitting compute or bandwidth limitations that hobble gaming performance to unplayable levels. We didn't notice any stutter in any of our more reasonable gaming tests that we didn't also see on the GTX 980, though any artefacts of this kind may not be quite as noticeable on the higher-end card - simply because it's faster. In short, we stand by our original review, and believe that the GTX 970 remains the best buy in the £250 category - in the here and now, at least."

Nvidia GeForce GTX 970 Revisited


I shouldn't have to say anything more than this.

Denoising is not a hardware feature, it's software.

So far, games have used the traditional compute path for denoising (usually with separate denoisers for reflections, shadows, specular, diffuse, etc.).

Machine-learning denoisers exist in offline renderers and in the preview windows of offline renderers.
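For illustration, a minimal non-ML denoiser in Python: a box filter that fills pixels no ray reached (marked `None`) with the mean of the traced samples around them. This is a deliberately simple stand-in; real-time game denoisers are considerably more sophisticated (typically combining temporal accumulation with edge-aware spatial filtering), and the function name and image format here are hypothetical.

```python
from typing import List, Optional

Image = List[List[Optional[float]]]  # None = pixel that no ray reached this frame


def fill_and_blur(img: Image, radius: int = 1) -> List[List[float]]:
    """Box-filter denoise: each output pixel is the mean of the valid (traced)
    samples in its (2*radius+1) x (2*radius+1) neighbourhood."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w and img[yy][xx] is not None:
                        total += img[yy][xx]
                        count += 1
            # Pixels with no traced neighbours at all stay black.
            out[y][x] = total / count if count else 0.0
    return out


sparse = [[1.0, None, 1.0],
          [None, None, None],
          [1.0, None, 1.0]]
print(fill_and_blur(sparse))
```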

The 970's memory management was effectively mismanagement, then, because in real-world performance that upper RAM crippled the card. Doesn't bode well for Xbox Series... :/

You cannot ignore reality.

"But so far with this new information we have been unable to break the GTX 970, which means NVIDIA is likely on the right track and the GTX 970 should still be considered as great a card now as it was at launch. In which case what has ultimately changed today is not the GTX 970, but rather our perception of it."

GeForce GTX 970: Correcting The Specs & Exploring Memory Allocation

Denoising is not a hardware feature, it's software.

So far, games have used the traditional compute path for denoising (usually with separate denoisers for reflections, shadows, specular, diffuse, etc.).

Machine-learning denoisers exist in offline renderers and in the preview windows of offline renderers.

Thank you for the correction on this.

You cannot ignore reality.

"But so far with this new information we have been unable to break the GTX 970, which means NVIDIA is likely on the right track and the GTX 970 should still be considered as great a card now as it was at launch. In which case what has ultimately changed today is not the GTX 970, but rather our perception of it."

GeForce GTX 970: Correcting The Specs & Exploring Memory Allocation

www.anandtech.com

What the heck is this? Dude, I've tested this myself. Can you say the same? You just keep parroting vague reviews.

Will not reply again on this. I have first hand experience testing and observing this slowdown. I have nothing more to say.

It's actually a misinformed take (like so much around these consoles, especially the PS5), since ray-tracing cost scales roughly linearly with resolution. So there would actually be no difference in ray-tracing quality between the XSX and PS5 versions of games despite the performance delta, just a difference in resolution.

What the heck is this? Dude, I've tested this myself. Can you say the same? You just keep parroting vague reviews.

Will not reply again on this. I have first hand experience testing and observing this slowdown. I have nothing more to say.

You are not reading what I'm sharing with you, and the quotes I shared couldn't be more specific. I will say it again: filling up the 4GB GTX 970 is not the problem, but if a game requires more than the 3.5GB of high-speed RAM in the GTX 970, we will see a reduction in performance. This of course applies to basically any GPU out there: make a game use 2GB of RAM on a GPU that has 1GB and it will need to use system RAM. On the GTX 970, it will use the 0.5GB of slow RAM and then system RAM. And yes, I used to have four GTX 970s; now I have two. I have done my fair share of gaming on the 970.

No, the full 4GB of RAM could be occupied. The problem was not filling up the RAM as such; problems only appeared in situations where a game needed more fast RAM than the 3.5GB available. On a closed system like the XSX, memory allocation can be even more efficient than on the GTX 970, since on PC, games are designed to run on hundreds of GPU models with different amounts of RAM. Let's also not derail the main subject here, which is to make clear that the XSX's additional bandwidth will help with ray tracing.

Automatic VRAM allocation has been a thing on PC GPUs for a very long time. No one has to worry about "hundreds of different GPUs" when developing for PC. 🙄

Cool, you're a dev with practical hands-on experience then, awesome.

Wow, sarcasm.

Well, I have a master's in CS, yes... so some of these things are not rocket science; they have been done before in the world of computing and have been explained by MS.

Numbers on paper mean absolutely nothing until Microsoft gets off their asses and shows some Series X gameplay.

Automatic VRAM allocation has been a thing on PC GPUs for a very long time. No one has to worry about "hundreds of different GPUs" when developing for PC. 🙄

I'm aware of that. I was referring to how, when developers make a game, they have to take into account different RAM sizes and speeds. On PC you also have the option to change settings to levels your GPU cannot support at a good frame rate. No developer out there will create a game that needs 30GB of GPU RAM, for example. The point here is that it's easier to optimize for a console. We can be sure that most developers will be making sure not to go over budget on the high speed XSX RAM and kill the performance by loading a task that requires high bandwidth into the slow memory pool.


I doubt memory speed is even much of a factor for 99% of devs out there. Bandwidth just translates to performance. PC devs tend to focus on one or two specs, and just build scalability from there.

Also, that "We can be sure that most developers will be making sure not to go over budget on the high speed XSX RAM and kill the performance" is probably not a safe bet, considering the performance issues of most console titles released over the past couple of generations. We can probably count on first-party devs not to, though.

It has a slightly stronger GPU, so it should have better RT, but the question is: how much better will it actually look in comparisons?

It would also help if Microsoft showed some next-gen games running on the damn console at this point.


My guess is that we will only be able to tell the difference on exclusive first party titles. Those devs will have the time, resources and expertise to push that 20% into differences that will be noticeable.

I expect for 99% of games though, the differences will be minor. I'm guessing slight improvements to IQ and possibly more stable frame rates or resolution.

Take a look at PC for an example. With a 20% increase over a console, an entry-tier GPU will be doing mostly the same, just at better frame rates. It usually takes something more powerful than that (there are some exceptions) to start pushing settings up and doubling the frame rate.

I doubt memory speed is even much of a factor for 99% of devs out there. Bandwidth just translates to performance. PC devs tend to focus on one or two specs, and just build scalability from there.

Also, that "We can be sure that most developers will be making sure not to go over budget on the high speed XSX RAM and kill the performance" is probably not a safe bet, considering the performance issues of most console titles released over the past couple of generations. We can probably count on first-party devs not to, though.

OK, so I should expect most developers making games for the XSX not to care about going over budget with the fast RAM, and to willingly drop performance by putting tasks that require high bandwidth on the slow portion of RAM?


It won't be willful. It'll just be something that happens. The level editor dude wanted that tree there, or the script editor brought in the wrong LOD model for this sequence, etc. Most developers don't have the time to optimize to a level where everything is perfect, even on fixed hardware.

I mean, did the FromSoftware guys purposely decide to have so many issues running their Dark Souls games on consoles? I doubt it.

Tools and engines are getting better at automating this stuff though, so who knows.


This will immediately show itself as a drop in performance when it happens. You seem to be saying that most developers won't care about game optimization on the XSX and will just use the RAM pools without any thought. I even said that I expect most developers not to go over budget, allowing for the few that will. Your answer to that was "probably not a safe bet", so you seem to expect most developers to go over budget with the high-speed RAM and kill performance.

It...doesn't have ray tracing so it doesn't count for showing off the Series X ray tracing?

Full path traced Minecraft with direct feed capture from an Xbox Series X (this is from March 2020):

Anything not bound by SSD speeds will look better on SX. This is a fact.

But not much better, just a tiny bit better. Think of it this way: the average person can't tell upscaled 1440p from 4K, even though 1440p has only 44% of the pixels of 4K.
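The 44% figure is a pixel-count ratio (2560×1440 against 3840×2160), which is easy to verify:

```python
def pixel_ratio(w1: int, h1: int, w2: int, h2: int) -> float:
    """Fraction of the second resolution's pixel count covered by the first."""
    return (w1 * h1) / (w2 * h2)


ratio = pixel_ratio(2560, 1440, 3840, 2160)
print(f"1440p has {ratio:.1%} of the pixels of 4K")
```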

PS5 is expected to have 85% of the rasterization/RT performance of the SX.

You can easily do the math. Nobody will be able to tell the resolutions apart* if games run at equal frame rates. It is only if games run at the same resolution on PS5 and SX that you may notice the PS5 dropping frames, running at 51 FPS when the SX is running at a GPU-bound 60 FPS.
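The 51 FPS figure is just 60 × 0.85. A small sketch, assuming a purely GPU-bound game where frame time scales inversely with GPU throughput (real games can also be limited by the CPU or memory bandwidth):

```python
def gpu_bound_fps(reference_fps: float, relative_throughput: float) -> float:
    """FPS on a GPU with `relative_throughput` of the reference GPU's performance,
    assuming a purely GPU-bound workload (frame time inversely proportional to throughput)."""
    return reference_fps * relative_throughput


def frame_time_ms(fps: float) -> float:
    """Milliseconds per frame at a given frame rate."""
    return 1000.0 / fps


ps5_fps = gpu_bound_fps(60.0, 0.85)  # 85% of the SX's GPU throughput
print(f"{ps5_fps:.0f} FPS, {frame_time_ms(ps5_fps):.1f} ms vs {frame_time_ms(60.0):.1f} ms")
```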

It's the magic of higher resolution: the higher the resolution, the more you can get away with, upscaling-wise. I'd wager DLSS benefits from the same principle.

But yeah, devs: don't run games at the same resolution on both. Games on SX should get a 10-20% resolution bump over their PS5 counterparts, depending on how much better the wider but slower architecture performs against the PS5.
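A sketch of the resolution-bump arithmetic, assuming the SX's advantage is the inverse of the 85% figure (about 1.18×) and that GPU cost scales linearly with pixel count. Because pixel count grows with the square of each axis, a roughly 18% pixel-count bump is only about an 8.5% increase per axis. The 2560×1440 base is just an example.

```python
import math


def matched_resolution(base_w: int, base_h: int, relative_throughput: float) -> tuple[int, int]:
    """Resolution a GPU with `relative_throughput` times the base GPU's performance could
    render at the same frame rate, assuming GPU cost scales linearly with pixel count."""
    axis_scale = math.sqrt(relative_throughput)  # pixel count grows with the square of each axis
    return round(base_w * axis_scale), round(base_h * axis_scale)


sx_advantage = 1 / 0.85  # ~1.18x, the inverse of the 85% figure
w, h = matched_resolution(2560, 1440, sx_advantage)
print(f"{w}x{h}: {w * h / (2560 * 1440) - 1:.0%} more pixels, {w / 2560 - 1:.1%} more per axis")
```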


More shaders and more CUs should put Series X on top.

This is a myth rooted in misunderstanding.

RT is purely related to shading performance, so the GPU's overall TFLOPs (i.e. floating-point performance) matter most. The difference between XSX and PS5 is 18%, so that's an 18% advantage to XSX.
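The 18% figure can be reproduced from the published specs with the usual peak-FP32 formula: CUs × 64 shader lanes × 2 FLOPs per clock (a fused multiply-add) × clock. The PS5 number uses its maximum 2.23 GHz clock, which is variable, so treat it as a peak.

```python
def peak_tflops(cus: int, clock_ghz: float, lanes_per_cu: int = 64, flops_per_lane: int = 2) -> float:
    """Peak FP32 TFLOPs: CUs x 64 lanes x 2 FLOPs per clock (FMA) x clock in GHz / 1000."""
    return cus * lanes_per_cu * flops_per_lane * clock_ghz / 1000.0


xsx = peak_tflops(52, 1.825)  # Series X: 52 CUs at a fixed 1.825 GHz
ps5 = peak_tflops(36, 2.23)   # PS5: 36 CUs at up to 2.23 GHz (variable clock)
print(f"XSX {xsx:.2f} TF, PS5 {ps5:.2f} TF, ratio {xsx / ps5:.2f}")
```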

On the other hand, since RT is evaluated on a per-pixel basis, rendering at a correspondingly lower resolution on PS5 (roughly 15% fewer pixels, since 1/1.18 ≈ 0.85) will mean a lower RT cost for the same visual quality (i.e. samples per pixel).
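A minimal sketch of the per-pixel argument, assuming an effect that traces a fixed number of rays per pixel (samples per pixel) and ignoring costs that don't scale with resolution, such as building or updating the BVH. Under that assumption, ray count, and therefore RT cost, is proportional to pixel count. The 3520×1980 resolution is a hypothetical example of a ~15% lower pixel count.

```python
def rays_per_frame(width: int, height: int, samples_per_pixel: float) -> float:
    """Rays needed per frame for a per-pixel RT effect at a fixed sample rate."""
    return width * height * samples_per_pixel


full = rays_per_frame(3840, 2160, 1.0)
reduced = rays_per_frame(3520, 1980, 1.0)  # hypothetical lower PS5 resolution
print(f"{reduced / full:.1%} of the rays at the same samples per pixel")
```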

Some folks have opined that the higher memory bandwidth on XSX might also play a role in disproportionately widening the gap, but the reality is that, because RT is highly bandwidth-reliant, a lower resolution on PS5 would offset the pressure on memory bandwidth and thus even everything out.

In all likelihood, RT effects on both consoles will be identical (i.e. same pixel sampling rate), with the only difference being rendering resolution.

No, MS showed the AMD formula for ray tracing in the Hot Chips clip:

4 × number of CUs × CU clock

4 × 52 × 1825 MHz ≈ 380 giga rays (billion ray-box intersection tests per second)

So you don't need rumours. Clock and CU count are as important as one another.

That's the formula for calculating the giga rays, but it doesn't prove that clock is as important as more CUs. We have to wait and see, but most people think CUs scale better with RT, and even if they scaled the same, Xbox still has a big bandwidth advantage for RT tasks.
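The Hot Chips formula can be checked with a few lines of Python. The factor of 4 is taken to be RDNA2's peak ray-box intersection tests per CU per clock, and the clocks are the published 1825 MHz (Series X) and up-to-2230 MHz (PS5) figures. These are theoretical peaks for box tests only, not sustained rays per second in a game.

```python
def peak_ray_box_gps(cus: int, clock_mhz: float, tests_per_cu_per_clock: int = 4) -> float:
    """Peak ray-box intersection tests per second, in billions: 4 x CUs x clock."""
    # CUs x MHz gives millions of tests per second; dividing by 1000 gives billions.
    return tests_per_cu_per_clock * cus * clock_mhz / 1000.0


xsx = peak_ray_box_gps(52, 1825)  # Series X clock is 1825 MHz
ps5 = peak_ray_box_gps(36, 2230)  # PS5 at its maximum 2230 MHz
print(f"XSX ~{xsx:.0f}G/s, PS5 ~{ps5:.0f}G/s, ratio {xsx / ps5:.2f}")
```

Because the formula is a plain product, a given percentage change in CU count or in clock moves the peak by the same percentage, which is the point being argued; whether real workloads scale that way is a separate question.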

We should probably wait to talk about this until we see some more (any) games running on XSX hardware. I don't think we have seen any gameplay on XSX with ray tracing so far?

The Minecraft path tracing demo.

It's actually a misinformed take (like so much around these consoles, especially the PS5), since ray-tracing cost scales roughly linearly with resolution. So there would actually be no difference in ray-tracing quality between the XSX and PS5 versions of games despite the performance delta, just a difference in resolution.

Pretty sure Digital Foundry has expressly said that this is wrong.