View: https://www.youtube.com/watch?v=LdrzRVFGR2w

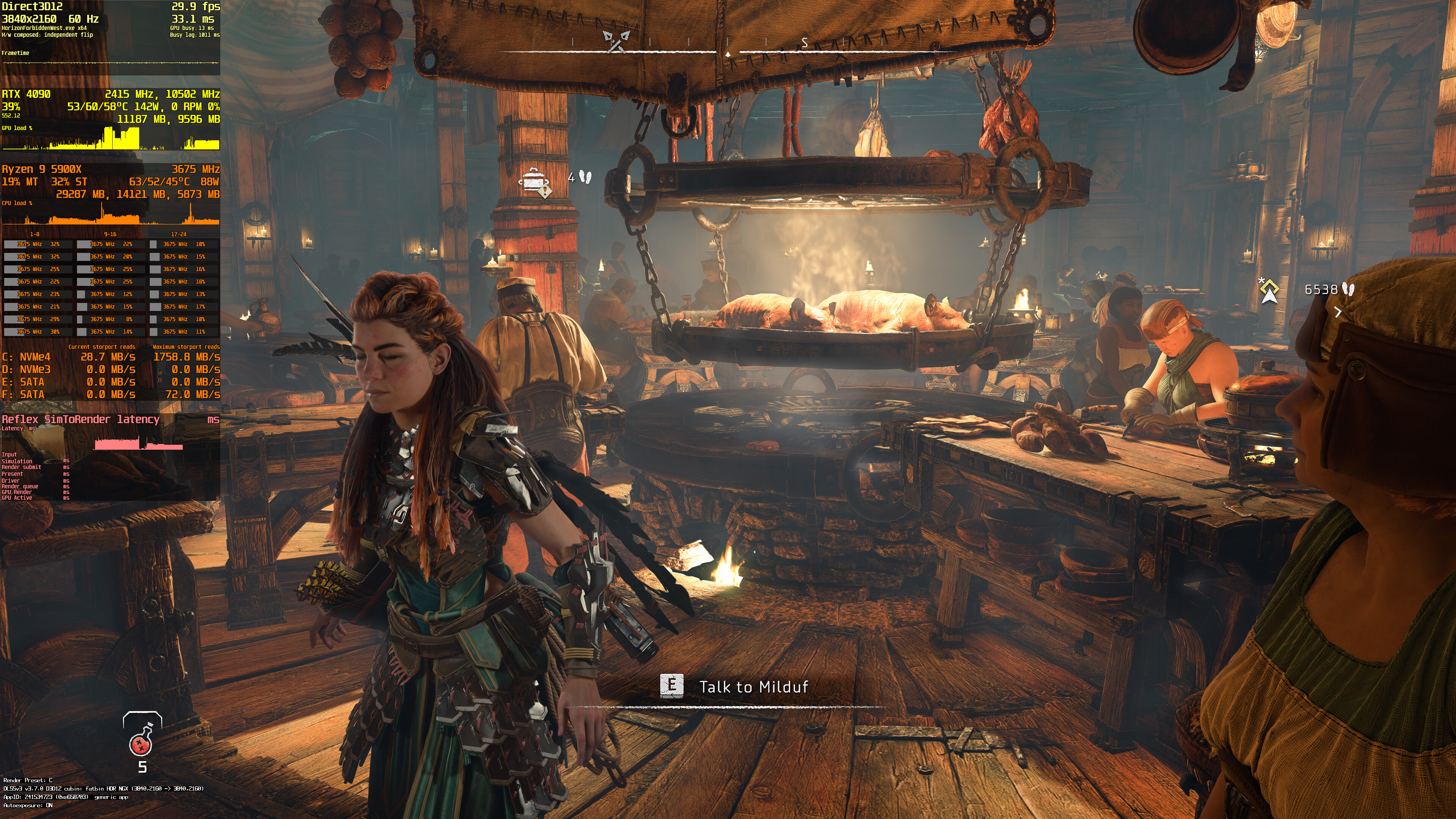

A benchmark of the new patch from today.

There are still frametime issues in both GPU- and CPU-limited runs on a 10700F. The new patch seems to be doing a bit better though.

Also of note (if it's not a result of streaming and/or shader compilation): the latest patch seems to have reduced CPU load a bit.

Also, a new Nvidia driver with an official ReBAR profile is out today:

GeForce Game Ready Driver 552.12 for Windows 10 64-bit and Windows 11 (English US), released 2024.4.4, from www.nvidia.com

After 60 hours of play I can safely say that the microstuttering is there without Reflex too; Reflex just amplifies it (On+Boost more so than just On btw). Frame Gen is broken because it turns on Reflex and introduces microstuttering.

But since you are getting higher performance with FG the stutters are less noticeable as the frametimes are smaller.
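To put rough numbers on that, here is a quick sketch of the frametime arithmetic. The framerates are made-up examples, not measurements from the benchmark, and the "a stutter frame takes twice as long as a normal one" rule is purely an assumption for illustration:

```python
def frametime_ms(fps: float) -> float:
    """Average time per frame in milliseconds for a given framerate."""
    return 1000.0 / fps


# Hypothetical framerates, not taken from the benchmark video.
base_fps = 60.0   # assumed framerate without Frame Generation
fg_fps = 100.0    # assumed framerate with Frame Generation

# Assumption: a stutter makes one frame take twice as long as a normal one.
for label, fps in (("without FG", base_fps), ("with FG", fg_fps)):
    normal = frametime_ms(fps)
    spike = 2 * normal
    print(f"{label}: {normal:.1f} ms per frame, stutter frame {spike:.1f} ms")
```

Under those assumptions the stutter frame is shorter in absolute terms at the higher framerate, which would fit with it being less noticeable.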

So after playing for ~20 hours without FG I've turned it on. Generally a better experience despite the judder, I'd say. But this may depend on what GPU you're using and what your base framerate is, of course.