On the other side of the AI spectrum:

www.nbcnews.com

There were a lot of quotable bits, but overall it seems like a promising new tool (still in clinical trials) that could potentially join the many AI tools already FDA-approved for radiology. The sooner cancer can be detected and treated the better, and it seems kinda crazy that a tool like this could detect signs of cancer potentially over a year before a doctor could spot them in a scan.

It's also good that they touch on the idea of "AI-assisted" work instead of replacement, because in the medical / health field in particular, it seems like there's still a ton of room for AI to grow in how it supports public health when it assists the work rather than replacing the people doing it.

This particular article is new, though I think it's based on a story from January. Either way, I didn't see a thread for it.

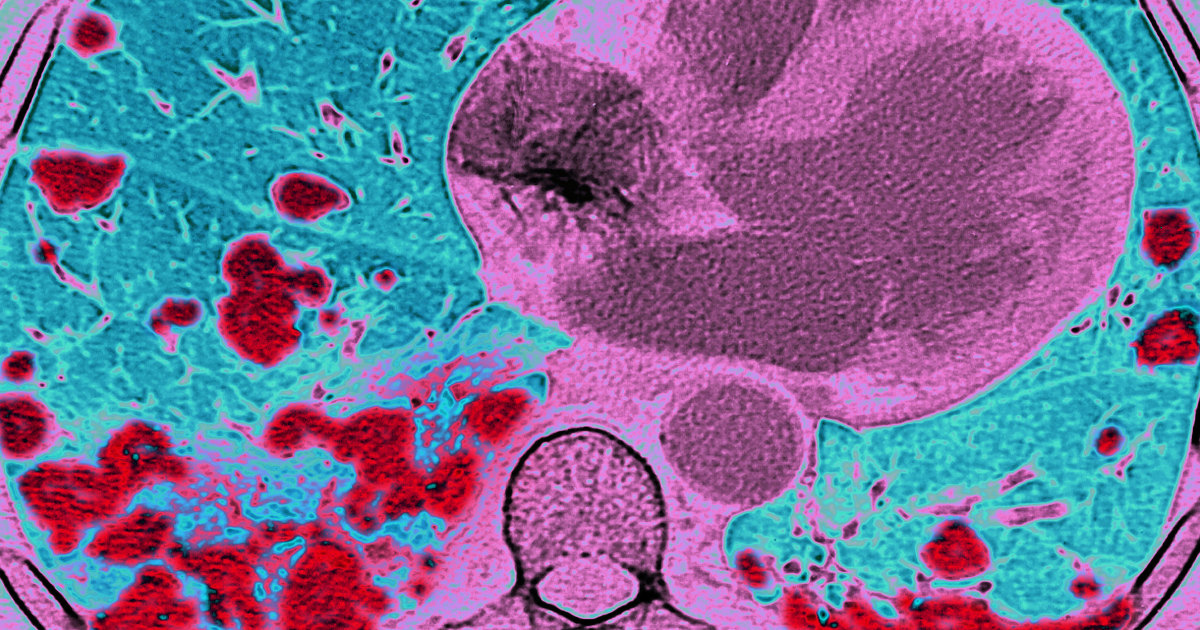

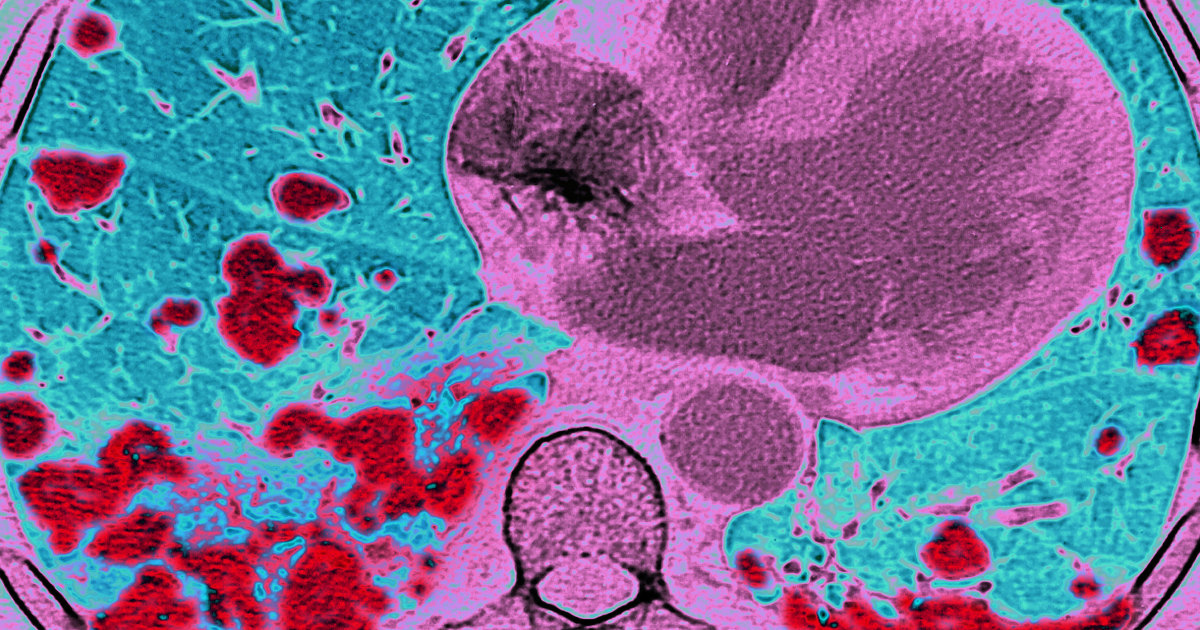

Promising new AI can detect early signs of lung cancer that doctors can't see

The tool, Sybil, looks for signs of where cancer is likely to turn up so doctors can spot it as early as possible.

The new AI tool, called Sybil, was developed by scientists at the Mass General Cancer Center and the Massachusetts Institute of Technology in Cambridge. In one study, it was shown to accurately predict whether a person will develop lung cancer in the next year 86% to 94% of the time.

The tool, experts say, could be a leap forward in the early detection of lung cancer, the third most common cancer in the United States, according to the CDC. The disease is the leading cause of cancer death, according to the American Cancer Society, which estimates that this year there will be more than 238,000 new cases of lung cancer and more than 127,000 deaths.

But early detection is difficult, she said. Since the lungs can't be seen or felt, the only way to spot it early is with a CT scan. By the time symptoms appear, including persistent coughing or trouble breathing, the cancer is usually advanced and the most difficult to treat.

Past research has shown that screening with low-dose CT scans can reduce the risk of death from lung cancer by 24%, because they can help detect cancer sooner, when it's more treatable.

But an AI tool could potentially increase the rates of early detection of lung cancer — and potentially increase survival rates as well, Sandler said.

There have been cases, Fintelmann added, where Sybil has detected signs of cancers that radiologists did not detect until nodules were visible on a CT scan years later.

Fintelmann said he sees a future in which the AI tool is helping radiologists make important treatment decisions — not replacing radiologists altogether.

"The future of radiology is going to be AI-assisted," he said. "You will still need a radiologist to identify where the cancer is, identify the best possible treatment and actually do the treatment."
