Assets are actually pretty close, and the workflows and tools are similar. Rendering is at least decades apart. Hardware is just not there yet for real-time to reach high-budget CGI quality. There are also physics simulations and scene detail levels that aren't even in the same ballpark.


Right now, and apart from IQ and render budget, how big is the gap between high-end videogames and state of the art CGI?

- Thread starter Max|Payne


You know, it'd be a really good discussion to talk about how the market's shifting interest, i.e. from linear games to open worlds and freedom, has affected the tech advancements made today, vs. titles like The Order, Quantum Break etc.

For me, animation fluidity is still a holding point for a lot of games. Folks having very limited animations and transitions into them is glaring compared to all the movements we see of characters in films.

Ok, returning to my initial question, just how much more detailed is Thanos in Endgame compared to, say, Kratos in the new God of War? Gimme numbers on those polycounts and material resolutions.

In terms of raw numbers, films generally use "UDIM" textures, which basically means multiple UV tiles per model. In game terms you can think of a UV tile as a single texture, since games generally only use a single tile (unless they're laying out the UV islands across tiles, in which case the texture generally just repeats rather than the game loading an entirely new one).

How many UV tiles? Well, some films are known to use quite literally hundreds of 4K tiles per hero asset (characters etc.). The software Mari is generally used, since it's designed to handle that level of texture resolution, alongside really beefy workstations.

(Or if you're Disney, "Ptex" textures, a non-human-readable format where each face gets its own UV island, which removes the stretching artefacts that normal UVing gives you. The same level of resolution applies though.)
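To put "hundreds of 4K tiles" into rough numbers, here is a minimal Python sketch of the uncompressed size of a UDIM texture set. The 8-bit RGBA format, the single map per asset, and the 200-tile count are illustrative assumptions, not figures from any production; film textures are often stored at higher bit depths and with several maps per asset, so real totals can be larger.

```python
# Rough, uncompressed size of a UDIM texture set.
# Assumptions (not production figures): square tiles, 4 channels,
# 1 byte per channel, a single map per asset.

GIB = 1024 ** 3
MIB = 1024 ** 2


def texture_set_bytes(tiles, tile_size=4096, channels=4, bytes_per_channel=1):
    """Return the uncompressed size in bytes of `tiles` square tiles."""
    return tiles * tile_size * tile_size * channels * bytes_per_channel


single_tile = texture_set_bytes(1)    # one 4K tile, roughly a single game texture
hero_asset = texture_set_bytes(200)   # hypothetical "hundreds of tiles" hero asset

print(f"one 4K tile:  {single_tile / MIB:.0f} MiB")
print(f"200 4K tiles: {hero_asset / GIB:.1f} GiB")
```

Even under these conservative assumptions, a single hero asset's textures would not fit in the memory of a typical console or consumer GPU without streaming or compression.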

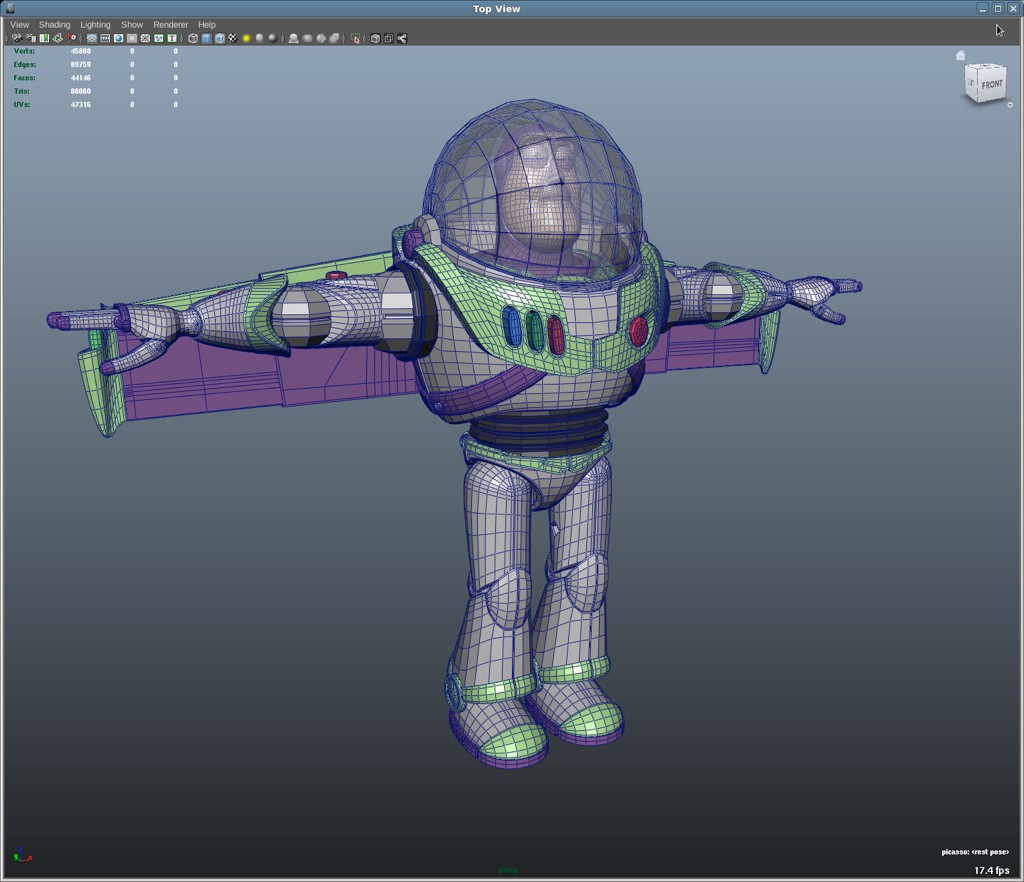

In terms of polycount, this can actually be slightly surprising. Films generally use subdivision surfaces nowadays, which you can think of as an advanced form of tessellation that takes a low poly mesh and then smooths out the raw edges by adding more polygons:

Something that sets this apart from standard tessellation in games is that the result is predictable: a subdivided cube will always converge to the same smooth, roughly spherical shape with enough levels of subdivision. Because of this, models in films can actually be fairly low poly to begin with:

(I think this was from Toy Story 3? Here's the article they were from.)

Once loaded into the renderer, however, the models are generally subdivided to a level where there are more polygons than there are pixels, then displaced using displacement maps to create more complex shapes that mimic, say, the ZBrush sculpt. In many cases this means not using normal maps, but rather just having enough polygons to model the pore-level detail directly. So the end result is multiple orders of magnitude more polygons than what the artist sees in the viewport.
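As a back-of-the-envelope illustration of "more polygons than pixels", the sketch below assumes a quad-only control mesh where each subdivision level splits every quad into four, as Catmull-Clark style schemes do. The 20,000-quad cage is a hypothetical figure, and real renderers typically subdivide adaptively per shot rather than uniformly.

```python
def faces_after_subdivision(base_quads, levels):
    """Quad count after `levels` uniform subdivisions (each quad becomes four)."""
    return base_quads * 4 ** levels


def levels_to_exceed(base_quads, pixel_count):
    """Smallest number of levels at which the face count exceeds the pixel count."""
    levels = 0
    while faces_after_subdivision(base_quads, levels) <= pixel_count:
        levels += 1
    return levels


cage_quads = 20_000          # hypothetical low-poly control mesh
hd_pixels = 1920 * 1080
uhd_pixels = 3840 * 2160

for name, pixels in (("1080p", hd_pixels), ("4K", uhd_pixels)):
    n = levels_to_exceed(cage_quads, pixels)
    print(f"{name}: {n} levels -> {faces_after_subdivision(cage_quads, n):,} quads")
```

Because the count quadruples per level, only a handful of levels separate a viewport-friendly cage from a mesh with more faces than the frame has pixels.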

Pixar actually released a video showing how they use subd surfaces which contains some actual film assets, if you're interested:

And to get an idea of the level of detail we're talking about, a while ago Disney released the background assets for the island in Moana for free. The data for just that one shot comes to around 100GB, and there is an interesting blog post where someone tries to actually render that data at home. The end result is slightly different, owing to the lack of additional lights and the proprietary engine that Disney use, but they still managed to get fairly close. This isn't even close to real time though: they had to disable a significant amount of geometry to even get it to render on their PC without it instantly crashing, and using a render farm they managed to render the whole scene after 3.5 hours at less than HD resolution. So yeah, games still have a long way to go.

> For me, animation fluidity is still a holding point for a lot of games. Folks having very limited animations and transitions into them is glaring compared to all the movements we see of characters in films.

It helps that films have to only worry about a single shot instead of a player.

You are correct.

> It helps that films have to only worry about a single shot instead of a player.

It absolutely does, but if someone were to ask me what will always hold back high-end big-budget titles from matching high-end big-budget films, this is it. Not everyone wants to be as meticulous as Rockstar with animation and transitions. And not even they are perfect.

> For me, animation fluidity is still a holding point for a lot of games. Folks having very limited animations and transitions into them is glaring compared to all the movements we see of characters in films.

How many games are using motion matching?

That could at least help close the gap between NPCs and CGI characters in terms of animation fidelity.

As for the player character, will we ever reach a point where the animation system will get really good at handling sudden changes in animation state from user input while always looking natural?

> It absolutely does, but if someone were to ask me what will always hold back high-end big-budget titles from matching high-end big-budget films, this is it. Not everyone wants to be as meticulous as Rockstar with animation and transitions. And not even they are perfect.

Ofc, but that's more due to the nature of games. Input response was a major priority this gen, compared to last gen where most games sacrificed it for animation quality, which was also hindered by poor framerates. This gen is different because at the 30fps mark, framerates have been relatively stable, and due to market trends shifting, most people want good input response.

So far, For Honor and TLOU2. Guerrilla is currently conducting research into that technology for HZD2.

> You should watch some UE4 vis demos to see where the cutting edge in terms of real-time graphics is. While it is possible that someone is further along in one aspect or another, the movie sequence from the Troll demo should be the most advanced in overall quality/features.
>
> "Troll" from Goodbye Kansas and Deep Forest Films | GDC 2019 | Unreal Engine
>
> During the "State of Unreal" GDC opening session, Goodbye Kansas and Deep Forest Films revealed "Troll," a real-time technology demonstration using Unreal En... www.youtube.com
>
> The average movie rig is still way more complex than the game model. Muscle and fat systems which drive something like Pacific Rim monsters, hair for Planet of the Apes, facial animations of Disney characters - way beyond games: both because of processing power and because nobody can afford it. You will see those monsters for minutes on-screen, and there will be a team of people working on a single character for a year.
>
> It is not just processing power, the cost of building VFX is in manpower/runtime. Even if you had the infinite processing power machine for gaming, the game still needs at least 10x the runtime looking good with every angle without any manual post-effects or compositing.

Specifically talking about characters and animations and muscle systems, we have caught up with the best that CGI has to offer in real-time, but it just isn't consumer viable yet. In other words, it's in the tech demo phase. There is a good showcase of that, in VR no less, driven by real people.

In 5 years or so, this could be the norm.

> I feel this too. Look at something like Detroit or TLOU2 when it comes to characters. Still artificial looking but getting there. Imagine the huge leap that's coming with next gen consoles, and I see something like Detroit made for next gen having truly indistinguishable moments from time to time, if animation and everything else is at a top level. The generation after that (10 years)... yeah, I can see it being possible to be playing games that are verging on Blade Runner cityscapes or CGI rendered humans that look truly convincingly real. It will take time and cost a lot to make games of that level, but it will be possible with the technology that will be around in 10 years' time that is affordable for consumers.
>
> Edit: Hell, I truly expect some next gen games will be there, to be honest. I never expected games of the visual fidelity of Detroit or TLOU2 this gen!

See above. Characters are already past the uncanny valley, but locked down to tech demos.

I frankly feel that this generation has very nicely surpassed the uncanny valley. Which is a really big step.

> Maybe eventually, but with a significant lag. Toy Story 1 had ray tracing over 20 years ago and we are just getting it in real time now. Plus our ability to make transistors smaller is slowing down, so processing gains over time aren't as big as they used to be. It will take a paradigm shift to get to where movies are now imho. Either something like quantum computing becoming real, or shifting game rendering to super powerful data centers and streaming the game to get more real time rendering power. Home computing power isn't going to be able to get to current movie tech in real time even in 20 years based on current trends.

There are still big software optimizations to be done. That's how raytracing became somewhat feasible in recent years.

I mean top of the line CG is out there right now. We aren't close to that. But we made some damn fine progress.

> Maybe eventually, but with a significant lag. Toy Story 1 had ray tracing over 20 years ago and we are just getting it in real time now. Plus our ability to make transistors smaller is slowing down, so processing gains over time aren't as big as they used to be. It will take a paradigm shift to get to where movies are now imho. Either something like quantum computing becoming real, or shifting game rendering to super powerful data centers and streaming the game to get more real time rendering power. Home computing power isn't going to be able to get to current movie tech in real time even in 20 years based on current trends.

Toy Story wasn't raytraced. The first Pixar film to use raytracing was Monsters University.

> Toy Story wasn't raytraced. The first Pixar film to use raytracing was Monsters University.

As in, fully raytraced? Cars only used RT for the reflections?

Still very far. We won't even reach FFX CGI levels next gen. And that's from 2001. Makes me wonder when we will get close to FFX CGI. PS6? 7?


I had a graphics programming teacher tell me that the cityscape scenes in Big Hero 6 had like six million rays being traced to get the proper reflections in skyscrapers and what-not. We're currently tracing like, what, less than a dozen in Minecraft, if you've got a beast rig?

Real-time ray tracing is the brass ring, but we aren't even close to the end result you get from chunking through a scene at a rate of a day per second in a render farm.


> I had a graphics programming teacher tell me that the cityscape scenes in Big Hero 6 had like six million rays being traced to get the proper reflections in skyscrapers and what-not. We're currently tracing like, what, less than a dozen in Minecraft, if you've got a beast rig?
>
> Real-time ray tracing is the brass ring, but we aren't even close to the end result you get from chunking through a scene at a rate of a day per second in a render farm.

A dozen per pixel. I have no idea if that dozen number is correct, but this would be per pixel.

> Kratos in the new God of War actually looks better than Endgame's Thanos, and this is more to do with art styles than horsepower and the fact that SSM are a crazily talented bunch; just a notch below Naughty Dog when it comes to technical efficiency though.

Oooh, yeah. Ummmm, I'm gonna have to go ahead and uh, disagree with ya there, m'kay?

> People are missing OP's point, they didn't ask for "yes the gap exists/is big", they asked "how big is the gap and tell me in hard numbers/details"

Exactly, thank you.

Happens all the time tho. It's a bit like the "gameplay tho" posts in graphics threads.

> As in, fully raytraced? Cars only used RT for the reflections?

I think for global illumination, rather than just specific materials reflections

> A dozen per pixel. I have no idea if that dozen number is correct, but this would be per pixel.

A dozen is too small for samples per pixel; you'd be talking 100s or 1000s. 12 bounces is probably a bit too high for a game at this point.

But to answer the OP's question, I don't think the geometry is where the biggest difference is; it's going to be the complexity of the materials and lighting where the big difference sits.

Every time we work out how to render a great looking approximation in 1/60th of a second, CGI has literal hours in which to produce something more accurate.

Although you want to remove render budget from the equation, it is everything.

The big changes for games production come because you can actually see the perfectly accurate ground-truth version of how you want your game to look by waiting perhaps a few minutes rather than a few days, so our ability to iterate upon art and clever fakery to get closer to perfection is rapidly sped up.

But meanwhile CGI can do all of the same things and still put a theoretically infinite budget of time/resources into a single frame of video.
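To make the budget difference concrete, here is a small sketch comparing per-frame render time. The one-hour offline frame is an illustrative assumption (the post only says "literal hours"), and `budget_ratio` is a hypothetical helper name.

```python
def budget_ratio(offline_seconds_per_frame, realtime_fps):
    """How many times more render time an offline frame gets than a real-time frame."""
    # A real-time frame gets 1 / realtime_fps seconds, so the ratio is
    # offline_seconds / (1 / fps) = offline_seconds * fps.
    return offline_seconds_per_frame * realtime_fps


one_hour = 60 * 60  # assumed offline render time for a single frame, in seconds

print(f"vs 60 fps: {budget_ratio(one_hour, 60):,}x the time per frame")
print(f"vs 30 fps: {budget_ratio(one_hour, 30):,}x the time per frame")
```

Under these assumptions an offline frame gets over two hundred thousand times the time of a 60 fps frame, before even counting the difference in hardware.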


> A dozen is too small for samples per pixel; you'd be talking 100s or 1000s. 12 bounces is probably a bit too high for a game at this point.

It's not too small per pixel when you use denoising on what would otherwise be a lot more ray samples. This is within the range of what games with raytracing support do today.
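For a sense of scale, the following sketch counts primary samples only (bounces are ignored) at two sample-per-pixel settings. The resolution, frame rate, and sample counts are assumptions picked to match the rough figures mentioned in the thread, not measurements from any game or film.

```python
def samples_per_frame(width, height, samples_per_pixel):
    """Total primary samples needed for one frame."""
    return width * height * samples_per_pixel


def samples_per_second(width, height, samples_per_pixel, fps):
    """Total primary samples per second at a given frame rate."""
    return samples_per_frame(width, height, samples_per_pixel) * fps


# Assumed real-time setting: 1080p, 12 samples per pixel, 30 fps, then denoised.
realtime = samples_per_second(1920, 1080, 12, 30)

# Assumed offline setting: 1080p, 1000 samples per pixel, for a single frame.
offline_frame = samples_per_frame(1920, 1080, 1000)

print(f"real-time: {realtime:,} samples per second")
print(f"offline:   {offline_frame:,} samples per frame")
```

With these numbers, a single offline frame needs more primary samples than the real-time case traces in two full seconds, which is the gap that denoising is meant to paper over.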

> Toy Story wasn't raytraced. The first Pixar film to use raytracing was Monsters University.

The first to use any raytracing was A Bug's Life, in which the raytracing was done from a shader.

The first to have it as a big part of rendering the scenes was Cars (reflections).

Monsters University had path-traced lighting, yet it was still REYES rasterized.

Finding Dory was the first purely raytraced movie from Pixar.

Not sure, but Ice Age was most likely the first animated movie to be fully raytraced.


> People are missing OP's point, they didn't ask for "yes the gap exists/is big", they asked "how big is the gap and tell me in hard numbers/details"

The thing is, there is no single number, or even a few numbers, that answers the question. The entire process of how things are done is different in many ways. In some ways it's an apples to oranges comparison.

On the geometry side, some environment shots are quite ridiculous in complexity.

E.g. in Elysium, the ring was over 3 trillion polygons.


> On the geometry side, some environment shots are quite ridiculous in complexity.
>
> E.g. in Elysium, the ring was over 3 trillion polygons.

Jesus. The last time I heard of such a ridiculous polycount was a few years ago, when they meticulously 3D scanned a famous statue and got a result that was also trillions of polygons for extreme fine detail of microfissures and the like.

> a while ago Disney released the background assets for the island in Moana for free. The data for just that one shot comes to around 100GB, and there is an interesting blog post where someone tries to actually render that data at home

I hadn't heard of or seen this, and it is awesome, even for someone who has little knowledge of the field.

Simulated SSS, fully ray- or pathtraced, more complex shaders including stuff like thin film interference, muscle/fluid simulations, proper simulated hair-/furstrands instead of cards, insanely complex environments with simulations on trees/vehicles/buildings, UDIMs and big texture res, virtually no memory constraints, displacement maps on literally everything and post production.

VFX and animation attempt to re-create the real world through its physical traits; that's how big the gap is.


> Kratos in the new God of War actually looks better than Endgame's Thanos, and this is more to do with art styles than horsepower and the fact that SSM are a crazily talented bunch; just a notch below Naughty Dog when it comes to technical efficiency though.

i think it's more a kind of uncanny valley mixing cg and "real life".

god of war lives in the world and lighting it was made in, so you don't have that kind of jarring effect when things don't line up right in what you're watching

that said, there's probably more polygons in thanos' lips than a whole scene in god of war, just for perspective

> People are missing OP's point, they didn't ask for "yes the gap exists/is big", they asked "how big is the gap and tell me in hard numbers/details"

the question is not good - need a baseline of what is the acceptable floor of cg.

the current answer if you take a ps4 in real time vs a recent cg movie is not even worth measuring.

to be fair, real-time graphics need to do a lot behind the scenes for interactivity, which is why graphics go up as interactivity goes down.

I don't think it will happen in our lifetimes tbh. A game matching the CGI in Avengers: Endgame, that is.


> Doubtful about that.
>
> Big budget movies have huge teams working on them solely to produce that visual quality.
>
> It's not reasonable to expect devs to match movie quality all while doing the work required to make the game part.
>
> I believe there is a manpower/resources limit too.

I'm pretty sure the term "render budget" refers to the amount of time you have to render a single frame, not the amount of money allocated to visuals. Games have to render their frames in ~1/30th of a second because it's all being done in real-time; movies can take as long as they want on each frame because it's not real-time.

> I'm pretty sure the term "render budget" refers to the amount of time you have to render a single frame, not the amount of money allocated to visuals. Games have to render their frames in ~1/30th of a second because it's all being done in real-time; movies can take as long as they want on each frame because it's not real-time.

Movies need to have the result out in finite time as well, especially if they want to iterate or change the shot. (Waiting on the farm for a week and then seeing a problem in a shot may not be a nice feeling.)

A year or two of work on a show is not a lot when thinking how complex those shots can be.

You should watch some UE4 vis demos to see where the cutting edge in terms of real-time graphics is. While it is possible that someone is further along in one aspect or another, the movie sequence from the Troll demo should be the most advanced in overall quality/features.

"Troll" from Goodbye Kansas and Deep Forest Films | GDC 2019 | Unreal Engine

During the "State of Unreal" GDC opening session, Goodbye Kansas and Deep Forest Films revealed "Troll," a real-time technology demonstration using Unreal En... www.youtube.com

The average movie rig is still way more complex than the game model. Muscle and fat systems which drive something like Pacific Rim monsters, hair for Planet of the Apes, facial animations of Disney characters - way beyond games: both because of processing power and because nobody can afford it. You will see those monsters for minutes on-screen, and there will be a team of people working on a single character for a year.

It is not just processing power, the cost of building VFX is in manpower/runtime. Even if you had the infinite processing power machine for gaming, the game still needs at least 10x the runtime looking good with every angle without any manual post-effects or compositing.

Very good explanation!