Battlefield V PC performance thread

- Thread starter GrrImAFridge

Could someone please explain what future frame rendering does? Why does it see such an increase in performance? Thanks!

AFAIK it lets the GPU work a tiny bit ahead of the CPU. It adds input lag, but you should see an increase in GPU usage and FPS.
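To make the "GPU works ahead of the CPU" idea concrete, here is a toy Python model of a CPU→GPU pipeline with a bounded render-ahead queue. It is a sketch under stated assumptions, not Frostbite's implementation. `simulate` and its `render_ahead` parameter are hypothetical, the frame times are made-up inputs, and "latency" is measured from the moment the CPU starts a frame (treated as when input is sampled) to when the GPU finishes it.

```python
from typing import List, Tuple


def simulate(cpu_ms: List[float], gpu_ms: List[float],
             render_ahead: int) -> Tuple[float, List[float]]:
    """Toy CPU->GPU pipeline with a bounded render-ahead queue (hypothetical model).

    Frame i may start on the CPU only after the GPU has finished frame
    i - render_ahead - 1, so at most `render_ahead` earlier frames can still
    be waiting on (or running on) the GPU. Returns the time the last frame
    finishes on the GPU and, per frame, CPU-start-to-GPU-finish latency.
    """
    n = len(cpu_ms)
    cpu_end = [0.0] * n
    gpu_end = [0.0] * n
    latency = [0.0] * n
    for i in range(n):
        # The CPU handles frames one after another...
        start = cpu_end[i - 1] if i > 0 else 0.0
        # ...but blocks while the queue of unfinished GPU frames is full.
        blocker = i - render_ahead - 1
        if blocker >= 0:
            start = max(start, gpu_end[blocker])
        cpu_end[i] = start + cpu_ms[i]
        # The GPU renders frames in order, one at a time.
        gpu_start = max(cpu_end[i], gpu_end[i - 1] if i > 0 else 0.0)
        gpu_end[i] = gpu_start + gpu_ms[i]
        latency[i] = gpu_end[i] - start
    return gpu_end[-1], latency


# Bursty load: two CPU-heavy frames, then two GPU-heavy frames, repeated.
cpu = [12.0, 12.0, 4.0, 4.0] * 4
gpu = [4.0, 4.0, 12.0, 12.0] * 4
for depth in (1, 3):
    total, lat = simulate(cpu, gpu, depth)
    print(f"render_ahead={depth}: last frame done at {total} ms")
```

In this toy model the deeper queue finishes the same 16 frames sooner (148 ms vs 172 ms), because the CPU keeps working through its heavy frames while the GPU catches up. The trade-off shows up when the game is GPU-bound: with a constant 5 ms of CPU work and 10 ms of GPU work per frame, the steady-state latency here is two GPU frame times (20 ms) with `render_ahead=1` and four (40 ms) with `render_ahead=3`, at the same frame rate.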

Yo that Future Frame rendering doing work. Dropped from 110 fps to 70 when I turned it off at the spot I tested in Rotterdam. That's on max settings at 1440p, mind you.

I'm interested to know how much it actually affects input lag.

the higher the framerate the less of an issue it is in most circumstances

my future frame rendering is blocked out, but i'd keep it off anyway. i'd rather have lower latency than more fps

It literally doubles my FPS. Also you have to note that latency in rendering is usually measured in frames - so if it adds 1 frame of latency but doubles FPS you aren't getting any additional overall input lag.
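The frames-vs-milliseconds point can be checked with a little arithmetic. This Python sketch assumes, as the post does, that render latency is some number of frames and that one frame lasts 1000 / FPS ms; `latency_ms` and `added_lag_ms` are illustrative helpers, not anything from the game.

```python
import math


def latency_ms(frames: float, fps: float) -> float:
    """Convert a latency expressed in frames into milliseconds."""
    return frames * 1000.0 / fps


def added_lag_ms(base_frames: float, extra_frames: float,
                 fps_before: float, fps_after: float) -> float:
    """Change in latency (ms) when the queue grows by `extra_frames`
    and the frame rate moves from `fps_before` to `fps_after`.
    Negative means less input lag than before."""
    before = latency_ms(base_frames, fps_before)
    after = latency_ms(base_frames + extra_frames, fps_after)
    return after - before


# One frame of latency at 60 FPS vs. two frames at 120 FPS: no change.
print(added_lag_ms(1, 1, 60, 120))
# If the baseline was already two frames, doubling FPS more than pays for the extra one.
print(added_lag_ms(2, 1, 60, 120))
```

Algebraically, going from L frames at f FPS to L + 1 frames at 2f FPS never increases latency as long as L ≥ 1, since (L + 1)/(2f) ≤ L/f exactly when L ≥ 1.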

I'm running on a 2080 Ti and the game is above 100 FPS at 1440p, but it doesn't feel smooth and stutters. That is with V-Sync off; when I turned it on, it stopped stuttering.

2080 Ti here as well. Sometimes the game was super smooth, other times I'd get stuttering despite 100-130 FPS.

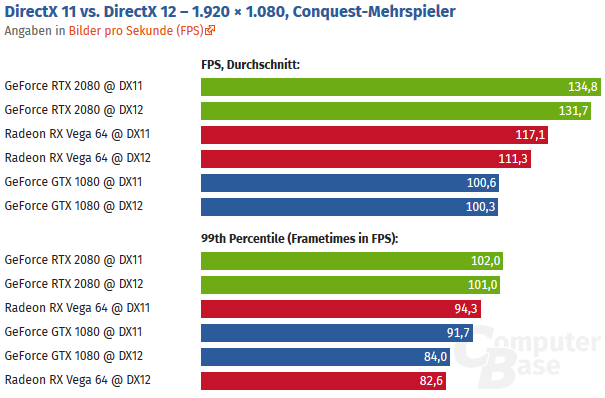

Ended up changing to DX11 and lost about 50 FPS until I enabled Future Frame Rendering, now it's consistently running smooth at an average of about 125 FPS. I may try DX12 + V-Sync as well to see what happens.

Set your graphics settings to custom to enable FFR; it will seriously add 10-20fps to your current framerate with very little increase in input lag.

Will state here again that FFR being ON is the default in BF1, BF4, BFH and pretty much every other Frostbite game out there, so if you didn't think those had any input lag, you might as well enable it and give yourself the much more important 30+ fps. :)

Good to know. The framerate difference is massive on my system. I'm curious about doing the math on the latency: according to another DICE employee on Reddit, it's 3 frames being rendered. Is this 3 frames in addition to the normal 1, or how exactly does the math work out in terms of how many frames are added?

I'm trying to figure out exactly what the difference in latency would be in ms, rather than in frames, once the higher framerate is taken into account.

Default engine value is 3. When FFR is set to OFF, the value is 1.

Does this override nvidia control panel?

Does the game run better in the final release? I remember it lagging badly on my i5 4690k and GTX 980 PC, even on medium.

GTX 970, i7-4790K OC'd at 4.5 GHz on all cores, 16 GB DDR3 2400 RAM.

I can't get to 60fps in 1080p without Future Frame Rendering, which is depressing because I absolutely noticed some input delay. And yes, I use raw mouse input and have VSync disabled. On High I get 35 to 40fps without FFR.

I mean, I wanted to switch to an RTX 2070 or 2080 in January anyway, but it's still a bit depressing, especially after how well BF1 ran on my rig.

I can't vouch for the GPU, but my Ryzen 1700 regularly reaches 80% usage on Ultra and still holds 60 fps at all times with a 1070, so your CPU could be the issue.

Well, just enable FFR. FFR is ON in Battlefield 1 anyways. :)

I have it enabled, because I need those 60fps. I did not know about the default FFR in BF1, the more you know. It somehow still felt a bit better in terms of input delay than BFV does now. Other than that, you guys did a kick-ass job. BF games are always the reason why I upgrade my PCs and V seems to be no exception. ;)

Cool, thanks for clarifying.

So, if I'm capping at 120 FPS (G-Sync gets lower latency if you stay 1 FPS or more below the monitor's maximum refresh rate, and my monitor is 144Hz):

1 frame = 8.333ms

FFR adds 2 frames, so + 16.667 ms, or equivalent to 1 frame at 60FPS. Meaning that since I can't hold 60FPS with it off, but can get over 120 with it on, it should actually be _decreasing_ or at very least breaking even in input latency before considering the other benefits of the higher framerate.
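The same break-even reasoning can be written out using the engine values quoted earlier in the thread (3 with FFR on, 1 with it off). As a simplifying assumption, this Python sketch counts input-to-display latency as the render-ahead frames plus the frame currently being rendered; it ignores the other stages of the real pipeline (game simulation, scan-out, G-Sync behaviour). The function names are illustrative.

```python
def total_latency_ms(render_ahead: int, fps: float) -> float:
    """Assumed model: (render-ahead frames + the frame in progress) x frame time."""
    return (render_ahead + 1) * 1000.0 / fps


def break_even_fps(ahead_on: int, fps_on: float, ahead_off: int) -> float:
    """Frame rate the FFR-off setting would need to match FFR-on latency."""
    return (ahead_off + 1) * fps_on / (ahead_on + 1)


FFR_ON, FFR_OFF = 3, 1  # engine values quoted in the thread

print(total_latency_ms(FFR_ON, 120))  # FFR on, capped at 120 FPS
print(break_even_fps(FFR_ON, 120, FFR_OFF))
```

Under this model, FFR on at 120 FPS works out to 4 × 8.333 ≈ 33.3 ms, which FFR off only matches at 60 FPS. A system that falls below 60 FPS with FFR off would therefore see more latency with it off, in line with the post's conclusion.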

Once I get home tonight I'm gonna try limiting to 142FPS with 'GameTime.MaxVariableFps 85' which worked in previous versions. Also plan to do some Linux testing with DXVK, BF1 ran pretty well in Linux so I'm hoping V does too!

I wouldn't use DX12 since it introduces a lot of stuttering. Really a shame since I was looking forward to trying Ray Tracing but in its current state it's pretty much unplayable.

Nonetheless this game is a real looker and exactly why I bought a 2080Ti. Getting 120-140fps at 1440p with Gsync is gaming heaven. The only thing that needs improvement is the heavy pop-in when parachuting into the map.

Hardware abstraction is something that exists for a reason, and moving to higher or lower levels of abstraction introduces a complex set of tradeoffs. I miss that aspect in the general discussion of it.

I'm not entirely sure what you're advocating here, because we can't really stay on DX11/OpenGL, and introducing a new industry-supported high-level API that is tied to the hardware by IHVs' (closed-source) drivers, while continuing to rely on their driver hacks to optimize individual titles, doesn't seem like a future worth having, nor am I sure the industry would want that. Anyway, I'll take the downsides to get away from that.

There may be some things that ended up too low-level in Vulkan, in which case it'll evolve over time to better fit. Thankfully there's a thoroughly proven extensions model for that evolution. We could point at the stuff Arseny "zeux" Kapoulkine is already showing off in public as a good example of being able to leverage new low-level HW features already. In the "old days" I think it's safe to say he'd still be waiting for MS to release a major numbered DX to the public to be able to access said features, or have to use OpenGL which, unfortunately, you can't _really_ ship PC games on (yes, I know it's been done).

I think it's too early to throw out the baby with the bath-water, especially based on relatively immature DX12 renderers.

Next patch will introduce some rendering improvements (for Dx11, Dx12, and DXR). Can't promise that it will fix everything, but hopefully things will be a bit better. :)

GTX 1080

4790k @ 4.6ghz

16GB DDR3 @ 1866mhz

Installed on 7200rpm HDD

All settings at High with HBAO using DX11 with FFR on @ 1080p. I cap the fps to 100 with RTSS, as my CPU sits between 70% and 90% usage whilst the GPU only hits about 80%. Every now and then I will get a lag spike/stutter, maybe once or twice per game (usually at the start of the round), which could be the map still loading in. Any reason why my GPU is not hitting 100%?
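One way to reason about "why isn't my GPU at 100%?" is a simple bottleneck model: the delivered frame rate is the lowest of what the CPU can prepare, what the GPU can render, and any frame cap, and the GPU sits idle for the rest of each frame. This Python sketch uses made-up CPU/GPU limits purely for illustration; `estimate` is a hypothetical helper, and real utilization counters are noisier than this.

```python
from typing import Optional, Tuple


def estimate(cpu_fps: float, gpu_fps: float,
             cap: Optional[float] = None) -> Tuple[float, float]:
    """Toy bottleneck model returning (delivered FPS, GPU busy fraction).

    cpu_fps / gpu_fps: frame rates each component could sustain alone.
    cap: optional frame limiter (e.g. an RTSS cap).
    """
    limits = [cpu_fps, gpu_fps]
    if cap is not None:
        limits.append(cap)
    fps = min(limits)
    # The GPU needs 1/gpu_fps seconds per frame but is only asked for
    # `fps` frames per second, so it is busy fps/gpu_fps of the time.
    gpu_busy = fps / gpu_fps
    return fps, gpu_busy


# Hypothetical numbers: CPU could do 110 FPS, GPU 125 FPS, capped at 100.
print(estimate(110, 125, cap=100))
print(estimate(110, 125))
```

With the cap, the GPU in this example is busy only 80% of the time even though nothing is wrong with it; removing the cap moves the limit to the (hypothetical) CPU ceiling of 110 FPS, which still leaves the GPU at 88% rather than 100%.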

Thank you!

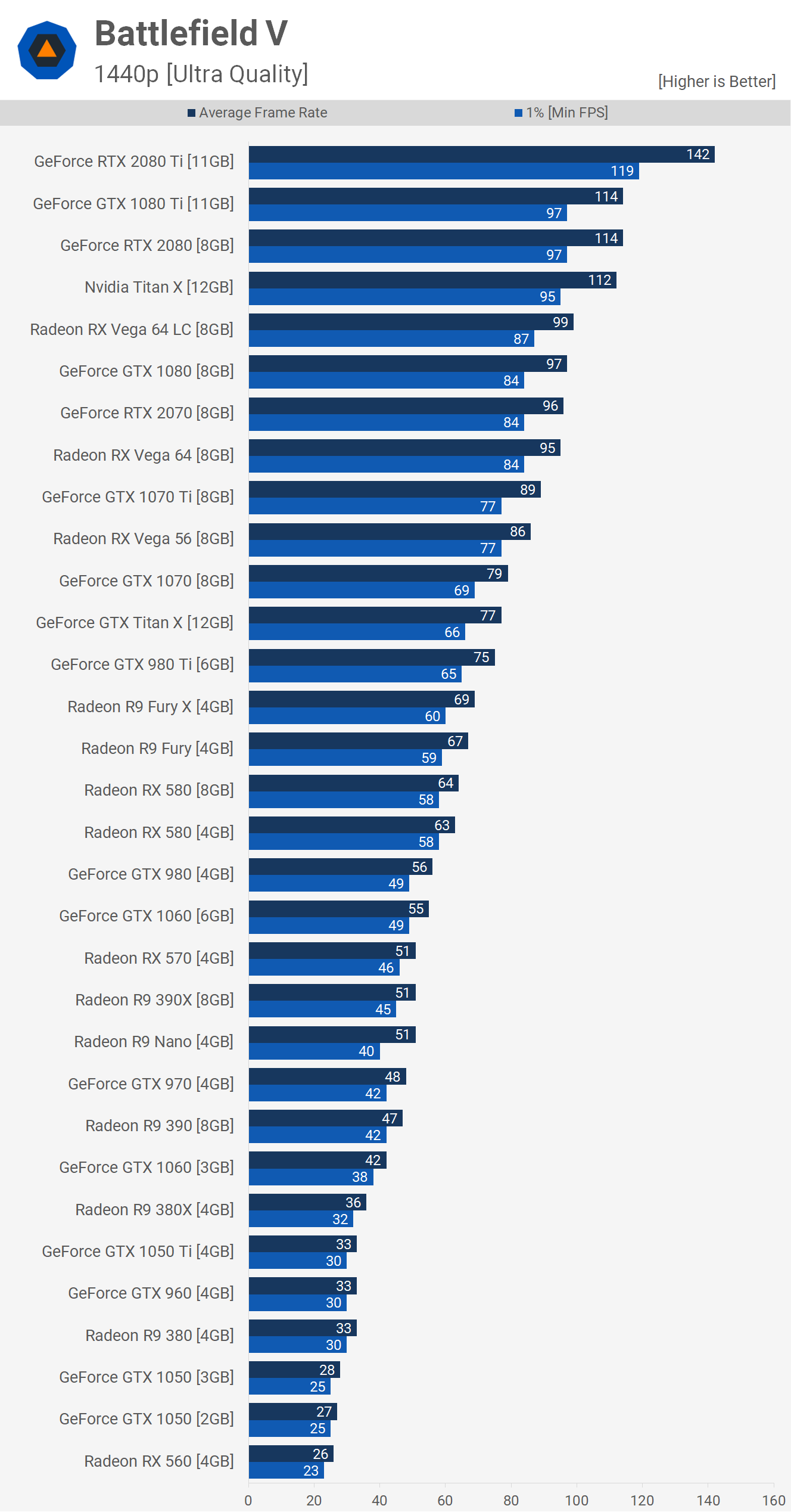

https://www.techspot.com/review/1746-battlefield-5-gpu-performance/

We haven't seen any serious performance regressions in D3D12/VK games on new GPU architectures so far, so this is a moot point. Two key issues with LL APIs are resource management (which should really be done separately for every GPU architecture out there and may lead to some performance issues when a new GPU runs old code which wasn't optimized for it) and this thing known as async compute (which should really be done separately for every single GPU, even inside one architecture). The first one seems to be doing fine so far, likely because each new GPU arch is an improvement here, meaning that running old code won't result in a slowdown, just in a lack of significant gains. The second one matters only to AMD, as NV GPUs don't need it anyway and AMD hasn't really updated their core arch since 2012; hopefully when they do, it will be an improvement for them as well, to the point where they won't need async compute to saturate their shader arrays.

And I mean, do we have a choice? To make full use of multicore CPUs we will have to use LL APIs anyway, and it's not like there's any other option, as adding more CPU cores is the only way left for further CPU performance improvements. The fact that MS adds new rendering features (DXR, DXML, etc.) only to the D3D12 runtime is pretty telling here.

What I'm primarily advocating for is more awareness in public discourse of the fact that there are downsides (and they aren't just "lazy devs have to work harder").

Basically, what you do by moving the optimization problem from the driver into the application, conceptually, is replace the combination of an N : 1 problem (optimizing N applications for a given hardware-independent interface, on the game developer side) and a 1 : M problem (optimizing processes expressed using a generic interface for M different hardware types, on the driver side) with an M : N problem. The downsides of that should be obvious, especially if you consider that "M"s can be added that aren't even a thing when you do your optimization.
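A rough way to see the N : 1 plus 1 : M versus M : N point is to count the (application, hardware) optimization pairings each side has to own. This Python sketch is a deliberate simplification: it assumes every pairing costs the same and ignores the per-title driver hacks mentioned in the thread. The function names are illustrative.

```python
def tuning_work(n_apps: int, m_gpus: int) -> dict:
    """Count optimization targets under two idealized models.

    high_level: each app targets one API (N : 1) and each driver maps
                that API onto its own GPU (1 : M), giving N + M.
    low_level:  each app tunes for each GPU itself (M : N), giving N * M.
    """
    return {"high_level": n_apps + m_gpus, "low_level": n_apps * m_gpus}


def cost_of_new_gpu(n_apps: int) -> dict:
    """Extra targets created when one new GPU architecture ships."""
    return {"high_level": 1, "low_level": n_apps}


print(tuning_work(n_apps=20, m_gpus=6))
print(cost_of_new_gpu(n_apps=20))
```

The second function mirrors the remark that new "M"s can appear after a game ships: in the low-level model every already-released title becomes a new pairing that someone has to revisit, whereas in the high-level model a single driver update covers it.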

Of course, it's not quite as dire in practice, since actually, high-end games sometimes already had some hardware-specific code paths, and as you rightfully point out drivers certainly had engine-specific optimizations. But in terms of software design the whole setup is still problematic, and it doesn't really get better in several ways by making the whole M:N relationship purely a game/engine dev issue. Especially since the software they ship is more static, generally, than the software shipped by HW vendors.

To be clear, I'm not advocating for throwing out low-level APIs and going back to DX11/OpenGL (though those are still perfectly suitable for many use cases).

That said, I think it's absolutely not proven that you e.g. need low-level control over queuing, resource management or synchronization points to effectively use CPU-side parallelism in a graphics API. It just hasn't been done so far ;)

I'm stuck on Medium at 3440x1440 with my 2500k and GTX 1080.

It's probably time that I upgraded my CPU.

What's everyone's experience with "3D sound"? If I choose to enable it, do I also need to turn DTS on in my headset software (Arctis 7)?

No, nothing is needed other than enabling "3D Sound" in-game. It's like CSGO or Overwatch. Nothing special needed. Really cool implementation.

So according to Techspot, an RX 570 can manage 60 fps at ultra settings:

Of course, that's in the singleplayer campaign while running on an i9 9900k.

Tested with an $800 CPU... I get that they don't want a bottleneck, but I feel this could give the wrong impression.

I also miss a benchmark that shows how important cores/threads are for this game.

That is really difficult, since benchmarks of MP games are not really reliable due to their randomness, and the strain on the CPU is wayyyy less in singleplayer.

But in the end you are fine as long as you have more than 4 threads. Even my i5-4670k at 4.4 GHz went under 60fps in BF1(!) on Amiens, for example.

A Ryzen 1600(X) should easily outperform even the higher-clocked 4c/4t i5 CPUs in BFV.

GameGPU said:

Such good news. I hope I can play Rotterdam with minimal or no stutter; I love that map.

For me the Narvik map runs the same. It's the most demanding map in the game, surprisingly; usually the vegetation-heavy maps are the most demanding.

Well, the core idea is to remove all of this from the driver and put it into the renderer code, meaning that the driver won't need to do nearly as much work, freeing up CPU for other things. Can it be done differently? Probably not, as for this to work it absolutely has to be a part of the renderer itself; otherwise you get the same situation where a driver needs to "guess" what the renderer wants, and this will always be a heavyweight task which will be nearly impossible to parallelize, due to the need to have all the info in one thread for any "guess" to happen.

I mean, if there were a better alternative to the D3D12/VK programming model that was easier to use while providing the same benefits, surely we would have seen it appear already, on consoles at least if not on PC. The very fact that not only MS and Khronos but Sony as well (and Nintendo too, although it's basically NV again at this point) arrived at something similar in its core concept points to a complete lack of better options.

Could be so many things, drivers, settings who knows.

You have to change the overall quality setting from Auto to custom

Edit:

Are people running the binaural audio or War Tapes?

I used the binaural initially, but everything seemed kinda quiet, so I went to War Tapes. I love accurate 3D audio though.

I'm using 3D and I will continue to do so; it's just too good.

Awesome, really looking forward to it!

Any chance it might fix the issue where after enabling DX12 the game refuses to go online (I wish we had an error log or something)? Is this a known/tracked issue? At least it's not in the public known issues list.

Sounds awesome. Do we have a date or patch notes yet?

For me it makes a big difference having it OFF:

- lower FPS (50/60s)

- lower input lag; I feel much, much more skilled with the option off.

So I've played more and 1080p ultra is mostly fine but there is still stuttering and fps tanks with big explosions on my 4.4ghz/6600k/1070. Are there any options I should be lowering in particular?

Golden rule for stuttering: make sure Dx12 is off, make sure Future Frame Rendering is on.