I don't think that's a meaningful distinction. The One X memory configuration was revealed by Microsoft's Project Scorpio CG render at E3 2016.

True, but at that time the images were actual HW and not renders.

They have to. CUs are handling vertex transform, so everything needs to scale to be able to feed them and grab the result fast enough to not bottleneck them.

What I meant is that Navi has them as part of an SA rather than part of an SE, so they scale differently, have different ratios depending on the number of WGPs/SAs/SEs.

Compared to what architecture?

Itself. 5700 vs. 5500, for instance - 18WGPs as 4 SAs and 2 SEs vs. 11WGPs as 2 SAs and 1 SE.

Right, I misunderstood the message.

In that sense yes, it's more scalable and flexible than GCN.

Only for the internal drive, because this is OS-limited. USB HDDs should be fine and come very close to your 1 Gb/s connection, which should deliver around 100 MB/s; current USB 3 drives achieve that in write performance.

Actually... I have 😂 But I admit it's an extreme case. And none of my consoles have adequate Ethernet, so... When talking about USB on consoles I was thinking about downloading while playing on the same (platter) drive, because I am sure the OS throttles the transfer the same way as with the internal drive.

A 1 Gb/s connection translates to ~112 MB/s download, which is about fast enough to max out a mid-range HDD. SSDs are in the >500 MB/s range, so unless you have a 10 Gb WAN connection, you're fine.

Nice, but close to nothing will give you that speed unless the content is already at your ISP.
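For anyone who wants to check the link-speed figures in this exchange, here is a minimal sketch of the bits-to-bytes conversion. The function name and the 0.9 efficiency factor are my own assumptions (a rough allowance for protocol overhead), not measured values.

```python
def gbit_to_mbyte_per_s(gbit_per_s: float, efficiency: float = 1.0) -> float:
    """Convert a link rate in gigabits/s to megabytes/s (decimal units).

    `efficiency` is a hypothetical fudge factor for protocol overhead;
    1.0 means the raw line rate.
    """
    bits_per_s = gbit_per_s * 1_000_000_000
    bytes_per_s = bits_per_s / 8          # 8 bits per byte
    return bytes_per_s * efficiency / 1_000_000


raw_gigabit = gbit_to_mbyte_per_s(1.0)             # raw 1 Gb/s line rate
practical_gigabit = gbit_to_mbyte_per_s(1.0, 0.9)  # assumed ~10% overhead
ten_gigabit = gbit_to_mbyte_per_s(10.0)            # raw 10 Gb/s line rate
```

With those assumptions a gigabit link lands at 125 MB/s raw and a little over 110 MB/s in practice, which is in the range of a hard drive's sequential write speed and well under what a SATA SSD can absorb.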

I read a bit about Microsoft's Sampler Feedback, it seems to achieve the purpose, but you have to use it more "explicitly" than mmaped files. I guess page faults can't be handled alone by the GPU at this moment.

Not in a conventional sense, but there could be strategies that look similar. The common approach to dealing with memory mapped content is that access is made completely transparent by simply halting the thread trying to access content that is not yet mapped until the kernel loads it, and then releasing the thread to access the newly loaded memory as if it was there all along. The problem with this approach is that it leads to unpredictable and fairly lengthy stalls which would lead to hitching frame rates while loading content.

It's more likely that the level of detail scheme or texture MIP map selection would allow graceful fallback to a lower quality asset while triggering the load for a higher quality version. Microsoft has described how they're approaching this (even on a page-by-page level for high quality textures), and it looks a little like a mapped file on the surface, but the implementation would have to be quite different.
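The graceful-fallback idea can be sketched in a few lines. This is only an illustration of the general pattern, not Microsoft's Sampler Feedback API or any console SDK: the `StreamedTexture` class, its fields, and the convention that mip 0 is the most detailed level are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class StreamedTexture:
    """Hypothetical texture whose mip levels are streamed in on demand."""
    mip_count: int
    resident: set = field(default_factory=set)   # mip levels currently in memory
    pending: list = field(default_factory=list)  # load requests not yet serviced

    def __post_init__(self):
        # Assumption: the coarsest mip is tiny and always kept resident,
        # so there is always something to sample from.
        self.resident.add(self.mip_count - 1)

    def sample_level(self, wanted: int) -> int:
        """Return the best resident mip at or below the wanted detail level.

        Never blocks: if the wanted level is missing, queue a load and
        fall back to a coarser level for this frame.
        """
        wanted = max(0, min(wanted, self.mip_count - 1))
        if wanted not in self.resident and wanted not in self.pending:
            self.pending.append(wanted)
        for level in range(wanted, self.mip_count):
            if level in self.resident:
                return level
        return self.mip_count - 1

    def service_loads(self) -> None:
        """Stand-in for the asynchronous I/O completing between frames."""
        while self.pending:
            self.resident.add(self.pending.pop(0))
```

The contrast with a memory-mapped file is that `sample_level` returns immediately with a lower-quality answer instead of stalling the caller until the data arrives; a later frame picks up the sharper mip once `service_loads` has run.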

Any news of PS5 and VRS? The MS patent on it is starting to look a bit fishy. Cerny said that their work with AMD results in new features getting added to AMD GPUs; I wonder if MS's work on VRS is somehow helping Sony. Maybe that's the reason Cerny didn't talk about VRS?

To me, that's a game-changing feature. 15-20% of extra performance is going to be crucial for running games at higher resolutions.

Fishy, how? It's a patent for their own software implementation with DX12, isn't it?

AMD has a VRS patent of its own and, if I'm not mistaken, Sony/Cerny do as well.

You bastard! Very jealous.

VRS is an RDNA2 feature. PS5 has an RDNA2 APU. Just stop already.

Fishy because why would they need to patent a DX12 feature Sony isn't going to use anyway?

Also machine learning. MS mentioned it, but Sony didn't. It's crazy to me that Cerny spent over 14 minutes on audio (38 min to 52 min) and 15 minutes on the GPU (23-38 min). Surely, gamers and devs care more about VRS and machine learning than audio.

While shorter waits at loading screens are the most obvious impact of the fast SSD, they're far from the most important. Modern games constantly stream assets from disk while you're playing, and understanding what role this plays in game design is key to understanding the most important benefits of the SSD. The Spider-Man postmortem talk from GDC is great for showing what's happening behind the scenes while you're playing:

The amount you can load in a second is directly tied to the richness of the visual spectacle that can be presented without stopping the game for a loading screen. Faster is better, and it should effectively eliminate pop-in of both high-detail models and textures, letting developers who have the luxury of building exclusives go wild. In cross-platform titles there may still be times when you notice the difference in how long it takes to switch to the highest-quality version of assets.

Sony was quite explicit that their goal is to make your experience continuous rather than breaking games up with loading. They're not content to cut 30 seconds down to 4, they want new experiences to feel instant and uninterrupted. All we've really seen on the Series X is faster loading in backward compatibility, and of course that'll be similar if perhaps to a different degree on the PS5, but it's far from the most interesting way to use the technology.

Thanks for taking the time to answer my questions!

I watched the video and although most of it is out of my zone of knowledge I did understand some of it.

They spent a lot of time optimising with current streaming restrictions. They specifically mentioned that it took them a month to just make a scene seem continuous when entering a building but they finally bit the bullet and added loading screens for other parts of the game. The speed with which you can traverse the city is also restricted if I understand things correctly. So I guess this could be a thing of the past going forward. I wonder if devs will use the resources saved to make a "better" game or make games cheaper and faster. I suppose first-party devs, at least, want to have showcase games to sell the console.

So, I'm curious, what do you think are the most interesting ways they could use the technology?

Machine learning was mentioned in the second Wired article:

"I could be really specific and talk about experimenting with ambient occlusion techniques, or the examination of ray-traced shadows," says Laura Miele, chief studio officer for EA. "More generally, we're seeing the GPU be able to power machine learning for all sorts of really interesting advancements in the gameplay and other tools." Above all, Miele adds, it's the speed of everything that will define the next crop of consoles. "We're stepping into the generation of immediacy. In mobile games, we expect a game to download in moments and to be just a few taps from jumping right in. Now we're able to tackle that in a big way."

That's a relief. I totally forgot about this.

You worry a bit too much. Sony hasn't talked about everything.

I don't know if you're joking but when you have a basic understanding of how patents work, you realise that MS patents definitely aren't filed on a basis of "will Sony use it on PS5 or not".

I feel a bit silly saying this but a patent also prohibits others from making AND selling the said invention. It is exceedingly common practice and has no downsides other than public disclosure.

Exactly. There are still some who think that the only thing the next-gen console SSDs will do is allow them to load into GTA5 in 10 seconds instead of 65 seconds. They couldn't be more wrong. Current-gen games are entirely designed around the slow transfer speeds of mechanical hard drives. For developers to go from making their games for 30 MB/s to 2,400+ MB/s will be mind-boggling. Faster loading screens will make up around 5% of the benefits from that speed. Screw that, we might not even have loading screens. Entire open-world games will be loaded in the time it takes to click Start and for the game screen to flash black. It kind of feels frustrating that some people just don't get how much of a leap this is.
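To put rough numbers on that gap, here is a back-of-the-envelope sketch. It reads the two figures as sustained throughput of roughly 30 and 2,400 megabytes per second; the 5 GB working set and the 30 fps target are made-up illustration values, and real games are limited by seeks, decompression, and CPU work as well as raw throughput.

```python
def seconds_to_load(size_gb: float, throughput_mb_per_s: float) -> float:
    """Time to read `size_gb` gigabytes at a sustained throughput (decimal units)."""
    return size_gb * 1000 / throughput_mb_per_s


def mb_per_frame(throughput_mb_per_s: float, fps: int = 30) -> float:
    """How much new data can arrive during a single rendered frame."""
    return throughput_mb_per_s / fps


HDD_MB_S = 30.0     # assumed effective hard-drive rate
SSD_MB_S = 2400.0   # assumed raw SSD rate

working_set_gb = 5.0  # hypothetical amount of data needed for a scene
hdd_seconds = seconds_to_load(working_set_gb, HDD_MB_S)
ssd_seconds = seconds_to_load(working_set_gb, SSD_MB_S)

hdd_budget = mb_per_frame(HDD_MB_S)  # MB of fresh data per frame at 30 fps
ssd_budget = mb_per_frame(SSD_MB_S)
```

Under these assumptions the same scene goes from minutes to a couple of seconds, and the per-frame streaming budget grows from about 1 MB to about 80 MB, which is the part that changes how games can be designed rather than just how long you wait.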

Surely, gamers and devs care more about VRS and Machine Learning than audio.

I think it's safe to say that development teams on games typically spend far more time working with audio than they do any one graphical optimization or Machine Learning. To me, it's not surprising that this was a big part of the talk. VRS is just one technique among many for improving efficiency, and it's no silver bullet, just a nice tool to have which isn't likely to be a major differentiator if it really is baked into the same architecture both have inherited. Cerny's talk was focused primarily on differentiators, areas where they've done something they view as unique rather than an area that everyone is invested in.

I expect it to contribute to diversity in the gaming experience in a lot of ways:

- Constantly bringing in animation variations so we don't see the same reactions in physical combat over and over.

- Having a wider range of possible reactions to events, from spoken lines of commentary in sports games that more immediately track the on-screen action, to sound effects appropriate for interactions between different materials (it's already common that footsteps sound different on grass versus in water, but swords should sound unique when striking different materials, gunfire should make different impact noises, and countless other examples of what movie folks lovingly craft as foley to match onscreen activity.)

- Building interiors can be far more diverse, whether arriving immediately from outside through a door or window, or merely walking from room to room. Having detailed textures available in a fraction of a second for a new environment enables a constantly evolving experience that doesn't need careful level design to enable.

- Science fiction and fantasy tropes such as teleportation and stepping through portals to other worlds can be gameplay activities instead of cut scenes.

- Storytelling in general should be much more seamless, with transitions between chapters happening without being obvious - and without burning out teams trying to figure out how to make it happen.

That's just offhand. I'm sure we'll see a lot of other creative uses that haven't immediately occurred to me.

This is what I figured, but it's nice to see someone more technical than myself validating those assumptions. I'd love to see more varied characters/NPCs in a single scene become a thing as well.

Agreed! Of course there are always limits to what a team can afford to produce in the way of art assets, but I'm looking forward to some really interesting changes in game design. SSDs will make a huge difference to what you might see around the next corner, but it's an increase in available RAM that's necessary to show more varied models and textures on the screen at the same time. I am hopeful that we'll see a pretty hefty increase here, in part by being able to discard parts of the operating system experience that aren't necessary in-game and vice versa, rapidly reloading what's needed at any time. I could see winding up with about 2-1/2 times as much memory available to developers. This is further magnified by not having to keep everything you need for the next 30 seconds of gameplay around, so it could in practice stretch to acting like 3+ times as much available memory.

Lots of speculation there, of course. I look forward to seeing what teams actually do with it. I would imagine we're not really prepared for the impact it can all have in the right hands.

I wonder if, with the new consoles, which to me are guaranteed to break many limitations that devs have had until this point, new genres that we haven't previously imagined will be created. This gen the battle royale genre was created, for instance (not a big fan of it, but still), which would not have been possible on previous console hardware. Really excited to see what developers can deliver with these monsters as the baseline!

The appeal of BR games to publishers is that they can be made to run on everything: consoles, PCs, phones, tablets, etc. That's why Fortnite and Apex look how they look. If you make a BR game that can only run on PC or next gen, you're limiting your audience.

Agreed. I pointed to BR as an example as it was at the top of my head for the point I was trying to make. BR games are great for the MP scene, especially with the play-anywhere appeal, but I'm more interested to see significant breakthroughs in the single-player scene too.

The PlayStation 5 GPU Will Be Supported By Better Hardware Solutions, In Depth Analysis Suggests

According to a recent in-depth analysis, the PlayStation 5 may have the weaker GPU of the next-gen consoles, but it will receive better support from the rest of the system. (wccftech.com)

Ugh more like both are Super Saiyan 2. One is Goku and the other Gohan.

Super Saiyan 3 is stronger but slower than 2 ;)

Are you saying that the next Xbox will come with a weird lack of eyebrows as well?

Cites the coreteks video and a no name blog. Nothing to see here.

Is it not possible for a guy on a "no name blog" to make good points? Anything in particular he wrote you disagree with?

Yep.

The no-name blog is just the same things we have been debating here since the specs came out.

Nothing outlandish, to tell the truth.

Here's the full post, it's super detailed.

Analyse This: The Next Gen Consoles (Part 9) [UPDATED]

So, the Xbox Series X is mostly unveiled at this point, with only some questions regarding audio implementation and underlying graphics... (hole-in-my-head.blogspot.com)

He actually covers in detail some information that has been discussed about the XSX's RAM setup over at the XSX architecture OT, and how there may not actually be a RAM bandwidth advantage vs. the PS5, due to the way each system's RAM is set up and the way RAM access works. E.g., based on the memory stack and memory controller setup, it seems that the XSX's GPU RAM likely cannot be accessed at the same time as the slower CPU RAM; instead they take turns, so to speak, and as a result the average bandwidth ends up taking a hit.

Era discussion about it starting here.

Xbox Series X: A Closer Look at the Technology Powering the Next Generation

Microsoft did not address this by splitting theirs, because the CPU and GPU still use the same bus, so similar issues of potential contention arise. Microsoft split their ram this time around not for any efficiency savings (if anything it's less efficient as there's now split speeds and more...

Ah, thank you for the clarification.

And here's this guy's segment about it in the write-up. His conclusion is that the PS5 actually potentially has the RAM bandwidth advantage. Hopefully we'll learn more soon.

-----

"Microsoft is touting the 10 GB @ 560 GB/s and 6 GB @ 336 GB/s asymmetric configuration as a bonus but it's sort-of not. We've had this specific situation at least once before in the form of the NVidia GTX 650 Ti and a similar situation in the form of the 660 Ti. Both of those cards suffered from an asymmetrical configuration, affecting memory once the "symmetrical" portion of the interface was "full".

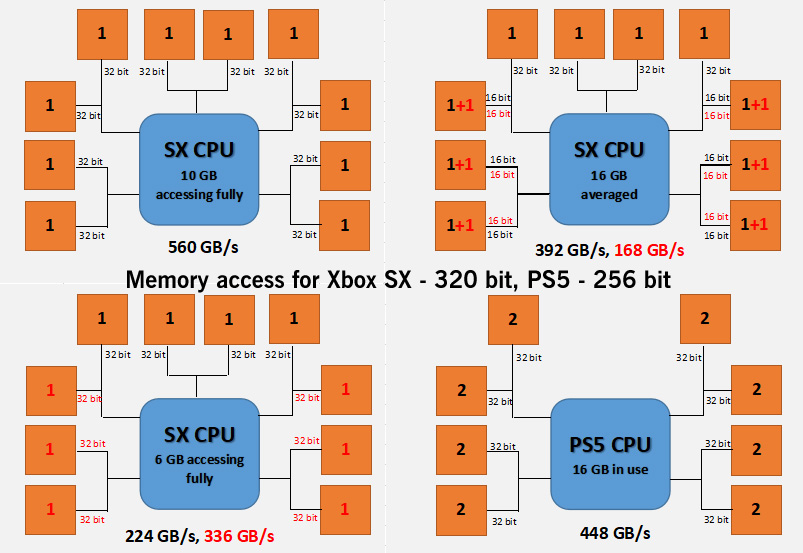

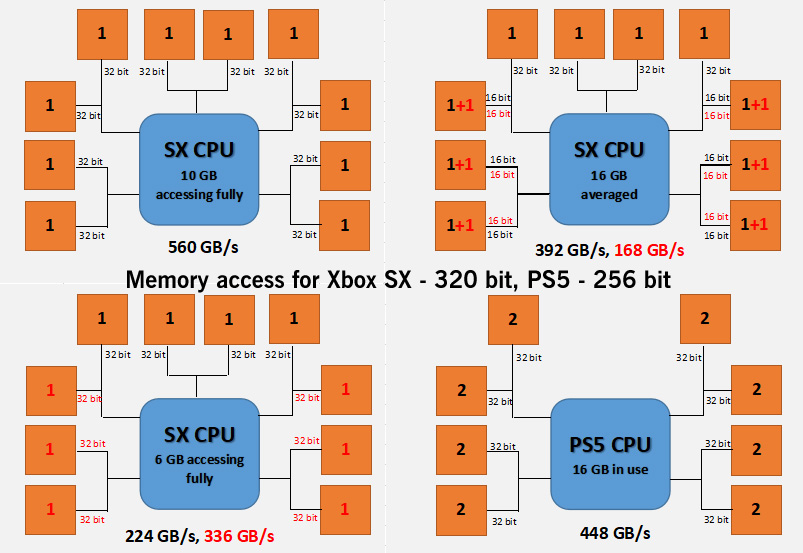

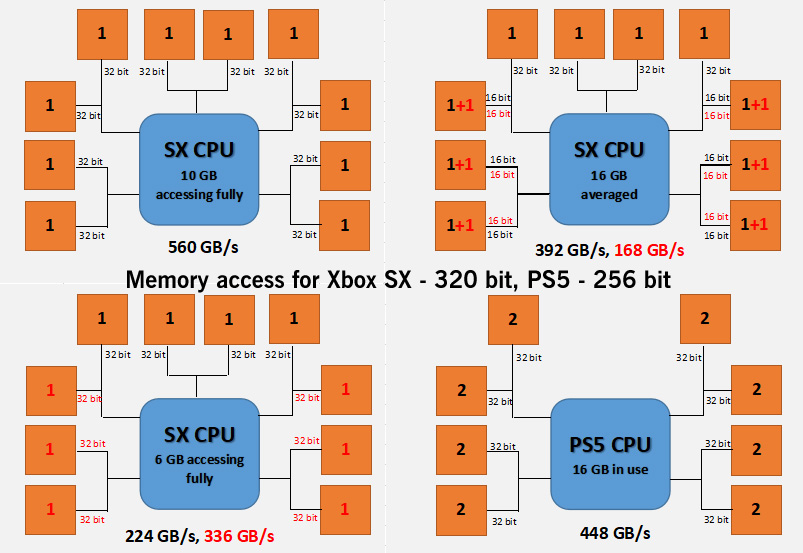

Interleaved memory configurations for the SX's asymmetric memory configuration, an averaged value and the PS5's symmetric memory configuration... You can see that, overall, the PS5 has the edge in pure, consistent throughput...

Now, you may be asking what I mean by "full". Well, it comes down to two things: first is that, unlike some commentators might believe, the maximum bandwidth of the interface is limited to the 320-bit controllers and the matching 10 chips x 32 bit/pin x 14 GHz/Gbps interface of the GDDR6 memory.

That means that the maximum theoretical bandwidth is 560 GB/s, not 896 GB/s (560 + 336). Secondly, memory has to be interleaved in order to function on a given clock timing to improve the parallelism of the configuration. Interleaving is why you don't get a single 16 GB RAM chip, instead we get multiple 1 GB or 2 GB chips because it's vastly more efficient. HBM is a different story because the dies are parallel with multiple channels per pin and multiple frequencies are possible to be run across each chip in a stack, unlike DDR/GDDR which has to have all chips run at the same frequency.

However, what this means is that you need to have address space symmetry in order have interleaving of the RAM, i.e. you need to have all your chips presenting the same "capacity" of memory in order for it to work. Looking at the diagram above, you can see the SX's configuration, the first 1 GB of each RAM chip is interleaved across the entire 320-bit memory interface, giving rise to 10 GB operating with a bandwidth of 560 GB/s but what about the other 6 GB of RAM?

Those two banks of three chips either side of the processor house 2 GB per chip. How does that extra 1 GB get accessed? It can't be accessed at the same time as the first 1 GB because the memory interface is saturated. What happens, instead, is that the memory controller must instead "switch" to the interleaved addressable space covered by those 6x 1 GB portions. This means that, for the 6 GB "slower" memory (in reality, it's not slower but less wide) the memory interface must address that on a separate clock cycle if it wants to be accessed at the full width of the available bus.

The fallout of this can be quite complicated depending on how Microsoft have worked out their memory bus architecture. It could be a complete "switch" whereby on one clock cycle the memory interface uses the interleaved 10 GB portion and on the following clock cycle it accesses the 6 GB portion. This implementation would have the effect of averaging the effective bandwidth for all the memory. If you average this access, you get 392 GB/s for the 10 GB portion and 168 GB/s for the 6 GB portion for a given time frame but individual cycles would be counted at their full bandwidth.

However, there is another scenario with memory being assigned to each portion based on availability. In this configuration, the memory bandwidth (and access) is dependent on how much RAM is in use. Below 10 GB, the RAM will always operate at 560 GB/s. Above 10 GB utilisation, the memory interface must start switching or splitting the access to the memory portions. I don't know if it's technically possible to actually access two different interleaved portions of memory simultaneously by using the two 16-bit channels of the GDDR6 chip but if it were (and the standard appears to allow for it), you'd end up with the same memory bandwidths as the "averaged" scenario mentioned above.

If Microsoft were able to simultaneously access and decouple individual chips from the interleaved portions of memory through their memory controller then you could theoretically push the access to an asymmetric balance, being able to switch between a pure 560 GB/s for 10 GB RAM and a mixed 224 GB/s from 4 GB of that same portion and the full 336 GB/s of the 6 GB portion (also pictured above). This seems unlikely to my understanding of how things work and undesirable from a technical standpoint in terms of game memory access and also architecture design.

In comparison, the PS5 has a static 448 GB/s bandwidth for the entire 16 GB of GDDR6 (also operating at 14 GHz, across a 256-bit interface). Yes, the SX has 2.5 GB reserved for system functions and we don't know how much the PS5 reserves for that similar functionality but it doesn't matter - the Xbox SX either has only 7.5 GB of interleaved memory operating at 560 GB/s for game utilisation before it has to start "lowering" the effective bandwidth of the memory below that of the PS5... or the SX has an averaged mixed memory bandwidth that is always below that of the baseline PS5. Either option puts the SX at a disadvantage to the PS5 for more memory intensive games and the latter puts it at a disadvantage all of the time."
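The headline figures in the quoted write-up all fall out of one formula: bus width in bits times the per-pin data rate, divided by eight. Here is a minimal sketch of that arithmetic, plus a toy lookup for which region an address lands in. The linear layout (wide region first, narrower region after it) and every name in the code are assumptions for illustration; the real physical address map has not been published.

```python
GIB = 1024 ** 3  # the post's "GB" capacities are treated as GiB here


def peak_bandwidth_gb_s(chips: int, bits_per_chip: int = 32,
                        gbps_per_pin: float = 14.0) -> float:
    """Peak bandwidth in GB/s = bus width (bits) * per-pin rate (Gb/s) / 8."""
    return chips * bits_per_chip * gbps_per_pin / 8


# Hypothetical layout: (label, start address, end address, chips striped across)
REGIONS = [
    ("10 GB region across 10 chips", 0, 10 * GIB, 10),
    ("6 GB region across 6 chips", 10 * GIB, 16 * GIB, 6),
]


def region_for(address: int):
    """Return (label, peak GB/s) for the region containing `address`."""
    for label, start, end, chips in REGIONS:
        if start <= address < end:
            return label, peak_bandwidth_gb_s(chips)
    raise ValueError("address outside the 16 GB pool")


series_x_wide = peak_bandwidth_gb_s(10)    # 320-bit interface
series_x_narrow = peak_bandwidth_gb_s(6)   # 192 bits' worth of chips
ps5 = peak_bandwidth_gb_s(8)               # 256-bit interface
```

Both Series X figures describe the same physical interface used at different widths, so they are alternatives rather than something you can add together, which is the point the post makes about 560 GB/s versus 896 GB/s.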

Were people thinking the XSX had two memory buses, one each for the GPU and one for the CPU?

Do we need to set up a blog or youtube channel for Lady Gaia so people can just repost her content endlessly?

I think so, or just didn't understand that fast ram bandwidth is attained through parallelism, which the XSX's ram stack set up impacts.

I think in real world terms, as this author implies, the PS5 may actually end up having the average bandwidth advantage, and much more so if games end up using or needing more than 10GB for GPU/graphics based stuff.

Obviously Sony can't really explain all the above in a sexy and concise way for laymen gamers, thus PR wise it looks like the PS5 is at a ram disadvantage, which isn't actually the case.

It reads like a lot of unfounded assumptions to me that likely have little foundation in reality. If you can't randomly switch between accessing the fast 10GB and the remaining 6GB on a cycle-by-cycle basis someone made a horrific mistake in judgement and I can't imagine that's the case. I fully expect the Series X to have higher average bandwidth to memory as a result of the wider bus. Why else would they design something this unusual? As I've laid out in my prior posts, though, it's not without compromise and will likely not result in dramatic advantages but rather a modest average advantage in line with its GPU computational targets. I expect it's typically something around the 16-18% range rather than the showy 25% advantage when accessing nothing but the first 10GB of RAM.

There's nothing tricky about accessing the narrower part of memory that the memory controller can't readily abstract away. Examining a single address bit is all it takes to distinguish the wider requests from the narrower. Absolute worst case, there's an additional cycle of latency introduced in memory access as a result, but I'm not expecting that, either.
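One way to see how a 25% peak advantage could shrink to something in the 16-18% range is a simple time-weighted model. This is an illustrative assumption, not a description of the actual memory controller: it supposes the bus serves one region at a time, so the time to move a given amount of data is the sum of the time spent in each region. The `slow_fraction` parameter (share of bytes that come from the narrower 6 GB region) is a made-up knob.

```python
def effective_bandwidth(slow_fraction: float, fast: float = 560.0,
                        slow: float = 336.0) -> float:
    """Average GB/s when `slow_fraction` of all bytes come from the narrow region.

    Time per GB is a weighted sum of the per-region times, so the result
    is a weighted harmonic mean of the two peak rates.
    """
    time_per_gb = (1.0 - slow_fraction) / fast + slow_fraction / slow
    return 1.0 / time_per_gb


def advantage_over(baseline: float, bandwidth: float) -> float:
    """Relative advantage, e.g. 0.25 means 25% more bandwidth than baseline."""
    return bandwidth / baseline - 1.0


PS5_GB_S = 448.0

all_fast = advantage_over(PS5_GB_S, effective_bandwidth(0.0))   # nothing from the slow region
ten_pct = advantage_over(PS5_GB_S, effective_bandwidth(0.10))   # 10% of bytes from it
all_slow = advantage_over(PS5_GB_S, effective_bandwidth(1.0))   # everything from it
```

In this model, sending about a tenth of the traffic to the narrower region gives roughly 525 GB/s, a little over 17% above 448 GB/s, and the average only drops below the PS5 figure if well over a third of all traffic hits the slow region.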

There will be an advantage, just not for the whole RAM pool, but you generally don't need the whole pool to be as fast as possible, and cases where the asymmetric config of XSX will actually result in lower effective bandwidth than that of PS5 will likely be nearly non-existent.

I'd assume, based on usual pricing for these types of things, that 2x2GB GDDR6 chips would be cheaper than 4x1GB, so if Microsoft's setup actually did end up with a lower overall bandwidth for more cost then something must have gone seriously wrong in the design process.

I'd agree with the argument that the SX's memory setup is definitely a compromise based on the fact that ten 2GB GDDR6 modules would simply have been too cost-prohibitive for their target pricing.

There's nothing tricky about accessing the narrower part of memory that the memory controller can't readily abstract away. Examining a single address bit is all it takes to distinguish the wider requests from the narrower. Absolute worst case, there's an additional cycle of latency introduced in memory access as a result, but I'm not expecting that, either.
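To put rough numbers on that expectation, here's a back-of-the-envelope sketch. It assumes a simple model that is mine, not anything Microsoft has confirmed: accesses to the two regions are serialized on a shared bus, so the average bandwidth is the time-weighted (harmonic) mix of 560 GB/s and 336 GB/s. The function names and the `slow_fraction` parameter are hypothetical.

```python
FAST_GB_S = 560.0   # XSX 10GB region, from the published specs
SLOW_GB_S = 336.0   # XSX 6GB region, from the published specs
PS5_GB_S = 448.0    # PS5 unified pool, from the published specs


def effective_bandwidth(slow_fraction: float) -> float:
    """Average GB/s when `slow_fraction` of all bytes come from the slow region.

    Assumption: fast and slow accesses take turns on the bus, so the
    time to move one GB is the weighted sum of the per-region times.
    """
    if not 0.0 <= slow_fraction <= 1.0:
        raise ValueError("slow_fraction must be between 0 and 1")
    seconds_per_gb = (1.0 - slow_fraction) / FAST_GB_S + slow_fraction / SLOW_GB_S
    return 1.0 / seconds_per_gb


def advantage_over_ps5(slow_fraction: float) -> float:
    """Relative bandwidth advantage over PS5 (0.25 means 25%)."""
    return effective_bandwidth(slow_fraction) / PS5_GB_S - 1.0


if __name__ == "__main__":
    for fraction in (0.0, 0.05, 0.10, 0.15):
        print(f"{fraction:.0%} slow traffic: "
              f"{effective_bandwidth(fraction):.0f} GB/s, "
              f"{advantage_over_ps5(fraction):+.1%} vs PS5")
```

Under this model, sending about 10% of the traffic to the slow region gives 525 GB/s on average, roughly a 17% advantage, and the full 25% only appears when nothing touches the slow region at all.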

If you're referring to my comment, that's true. But given that SX has 10 ram modules and that they didn't go for 8x2GB + 2x1GB, it seems that cost was a/the deciding factor. I would have loved to see a full 20GB of GDDR6 on a 320bit bus though. Their 16GB layout is a compromise based on cost considerations, methinks.

10 would be for 20GB of RAM. They'd only need 8 to match the current 16GB.

Is it not possible for a guy on a "no name blog" to make good points? Anything in particular he wrote you disagree with?

Of course it's possible. I'm simply pointing out there's a reason in this case.

The difference is that the XSX pool is actively managed to avoid that problem.

Here's the full post, it's super detailed.

Analyse This: The Next Gen Consoles (Part 9) [UPDATED]

So, the Xbox Series X is mostly unveiled at this point, with only some questions regarding audio implementation and underlying graphics...

hole-in-my-head.blogspot.com

He actually covers in detail some information that has been discussed about the XSX's RAM setup over at the XSX architecture OT, and how there may not actually be a RAM bandwidth advantage vs the PS5, due to the way each system's RAM is set up and the way RAM access works. E.g. it seems, based on the memory stack and memory controller setup, that the XSX's GPU RAM likely cannot be accessed at the same time as the slower CPU RAM. Instead they take turns, so to speak, and as a result the average bandwidth ends up taking a hit.

Era discussion about it starting here.

Xbox Series X: A Closer Look at the Technology Powering the Next Generation

Microsoft did not address this by splitting theirs, because the CPU and GPU still use the same bus, so similar issues of potential contention arise. Microsoft split their ram this time around not for any efficiency savings (if anything it's less efficient as there's now split speeds and more...

www.resetera.com

Xbox Series X: A Closer Look at the Technology Powering the Next Generation

Ah, thank you for the clarification.

www.resetera.com

And here's this guy's segment about it in the write up. His conclusion is that the PS5 actually potentially has the RAM bandwidth advantage. Hopefully we'll learn more soon.

-----

"Microsoft is touting the 10 GB @ 560 GB/s and 6 GB @ 336 GB/s asymmetric configuration as a bonus but it's sort-of not. We've had this specific situation at least once before in the form of the NVidia GTX 650 Ti and a similar situation in the form of the 660 Ti. Both of those cards suffered from an asymmetrical configuration, affecting memory once the "symmetrical" portion of the interface was "full".

Interleaved memory configurations for the SX's asymmetric memory configuration, an averaged value and the PS5's symmetric memory configuration... You can see that, overall, the PS5 has the edge in pure, consistent throughput...

Now, you may be asking what I mean by "full". Well, it comes down to two things: first is that, unlike some commentators might believe, the maximum bandwidth of the interface is limited to the 320-bit controllers and the matching 10 chips x 32 bit/pin x 14 GHz/Gbps interface of the GDDR6 memory.

That means that the maximum theoretical bandwidth is 560 GB/s, not 896 GB/s (560 + 336). Secondly, memory has to be interleaved in order to function on a given clock timing to improve the parallelism of the configuration. Interleaving is why you don't get a single 16 GB RAM chip, instead we get multiple 1 GB or 2 GB chips because it's vastly more efficient. HBM is a different story because the dies are parallel with multiple channels per pin and multiple frequencies are possible to be run across each chip in a stack, unlike DDR/GDDR which has to have all chips run at the same frequency.

However, what this means is that you need to have address space symmetry in order to have interleaving of the RAM, i.e. you need to have all your chips presenting the same "capacity" of memory in order for it to work. Looking at the diagram above, you can see the SX's configuration: the first 1 GB of each RAM chip is interleaved across the entire 320-bit memory interface, giving rise to 10 GB operating with a bandwidth of 560 GB/s. But what about the other 6 GB of RAM?

Those two banks of three chips either side of the processor house 2 GB per chip. How does that extra 1 GB get accessed? It can't be accessed at the same time as the first 1 GB because the memory interface is saturated. What happens, instead, is that the memory controller must instead "switch" to the interleaved addressable space covered by those 6x 1 GB portions. This means that, for the 6 GB "slower" memory (in reality, it's not slower but less wide) the memory interface must address that on a separate clock cycle if it wants to be accessed at the full width of the available bus.

The fallout of this can be quite complicated depending on how Microsoft have worked out their memory bus architecture. It could be a complete "switch" whereby on one clock cycle the memory interface uses the interleaved 10 GB portion and on the following clock cycle it accesses the 6 GB portion. This implementation would have the effect of averaging the effective bandwidth for all the memory. If you average this access, you get 392 GB/s for the 10 GB portion and 168 GB/s for the 6 GB portion for a given time frame but individual cycles would be counted at their full bandwidth.

However, there is another scenario with memory being assigned to each portion based on availability. In this configuration, the memory bandwidth (and access) is dependent on how much RAM is in use. Below 10 GB, the RAM will always operate at 560 GB/s. Above 10 GB utilisation, the memory interface must start switching or splitting the access to the memory portions. I don't know if it's technically possible to actually access two different interleaved portions of memory simultaneously by using the two 16-bit channels of the GDDR6 chip but if it were (and the standard appears to allow for it), you'd end up with the same memory bandwidths as the "averaged" scenario mentioned above.

If Microsoft were able to simultaneously access and decouple individual chips from the interleaved portions of memory through their memory controller then you could theoretically push the access to an asymmetric balance, being able to switch between a pure 560 GB/s for 10 GB RAM and a mixed 224 GB/s from 4 GB of that same portion and the full 336 GB/s of the 6 GB portion (also pictured above). This seems unlikely to my understanding of how things work and undesirable from a technical standpoint in terms of game memory access and also architecture design.

In comparison, the PS5 has a static 448 GB/s bandwidth for the entire 16 GB of GDDR6 (also operating at 14 GHz, across a 256-bit interface). Yes, the SX has 2.5 GB reserved for system functions and we don't know how much the PS5 reserves for that similar functionality but it doesn't matter - the Xbox SX either has only 7.5 GB of interleaved memory operating at 560 GB/s for game utilisation before it has to start "lowering" the effective bandwidth of the memory below that of the PS5... or the SX has an averaged mixed memory bandwidth that is always below that of the baseline PS5. Either option puts the SX at a disadvantage to the PS5 for more memory intensive games and the latter puts it at a disadvantage all of the time."
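For anyone who wants to check the headline figures in that segment, here's a minimal sketch of the peak-bandwidth arithmetic. It only uses the numbers quoted above (32-bit interface per GDDR6 chip, 14 Gbps per pin). The function name is hypothetical, and the 8-chip count for PS5 is my inference from a 256-bit bus divided by 32 bits per chip.

```python
def peak_bandwidth_gb_s(chips: int,
                        bits_per_chip: int = 32,
                        gbps_per_pin: float = 14.0) -> float:
    """Theoretical peak bandwidth in GB/s for `chips` GDDR6 chips in parallel."""
    bus_width_bits = chips * bits_per_chip
    # Gbit/s across the whole bus, divided by 8 to get GB/s.
    return bus_width_bits * gbps_per_pin / 8


xsx_fast = peak_bandwidth_gb_s(10)  # all ten chips, 320-bit
xsx_slow = peak_bandwidth_gb_s(6)   # only the six 2GB chips, 192-bit
ps5 = peak_bandwidth_gb_s(8)        # assumed eight chips, 256-bit

if __name__ == "__main__":
    print(f"XSX 10GB region: {xsx_fast:.0f} GB/s")
    print(f"XSX 6GB region:  {xsx_slow:.0f} GB/s")
    print(f"PS5 16GB pool:   {ps5:.0f} GB/s")
```

The 560 and 336 figures are not additive because the six chips serving the second region are the same chips, on the same pins, that serve part of the first.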

That'll be when they announce the PS5 Pro launching this holiday along with the base model ;)

Maybe there's a Sony-style 8/20GBs of RAM bombshell incoming.

We can dream.

And 720p because 4K is exactly x9 720p, so they can integer scale it using 3x3 pixels instead of 2x2 when scaling 1080p.

Paradoxically, while one of the main benefits of 4k and other 4-multiples of 1080p is perfect integer scaling, very few monitors and televisions do this.

Since my words don't good, here's a link:

https://tanalin.com/en/articles/integer-scaling/
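Here's a small sketch of what integer scaling means in practice: every source pixel becomes an exact NxN block, with no blending. This is an illustration only, not how any particular TV or GPU scaler is implemented, and both function names are made up for the example.

```python
from typing import List, Optional, Tuple

Resolution = Tuple[int, int]  # (width, height)


def integer_scale_factor(src: Resolution, dst: Resolution) -> Optional[int]:
    """Return N if dst is exactly N times src on both axes, else None."""
    src_w, src_h = src
    dst_w, dst_h = dst
    if dst_w % src_w or dst_h % src_h:
        return None
    factor_x, factor_y = dst_w // src_w, dst_h // src_h
    return factor_x if factor_x == factor_y else None


def upscale_nearest(pixels: List[List[int]], factor: int) -> List[List[int]]:
    """Turn every pixel into a factor x factor block of identical pixels."""
    scaled = []
    for row in pixels:
        wide_row = [value for value in row for _ in range(factor)]
        scaled.extend(list(wide_row) for _ in range(factor))
    return scaled


if __name__ == "__main__":
    uhd = (3840, 2160)
    print(integer_scale_factor((1280, 720), uhd))   # 720p to 4K
    print(integer_scale_factor((1920, 1080), uhd))  # 1080p to 4K
    print(integer_scale_factor((2560, 1440), uhd))  # 1440p to 4K
    print(upscale_nearest([[1, 2]], 2))
```

720p maps to 4K with 3x3 blocks and 1080p with 2x2 blocks, while 1440p has no integer factor (3840 / 2560 is 1.5), so it always needs some form of interpolation.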

Doom Eternal seems to load much faster on SSD, and this time there is a difference between NVMe SSD and SATA SSD, which is probably a window to the future.

SATA SSD is 10 seconds.

If all games are optimized like this, load times of less than 1 second look possible on PS5.

The difference is even greater than that, because the SATA HDD in this test is a 7200RPM drive and both use a 7700K CPU, a CPU as powerful as the XSX/PS5 CPU, while PS4 and X1 have a Jaguar. In other words, if Doom had run at the same settings as this test on PS4, it would have loaded a lot slower than 44 seconds.

PS5's SSD doesn't use 12 lanes, it uses 12 channels. PS5 is still using a 4 lane PCIe 4.0 connection between the SSD and the APU.

Well.. the CPUs in the consoles are PCIe gen 4 vs the PCIe 12 lane gen 3 in that laptop chip.

But yh, they aren't bad. I still think they are even too much.
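For a sense of scale on the lanes-versus-generation point, here's a rough sketch of theoretical PCIe link throughput. It uses the per-lane transfer rates from the PCIe specs (8 GT/s for gen 3, 16 GT/s for gen 4, both with 128b/130b encoding) and ignores packet and protocol overhead, so real-world figures will be lower. The function name is hypothetical.

```python
# Per-lane raw transfer rate in GT/s, from the PCIe 3.0 and 4.0 specifications.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}

# Both generations use 128b/130b line encoding: 128 payload bits per 130 sent.
ENCODING_EFFICIENCY = 128 / 130


def pcie_link_gb_s(generation: int, lanes: int) -> float:
    """Theoretical one-direction throughput in GB/s, before protocol overhead."""
    per_lane_gbit_s = TRANSFER_RATE_GT_S[generation] * ENCODING_EFFICIENCY
    return per_lane_gbit_s / 8 * lanes


if __name__ == "__main__":
    print(f"PCIe 4.0 x4:  {pcie_link_gb_s(4, 4):.2f} GB/s")
    print(f"PCIe 3.0 x12: {pcie_link_gb_s(3, 12):.2f} GB/s")
```

A gen 4 x4 link tops out a bit under 8 GB/s, and each gen 4 lane carries twice what a gen 3 lane does, so lane count alone doesn't tell you much without the generation.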

CTRL-F "cache"

No mention of cache at all in that memory explanation? Odd, because it's instrumental. The 6GB is likely to be used mainly by the CPU. Cache is extremely effective at CPU workloads and it will keep the CPU from having to access the slower pool often, thus preserving the bandwidth of the faster pool for the GPU.

Microsoft wouldn't have implemented the memory in this way if there was not an advantage to doing so.

I'd agree with the argument that the SX's memory setup is definitely a compromise based on the fact that ten 2GB GDDR6 modules would simply have been too cost-prohibitive for their target pricing.

It's not just that, though. Even when accessing "fast" memory the CPU and I/O can only access the memory bus at 192-bits, so there's more to this choice.

Also, as soon as I saw the "...limited to 7.5GB of fast RAM..." pull I knew the source's analysis was crap. How can any of you have read that and not had your bullshit meters tripped immediately? Are you that desperate for a hero that you're willing to look past such a glaring error? You don't start filling memory at the beginning of fast memory and then overflow into the slow memory when you max that out. You put things where they need to go. The things that don't require high-bandwidth, like the system reservation, go in the slow pool. The things that need high bandwidth go in the fast pool. Each gets filled up independently.
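To illustrate the "put things where they need to go" point, here's a toy sketch of placement by bandwidth need rather than fill order. It is not how the Xbox OS or any real allocator works. The `Pool` class, the `place` function and the example allocation sizes are all made up, apart from the 10GB/6GB split and the 2.5GB system reservation mentioned in the thread.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Pool:
    name: str
    capacity_gb: float
    used_gb: float = 0.0

    def free_gb(self) -> float:
        return self.capacity_gb - self.used_gb


# (label, size in GB, needs high bandwidth?)
Allocation = Tuple[str, float, bool]


def place(allocations: List[Allocation], fast: Pool, slow: Pool) -> Dict[str, str]:
    """Place each allocation in its preferred pool, spilling only if it is full."""
    placement = {}
    for label, size_gb, needs_bandwidth in allocations:
        preferred, fallback = (fast, slow) if needs_bandwidth else (slow, fast)
        target = preferred if preferred.free_gb() >= size_gb else fallback
        if target.free_gb() < size_gb:
            raise MemoryError(f"no room for {label}")
        target.used_gb += size_gb
        placement[label] = target.name
    return placement


if __name__ == "__main__":
    fast_pool = Pool("fast", 10.0)
    slow_pool = Pool("slow", 6.0)
    result = place(
        [
            ("system reservation", 2.5, False),
            ("textures and render targets", 9.0, True),   # hypothetical size
            ("game logic and audio", 3.0, False),         # hypothetical size
        ],
        fast_pool,
        slow_pool,
    )
    print(result)
    print(f"fast free: {fast_pool.free_gb()} GB, slow free: {slow_pool.free_gb()} GB")
```

With these example sizes the system reservation never touches the fast pool, so the game still has the whole 10GB of wide memory available for bandwidth-hungry data.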
