PlayStation 5 System Architecture Deep Dive |OT| Secret Agent Cerny

- Thread starter vestan

- Start date

- Status

- Not open for further replies.

CTRL-F "cache"

No mention of cache at all in that memory explanation? Odd, because it's instrumental. The 6MB is likely to be used mainly by the CPU. Cache is extremely effective for CPU workloads, and it will keep the CPU from having to access the slower pool often, thus preserving the bandwidth of the faster pool for the GPU.

Microsoft wouldn't have implemented the memory in this way if there was not an advantage to doing so.

I very much doubt they've gone with this setup because there was an advantage in doing so. Rather, it's a cost-saving measure that they're making the best of.

A full 20GB of GDDR6 on the 320-bit bus would have been considerably more expensive. They even saved a bit on cost by not going 8x2GB and 2x1GB.

Not every decision gets made with an advantage in mind but rather to save costs.

Edit: beaten

I was talking relative to a more standard interface like Sony's memory setup, not relative to adding another chip.

You have to look at how the two memory pools will actually likely be used before you can analyze it like this. That's missing from this explanation.

Exactly. There are still some who think that the only thing the next gen console SSDs will do is allow them to load into GTA5 in 10 seconds instead of 65 seconds. They couldn't be more wrong. Current gen games are entirely designed around the slow transfer speeds of mechanical hard drives. For developers to go from making their games for 30 MB/s to 2400+ MB/s will be mindboggling. Faster loading screens will make up around 5% of the benefits from that speed. Screw that, we might not even have loading screens. Entire open world games will be loaded in the time it takes to click on Start and for the game screen to flash black. It kind of feels frustrating that some people just don't get how much of a leap this is.

Yeah, I think that this generation will be a far greater leap compared to the current generation than PS4/XOne was to PS3/X360. You can really see that they're trying to introduce something new while the last generation felt more like a resolution/graphics upgrade. I haven't bought a new console since the Dreamcast but I'm seriously considering getting one of these new ones at launch. I'd have to see some games first, though!
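To put rough numbers on the transfer rates quoted in the exchange above, here is a minimal sketch. It reads the two figures as megabytes per second, and the 1200 MB asset size is a made-up value for illustration, not something from the thread. Seek time and decompression are ignored.

```python
# The two rates are the figures quoted in the post, read as MB/s.
HDD_MB_PER_S = 30
SSD_MB_PER_S = 2400


def seconds_to_read(size_mb: float, rate_mb_per_s: float) -> float:
    """Time to stream size_mb at a sustained rate (no seeks, no decompression)."""
    return size_mb / rate_mb_per_s


if __name__ == "__main__":
    size_mb = 1200  # assumed: 1.2 GB of assets for a new area (hypothetical)
    print(f"HDD: {seconds_to_read(size_mb, HDD_MB_PER_S):.1f} s")
    print(f"SSD: {seconds_to_read(size_mb, SSD_MB_PER_S):.1f} s")
```

Under those assumptions the same data takes 40 seconds from the hard drive and half a second from the SSD, which is the kind of gap that lets a designer stop hiding loads behind corridors.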

surely, gamers and devs care more about VRS and Machine Learning than audio.

I think audio is more important than we generally believe it is. Imagine, for example, stuttering audio because developers choose to prioritise frame rates over sound. I'm guessing almost all of us would prefer having inconsistent frame rates.

How people will receive Sony's 3D-audio though is another question. I don't think it will be a game changer but I would have to experience it firsthand to really form an opinion.

I think it's safe to say that development teams on games typically spend far more time working with audio than they do any one graphical optimization or Machine Learning. To me, it's not surprising that this was a big part of the talk. VRS is just one technique among many for improving efficiency, and it's no silver bullet, just a nice tool to have which isn't likely to be a major differentiator if it really is baked into the same architecture both have inherited. Cerny's talk was focused primarily on differentiators, areas where they've done something they view as unique rather than an area that everyone is invested in.

I expect it to contribute to diversity in the gaming experience in a lot of ways:

- Constantly bringing in animation variations so we don't see the same reactions in physical combat over and over.

- Having a wider range of possible reactions to events, from spoken lines of commentary in sports games that more immediately track the on-screen action, to sound effects appropriate for interactions between different materials (it's already common that footsteps sound different on grass versus in water, but swords should sound unique when striking different materials, gunfire should make different impact noises, and countless other examples of what movie folks lovingly craft as foley to match onscreen activity.)

- Building interiors can be far more diverse, whether arriving immediately from outside through a door or window, or merely walking from room to room. Having detailed textures available in a fraction of a second for a new environment enables a constantly evolving experience that doesn't need careful level design to enable.

- Science fiction and fantasy tropes such as teleportation and stepping through portals to other worlds can be gameplay activities instead of cut scenes.

- Storytelling in general should be much more seamless, with transitions between chapters happening without being obvious - and without burning out teams trying to figure out how to make it happen.

That's just offhand. I'm sure we'll see a lot of other creative uses that haven't immediately occurred to me.

Thanks for your input, it's much appreciated!

So, basically, it will affect almost every part of what makes up a game and possibly open up entirely new kinds of gameplay. It's funny to think that you can do all this by "just" increasing the bandwidth between the storage and other parts of the console. I can see why some people are sceptical about what this will mean for game development.

I was also thinking about AI. As far as I know AI is generally CPU intensive but is there any chance that this can be used to have more variety of NPC behaviour?

Something like "pre-baked" AI maybe. The actions of an NPC will be pre-programmed and streamed to RAM when needed, and only when they have to react to a player's action will actual AI be used. Does this even make any sense or do I look like a complete fool now?

PS5's SSD doesn't use 12 lanes, it uses 12 channels. PS5 is still using a 4 lane PCIe 4.0 connection between the SSD and the APU.

I know, we are talking about something different.

I was pointing out that the laptop iteration of this CPU isn't using PCIe 4 but rather PCIe 3, and it has 12 lanes of it, of which 8 lanes are for the discrete GPU in that laptop.
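For anyone who wants to compare the lane counts being discussed, here is a rough sketch of theoretical PCIe link throughput. The per-lane rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and the 128b/130b encoding come from the PCIe specifications; the function name is just for illustration, and real-world throughput is lower once protocol overhead is counted.

```python
# Raw per-lane transfer rates in GT/s for each PCIe generation.
GT_PER_S = {"3.0": 8.0, "4.0": 16.0}
ENCODING = 128 / 130  # both generations use 128b/130b line encoding


def link_gb_per_s(gen: str, lanes: int) -> float:
    """Theoretical one-direction throughput in GB/s, before protocol overhead."""
    return GT_PER_S[gen] * ENCODING / 8 * lanes


if __name__ == "__main__":
    print(f"PCIe 4.0 x4:  {link_gb_per_s('4.0', 4):.2f} GB/s")
    print(f"PCIe 3.0 x4:  {link_gb_per_s('3.0', 4):.2f} GB/s")
    print(f"PCIe 3.0 x12: {link_gb_per_s('3.0', 12):.2f} GB/s")
```

A 4 lane PCIe 4.0 link works out to roughly 7.9 GB/s on paper, twice what the same four lanes give on PCIe 3.0.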

It's not just that, though. Even when accessing "fast" memory the CPU and I/O can only access the memory bus at 192 bits, so there's more to this choice.

Also, as soon as I saw the "...limited to 7.5GB of fast RAM..." pull I knew the source's analysis was crap. How can any of you have read that and not had your bullshit meters tripped immediately? Are you that desperate for a hero that you're willing to look past such a glaring error? You don't start filling memory at the beginning of fast memory and then overflow into the slow memory when you max that out. You put things where they need to go. The things that don't require high-bandwidth, like the system reservation, go in the slow pool. The things that need high bandwidth go in the fast pool. Each gets filled up independently.

It's not so hard to understand. The source states what the poster wants to believe.
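The "put things where they need to go" point can be sketched in a few lines. This is only an illustration: the 10 GB and 6 GB pool sizes and the 2.5 GB system reservation are the figures reported for the Series X elsewhere in the thread, while the `needs_bandwidth` flag, the other allocation names and their sizes are hypothetical. Nothing here reflects an actual console allocator.

```python
from dataclasses import dataclass

# Pool sizes as reported for Xbox Series X elsewhere in the thread.
FAST_GB, SLOW_GB = 10.0, 6.0


@dataclass
class Allocation:
    name: str
    size_gb: float
    needs_bandwidth: bool  # hypothetical flag a developer would set


def place(allocs):
    """Assign each allocation to the pool that matches its needs.

    Each pool is filled independently; nothing spills from fast into slow.
    Raises MemoryError if a pool would be oversubscribed.
    """
    cap = {"fast": FAST_GB, "slow": SLOW_GB}
    used = {"fast": 0.0, "slow": 0.0}
    pools = {"fast": [], "slow": []}
    for a in allocs:
        pool = "fast" if a.needs_bandwidth else "slow"
        if used[pool] + a.size_gb > cap[pool]:
            raise MemoryError(f"{pool} pool cannot fit {a.name}")
        pools[pool].append(a.name)
        used[pool] += a.size_gb
    return pools, used


if __name__ == "__main__":
    demo = [
        Allocation("system reservation", 2.5, False),
        Allocation("render targets", 3.0, True),   # size is made up
        Allocation("textures", 6.0, True),         # size is made up
        Allocation("audio", 1.0, False),           # size is made up
        Allocation("game code", 2.0, False),       # size is made up
    ]
    print(place(demo))
```

The order in which allocations arrive doesn't matter here, which is the whole point: the slow pool is not an overflow area for the fast one.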

Well, kinda depends on what you consider to be proper NPC AI. They usually stick to their schedules, and some of those might be more complex than others. I would dig it if you, I dunno, happened to burn down some villager's house, and the owner swears revenge because his family was in there, trains in the magic arts and tries to organise an uprising to overthrow the clearly way too overpowered player character, heh.

I would like to see a lot of variety in NPC behaviour. It doesn't have to be "true" AI, I'd be content with the illusion of AI. The scenario you describe sounds great! Is something like that possible, and if not, what would it take, I wonder?

Sorry, I misread your post.

Code and data for AI doesn't occupy enough memory that it's likely to be the biggest concern. The animations associated with particular behaviors are a different story, and we could well see some significant benefits by being able to pull in a particular motion matched animation right as it is needed. This is a huge improvement over having to keep every potentially relevant animation in memory all the time to avoid overcommitting an already saturated I/O bus that's busy streaming essential assets. So the SSD should help a lot in this regard.

Frankly, though, I expect the biggest breakthroughs in game AI to come from having significantly more CPU power available to allow a wider range of possible actions to be evaluated.
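As a rough illustration of pulling animations in "right as needed" instead of keeping them all resident, here is a minimal least-recently-used cache sketch. Everything in it is an assumption for illustration: `AnimationCache`, its capacity and the `load_clip` callable (standing in for a fast SSD read) are hypothetical names, not anything described in the talk.

```python
from collections import OrderedDict


class AnimationCache:
    """Keeps only recently used clips resident and loads others on demand."""

    def __init__(self, capacity, load_clip):
        self.capacity = capacity        # max clips resident at once
        self.load_clip = load_clip      # callable standing in for an SSD read
        self.resident = OrderedDict()   # clip name -> clip data, oldest first

    def get(self, name):
        if name in self.resident:
            self.resident.move_to_end(name)    # mark as most recently used
            return self.resident[name]
        clip = self.load_clip(name)            # miss: stream it in now
        self.resident[name] = clip
        if len(self.resident) > self.capacity:
            self.resident.popitem(last=False)  # evict least recently used
        return clip


if __name__ == "__main__":
    cache = AnimationCache(2, lambda n: f"data:{n}")
    for clip in ["stagger_left", "stagger_right", "stagger_left", "fall_back"]:
        cache.get(clip)
    print(list(cache.resident))
```

The trade-off is that every miss costs a read, so this only makes sense when the storage is fast enough that a miss fits inside the time before the animation has to start playing.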

Thanks for all your informative posts, I've learned much. So does this mean we're likely to see a significant improvement in the possibilities of emergent gameplay?

- Storytelling in general should be much more seamless, with transitions between chapters happening without being obvious - and without burning out teams trying to figure out how to make it happen.

One of the things I've realised is how much closer cutscenes and even gameplay will be able to match movies in the sense of cutting back and forth between totally different locations. You could even cut between totally different action scenes while playing. There's a scene in the season 2 finale of Arrow where we cut back and forth between two different Green Arrow vs Deathstroke fights separated by 5 years. Imagine that in a game, switching between playstyles on the fly as the game puts you in the shoes of a hero fighting for his life while also flashing back to the last time he fought his rival. Or we could go between different heroes in different fights happening simultaneously as the tension builds to multiple victories.

Thanks for all your informative posts, I've learned much. So does this mean we're likely to see a significant improvement in the possibilities of emergent gameplay?

That depends entirely on whether a publisher is willing to fund something ambitious in that space, and whether there's a team with clever ideas and the reputation to be worth betting on. I certainly hope that's the case! I'd love to see more of this kind of experience.

I really don't have any insight into specific projects in development. I simply wouldn't feel right asking the folks I know in the industry for any details, and so I'm as much in the dark as the rest of you - except where I can use my technical background to interpret publicly available information.

I don't know anything about game development, but I have this scene in my head every time people talk about the SSD changing how games are made.

Creative Director: Our game will be like that, and the player will be able to do this, and there's this very epic moment when the player can do that and the whole game becomes this.

Technical Director (I don't know if this is a thing): Ehhh, about that... This we can't do, that we can't do on the scale you want. Hmm... This we can do, but we'll need some months to optimise, and we'll need a BIG CORRIDOR before any of this.

CD: Why is that?

TD: *slaps HDD*

So your issue with it is mostly religious? Got it.

Lol, let me elaborate on my claim then!

The video has issues, and other people have already gone over them 😊

Here's the full post, it's super detailed.

Analyse This: The Next Gen Consoles (Part 9) [UPDATED]

So, the Xbox Series X is mostly unveiled at this point, with only some questions regarding audio implementation and underlying graphics... hole-in-my-head.blogspot.com

He actually covers in detail some information that has been discussed about the XSX's RAM setup over at the XSX architecture OT, and how there may not actually be a RAM bandwidth advantage vs the PS5, due to the way each system's RAM is set up and the way RAM access works. E.g. it seems, based on the memory stack and memory controller setup, that the XSX's GPU RAM likely cannot be accessed at the same time as the slower CPU RAM. Instead they take turns, so to speak, and as a result the average bandwidth ends up taking a hit.

Era discussion about it starting here.

Xbox Series X: A Closer Look at the Technology Powering the Next Generation

Microsoft did not address this by splitting theirs, because the CPU and GPU still use the same bus, so similar issues of potential contention arise. Microsoft split their ram this time around not for any efficiency savings (if anything it's less efficient as there's now split speeds and more... www.resetera.com

Xbox Series X: A Closer Look at the Technology Powering the Next Generation

Ah, thank you for the clarification. www.resetera.com

And here's this guy's segment about it in the write-up. His conclusion is that the PS5 actually potentially has the RAM bandwidth advantage. Hopefully we'll learn more soon.

-----

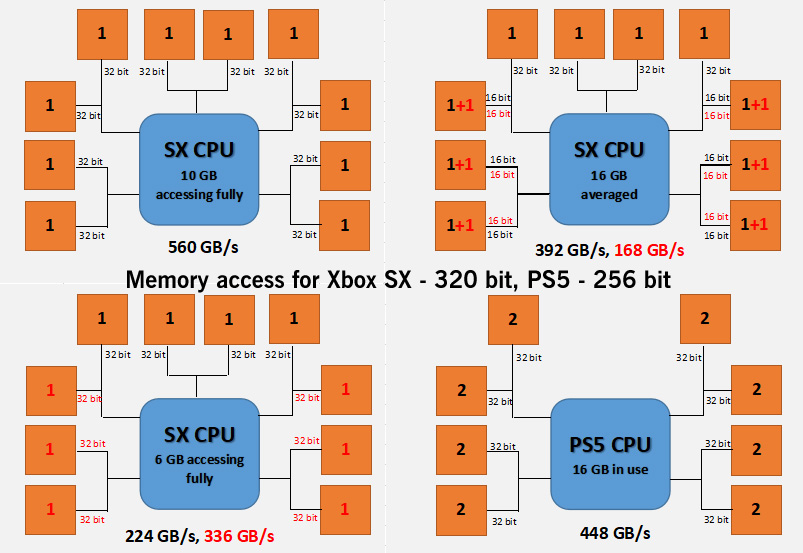

"Microsoft is touting the 10 GB @ 560 GB/s and 6 GB @ 336 GB/s asymmetric configuration as a bonus but it's sort-of not. We've had this specific situation at least once before in the form of the NVidia GTX 650 Ti and a similar situation in the form of the 660 Ti. Both of those cards suffered from an asymmetrical configuration, affecting memory once the "symmetrical" portion of the interface was "full".

Interleaved memory configurations for the SX's asymmetric memory configuration, an averaged value and the PS5's symmetric memory configuration... You can see that, overall, the PS5 has the edge in pure, consistent throughput...

Now, you may be asking what I mean by "full". Well, it comes down to two things: first is that, unlike some commentators might believe, the maximum bandwidth of the interface is limited to the 320-bit controllers and the matching 10 chips x 32 bit/pin x 14 GHz/Gbps interface of the GDDR6 memory.

That means that the maximum theoretical bandwidth is 560 GB/s, not 896 GB/s (560 + 336). Secondly, memory has to be interleaved in order to function on a given clock timing to improve the parallelism of the configuration. Interleaving is why you don't get a single 16 GB RAM chip, instead we get multiple 1 GB or 2 GB chips because it's vastly more efficient. HBM is a different story because the dies are parallel with multiple channels per pin and multiple frequencies are possible to be run across each chip in a stack, unlike DDR/GDDR which has to have all chips run at the same frequency.
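The peak figures in the quoted article follow from simple arithmetic: bus width in bits times the per-pin data rate, divided by 8 bits per byte. A minimal sketch, assuming the 14 Gbps per-pin rate the article gives and treating the 6 GB region as six 32-bit chips (192 bits); the 256-bit case is the PS5 configuration the article cites further on. The function name is only for illustration.

```python
def peak_gb_per_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return bus_width_bits * gbps_per_pin / 8


if __name__ == "__main__":
    print(peak_gb_per_s(320, 14))  # all 10 chips, the 10 GB region
    print(peak_gb_per_s(192, 14))  # 6 chips, the 6 GB region
    print(peak_gb_per_s(256, 14))  # PS5's 256-bit interface
```

The 560 and 336 figures describe the same physical bus at two different widths, which is why they cannot simply be added together.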

However, what this means is that you need to have address space symmetry in order have interleaving of the RAM, i.e. you need to have all your chips presenting the same "capacity" of memory in order for it to work. Looking at the diagram above, you can see the SX's configuration, the first 1 GB of each RAM chip is interleaved across the entire 320-bit memory interface, giving rise to 10 GB operating with a bandwidth of 560 GB/s but what about the other 6 GB of RAM?

Those two banks of three chips either side of the processor house 2 GB per chip. How does that extra 1 GB get accessed? It can't be accessed at the same time as the first 1 GB because the memory interface is saturated. What happens, instead, is that the memory controller must instead "switch" to the interleaved addressable space covered by those 6x 1 GB portions. This means that, for the 6 GB "slower" memory (in reality, it's not slower but less wide) the memory interface must address that on a separate clock cycle if it wants to be accessed at the full width of the available bus.

The fallout of this can be quite complicated depending on how Microsoft have worked out their memory bus architecture. It could be a complete "switch" whereby on one clock cycle the memory interface uses the interleaved 10 GB portion and on the following clock cycle it accesses the 6 GB portion. This implementation would have the effect of averaging the effective bandwidth for all the memory. If you average this access, you get 392 GB/s for the 10 GB portion and 168 GB/s for the 6 GB portion for a given time frame but individual cycles would be counted at their full bandwidth.
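One way to reason about the "switching" scenario is a simple time-sharing model: if some fraction of bus time is spent on the 6 GB region, the average throughput is the weighted mix of the two peak rates. This is only a sketch of that one assumption. It is not the article's own calculation, it does not try to reproduce the per-portion figures quoted above, and how the real memory controller arbitrates is not public.

```python
FAST_GBPS = 560.0  # 10 GB region, full 320-bit width
SLOW_GBPS = 336.0  # 6 GB region, 192-bit width


def effective_bandwidth(slow_fraction: float) -> float:
    """Average GB/s if slow_fraction of bus time goes to the 6 GB region."""
    if not 0.0 <= slow_fraction <= 1.0:
        raise ValueError("slow_fraction must be between 0 and 1")
    return slow_fraction * SLOW_GBPS + (1.0 - slow_fraction) * FAST_GBPS


if __name__ == "__main__":
    for f in (0.0, 0.1, 0.25, 0.5):
        print(f"{f:.2f} of bus time on slow region -> {effective_bandwidth(f):.1f} GB/s")
```

Under this model the average only drops to 448 GB/s if half of all bus time goes to the slower region, so the outcome depends heavily on how often that region is actually touched.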

However, there is another scenario with memory being assigned to each portion based on availability. In this configuration, the memory bandwidth (and access) is dependent on how much RAM is in use. Below 10 GB, the RAM will always operate at 560 GB/s. Above 10 GB utilisation, the memory interface must start switching or splitting the access to the memory portions. I don't know if it's technically possible to actually access two different interleaved portions of memory simultaneously by using the two 16-bit channels of the GDDR6 chip but if it were (and the standard appears to allow for it), you'd end up with the same memory bandwidths as the "averaged" scenario mentioned above.

If Microsoft were able to simultaneously access and decouple individual chips from the interleaved portions of memory through their memory controller then you could theoretically push the access to an asymmetric balance, being able to switch between a pure 560 GB/s for 10 GB RAM and a mixed 224 GB/s from 4 GB of that same portion and the full 336 GB/s of the 6 GB portion (also pictured above). This seems unlikely to my understanding of how things work and undesirable from a technical standpoint in terms of game memory access and also architecture design.

In comparison, the PS5 has a static 448 GB/s bandwidth for the entire 16 GB of GDDR6 (also operating at 14 GHz, across a 256-bit interface). Yes, the SX has 2.5 GB reserved for system functions and we don't know how much the PS5 reserves for that similar functionality but it doesn't matter - the Xbox SX either has only 7.5 GB of interleaved memory operating at 560 GB/s for game utilisation before it has to start "lowering" the effective bandwidth of the memory below that of the PS5... or the SX has an averaged mixed memory bandwidth that is always below that of the baseline PS5. Either option puts the SX at a disadvantage to the PS5 for more memory intensive games and the latter puts it at a disadvantage all of the time."

This is not true. They will put CPU workloads and data that isn't accessed very often in the 6GB pool. The effective bandwidth will be less than the full 560 GB/s, but not dramatically so. It may cause problems in some poorly optimized titles, but devs will master it very quickly.

And why would MS use fast memory for the OS?

The operating system does routine, standardized work on behalf of the applications that run on it. That's pretty much textbook what an operating system is for. So if your game needs to trigger a trophy overlay, or record and broadcast live gameplay, monitor when friends come online, or communicate with the hardware at the driver layer, then having the OS do any of that work using the slow 6GB means that it's doing so more slowly than it could, as well as occupying a disproportionate amount of bandwidth by tying up the data bus.

The only parts of the OS that would have no negative impact by occupying the 6GB portion are the generic system apps, like media playback, browsing the store, and so forth that are only executed when the game is on hold. On a system with a fast SSD, though, it's not clear to me why you'd keep them in memory at all while playing a game.

The video in question isn't as accurate as some of the more technical evaluations, but it's not all that bad, either. I wrote up some of my impressions a few pages back.

Thanks for the explanation. Surprising, but it will help keep the bandwidth higher.

I'm not going to get into this. There's an entire thread dedicated to the video with actual, verified individuals giving their thoughts.

But yes, call me a fanboy. Thanks!

Seems like people have done a pretty good job debunking the video in the other thread.

Cerny talked about the custom SSD having 6 levels of priority. I understand what having levels means and can imagine some use cases where it is very useful (i.e. absolutely necessary), but I am just having a hard time thinking how 6 levels can be used? Why can 6 levels not be 'too much'?

I am just having a hard time thinking how 6 levels can be used? Why can 6 levels not be 'too much'?

When you have too many priority levels you simply don't use some of them. When you have too few, you pull your hair out wondering why someone skimped and made your life difficult. I wouldn't be surprised if the lowest and highest levels were reserved for system use, leaving four for games.
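To make the idea concrete, here is a minimal sketch of a request queue with six priority levels. It is purely illustrative: the talk as described here only says there are six levels, so the `IoQueue` class, the choice of 0 as most urgent and the example requests are all assumptions, and this says nothing about how the actual SSD controller schedules work.

```python
import heapq
import itertools

NUM_LEVELS = 6  # count from the talk; treating 0 as most urgent is an assumption


class IoQueue:
    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker keeps FIFO order within a level

    def submit(self, priority, request):
        if not 0 <= priority < NUM_LEVELS:
            raise ValueError("priority out of range")
        heapq.heappush(self._heap, (priority, next(self._seq), request))

    def next_request(self):
        """Pop the most urgent pending request, or None if the queue is idle."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]


if __name__ == "__main__":
    q = IoQueue()
    q.submit(4, "distant terrain textures")   # hypothetical requests
    q.submit(1, "audio for a gunshot")
    q.submit(4, "far LOD meshes")
    q.submit(0, "system request")
    while (req := q.next_request()) is not None:
        print(req)
```

A game that only cares about "urgent" and "background" can simply use two of the levels and ignore the rest, which is the point made above about unused levels costing nothing.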

Curious what these laptop CPUs mean for the PS5's variable clock/cooling solution. Sounds like they don't throttle much in the laptops, even under sustained load so maybe good news for the PS5's sustained clock speed.

If it's like PC, with games using the clocks they need rather than constantly sitting at max clock no matter what (which is what consoles seem to do?), it should be good for saving power and keeping fan speed/noise down. I keep my rivatuner going all the time out of habit, I like overclocking and undervolting (keeping cool while pushing the limit). At the least my GPU goes up and down in clocks, from when it's bored (stays super low with simple games), or if it's getting a bit hot for its higher clocks (goes to max OC but drops a bit if it's over 60 degrees, darn Pascal GPUs, I miss my AMD Polaris GPU). I'm sure my CPU does it too with boost clock but I only monitor its temperatures, since I don't really care what it does outside of temperatures.

I can see PS5 holding its max clock if developers want it to, unless it's full of dust and is overheating for whatever reason.

Come on random Tuesday! I'd love for something, anything to drop tomorrow :)

The "teardown" video

Nice summary of what we can expect in terms of game design and new ways of creating assets.

After seeing the Marvel's Spiderman GDC talk, I was wondering how long it takes to optimize streaming and every problem around it (compression algorithms, AI, procedural content to lower the impact on data size). How will SSDs impact development times in that regard?

EDIT: One little issue that VRS is going to address is pop-in. It's like AA in the current gen: something we will get rid of.

VRS is not for pop-in. Texture pop-in will be solved in part by fast storage. LOD pop-in can be mitigated by fast storage, but solving it takes a good geometry engine/mesh shading LOD transition system.

VRS helps reduce shading work where it is not needed. For example, why shade at the best quality elements that sit behind depth of field or motion blur or, in VR, away from the center of the screen?
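A toy sketch of that idea: pick a coarser shading rate for screen tiles that will be blurred anyway or, in VR, that sit far from the center of view. The function, its inputs and all thresholds are made up for illustration, and the rate labels are only loosely modeled on the kind of coarse-pixel sizes graphics APIs expose. Real engines drive this from their own heuristics.

```python
def shading_rate(blur_amount: float, dist_from_center: float, vr: bool = False) -> str:
    """Pick a coarse-pixel size for one screen tile.

    blur_amount: 0..1, how strongly depth of field or motion blur smears the tile.
    dist_from_center: 0..1, normalized distance from screen center (VR only).
    Thresholds below are arbitrary illustrative values.
    """
    if blur_amount > 0.7 or (vr and dist_from_center > 0.8):
        return "4x4"  # one shading sample covers a 4x4 pixel block
    if blur_amount > 0.3 or (vr and dist_from_center > 0.5):
        return "2x2"
    return "1x1"      # full rate: every pixel shaded


if __name__ == "__main__":
    print(shading_rate(0.0, 0.0))           # sharp, centered tile
    print(shading_rate(0.5, 0.0))           # moderately blurred tile
    print(shading_rate(0.0, 0.9, vr=True))  # VR periphery
```

None of this touches when assets arrive in memory, which is why it does nothing for pop-in.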

Inside Xbox Series X: the full specs

This is it. After months of teaser trailers, blog posts and even the occasional leak, we can finally reveal firm, hard …

www.eurogamer.net

In terms of how the memory is allocated, games get a total of 13.5GB in total, which encompasses all 10GB of GPU optimal memory and 3.5GB of standard memory. This leaves 2.5GB of GDDR6 memory from the slower pool for the operating system and the front-end shell. From Microsoft's perspective, it is still a unified memory system, even if performance can vary. "In conversations with developers, it's typically easy for games to more than fill up their standard memory quota with CPU, audio data, stack data, and executable data, script data, and developers like such a trade-off when it gives them more potential bandwidth," says Goossen.

It seems the whole OS is in the slower memory, from what they told Digital Foundry.

That's some fine PR wording right there. He talks about the separate pools of RAM as if where the data is is all that matters, whereas what data is being accessed and when is what determines their bandwidth. The focus on where the OS sits is actually irrelevant.

If the RAM that the CPU uses resides in the slower pool, it doesn't matter how much of it is being used; what matters is that it has to be accessed in lockstep with the GPU, as it's the CPU that draws up every frame anyway, along with all the other stuff it does.

And from my understanding of all that has been said here, it's impossible for the slower and faster RAM to be accessed simultaneously.

I'm not that knowledgeable, but I was under the impression that GDDR6's finer granularity is supposed to mitigate the adverse bandwidth hit that comes with the shared memory pool?

There is a cost too but if this is the truth, they took the bandwidth penalty.

That sounds very good, another thing to look forward to :)

Code and data for AI doesn't occupy enough memory that it's likely to be the biggest concern. The animations associated with particular behaviors are a different story, and we could well see some significant benefits by being able to pull in a particular motion matched animation right as it is needed. This is a huge improvement over having to keep every potentially relevant animation in memory all the time to avoid overcommitting an already saturated I/O bus that's busy streaming essential assets. So the SSD should help a lot in this regard.

Frankly, though, I expect the biggest breakthroughs in game AI to come from having significantly more CPU power available to allow a wider range of possible actions to be evaluated.

Which based on the design decisions they made, including always restricting CPU accesses to 192-bit whether they are to slow or fast RAM, tells me that there's something off with the analysis here. If this were legitimately an issue, why compound it by restricting your use of the bus even more than is absolutely necessary?

That's some fine PR wording right there. He talks about the separate pools of RAM as if where the data is is all that matters, whereas what data is being accessed and when is what determines their bandwidth. The focus on where the OS sits is actually irrelevant.

If the RAM that the CPU uses resides in the slower pool, it doesn't matter how much of it is being used; what matters is that it has to be accessed in lockstep with the GPU, as it's the CPU that draws up every frame anyway, along with all the other stuff it does.

No.

This is a cost decision. At the same time, Sony tested many APUs with higher memory bandwidth... In the end, consoles are a compromise. If Xbox Series X had 20 GB of RAM at 560 GB/s it would be better, but not perfect.

Ideally, I think the two consoles would be better off with 16 Gbps GDDR6 modules, for 512 GB/s of bandwidth for Sony and 640 GB/s for Microsoft, but they want to reach a certain MSRP. And only SK Hynix offers 16 Gbps 2 GB modules; at Samsung they are only in the sample phase.
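For anyone who wants to check those figures, here is a rough sketch of the usual peak-bandwidth arithmetic (bus width times per-pin data rate, divided by 8 bits per byte). The 256-bit and 320-bit bus widths are taken from the public spec sheets and are assumptions here rather than something stated in the post above, and the function name is just illustrative.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    bus_width_bits: total width of the memory bus in bits (one data pin per bit)
    data_rate_gbps: per-pin data rate in Gbit/s (14 or 16 for the GDDR6 discussed here)
    """
    return bus_width_bits * data_rate_gbps / 8  # 8 bits per byte


# Assumed bus widths: 256-bit for PS5, 320-bit for Series X.
for name, bus_bits in (("PS5", 256), ("Series X", 320)):
    shipped = peak_bandwidth_gbs(bus_bits, 14)
    hypothetical = peak_bandwidth_gbs(bus_bits, 16)
    print(f"{name}: {shipped:.0f} GB/s at 14 Gbps, {hypothetical:.0f} GB/s at 16 Gbps")
```

With those assumptions the 16 Gbps case gives the 512 GB/s and 640 GB/s mentioned above, and the 14 Gbps case gives 448 GB/s and 560 GB/s.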

Restricting the CPU to 192-bit accesses to the fast RAM pool is not a cost decision. If this has a performance impact, as is being speculated, why do it?

PlayStation 5 System Architecture Deep Dive |OT| Secret Agent Cerny

The CPU and GPU share the same bus to the same pool of RAM on both the Series X and PS5. There's no way around that. Only when the CPU is doing literally nothing can the GPU utilize the full bandwidth on either one (except it won't have any work to do because the CPU is what queues up work, so...

www.resetera.com

Here ;) This has a bandwidth impact because it helps keep the cost lower: to have the full 560 GB/s across all of the memory on a 320-bit bus, MS would need 20 GB of GDDR6 RAM... And 20 GB of RAM is more expensive than 16 GB of RAM...
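To make the 16 GB vs. 20 GB point concrete, here is a minimal sketch of how a mixed set of chips ends up as a fast and a slow pool. It assumes the publicly reported Series X layout of ten GDDR6 chips (six 2 GB and four 1 GB), each on its own 32-bit slice of the bus at 14 Gbps; the `pools` helper is hypothetical and only models capacity and peak bandwidth, not how the memory controller actually interleaves accesses.

```python
CHANNEL_BITS = 32      # assumed: each GDDR6 chip sits on a 32-bit slice of the bus
DATA_RATE_GBPS = 14    # assumed per-pin data rate


def pools(chip_sizes_gb):
    """Split a set of chips into address pools, widest (fastest) first.

    Returns a list of (capacity_gb, bus_width_bits, peak_bandwidth_gbs).
    A pool can only be striped across the chips that still have capacity
    left at that depth, which is what narrows the second pool.
    """
    result = []
    covered = 0  # GB per chip already assigned to earlier pools
    for level in sorted(set(chip_sizes_gb)):
        chips = [size for size in chip_sizes_gb if size >= level]
        capacity = (level - covered) * len(chips)
        bus_bits = len(chips) * CHANNEL_BITS
        result.append((capacity, bus_bits, bus_bits * DATA_RATE_GBPS / 8))
        covered = level
    return result


series_x = pools([2] * 6 + [1] * 4)   # six 2 GB chips + four 1 GB chips = 16 GB
uniform_20gb = pools([2] * 10)        # ten 2 GB chips = 20 GB

print(series_x)      # [(10, 320, 560.0), (6, 192, 336.0)]
print(uniform_20gb)  # [(20, 320, 560.0)]
```

Under these assumptions the only way to get 560 GB/s over the whole address space on this bus is ten equal chips, which means 20 GB with 2 GB parts.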

It's still a cost decision.

They are trying to make things easier on the devs by putting stuff that doesn't need as much bandwidth into the slower parts of the RAM.

Still, this comes about from them trying to save money.

I've seen it.

This speculation of an actual negative performance impact based on a reduction in the peak memory access capability, like the earlier one regarding where the system reservation resides, doesn't fit the known facts.

This same speculation doesn't fit with a choice that we know the system architects made, restricting CPU accesses to fast RAM to 192-bit, that was not dictated by cost. There was an option to allow the CPU to access the full bus when accessing this memory and this option was not chosen. If this results in a real negative performance impact, why make this choice?

The CPU accesses things that are in the fast RAM at the same rate as things in the slow RAM. This is a deliberate choice not to use the full bus for CPU accesses even when it is available, and it makes no sense if it has a negative impact on performance.

Believe what you want; I believe the devs. There is an impact. This is not the first GPU to do this: some Nvidia GPUs did it, and it had an impact...

Nvidia CEO addresses GTX 970 controversy

Jen-Hsun Huang: "We won't let this happen again. We'll do a better job next time."

www.pcgamer.com

EDIT: The difference is that this is a console, so devs can precisely target the 10 GB of GPU memory, and Microsoft was honest and told the truth, unlike Nvidia.

The point is there would be no need for any of that if they had just gone with another setup, but that costs more money.

It's the same with Sony and how low their bandwidth is.

Having slower RAM is going to have a negative impact on performance (or at least take some more work), but they try their best to avoid it.

I'm not believing anything. I'm looking for an explanation for this inconsistency. I choose not to be spoon-fed information and take it as truth without engaging my critical thinking skills.

The 970 was a mess because the slow segment was running at 1/7th speed, wasn't it? Whereas XSX won't be anywhere near that.

Edit:

GeForce GTX 970: Correcting The Specs & Exploring Memory Allocation

www.anandtech.com

Fast Segment (3.5GB): 196GB/sec

Slow Segment (512MB): 28GB/sec
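A quick sanity check on the "1/7th speed" comparison, as a sketch only: it assumes the GTX 970's 224 GB/s bus (256-bit at 7 Gbps) is split into eight equal 32-bit channels with seven serving the fast segment, and it uses the 560 GB/s and 336 GB/s peak figures for the Series X pools. The helper name is made up for illustration.

```python
def segment_bandwidths(total_gbs: float, channels: int, fast_channels: int):
    """Peak bandwidth of the fast and slow segments when a bus is split by channel."""
    per_channel = total_gbs / channels
    return per_channel * fast_channels, per_channel * (channels - fast_channels)


# GTX 970: assumed 8 channels, 7 of them behind the 3.5GB segment.
fast_970, slow_970 = segment_bandwidths(224, channels=8, fast_channels=7)
ratio_970 = slow_970 / fast_970   # slow segment relative to fast segment

# Series X: slow pool relative to fast pool, from the peak figures.
ratio_xsx = 336 / 560

print(f"GTX 970: {fast_970:.0f} vs {slow_970:.0f} GB/s, ratio {ratio_970:.2f}")
print(f"Series X: 560 vs 336 GB/s, ratio {ratio_xsx:.2f}")
```

So the 970's slow segment has one seventh of the fast segment's peak, while the Series X slow pool keeps 60% of it.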

There's no need for it in this case, but they did it anyway.

I know, and I talked about it a few days ago in another thread; you can verify my post history. If you address the slower memory for half a second and the faster memory for half a second, the bandwidth will be 448 GB/s...

This is the same address space, there is no secret or magic.
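The half-and-half figure is just a time-weighted average of the two peak rates. A minimal sketch, assuming the bus serves only one pool at any instant and always runs at that pool's peak (560 GB/s fast, 336 GB/s slow); real traffic will not be this tidy, and the function name is only illustrative.

```python
FAST_GBS = 560.0   # peak when the 10 GB pool is being accessed
SLOW_GBS = 336.0   # peak when the 6 GB pool is being accessed


def blended_bandwidth_gbs(slow_time_fraction: float) -> float:
    """Average peak bandwidth when a given fraction of bus time goes to the slow pool."""
    if not 0.0 <= slow_time_fraction <= 1.0:
        raise ValueError("slow_time_fraction must be between 0 and 1")
    return slow_time_fraction * SLOW_GBS + (1.0 - slow_time_fraction) * FAST_GBS


print(blended_bandwidth_gbs(0.5))   # 448.0, the half-and-half case
print(blended_bandwidth_gbs(0.0))   # 560.0, slow pool never touched
print(blended_bandwidth_gbs(1.0))   # 336.0, only the slow pool touched
```

The interesting unknown is the fraction itself: the less bus time the slow pool takes, the closer the average stays to 560 GB/s.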

Beyond that, it was also running on a system where it was much more difficult to direct specific data to specific portions of memory. There was no "split pool" there.

At a cycle level, this is almost certainly not what happens, given how much bigger a bandwidth consumer the GPU is. And how about those cycles where load doesn't actually meet the bandwidth capability? Those cycles where 192-bit is enough. I'm not sold on the actual impact of this on peak bandwidth (a measurement of capacity to do work over time), because we don't understand well enough how much access is going to be to fast vs. slow memory. I'm even less sold on the actual impact on each consuming device's access to bandwidth as needed, because in any given second you probably won't hit peak bandwidth. It's a question of theoretical vs. actual impact, and in my mind there are too many variables to apply the former to the latter.

The CPU getting variable bandwidth would have increased complexity; it certainly isn't a cost-free decision.
