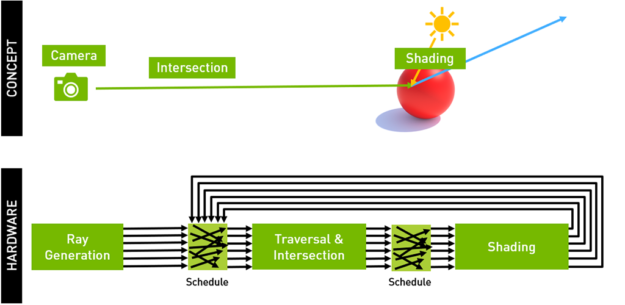

NVIDIA announced the RTX line of cards today, showcasing some amazing tech that will become industry standard over the coming years. From their presentation, this one specific moment stood out the most:

Aside from the above images, the reels that they showed for SotTR, Metro (!!), and BFV were pretty astounding as well and don't feel like current-gen games, even though they're all current-gen games, thanks to this new tech.

Given the drastic changes in lighting, shadows, and reflections, and the damn-near-lifelike representation of these 3D objects (including light refraction through the various glass shapes), can we consider this to be the first step into "next-gen"? (Next-gen being defined here as a full-on generational leap in visuals.)

Also, can we expect the "next-gen" consoles to have a similar architecture standardized within their custom GPUs (supposedly a new AMD architecture, according to the rumor mills)?

(images - NVIDIA)
