There's a driver bug with RDNA3 that impacts VR performance. It's a software problem as running VR titles under Linux shows substantially better performance.

I suppose an RDNA2 card would be fine, as they're not impacted.

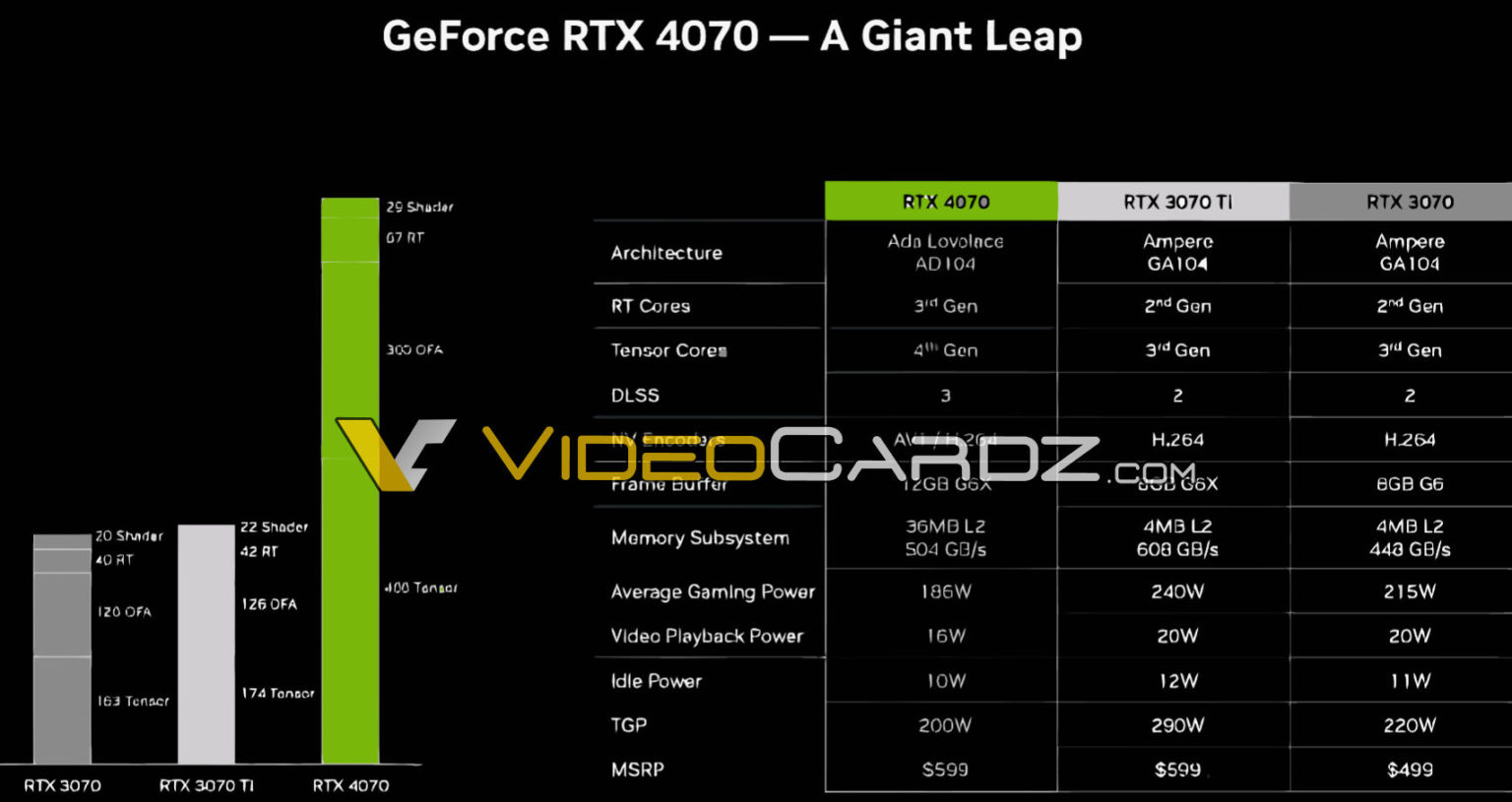

So is this basically going to perform like the $700 3080 from 2020?

Waiting 2.5 years got you an extra 2GB of VRAM and saved you $100...

Probably worse given the choked-off VRAM bandwidth, judging from the 4070 Ti. (Almost as if the extra cache doesn't offset that...)

Well, not every game supports DLSS and/or FSR, and not only that, but their quality can vary by game. In some games it looks better than native, and other times worse, with issues like blurriness, flickering and such. I'm sure it also relies on the CPU more as well. I would say Quality mode is the only one really worth using for image quality and performance. Balanced can be okay in certain situations but is not always worth it. The performance modes just look... ugh.

Those upscaling methods won't be a standard until nearly every game from now on supports both!

But you were talking about RT games? IIRC the only one that has RT without a reconstruction option (even if some of them are AMD sponsored and are FSR2 only) is Elden Ring? Which has its own problems, even with a 4090.

I really hope Nvidia sees the writing on the wall and with the 5000 series they just focus on efficiency and bringing cost down. Even if next gen isn't a massive leap in performance, I think it would be much more successful if they just focused on achieving around the same performance as the 4000 series at a cheaper price.

I swear, people just refuse to let that 600W rumor go. Lovelace is way more power efficient than RDNA 3, and drastically more power efficient than Ampere (which itself also chose a dumb point on the power curve for its default, and could be moved a good 50-100W down while barely affecting performance).

Both RT and non RT games. COD MW2 2022 has DLSS and FSR but no RT for example. But yeah RT games normally have DLSS and FSR.

I wasn't making a comment on the efficiency of Ampere. I'm saying that next generation, instead of pushing to the limits, I hope they just focus on cutting power and achieving the same performance as the 40 series while keeping prices lower.

From that TPU article linked earlier:

Because they've upped the cache size about 10 times.

Because a smaller bus is cheaper, and if they went with 256-bit they would need to deploy either 16GB or 8GB of VRAM. The 4070 Ti has the same 192-bit bus + 12GB of VRAM.
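For anyone wondering why the bus width pins down the VRAM size, here is a rough sketch of the arithmetic. It assumes one GDDR6/GDDR6X chip per 32-bit channel and only 1GB or 2GB chips (no clamshell layouts); the function name `vram_options_gb` is made up for illustration.

```python
CHIP_BUS_WIDTH_BITS = 32      # assumption: one memory chip per 32-bit channel
CHIP_CAPACITIES_GB = (1, 2)   # assumption: only 1GB and 2GB chips, no clamshell


def vram_options_gb(bus_width_bits: int) -> list[int]:
    """Return the VRAM sizes reachable with one chip per 32-bit channel."""
    if bus_width_bits % CHIP_BUS_WIDTH_BITS != 0:
        raise ValueError("bus width must be a multiple of 32 bits")
    chips = bus_width_bits // CHIP_BUS_WIDTH_BITS
    return [chips * capacity for capacity in CHIP_CAPACITIES_GB]


if __name__ == "__main__":
    for width in (192, 256):
        print(f"{width}-bit bus: {vram_options_gb(width)} GB")
```

Under those assumptions a 192-bit bus lands on 6GB or 12GB, while a 256-bit bus lands on 8GB or 16GB, which matches the choice described above.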

In terms of real world performance, the dramatic cache increase between Ampere and Lovelace GPUs has meant performance saw good improvements beyond just the final memory bandwidth figures, particularly at lower resolutions.

The price:perf ratio based on empirical benchmarks is the true test, don't get too hung up on the paper specs.

The 4070 will also get 21Gbps G6X instead of 14Gbps G6, giving it more external VRAM bandwidth than the 3070 had with its 256-bit bus, in addition to a 10x increase in cache size.
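The bandwidth comparison is easy to check by hand. A minimal sketch, assuming the 4070 uses the same 192-bit bus as the 4070 Ti and that peak bandwidth is simply bus width times per-pin data rate divided by 8; the function name is hypothetical.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width (bits) x per-pin rate (Gbps) / 8."""
    return bus_width_bits * data_rate_gbps / 8


# Figures from the thread; the 192-bit bus for the 4070 is an assumption.
rtx_4070 = memory_bandwidth_gb_s(192, 21)
rtx_3070 = memory_bandwidth_gb_s(256, 14)

print(f"4070: {rtx_4070:.0f} GB/s")  # 504 GB/s
print(f"3070: {rtx_3070:.0f} GB/s")  # 448 GB/s
```

So the narrower bus still comes out ahead on raw bandwidth, before the larger cache is even counted.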

People seem to constantly miss these parts when talking about bus widths.

Thanks, very interesting explanation. I was trying to convince myself that my "recently" purchased laptop with a 3070 was not that bad in comparison to the new 4070 equivalent 😅

Is the specs graph in the OP the most up-to-date rumour? I thought the 4070 was going to be between 200-220 watts.

Should be 200W for the reference model.

Dang I haven't built a computer in yeaarss. Wouldn't even know how to do it now. What websites do people order their parts on? I should upgrade...

pcpartpicker, like above, is a fantastic resource for scoping out prices, compatibility, etc. I typically buy my components on Amazon > Best Buy > Newegg.

Amazon is really the only one I use these days! Used to use Best Buy and Newegg.

There's a Microcenter in Houston that's an hour or so away from my parents' that I will visit on occasion, but the deal has to be really worth it to deal with the drive + toll road + insane traffic at that store, or they have to have EVERYTHING in stock.

PC Part Picker ( https://pcpartpicker.com/ ) is a great resource for building computers. It will track the parts list, check part compatibility, estimate the power usage, and tell you which retailer has the cheapest price.

Sweet, so it is still how it was. Thanks for the resources, time to start this process!

What an age we live in, that a 1600 bucks card looks like a great deal.

Legit how I feel lol... all the cards that have come out after the 4090, esp. the 4080, have made me wonder why I don't just swallow the hard pill and get a 4090.

The 4090 may look like a great deal, but it is also the card most likely to be followed up by a successor with the same performance costing about half of what the 4090 does.

Going to keep rocking my 2060 Super for the foreseeable future.

Yeah, unless Starfield gives my PC a heart attack, sticking to my 6600 XT for now.

Like a 4090ti or you mean the 5000 series?

That amount of VRAM and RT would be pretty nice for using in UE

5000 series

2080 Ti -> 3070

3090 -> 4070 Ti

A 4090 Ti would be a late gen uber expensive halo card like the 3090 Ti was

Basically I need to figure out the value of buying a 4090 now, assuming I'd be set for at least 4-5 years. But I've noticed a lot of people who do buy these top-end cards also end up flipping them for the next top-end ones.

Like a 5070 at $600 for example. Probably won't have 24GBs of VRAM but will still perform similarly.

Aren't the 4070 specs slating it to be ~20% slower than the 4070 Ti, which is already anywhere between a 3080 12GB and a 3090 Ti in performance? The 4070 itself seems far more likely to be a little slower than a 3080 (though not by much).

Going by that, I don't know if I would expect the hypothetical 5070 to match a 4090.

3080s and 3090s are pretty close to each other anyway.

So seeing that the pricing of the cards is based on performance, is there even a chance of the 5000 series getting cheaper again?

For this to be the case, Nvidia would need to get rid of all its stock before releasing the 5000 series, and I don't see that happening at all.

They will be cheaper. AMD is already cutting prices slowly, to the point that even diehard Nvidia users like myself maybe should have got a 7900 XTX instead of a 4070 Ti.

Starting to wonder if it is only the single 8 pin cards that will be 599.

So....will the 4070 be about on par with a 3080 in terms of rasterization performance? Or slightly better?

My guess is it will be around the 3080 or slightly better for most games.

Single 8-pin should be fine? 150W from the 8-pin + 75W from the PCIe connection. That still leaves room for a +10% power limit increase if they want to include it.
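That headroom claim can be checked with a quick sketch. It uses the 150W and 75W figures from the post and assumes the 200W reference figure mentioned earlier in the thread; the function name and the integer-percent parameter are made up for illustration.

```python
PCIE_SLOT_W = 75        # from the post: power available through the PCIe connection
EIGHT_PIN_W = 150       # from the post: power available from one 8-pin connector
REFERENCE_TDP_W = 200   # assumption: reference-model figure quoted earlier in the thread


def power_headroom_w(tdp_w: int, limit_increase_pct: int, eight_pins: int = 1) -> float:
    """Watts left in the connector budget after raising the power limit."""
    budget = PCIE_SLOT_W + eight_pins * EIGHT_PIN_W
    raised_limit = tdp_w * (100 + limit_increase_pct) / 100
    return budget - raised_limit


print(power_headroom_w(REFERENCE_TDP_W, 10))  # 5.0
```

With one 8-pin the budget is 225W, so a 200W card with a +10% limit (220W) still fits, though only just.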

This is a really low power draw card; it's good to see there'll be plenty of dual-fan designs available at launch. It's about time we got some more sensible GPU designs.

NVIDIA GeForce RTX 4070 Founders Edition GPU has been pictured - VideoCardz.com

The third RTX 40 Founders Edition. For the new launch, NVIDIA is indeed making their own design. The RTX 4070 Ti did not get a Founders Edition, in an effort to push more custom designs to the market, but it seems the non-Ti SKU will see a different approach. NVIDIA has sent out a Founders […]

I'm liking this launch more and more; a nice compact 2-slot Founders Edition card at MSRP is going to be a great option in the current market.

Do you think it (the FE) will be hard to get at launch? You seem to have a positive outlook on its value proposition, but will the wider market feel the same?